A generic noninvasive neuromotor interface for human-computer interaction

2024 · Open Access · DOI: https://doi.org/10.1101/2024.02.23.581779

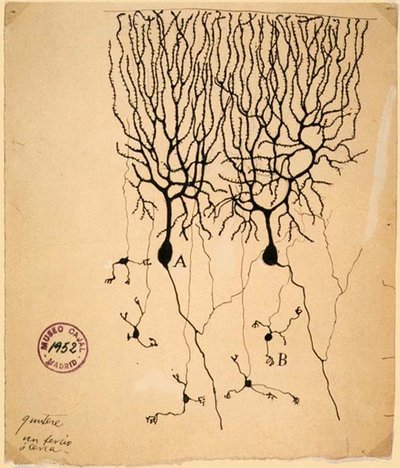

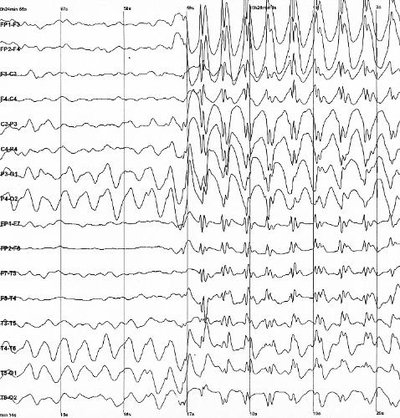

Since the advent of computing, humans have sought computer input technologies that are expressive, intuitive, and universal. While diverse modalities have been developed, including keyboards, mice, and touchscreens, they require interaction with an intermediary device that can be limiting, especially in mobile scenarios. Gesture-based systems utilize cameras or inertial sensors to avoid an intermediary device, but they tend to perform well only for unobscured or overt movements. Brain-computer interfaces (BCIs) have been imagined for decades to solve the interface problem by allowing for input to computers via thought alone. However, high-bandwidth communication has only been demonstrated using invasive BCIs with decoders designed for single individuals, and so cannot scale to the general public. In contrast, neuromotor signals found at the muscle offer access to subtle gestures and force information. Here we describe the development of a noninvasive neuromotor interface that allows for computer input using surface electromyography (sEMG). We developed a highly sensitive and robust hardware platform that is easily donned/doffed to sense myoelectric activity at the wrist and transform intentional neuromotor commands into computer input. We paired this device with an infrastructure optimized to collect training data from thousands of consenting participants, which allowed us to develop generic sEMG neural network decoding models that work across many people without the need for per-person calibration. Test users not included in the training set demonstrate closed-loop median performance of gesture decoding at 0.5 target acquisitions per second in a continuous navigation task, 0.9 gesture detections per second in a discrete gesture task, and handwriting at 17.0 adjusted words per minute. We demonstrate that input bandwidth can be further improved up to 30% by personalizing sEMG decoding models to the individual, anticipating a future in which humans and machines co-adapt to provide seamless translation of human intent. To our knowledge, this is the first high-bandwidth neuromotor interface that directly leverages biosignals with performant out-of-the-box generalization across people.

Related Topics

- Type: preprint
- Language: en
- Landing Page: https://doi.org/10.1101/2024.02.23.581779
- PDF URL: https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf
- OA Status: green
- Cited By: 36
- References: 82
- Related Works: 10
- OpenAlex ID: https://openalex.org/W4392229384

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4392229384 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.1101/2024.02.23.581779 (Digital Object Identifier)
- Title: A generic noninvasive neuromotor interface for human-computer interaction (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2024 (year of publication)
- Publication date: 2024-02-28 (full publication date if available)
- Authors: David Sussillo, Patrick Kaifosh, Thomas R. Reardon (list of authors in order)
- Landing page: https://doi.org/10.1101/2024.02.23.581779 (publisher landing page)
- PDF URL: https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf (direct link to full text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: green (open access status per OpenAlex)
- OA URL: https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf (direct OA link when available)
- Concepts: Computer science, Brain–computer interface, Gesture, Interface (matter), Human–computer interaction, Decoding methods, Task (project management), Modalities, Set (abstract data type), Gesture recognition, Input device, Artificial intelligence, Speech recognition, Computer vision, Computer hardware, Neuroscience, Programming language, Sociology, Parallel computing, Telecommunications, Biology, Social science, Electroencephalography, Management, Bubble, Maximum bubble pressure method, Economics (top concepts, i.e. fields/topics, attached by OpenAlex)
- Cited by: 36 (total citation count in OpenAlex)
- Citations by year (recent): 2025: 19, 2024: 17 (per-year citation counts, last 5 years)
- References (count): 82 (number of works referenced by this work)
- Related works (count): 10 (other works algorithmically related by OpenAlex)
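The fields above summarize a nested JSON record, while the full payload on this page lists the same record with path-style keys such as `topics[0].field.display_name` and `counts_by_year[1].cited_by_count`. The following is a minimal sketch of how such a flattened view could be produced. The function name `flatten` and the rule of joining lists of plain values with commas are assumptions chosen to match how the page appears to render its rows; they are not a documented OpenAlex behavior. The sample `record` is a small hand-built excerpt using values shown on this page.

```python
from typing import Any


def flatten(value: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested dicts/lists into path-style keys (hypothetical helper)."""
    flat: dict[str, Any] = {}
    if isinstance(value, dict):
        # Dict keys extend the path with a dot: "topics[0]" + "id" -> "topics[0].id".
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            flat.update(flatten(child, path))
    elif isinstance(value, list) and any(isinstance(v, (dict, list)) for v in value):
        # Lists that contain nested structures get one indexed path per element.
        for index, child in enumerate(value):
            flat.update(flatten(child, f"{prefix}[{index}]"))
    elif isinstance(value, list):
        # Assumption: lists of plain values are shown as one comma-joined cell.
        flat[prefix] = ", ".join(str(v) for v in value)
    else:
        flat[prefix] = value
    return flat


# Hand-built excerpt of the record, using values that appear on this page.
record = {
    "id": "https://openalex.org/W4392229384",
    "topics": [
        {
            "id": "https://openalex.org/T10429",
            "field": {"display_name": "Neuroscience"},
        }
    ],
    "counts_by_year": [
        {"year": 2025, "cited_by_count": 19},
        {"year": 2024, "cited_by_count": 17},
    ],
    "related_works": [
        "https://openalex.org/W2902873204",
        "https://openalex.org/W2185750513",
    ],
}

flat = flatten(record)
for key, cell in flat.items():
    print(f"| {key} | {cell} |")
```

Each printed line has the same two-column shape as a row of the payload table, which makes it easy to compare a locally flattened record against the page.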

Full payload
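The payload stores the abstract as `abstract_inverted_index` rows: each token maps to the word positions at which it occurs (for example, `Since` at position 1 and `advent` at position 3). A minimal sketch of turning such an index back into running text follows. The function name `rebuild_abstract` is hypothetical, and the sample index is a short excerpt that keeps only positions 0 through 10 of the tokens listed on this page, so it rebuilds only the opening words.

```python
def rebuild_abstract(inverted_index: dict[str, list[int]]) -> str:
    """Rebuild text from a token -> positions mapping (hypothetical helper)."""
    by_position: dict[int, str] = {}
    for token, positions in inverted_index.items():
        for position in positions:
            by_position[position] = token
    # Emit tokens in ascending position order, separated by single spaces.
    return " ".join(by_position[p] for p in sorted(by_position))


# Excerpt of the index: only the first eleven word positions are included.
sample_index = {
    "Abstract": [0],
    "Since": [1],
    "the": [2],
    "advent": [3],
    "of": [4],
    "computing,": [5],
    "humans": [6],
    "have": [7],
    "sought": [8],
    "computer": [9],
    "input": [10],
}

opening = rebuild_abstract(sample_index)
print(opening)
# Abstract Since the advent of computing, humans have sought computer input
```

Punctuation stays attached to its token (`computing,`), so joining on spaces is enough to recover readable text; gaps in the positions are simply skipped by this sketch.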

| id | https://openalex.org/W4392229384 |

|---|---|

| doi | https://doi.org/10.1101/2024.02.23.581779 |

| ids.doi | https://doi.org/10.1101/2024.02.23.581779 |

| ids.openalex | https://openalex.org/W4392229384 |

| fwci | 25.30082025 |

| type | preprint |

| title | A generic noninvasive neuromotor interface for human-computer interaction |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T10429 |

| topics[0].field.id | https://openalex.org/fields/28 |

| topics[0].field.display_name | Neuroscience |

| topics[0].score | 1.0 |

| topics[0].domain.id | https://openalex.org/domains/1 |

| topics[0].domain.display_name | Life Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/2805 |

| topics[0].subfield.display_name | Cognitive Neuroscience |

| topics[0].display_name | EEG and Brain-Computer Interfaces |

| topics[1].id | https://openalex.org/T10784 |

| topics[1].field.id | https://openalex.org/fields/22 |

| topics[1].field.display_name | Engineering |

| topics[1].score | 0.9990000128746033 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/2204 |

| topics[1].subfield.display_name | Biomedical Engineering |

| topics[1].display_name | Muscle activation and electromyography studies |

| topics[2].id | https://openalex.org/T11601 |

| topics[2].field.id | https://openalex.org/fields/28 |

| topics[2].field.display_name | Neuroscience |

| topics[2].score | 0.9990000128746033 |

| topics[2].domain.id | https://openalex.org/domains/1 |

| topics[2].domain.display_name | Life Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/2804 |

| topics[2].subfield.display_name | Cellular and Molecular Neuroscience |

| topics[2].display_name | Neuroscience and Neural Engineering |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C41008148 |

| concepts[0].level | 0 |

| concepts[0].score | 0.7703461647033691 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[0].display_name | Computer science |

| concepts[1].id | https://openalex.org/C173201364 |

| concepts[1].level | 3 |

| concepts[1].score | 0.7492325305938721 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q897410 |

| concepts[1].display_name | Brain–computer interface |

| concepts[2].id | https://openalex.org/C207347870 |

| concepts[2].level | 2 |

| concepts[2].score | 0.6929374933242798 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q371174 |

| concepts[2].display_name | Gesture |

| concepts[3].id | https://openalex.org/C113843644 |

| concepts[3].level | 4 |

| concepts[3].score | 0.6135090589523315 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q901882 |

| concepts[3].display_name | Interface (matter) |

| concepts[4].id | https://openalex.org/C107457646 |

| concepts[4].level | 1 |

| concepts[4].score | 0.6005441546440125 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q207434 |

| concepts[4].display_name | Human–computer interaction |

| concepts[5].id | https://openalex.org/C57273362 |

| concepts[5].level | 2 |

| concepts[5].score | 0.5347159504890442 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q576722 |

| concepts[5].display_name | Decoding methods |

| concepts[6].id | https://openalex.org/C2780451532 |

| concepts[6].level | 2 |

| concepts[6].score | 0.5137001276016235 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q759676 |

| concepts[6].display_name | Task (project management) |

| concepts[7].id | https://openalex.org/C2779903281 |

| concepts[7].level | 2 |

| concepts[7].score | 0.5104433298110962 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q6888026 |

| concepts[7].display_name | Modalities |

| concepts[8].id | https://openalex.org/C177264268 |

| concepts[8].level | 2 |

| concepts[8].score | 0.4616054594516754 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q1514741 |

| concepts[8].display_name | Set (abstract data type) |

| concepts[9].id | https://openalex.org/C159437735 |

| concepts[9].level | 3 |

| concepts[9].score | 0.4507550597190857 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q1519524 |

| concepts[9].display_name | Gesture recognition |

| concepts[10].id | https://openalex.org/C121449826 |

| concepts[10].level | 2 |

| concepts[10].score | 0.4320390522480011 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q864114 |

| concepts[10].display_name | Input device |

| concepts[11].id | https://openalex.org/C154945302 |

| concepts[11].level | 1 |

| concepts[11].score | 0.3856715261936188 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[11].display_name | Artificial intelligence |

| concepts[12].id | https://openalex.org/C28490314 |

| concepts[12].level | 1 |

| concepts[12].score | 0.36153644323349 |

| concepts[12].wikidata | https://www.wikidata.org/wiki/Q189436 |

| concepts[12].display_name | Speech recognition |

| concepts[13].id | https://openalex.org/C31972630 |

| concepts[13].level | 1 |

| concepts[13].score | 0.3521915674209595 |

| concepts[13].wikidata | https://www.wikidata.org/wiki/Q844240 |

| concepts[13].display_name | Computer vision |

| concepts[14].id | https://openalex.org/C9390403 |

| concepts[14].level | 1 |

| concepts[14].score | 0.20468711853027344 |

| concepts[14].wikidata | https://www.wikidata.org/wiki/Q3966 |

| concepts[14].display_name | Computer hardware |

| concepts[15].id | https://openalex.org/C169760540 |

| concepts[15].level | 1 |

| concepts[15].score | 0.08966484665870667 |

| concepts[15].wikidata | https://www.wikidata.org/wiki/Q207011 |

| concepts[15].display_name | Neuroscience |

| concepts[16].id | https://openalex.org/C199360897 |

| concepts[16].level | 1 |

| concepts[16].score | 0.0 |

| concepts[16].wikidata | https://www.wikidata.org/wiki/Q9143 |

| concepts[16].display_name | Programming language |

| concepts[17].id | https://openalex.org/C144024400 |

| concepts[17].level | 0 |

| concepts[17].score | 0.0 |

| concepts[17].wikidata | https://www.wikidata.org/wiki/Q21201 |

| concepts[17].display_name | Sociology |

| concepts[18].id | https://openalex.org/C173608175 |

| concepts[18].level | 1 |

| concepts[18].score | 0.0 |

| concepts[18].wikidata | https://www.wikidata.org/wiki/Q232661 |

| concepts[18].display_name | Parallel computing |

| concepts[19].id | https://openalex.org/C76155785 |

| concepts[19].level | 1 |

| concepts[19].score | 0.0 |

| concepts[19].wikidata | https://www.wikidata.org/wiki/Q418 |

| concepts[19].display_name | Telecommunications |

| concepts[20].id | https://openalex.org/C86803240 |

| concepts[20].level | 0 |

| concepts[20].score | 0.0 |

| concepts[20].wikidata | https://www.wikidata.org/wiki/Q420 |

| concepts[20].display_name | Biology |

| concepts[21].id | https://openalex.org/C36289849 |

| concepts[21].level | 1 |

| concepts[21].score | 0.0 |

| concepts[21].wikidata | https://www.wikidata.org/wiki/Q34749 |

| concepts[21].display_name | Social science |

| concepts[22].id | https://openalex.org/C522805319 |

| concepts[22].level | 2 |

| concepts[22].score | 0.0 |

| concepts[22].wikidata | https://www.wikidata.org/wiki/Q179965 |

| concepts[22].display_name | Electroencephalography |

| concepts[23].id | https://openalex.org/C187736073 |

| concepts[23].level | 1 |

| concepts[23].score | 0.0 |

| concepts[23].wikidata | https://www.wikidata.org/wiki/Q2920921 |

| concepts[23].display_name | Management |

| concepts[24].id | https://openalex.org/C157915830 |

| concepts[24].level | 2 |

| concepts[24].score | 0.0 |

| concepts[24].wikidata | https://www.wikidata.org/wiki/Q2928001 |

| concepts[24].display_name | Bubble |

| concepts[25].id | https://openalex.org/C129307140 |

| concepts[25].level | 3 |

| concepts[25].score | 0.0 |

| concepts[25].wikidata | https://www.wikidata.org/wiki/Q6795880 |

| concepts[25].display_name | Maximum bubble pressure method |

| concepts[26].id | https://openalex.org/C162324750 |

| concepts[26].level | 0 |

| concepts[26].score | 0.0 |

| concepts[26].wikidata | https://www.wikidata.org/wiki/Q8134 |

| concepts[26].display_name | Economics |

| keywords[0].id | https://openalex.org/keywords/computer-science |

| keywords[0].score | 0.7703461647033691 |

| keywords[0].display_name | Computer science |

| keywords[1].id | https://openalex.org/keywords/brain–computer-interface |

| keywords[1].score | 0.7492325305938721 |

| keywords[1].display_name | Brain–computer interface |

| keywords[2].id | https://openalex.org/keywords/gesture |

| keywords[2].score | 0.6929374933242798 |

| keywords[2].display_name | Gesture |

| keywords[3].id | https://openalex.org/keywords/interface |

| keywords[3].score | 0.6135090589523315 |

| keywords[3].display_name | Interface (matter) |

| keywords[4].id | https://openalex.org/keywords/human–computer-interaction |

| keywords[4].score | 0.6005441546440125 |

| keywords[4].display_name | Human–computer interaction |

| keywords[5].id | https://openalex.org/keywords/decoding-methods |

| keywords[5].score | 0.5347159504890442 |

| keywords[5].display_name | Decoding methods |

| keywords[6].id | https://openalex.org/keywords/task |

| keywords[6].score | 0.5137001276016235 |

| keywords[6].display_name | Task (project management) |

| keywords[7].id | https://openalex.org/keywords/modalities |

| keywords[7].score | 0.5104433298110962 |

| keywords[7].display_name | Modalities |

| keywords[8].id | https://openalex.org/keywords/set |

| keywords[8].score | 0.4616054594516754 |

| keywords[8].display_name | Set (abstract data type) |

| keywords[9].id | https://openalex.org/keywords/gesture-recognition |

| keywords[9].score | 0.4507550597190857 |

| keywords[9].display_name | Gesture recognition |

| keywords[10].id | https://openalex.org/keywords/input-device |

| keywords[10].score | 0.4320390522480011 |

| keywords[10].display_name | Input device |

| keywords[11].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[11].score | 0.3856715261936188 |

| keywords[11].display_name | Artificial intelligence |

| keywords[12].id | https://openalex.org/keywords/speech-recognition |

| keywords[12].score | 0.36153644323349 |

| keywords[12].display_name | Speech recognition |

| keywords[13].id | https://openalex.org/keywords/computer-vision |

| keywords[13].score | 0.3521915674209595 |

| keywords[13].display_name | Computer vision |

| keywords[14].id | https://openalex.org/keywords/computer-hardware |

| keywords[14].score | 0.20468711853027344 |

| keywords[14].display_name | Computer hardware |

| keywords[15].id | https://openalex.org/keywords/neuroscience |

| keywords[15].score | 0.08966484665870667 |

| keywords[15].display_name | Neuroscience |

| language | en |

| locations[0].id | doi:10.1101/2024.02.23.581779 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306402567 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | False |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | bioRxiv (Cold Spring Harbor Laboratory) |

| locations[0].source.host_organization | https://openalex.org/I2750212522 |

| locations[0].source.host_organization_name | Cold Spring Harbor Laboratory |

| locations[0].source.host_organization_lineage | https://openalex.org/I2750212522 |

| locations[0].license | cc-by-nc-nd |

| locations[0].pdf_url | https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf |

| locations[0].version | acceptedVersion |

| locations[0].raw_type | posted-content |

| locations[0].license_id | https://openalex.org/licenses/cc-by-nc-nd |

| locations[0].is_accepted | True |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | https://doi.org/10.1101/2024.02.23.581779 |

| locations[1].id | pmh:oai:repository.cshl.edu:41490 |

| locations[1].is_oa | False |

| locations[1].source.id | https://openalex.org/S4306402288 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | False |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | Cold Spring Harbor Laboratory Institutional Repository (Cold Spring Harbor Laboratory) |

| locations[1].source.host_organization | https://openalex.org/I2750212522 |

| locations[1].source.host_organization_name | Cold Spring Harbor Laboratory |

| locations[1].source.host_organization_lineage | https://openalex.org/I2750212522 |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | acceptedVersion |

| locations[1].raw_type | Paper |

| locations[1].license_id | |

| locations[1].is_accepted | True |

| locations[1].is_published | False |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | |

| indexed_in | crossref |

| authorships[0].author.id | https://openalex.org/A5054372964 |

| authorships[0].author.orcid | https://orcid.org/0000-0003-1620-1264 |

| authorships[0].author.display_name | David Sussillo |

| authorships[0].affiliations[0].raw_affiliation_string | Reality Labs |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | David Sussillo |

| authorships[0].is_corresponding | False |

| authorships[0].raw_affiliation_strings | Reality Labs |

| authorships[1].author.id | https://openalex.org/A5089125251 |

| authorships[1].author.orcid | https://orcid.org/0000-0002-1470-5701 |

| authorships[1].author.display_name | Patrick Kaifosh |

| authorships[1].affiliations[0].raw_affiliation_string | Reality Labs |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Patrick Kaifosh |

| authorships[1].is_corresponding | True |

| authorships[1].raw_affiliation_strings | Reality Labs |

| authorships[2].author.id | https://openalex.org/A5054982406 |

| authorships[2].author.orcid | |

| authorships[2].author.display_name | Thomas R. Reardon |

| authorships[2].affiliations[0].raw_affiliation_string | Reality Labs |

| authorships[2].author_position | last |

| authorships[2].raw_author_name | Thomas Reardon |

| authorships[2].is_corresponding | True |

| authorships[2].raw_affiliation_strings | Reality Labs |

| has_content.pdf | True |

| has_content.grobid_xml | True |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2024-03-05T00:00:00 |

| display_name | A generic noninvasive neuromotor interface for human-computer interaction |

| has_fulltext | True |

| is_retracted | False |

| updated_date | 2025-11-06T03:46:38.306776 |

| primary_topic.id | https://openalex.org/T10429 |

| primary_topic.field.id | https://openalex.org/fields/28 |

| primary_topic.field.display_name | Neuroscience |

| primary_topic.score | 1.0 |

| primary_topic.domain.id | https://openalex.org/domains/1 |

| primary_topic.domain.display_name | Life Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/2805 |

| primary_topic.subfield.display_name | Cognitive Neuroscience |

| primary_topic.display_name | EEG and Brain-Computer Interfaces |

| related_works | https://openalex.org/W2902873204, https://openalex.org/W2185750513, https://openalex.org/W2010878661, https://openalex.org/W3147379364, https://openalex.org/W2026258298, https://openalex.org/W3204639664, https://openalex.org/W2970836791, https://openalex.org/W2989699735, https://openalex.org/W4211075866, https://openalex.org/W2611498864 |

| cited_by_count | 36 |

| counts_by_year[0].year | 2025 |

| counts_by_year[0].cited_by_count | 19 |

| counts_by_year[1].year | 2024 |

| counts_by_year[1].cited_by_count | 17 |

| locations_count | 2 |

| best_oa_location.id | doi:10.1101/2024.02.23.581779 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306402567 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | False |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | bioRxiv (Cold Spring Harbor Laboratory) |

| best_oa_location.source.host_organization | https://openalex.org/I2750212522 |

| best_oa_location.source.host_organization_name | Cold Spring Harbor Laboratory |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I2750212522 |

| best_oa_location.license | cc-by-nc-nd |

| best_oa_location.pdf_url | https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf |

| best_oa_location.version | acceptedVersion |

| best_oa_location.raw_type | posted-content |

| best_oa_location.license_id | https://openalex.org/licenses/cc-by-nc-nd |

| best_oa_location.is_accepted | True |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | https://doi.org/10.1101/2024.02.23.581779 |

| primary_location.id | doi:10.1101/2024.02.23.581779 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306402567 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | False |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | bioRxiv (Cold Spring Harbor Laboratory) |

| primary_location.source.host_organization | https://openalex.org/I2750212522 |

| primary_location.source.host_organization_name | Cold Spring Harbor Laboratory |

| primary_location.source.host_organization_lineage | https://openalex.org/I2750212522 |

| primary_location.license | cc-by-nc-nd |

| primary_location.pdf_url | https://www.biorxiv.org/content/biorxiv/early/2024/02/28/2024.02.23.581779.full.pdf |

| primary_location.version | acceptedVersion |

| primary_location.raw_type | posted-content |

| primary_location.license_id | https://openalex.org/licenses/cc-by-nc-nd |

| primary_location.is_accepted | True |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | https://doi.org/10.1101/2024.02.23.581779 |

| publication_date | 2024-02-28 |

| publication_year | 2024 |

| referenced_works | https://openalex.org/W2900802277, https://openalex.org/W6931863653, https://openalex.org/W2096597330, https://openalex.org/W2339882287, https://openalex.org/W6776048684, https://openalex.org/W2198016226, https://openalex.org/W2132855977, https://openalex.org/W4281772850, https://openalex.org/W3107218417, https://openalex.org/W2592815340, https://openalex.org/W2784349096, https://openalex.org/W2000359198, https://openalex.org/W3082472058, https://openalex.org/W2144910141, https://openalex.org/W2962879438, https://openalex.org/W4387378260, https://openalex.org/W3016977963, https://openalex.org/W2488164446, https://openalex.org/W2156914236, https://openalex.org/W3021157419, https://openalex.org/W2049633694, https://openalex.org/W3206706278, https://openalex.org/W2126894902, https://openalex.org/W2066327120, https://openalex.org/W4239127880, https://openalex.org/W3209645235, https://openalex.org/W2166422604, https://openalex.org/W2127141656, https://openalex.org/W4243245460, https://openalex.org/W3097777922, https://openalex.org/W2972630480, https://openalex.org/W3163880120, https://openalex.org/W2087704839, https://openalex.org/W2064675550, https://openalex.org/W4308681469, https://openalex.org/W2942395439, https://openalex.org/W2804957865, https://openalex.org/W2771247593, https://openalex.org/W6772383348, https://openalex.org/W2766672259, https://openalex.org/W2538172027, https://openalex.org/W2144299314, https://openalex.org/W2559463885, https://openalex.org/W2794345050, https://openalex.org/W2168443748, https://openalex.org/W4304084051, https://openalex.org/W4386097872, https://openalex.org/W2293829681, https://openalex.org/W3200026530, https://openalex.org/W4220664982, https://openalex.org/W2744312188, https://openalex.org/W2506721812, https://openalex.org/W2980412925, https://openalex.org/W4386100600, https://openalex.org/W2980609230, https://openalex.org/W2062037031, https://openalex.org/W2979051136, https://openalex.org/W2936774411, https://openalex.org/W3156463266, https://openalex.org/W2886903801, https://openalex.org/W4223507406, https://openalex.org/W1507137415, https://openalex.org/W2152528000, https://openalex.org/W2003345831, https://openalex.org/W2032604151, https://openalex.org/W3049774894, https://openalex.org/W1978913152, https://openalex.org/W2538079766, https://openalex.org/W4220873541, https://openalex.org/W3003257820, https://openalex.org/W2402069821, https://openalex.org/W3161343553, https://openalex.org/W4386091044, https://openalex.org/W2991597663, https://openalex.org/W4387793624, https://openalex.org/W2138967075, https://openalex.org/W3163907627, https://openalex.org/W3172942063, https://openalex.org/W2209204668, https://openalex.org/W2887224044, https://openalex.org/W3102455230, https://openalex.org/W3104324110 |

| referenced_works_count | 82 |

| abstract_inverted_index.a | 137, 152, 238, 248, 281 |

| abstract_inverted_index.In | 115 |

| abstract_inverted_index.To | 296 |

| abstract_inverted_index.We | 150, 177, 260 |

| abstract_inverted_index.an | 33, 53, 182 |

| abstract_inverted_index.at | 120, 166, 231, 254 |

| abstract_inverted_index.be | 38, 266 |

| abstract_inverted_index.by | 82, 272 |

| abstract_inverted_index.in | 41, 220, 237, 247, 283 |

| abstract_inverted_index.is | 159, 300 |

| abstract_inverted_index.of | 4, 136, 191, 228, 293 |

| abstract_inverted_index.or | 48, 65 |

| abstract_inverted_index.so | 108 |

| abstract_inverted_index.to | 51, 59, 77, 86, 111, 125, 162, 185, 197, 270, 277, 289 |

| abstract_inverted_index.up | 269 |

| abstract_inverted_index.us | 196 |

| abstract_inverted_index.we | 132 |

| abstract_inverted_index.0.5 | 232 |

| abstract_inverted_index.0.9 | 242 |

| abstract_inverted_index.30% | 271 |

| abstract_inverted_index.and | 16, 27, 107, 128, 154, 169, 252, 286 |

| abstract_inverted_index.are | 13 |

| abstract_inverted_index.but | 56 |

| abstract_inverted_index.can | 37, 265 |

| abstract_inverted_index.for | 63, 75, 84, 104, 143, 213 |

| abstract_inverted_index.has | 94 |

| abstract_inverted_index.not | 218 |

| abstract_inverted_index.our | 297 |

| abstract_inverted_index.per | 235, 245, 258 |

| abstract_inverted_index.set | 223 |

| abstract_inverted_index.the | 2, 79, 112, 121, 134, 167, 211, 221, 278, 301 |

| abstract_inverted_index.via | 88 |

| abstract_inverted_index.17.0 | 255 |

| abstract_inverted_index.BCIs | 100 |

| abstract_inverted_index.Here | 131 |

| abstract_inverted_index.Test | 216 |

| abstract_inverted_index.been | 22, 73, 96 |

| abstract_inverted_index.data | 188 |

| abstract_inverted_index.from | 189 |

| abstract_inverted_index.have | 7, 21, 72 |

| abstract_inverted_index.into | 174 |

| abstract_inverted_index.many | 208 |

| abstract_inverted_index.need | 212 |

| abstract_inverted_index.only | 62, 95 |

| abstract_inverted_index.sEMG | 200, 274 |

| abstract_inverted_index.tend | 58 |

| abstract_inverted_index.that | 12, 36, 141, 158, 205, 262, 306 |

| abstract_inverted_index.they | 29, 57 |

| abstract_inverted_index.this | 179, 299 |

| abstract_inverted_index.well | 61 |

| abstract_inverted_index.with | 32, 101, 181, 310 |

| abstract_inverted_index.work | 206 |

| abstract_inverted_index.Brain | 68 |

| abstract_inverted_index.Since | 1 |

| abstract_inverted_index.While | 18 |

| abstract_inverted_index.avoid | 52 |

| abstract_inverted_index.first | 302 |

| abstract_inverted_index.force | 129 |

| abstract_inverted_index.found | 119 |

| abstract_inverted_index.human | 294 |

| abstract_inverted_index.input | 10, 85, 145, 263 |

| abstract_inverted_index.mice, | 26 |

| abstract_inverted_index.offer | 123 |

| abstract_inverted_index.overt | 66 |

| abstract_inverted_index.scale | 110 |

| abstract_inverted_index.sense | 163 |

| abstract_inverted_index.solve | 78 |

| abstract_inverted_index.task, | 241, 251 |

| abstract_inverted_index.users | 217 |

| abstract_inverted_index.using | 98, 146 |

| abstract_inverted_index.which | 194, 284 |

| abstract_inverted_index.words | 257 |

| abstract_inverted_index.wrist | 168 |

| abstract_inverted_index.(BCIs) | 71 |

| abstract_inverted_index.access | 124 |

| abstract_inverted_index.across | 207, 314 |

| abstract_inverted_index.advent | 3 |

| abstract_inverted_index.allows | 142 |

| abstract_inverted_index.alone. | 90 |

| abstract_inverted_index.cannot | 109 |

| abstract_inverted_index.device | 35, 180 |

| abstract_inverted_index.easily | 160 |

| abstract_inverted_index.future | 282 |

| abstract_inverted_index.humans | 6, 285 |

| abstract_inverted_index.input. | 176 |

| abstract_inverted_index.median | 226 |

| abstract_inverted_index.mobile | 42 |

| abstract_inverted_index.models | 204, 276 |

| abstract_inverted_index.muscle | 122 |

| abstract_inverted_index.neural | 201 |

| abstract_inverted_index.paired | 178 |

| abstract_inverted_index.people | 209 |

| abstract_inverted_index.robust | 155 |

| abstract_inverted_index.second | 236, 246 |

| abstract_inverted_index.single | 105 |

| abstract_inverted_index.sought | 8 |

| abstract_inverted_index.subtle | 126 |

| abstract_inverted_index.target | 233 |

| abstract_inverted_index.(sEMG). | 149 |

| abstract_inverted_index.However | 91 |

| abstract_inverted_index.allowed | 195 |

| abstract_inverted_index.cameras | 47 |

| abstract_inverted_index.collect | 186 |

| abstract_inverted_index.decades | 76 |

| abstract_inverted_index.develop | 198 |

| abstract_inverted_index.device, | 55 |

| abstract_inverted_index.diverse | 19 |

| abstract_inverted_index.further | 267 |

| abstract_inverted_index.general | 113 |

| abstract_inverted_index.generic | 199 |

| abstract_inverted_index.gesture | 229, 243, 250 |

| abstract_inverted_index.intent. | 295 |

| abstract_inverted_index.minute. | 259 |

| abstract_inverted_index.network | 202 |

| abstract_inverted_index.people. | 315 |

| abstract_inverted_index.perform | 60 |

| abstract_inverted_index.problem | 81 |

| abstract_inverted_index.provide | 290 |

| abstract_inverted_index.public. | 114 |

| abstract_inverted_index.require | 30 |

| abstract_inverted_index.sensors | 50 |

| abstract_inverted_index.signals | 118 |

| abstract_inverted_index.surface | 147 |

| abstract_inverted_index.systems | 45 |

| abstract_inverted_index.thought | 89 |

| abstract_inverted_index.utilize | 46 |

| abstract_inverted_index.without | 210 |

| abstract_inverted_index.Abstract | 0 |

| abstract_inverted_index.activity | 165 |

| abstract_inverted_index.adjusted | 256 |

| abstract_inverted_index.allowing | 83 |

| abstract_inverted_index.co-adapt | 288 |

| abstract_inverted_index.commands | 173 |

| abstract_inverted_index.computer | 9, 69, 144, 175 |

| abstract_inverted_index.decoders | 102 |

| abstract_inverted_index.decoding | 203, 230, 275 |

| abstract_inverted_index.describe | 133 |

| abstract_inverted_index.designed | 103 |

| abstract_inverted_index.directly | 307 |

| abstract_inverted_index.discrete | 249 |

| abstract_inverted_index.gestures | 127 |

| abstract_inverted_index.hardware | 156 |

| abstract_inverted_index.imagined | 74 |

| abstract_inverted_index.improved | 268 |

| abstract_inverted_index.included | 219 |

| abstract_inverted_index.inertial | 49 |

| abstract_inverted_index.invasive | 99 |

| abstract_inverted_index.machines | 287 |

| abstract_inverted_index.platform | 157 |

| abstract_inverted_index.seamless | 291 |

| abstract_inverted_index.training | 187, 222 |

| abstract_inverted_index.bandwidth | 264 |

| abstract_inverted_index.computers | 87 |

| abstract_inverted_index.contrast, | 116 |

| abstract_inverted_index.developed | 151 |

| abstract_inverted_index.including | 24 |

| abstract_inverted_index.interface | 80, 140, 305 |

| abstract_inverted_index.knowledge | 298 |

| abstract_inverted_index.leverages | 308 |

| abstract_inverted_index.limiting, | 39 |

| abstract_inverted_index.optimized | 184 |

| abstract_inverted_index.thousands | 190 |

| abstract_inverted_index.transform | 170 |

| abstract_inverted_index.biosignals | 309 |

| abstract_inverted_index.computing, | 5 |

| abstract_inverted_index.consenting | 192 |

| abstract_inverted_index.continuous | 239 |

| abstract_inverted_index.detections | 244 |

| abstract_inverted_index.developed, | 23 |

| abstract_inverted_index.especially | 40 |

| abstract_inverted_index.interfaces | 70 |

| abstract_inverted_index.intuitive, | 15 |

| abstract_inverted_index.keyboards, | 25 |

| abstract_inverted_index.modalities | 20 |

| abstract_inverted_index.movements. | 67 |

| abstract_inverted_index.navigation | 240 |

| abstract_inverted_index.neuromotor | 117, 139, 172, 304 |

| abstract_inverted_index.per-person | 214 |

| abstract_inverted_index.performant | 311 |

| abstract_inverted_index.scenarios. | 43 |

| abstract_inverted_index.universal. | 17 |

| abstract_inverted_index.unobscured | 64 |

| abstract_inverted_index.closed-loop | 225 |

| abstract_inverted_index.demonstrate | 224, 261 |

| abstract_inverted_index.development | 135 |

| abstract_inverted_index.expressive, | 14 |

| abstract_inverted_index.handwriting | 253 |

| abstract_inverted_index.individual, | 279 |

| abstract_inverted_index.intentional | 171 |

| abstract_inverted_index.interaction | 31 |

| abstract_inverted_index.myoelectric | 164 |

| abstract_inverted_index.noninvasive | 138 |

| abstract_inverted_index.performance | 227 |

| abstract_inverted_index.translation | 292 |

| abstract_inverted_index.acquisitions | 234 |

| abstract_inverted_index.anticipating | 280 |

| abstract_inverted_index.calibration. | 215 |

| abstract_inverted_index.demonstrated | 97 |

| abstract_inverted_index.individuals, | 106 |

| abstract_inverted_index.information. | 130 |

| abstract_inverted_index.intermediary | 34, 54 |

| abstract_inverted_index.technologies | 11 |

| abstract_inverted_index.Gesture-based | 44 |

| abstract_inverted_index.communication | 93 |

| abstract_inverted_index.donned/doffed | 161 |

| abstract_inverted_index.participants, | 193 |

| abstract_inverted_index.personalizing | 273 |

| abstract_inverted_index.touchscreens, | 28 |

| abstract_inverted_index.generalization | 313 |

| abstract_inverted_index.high-bandwidth | 92, 303 |

| abstract_inverted_index.infrastructure | 183 |

| abstract_inverted_index.out-of-the-box | 312 |

| abstract_inverted_index.electromyography | 148 |

| abstract_inverted_index.highly-sensitive | 153 |

| cited_by_percentile_year.max | 100 |

| cited_by_percentile_year.min | 99 |

| corresponding_author_ids | https://openalex.org/A5054982406, https://openalex.org/A5089125251 |

| countries_distinct_count | 0 |

| institutions_distinct_count | 3 |

| sustainable_development_goals[0].id | https://metadata.un.org/sdg/9 |

| sustainable_development_goals[0].score | 0.64 |

| sustainable_development_goals[0].display_name | Industry, innovation and infrastructure |

| citation_normalized_percentile.value | 0.99583162 |

| citation_normalized_percentile.is_in_top_1_percent | True |

| citation_normalized_percentile.is_in_top_10_percent | True |
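
The `abstract_inverted_index.*` rows above store the abstract as an inverted index: each row maps one token to the zero-based word positions where it occurs (for example, `humans` at positions 6 and 285). A minimal sketch of how those rows could be turned back into running text follows. The helper names `parse_rows` and `rebuild_text` are hypothetical and not part of any published API, and the parser assumes the `| key | value |` row layout used in this table.

```python
PREFIX = "abstract_inverted_index."  # key prefix used by the rows in this table


def parse_rows(lines):
    """Collect {token: [positions]} from '| key | value |' rows.

    Rows whose key does not start with PREFIX (citation percentiles,
    author ids, and so on) are skipped.
    """
    index = {}
    for line in lines:
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != 2 or not cells[0].startswith(PREFIX):
            continue
        token = cells[0][len(PREFIX):]
        index[token] = [int(p) for p in cells[1].split(",")]
    return index


def rebuild_text(index):
    """Invert {token: [positions]} into text ordered by position."""
    by_position = {}
    for token, positions in index.items():
        for pos in positions:
            by_position[pos] = token
    # Positions missing from the index are simply skipped, so a partial
    # index yields a partial (gapped) reconstruction.
    return " ".join(by_position[pos] for pos in sorted(by_position))


if __name__ == "__main__":
    rows = [
        "| abstract_inverted_index.humans | 6, 285 |",
        "| abstract_inverted_index.sought | 8 |",
        "| abstract_inverted_index.computer | 9, 69, 144, 175 |",
        "| cited_by_percentile_year.max | 100 |",
    ]
    print(rebuild_text(parse_rows(rows)))
```

Because only a slice of the index is listed here, the reconstruction from these rows alone has gaps. Feeding in every `abstract_inverted_index.*` row of the full record would be needed to recover the complete abstract.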