A simple squared-error reformulation for ordinal classification

Related Concepts

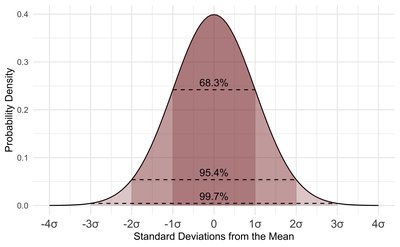

Softmax function

Mean squared error

Computer science

Simple (philosophy)

Context (archaeology)

Ordinal data

Ordinal regression

Artificial intelligence

Class (philosophy)

Pattern recognition (psychology)

Artificial neural network

Algorithm

Machine learning

Statistics

Mathematics

Epistemology

Biology

Philosophy

Paleontology

Christopher Beckham, Christopher Pal · 2016 · Open Access · DOI: https://doi.org/10.48550/arxiv.1612.00775 · OA: W2560046091

In this paper, we explore ordinal classification (in the context of deep neural networks) through a simple modification of the squared-error loss that not only makes it sensitive to class ordering but also allows the model to produce a discrete probability distribution over the classes. Our formulation is based on the use of a softmax hidden layer, which has received relatively little attention in the literature. We empirically evaluate its performance on the Kaggle diabetic retinopathy dataset, an ordinal, high-resolution dataset, and show that it outperforms all of the baselines employed.
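
A minimal sketch of one plausible reading of this idea, not the authors' code: a softmax layer produces a distribution over K classes, a fixed read-out takes its expected class index, and the squared error against the integer label is the loss. It assumes classes are encoded as the integers 0..K-1 with unit spacing; the function names `softmax`, `expected_class`, and `ordinal_squared_error` are hypothetical and chosen for illustration.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_class(probs: Sequence[float]) -> float:
    """Expected class index under the distribution.

    Assumption (hypothetical encoding): class k is represented by the integer k,
    so the read-out is the dot product of probs with [0, 1, ..., K-1].
    """
    return sum(k * p for k, p in enumerate(probs))


def ordinal_squared_error(logits: Sequence[float], label: int) -> float:
    """Squared error between the true ordinal label and the softmax's expected class.

    Because the prediction is a weighted average of class indices, probability
    mass placed on classes far from the label is penalized more heavily than
    mass placed on neighbouring classes.
    """
    probs = softmax(logits)
    return (expected_class(probs) - label) ** 2


if __name__ == "__main__":
    # True label is class 0 out of 5 ordered classes.
    near = ordinal_squared_error([0.0, 10.0, 0.0, 0.0, 0.0], 0)  # confident in class 1
    far = ordinal_squared_error([0.0, 0.0, 0.0, 0.0, 10.0], 0)   # confident in class 4
    print(f"near miss: {near:.3f}, far miss: {far:.3f}")
```

With logits strongly favouring class 1, the loss against true label 0 is roughly 1; with logits favouring class 4 it is roughly 16. Standard cross-entropy would penalize both cases identically, since the probability assigned to class 0 is the same in each, which is the ordering sensitivity the abstract refers to. The softmax output itself remains available as a discrete distribution over the classes.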
