Beyond Appearance: Geometric Cues for Robust Video Instance Segmentation

2025 · Open Access · DOI: https://doi.org/10.48550/arxiv.2507.05948

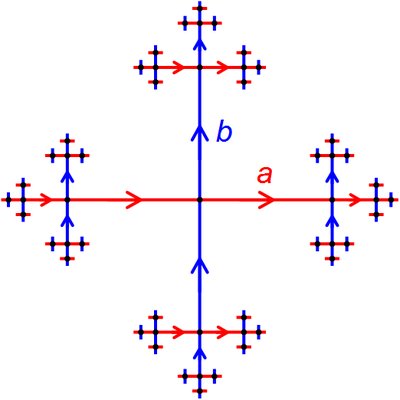

Video Instance Segmentation (VIS) struggles with object occlusions, motion blur, and appearance variations during temporal association. To overcome these limitations, this work introduces geometric awareness to enhance VIS robustness by leveraging monocular depth estimation. We systematically investigate three integration paradigms. The Expanding Depth Channel (EDC) method concatenates the depth map as an additional input channel to the segmentation network; Sharing ViT (SV) uses a single ViT backbone shared between the depth estimation and segmentation branches; Depth Supervision (DS) uses depth prediction as an auxiliary training signal for feature learning. Although DS shows limited effectiveness, benchmark evaluations demonstrate that EDC and SV significantly enhance the robustness of VIS. With a Swin-L backbone, our EDC method achieves 56.2 AP, setting a new state-of-the-art result on the OVIS benchmark. This work establishes depth cues as critical enablers for robust video understanding.
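To make the EDC idea concrete, here is a minimal sketch of appending a depth map to an RGB frame as a fourth input channel. It is not the authors' implementation: the function names `normalize_depth` and `expand_depth_channel`, the min-max normalization, and the toy values are all assumptions made for illustration, and the abstract does not say how the depth map is scaled or how the network's first layer is adapted to the extra channel. Plain Python lists are used to keep the example dependency-free; a real pipeline would more likely use a tensor library.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x 3, RGB values
DepthMap = List[List[float]]     # H x W, relative depth


def normalize_depth(depth: DepthMap) -> DepthMap:
    """Rescale a depth map to [0, 1]; a constant map becomes all zeros.

    Min-max scaling is an assumption of this sketch, not a detail
    taken from the paper.
    """
    flat = [d for row in depth for d in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in depth]
    return [[(d - lo) / span for d in row] for row in depth]


def expand_depth_channel(rgb: Image, depth: DepthMap) -> Image:
    """Append the normalized depth value to each pixel, giving H x W x 4."""
    if len(rgb) != len(depth) or any(
        len(r) != len(d) for r, d in zip(rgb, depth)
    ):
        raise ValueError("RGB frame and depth map must share height and width")
    norm = normalize_depth(depth)
    return [
        [list(pixel) + [z] for pixel, z in zip(rgb_row, depth_row)]
        for rgb_row, depth_row in zip(rgb, norm)
    ]


# Toy 2 x 2 frame with a hypothetical depth map.
frame = [[[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
         [[0.7, 0.8, 0.9], [1.0, 0.0, 0.5]]]
depth = [[2.0, 4.0],
         [6.0, 10.0]]
rgbd = expand_depth_channel(frame, depth)
```

Each pixel of `rgbd` now carries four values, so a segmentation network consuming it would need an input layer that accepts four channels instead of three.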

Related Topics

- Type: preprint

- Language: en

- Landing Page: http://arxiv.org/abs/2507.05948, https://arxiv.org/pdf/2507.05948

- OA Status: green

- OpenAlex ID: https://openalex.org/W4415972390

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4415972390 (canonical identifier for this work in OpenAlex)

- DOI: https://doi.org/10.48550/arxiv.2507.05948 (Digital Object Identifier)

- Title: Beyond Appearance: Geometric Cues for Robust Video Instance Segmentation (work title)

- Type: preprint (OpenAlex work type)

- Language: en (primary language)

- Publication year: 2025 (year of publication)

- Publication date: 2025-07-08 (full publication date if available)

- Authors: Q. Jason Niu, Yikang Zhou, Shihao Chen, Tao Zhang, Shunping Ji (list of authors in order)

- Landing page: https://arxiv.org/abs/2507.05948 (publisher landing page)

- PDF URL: https://arxiv.org/pdf/2507.05948 (direct link to full text PDF)

- Open access: Yes (whether a free full text is available)

- OA status: green (open access status per OpenAlex)

- OA URL: https://arxiv.org/pdf/2507.05948 (direct OA link when available)

- Cited by: 0 (total citation count in OpenAlex)

Full payload

| Field | Value |

|---|---|

| id | https://openalex.org/W4415972390 |

| doi | https://doi.org/10.48550/arxiv.2507.05948 |

| ids.doi | https://doi.org/10.48550/arxiv.2507.05948 |

| ids.openalex | https://openalex.org/W4415972390 |

| fwci | |

| type | preprint |

| title | Beyond Appearance: Geometric Cues for Robust Video Instance Segmentation |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| language | en |

| locations[0].id | pmh:oai:arXiv.org:2507.05948 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400194 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | arXiv (Cornell University) |

| locations[0].source.host_organization | https://openalex.org/I205783295 |

| locations[0].source.host_organization_name | Cornell University |

| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[0].license | |

| locations[0].pdf_url | https://arxiv.org/pdf/2507.05948 |

| locations[0].version | submittedVersion |

| locations[0].raw_type | text |

| locations[0].license_id | |

| locations[0].is_accepted | False |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | http://arxiv.org/abs/2507.05948 |

| locations[1].id | doi:10.48550/arxiv.2507.05948 |

| locations[1].is_oa | True |

| locations[1].source.id | https://openalex.org/S4306400194 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | True |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | arXiv (Cornell University) |

| locations[1].source.host_organization | https://openalex.org/I205783295 |

| locations[1].source.host_organization_name | Cornell University |

| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | |

| locations[1].raw_type | article |

| locations[1].license_id | |

| locations[1].is_accepted | False |

| locations[1].is_published | |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2507.05948 |

| indexed_in | arxiv, datacite |

| authorships[0].author.id | https://openalex.org/A5112450656 |

| authorships[0].author.orcid | |

| authorships[0].author.display_name | Q. Jason Niu |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Niu, Quanzhu |

| authorships[0].is_corresponding | False |

| authorships[1].author.id | https://openalex.org/A5104162474 |

| authorships[1].author.orcid | |

| authorships[1].author.display_name | Yikang Zhou |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Zhou, Yikang |

| authorships[1].is_corresponding | False |

| authorships[2].author.id | https://openalex.org/A5055000437 |

| authorships[2].author.orcid | https://orcid.org/0000-0001-7646-8003 |

| authorships[2].author.display_name | Shihao Chen |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Chen, Shihao |

| authorships[2].is_corresponding | False |

| authorships[3].author.id | https://openalex.org/A5100375717 |

| authorships[3].author.orcid | https://orcid.org/0000-0001-7279-8929 |

| authorships[3].author.display_name | Tao Zhang |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Zhang, Tao |

| authorships[3].is_corresponding | False |

| authorships[4].author.id | https://openalex.org/A5031588692 |

| authorships[4].author.orcid | https://orcid.org/0000-0002-3088-1481 |

| authorships[4].author.display_name | Shunping Ji |

| authorships[4].author_position | last |

| authorships[4].raw_author_name | Ji, Shunping |

| authorships[4].is_corresponding | False |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://arxiv.org/pdf/2507.05948 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Beyond Appearance: Geometric Cues for Robust Video Instance Segmentation |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-07T23:20:04.922697 |

| primary_topic | |

| cited_by_count | 0 |

| locations_count | 2 |

| best_oa_location.id | pmh:oai:arXiv.org:2507.05948 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400194 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | arXiv (Cornell University) |

| best_oa_location.source.host_organization | https://openalex.org/I205783295 |

| best_oa_location.source.host_organization_name | Cornell University |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| best_oa_location.license | |

| best_oa_location.pdf_url | https://arxiv.org/pdf/2507.05948 |

| best_oa_location.version | submittedVersion |

| best_oa_location.raw_type | text |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | http://arxiv.org/abs/2507.05948 |

| primary_location.id | pmh:oai:arXiv.org:2507.05948 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400194 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | arXiv (Cornell University) |

| primary_location.source.host_organization | https://openalex.org/I205783295 |

| primary_location.source.host_organization_name | Cornell University |

| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| primary_location.license | |

| primary_location.pdf_url | https://arxiv.org/pdf/2507.05948 |

| primary_location.version | submittedVersion |

| primary_location.raw_type | text |

| primary_location.license_id | |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | http://arxiv.org/abs/2507.05948 |

| publication_date | 2025-07-08 |

| publication_year | 2025 |

| referenced_works_count | 0 |

| abstract_inverted_index.a | 65, 122 |

| abstract_inverted_index.DS | 93 |

| abstract_inverted_index.SV | 103 |

| abstract_inverted_index.To | 20 |

| abstract_inverted_index.We | 39 |

| abstract_inverted_index.an | 85 |

| abstract_inverted_index.as | 55, 84, 135 |

| abstract_inverted_index.by | 33 |

| abstract_inverted_index.of | 81, 108 |

| abstract_inverted_index.on | 126 |

| abstract_inverted_index.to | 29, 58 |

| abstract_inverted_index.AP, | 119 |

| abstract_inverted_index.EDC | 101, 115 |

| abstract_inverted_index.VIS | 31 |

| abstract_inverted_index.ViT | 62, 67 |

| abstract_inverted_index.and | 14, 73, 102 |

| abstract_inverted_index.for | 89, 138 |

| abstract_inverted_index.map | 54 |

| abstract_inverted_index.new | 123 |

| abstract_inverted_index.our | 114 |

| abstract_inverted_index.the | 52, 106 |

| abstract_inverted_index.use | 80 |

| abstract_inverted_index.(DS) | 78 |

| abstract_inverted_index.(SV) | 63 |

| abstract_inverted_index.56.2 | 118 |

| abstract_inverted_index.OVIS | 127 |

| abstract_inverted_index.This | 129 |

| abstract_inverted_index.VIS. | 109 |

| abstract_inverted_index.When | 110 |

| abstract_inverted_index.cues | 134 |

| abstract_inverted_index.gets | 117 |

| abstract_inverted_index.sets | 121 |

| abstract_inverted_index.that | 100 |

| abstract_inverted_index.this | 24 |

| abstract_inverted_index.with | 6, 111 |

| abstract_inverted_index.work | 25, 130 |

| abstract_inverted_index.(EDC) | 49 |

| abstract_inverted_index.(VIS) | 3 |

| abstract_inverted_index.Depth | 47, 76 |

| abstract_inverted_index.Video | 0 |

| abstract_inverted_index.blur, | 13 |

| abstract_inverted_index.depth | 37, 53, 71, 82, 133 |

| abstract_inverted_index.guide | 88 |

| abstract_inverted_index.input | 56 |

| abstract_inverted_index.makes | 79 |

| abstract_inverted_index.these | 22 |

| abstract_inverted_index.three | 42 |

| abstract_inverted_index.video | 140 |

| abstract_inverted_index.which | 120 |

| abstract_inverted_index.Swin-L | 112 |

| abstract_inverted_index.Though | 92 |

| abstract_inverted_index.during | 17 |

| abstract_inverted_index.method | 50, 116 |

| abstract_inverted_index.motion | 12 |

| abstract_inverted_index.object | 10 |

| abstract_inverted_index.result | 125 |

| abstract_inverted_index.robust | 139 |

| abstract_inverted_index.shared | 69 |

| abstract_inverted_index.Channel | 48 |

| abstract_inverted_index.Sharing | 61 |

| abstract_inverted_index.between | 70 |

| abstract_inverted_index.channel | 57 |

| abstract_inverted_index.designs | 64 |

| abstract_inverted_index.enhance | 30, 105 |

| abstract_inverted_index.feature | 90 |

| abstract_inverted_index.limited | 95 |

| abstract_inverted_index.uniform | 66 |

| abstract_inverted_index.Instance | 1 |

| abstract_inverted_index.critical | 136 |

| abstract_inverted_index.distinct | 43 |

| abstract_inverted_index.enablers | 137 |

| abstract_inverted_index.exhibits | 94 |

| abstract_inverted_index.overcome | 21 |

| abstract_inverted_index.temporal | 18 |

| abstract_inverted_index.training | 87 |

| abstract_inverted_index.Expanding | 46 |

| abstract_inverted_index.auxiliary | 86 |

| abstract_inverted_index.awareness | 28 |

| abstract_inverted_index.backbone, | 68, 113 |

| abstract_inverted_index.benchmark | 97 |

| abstract_inverted_index.branches; | 75 |

| abstract_inverted_index.geometric | 27 |

| abstract_inverted_index.including | 9 |

| abstract_inverted_index.learning. | 91 |

| abstract_inverted_index.monocular | 36 |

| abstract_inverted_index.networks; | 60 |

| abstract_inverted_index.pervasive | 7 |

| abstract_inverted_index.struggles | 5 |

| abstract_inverted_index.appearance | 15 |

| abstract_inverted_index.benchmark. | 128 |

| abstract_inverted_index.challenges | 8 |

| abstract_inverted_index.estimation | 72 |

| abstract_inverted_index.introduces | 26 |

| abstract_inverted_index.leveraging | 35 |

| abstract_inverted_index.paradigms. | 45 |

| abstract_inverted_index.prediction | 83 |

| abstract_inverted_index.robustness | 32, 107 |

| abstract_inverted_index.variations | 16 |

| abstract_inverted_index.Supervision | 77 |

| abstract_inverted_index.demonstrate | 99 |

| abstract_inverted_index.establishes | 132 |

| abstract_inverted_index.estimation. | 38 |

| abstract_inverted_index.evaluations | 98 |

| abstract_inverted_index.integration | 44 |

| abstract_inverted_index.investigate | 41 |

| abstract_inverted_index.occlusions, | 11 |

| abstract_inverted_index.Segmentation | 2 |

| abstract_inverted_index.association. | 19 |

| abstract_inverted_index.concatenates | 51 |

| abstract_inverted_index.conclusively | 131 |

| abstract_inverted_index.limitations, | 23 |

| abstract_inverted_index.segmentation | 59, 74 |

| abstract_inverted_index.fundamentally | 4 |

| abstract_inverted_index.significantly | 104 |

| abstract_inverted_index.strategically | 34 |

| abstract_inverted_index.effectiveness, | 96 |

| abstract_inverted_index.systematically | 40 |

| abstract_inverted_index.understanding. | 141 |

| abstract_inverted_index.state-of-the-art | 124 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 5 |

| citation_normalized_percentile |