Beyond imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs

2018 · Open Access · DOI: https://doi.org/10.48550/arxiv.1812.02788

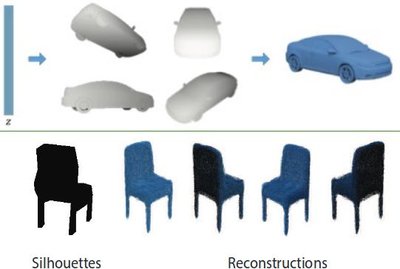

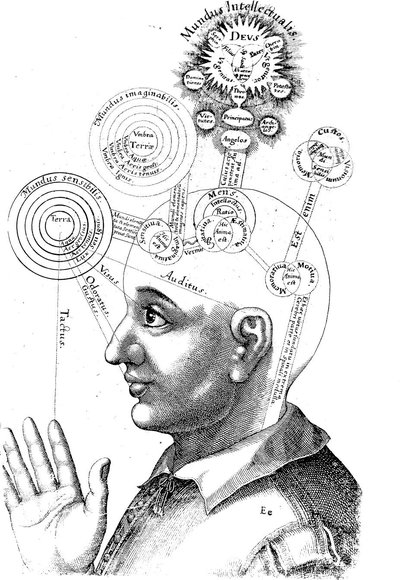

Humans can infer concepts from image pairs and apply those in the physical world in a completely different setting, enabling tasks like IKEA assembly from diagrams. If robots could represent and infer high-level concepts, it would significantly improve their ability to understand our intent and to transfer tasks between different environments. To that end, we introduce a computational framework that replicates aspects of human concept learning. Concepts are represented as programs on a novel computer architecture consisting of a visual perception system, working memory, and action controller. The instruction set of this "cognitive computer" has commands for parsing a visual scene, directing gaze and attention, imagining new objects, manipulating the contents of a visual working memory, and controlling arm movement. Inferring a concept corresponds to inducing a program that can transform the input to the output. Some concepts require the use of imagination and recursion. Previously learned concepts simplify the learning of subsequent more elaborate concepts, and create a hierarchy of abstractions. We demonstrate how a robot can use these abstractions to interpret novel concepts presented to it as schematic images, and then apply those concepts in dramatically different situations. By bringing cognitive science ideas on mental imagery, perceptual symbols, embodied cognition, and deictic mechanisms into the realm of machine learning, our work brings us closer to the goal of building robots that have interpretable representations and commonsense.
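The idea that "inferring a concept corresponds to inducing a program that can transform the input to the output" can be illustrated with a very small sketch. The code below is not the authors' system: the three grid instructions (`move_right`, `move_down`, `flip_horizontal`), the tuple-of-tuples grid encoding, and the brute-force shortest-first search are all assumptions made for illustration, standing in for the much richer instruction set (perception, attention, imagination, arm control) described in the abstract.

```python
from itertools import product

# Hypothetical toy instruction set. Each instruction maps a grid
# (a tuple of equal-length tuples of ints) to a new grid.
def move_right(grid):
    """Shift every row one cell to the right, filling with 0."""
    return tuple((0,) + row[:-1] for row in grid)

def move_down(grid):
    """Shift every column one cell down, filling with 0."""
    width = len(grid[0])
    return ((0,) * width,) + grid[:-1]

def flip_horizontal(grid):
    """Mirror every row left to right."""
    return tuple(row[::-1] for row in grid)

INSTRUCTIONS = {
    "move_right": move_right,
    "move_down": move_down,
    "flip_horizontal": flip_horizontal,
}

def run(program, grid):
    """Execute a program (a sequence of instruction names) on a grid."""
    for name in program:
        grid = INSTRUCTIONS[name](grid)
    return grid

def induce(examples, max_length=3):
    """Return the shortest program consistent with every
    (input, output) pair, or None if none exists up to max_length."""
    for length in range(max_length + 1):
        for program in product(INSTRUCTIONS, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                return program
    return None

if __name__ == "__main__":
    # One schematic input/output pair: the object moves diagonally.
    examples = [(((1, 0, 0), (0, 0, 0), (0, 0, 0)),
                 ((0, 0, 0), (0, 1, 0), (0, 0, 0)))]
    concept = induce(examples)
    print(concept)
    # Apply the induced concept in a different setting (larger grid,
    # different object label) without any further examples.
    print(run(concept, ((0, 0, 0, 0), (0, 2, 0, 0), (0, 0, 0, 0), (0, 0, 0, 0))))
```

Because the induced program refers only to instructions and not to the grid it was learned on, it can be run unchanged on a grid of a different size, which is the sense of transfer the abstract describes. Exhaustive enumeration scales exponentially with program length; the abstract's point that previously learned concepts simplify later ones would correspond here to adding an induced program back into `INSTRUCTIONS` as a single new instruction.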

Related Topics

- Type: preprint
- Language: en
- Landing Page: https://arxiv.org/abs/1812.02788
- OA Status: green
- Related Works: 20
- OpenAlex ID: https://openalex.org/W2952295220

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W2952295220 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.48550/arxiv.1812.02788 (Digital Object Identifier)
- Title: Beyond imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2018 (year of publication)
- Publication date: 2018-12-06 (full publication date if available)
- Authors: Miguel Lázaro-Gredilla, Dianhuan Lin, J. Swaroop Guntupalli, Dileep George (list of authors in order)
- Landing page: https://arxiv.org/abs/1812.02788 (publisher landing page)
- Open access: Yes (whether a free full text is available)
- OA status: green (open access status per OpenAlex)
- OA URL: https://arxiv.org/abs/1812.02788 (direct OA link when available)
- Concepts: Computer science, Human–computer interaction, Robot, Soar, Embodied cognition, Set (abstract data type), Cognitive robotics, Artificial intelligence, Task (project management), Perception, Cognitive architecture, Cognition, Cognitive science, Psychology, Programming language, Economics, Neuroscience, Management (top concepts, i.e. fields/topics, attached by OpenAlex)
- Cited by: 0 (total citation count in OpenAlex)
- Related works (count): 20 (other works algorithmically related by OpenAlex)

Full payload

| id | https://openalex.org/W2952295220 |

|---|---|

| doi | https://doi.org/10.48550/arxiv.1812.02788 |

| ids.doi | https://doi.org/10.48550/arxiv.1812.02788 |

| ids.mag | 2952295220 |

| ids.openalex | https://openalex.org/W2952295220 |

| fwci | |

| type | preprint |

| title | Beyond imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T11714 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.9783999919891357 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1707 |

| topics[0].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[0].display_name | Multimodal Machine Learning Applications |

| topics[1].id | https://openalex.org/T11307 |

| topics[1].field.id | https://openalex.org/fields/17 |

| topics[1].field.display_name | Computer Science |

| topics[1].score | 0.9599999785423279 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/1702 |

| topics[1].subfield.display_name | Artificial Intelligence |

| topics[1].display_name | Domain Adaptation and Few-Shot Learning |

| topics[2].id | https://openalex.org/T10653 |

| topics[2].field.id | https://openalex.org/fields/22 |

| topics[2].field.display_name | Engineering |

| topics[2].score | 0.9567999839782715 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/2207 |

| topics[2].subfield.display_name | Control and Systems Engineering |

| topics[2].display_name | Robot Manipulation and Learning |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C41008148 |

| concepts[0].level | 0 |

| concepts[0].score | 0.7391957640647888 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[0].display_name | Computer science |

| concepts[1].id | https://openalex.org/C107457646 |

| concepts[1].level | 1 |

| concepts[1].score | 0.5757599472999573 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q207434 |

| concepts[1].display_name | Human–computer interaction |

| concepts[2].id | https://openalex.org/C90509273 |

| concepts[2].level | 2 |

| concepts[2].score | 0.5718104839324951 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q11012 |

| concepts[2].display_name | Robot |

| concepts[3].id | https://openalex.org/C17305859 |

| concepts[3].level | 2 |

| concepts[3].score | 0.5343419909477234 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q382944 |

| concepts[3].display_name | Soar |

| concepts[4].id | https://openalex.org/C100609095 |

| concepts[4].level | 2 |

| concepts[4].score | 0.531401515007019 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q1335050 |

| concepts[4].display_name | Embodied cognition |

| concepts[5].id | https://openalex.org/C177264268 |

| concepts[5].level | 2 |

| concepts[5].score | 0.5165073275566101 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q1514741 |

| concepts[5].display_name | Set (abstract data type) |

| concepts[6].id | https://openalex.org/C192327766 |

| concepts[6].level | 3 |

| concepts[6].score | 0.4979119300842285 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q1038799 |

| concepts[6].display_name | Cognitive robotics |

| concepts[7].id | https://openalex.org/C154945302 |

| concepts[7].level | 1 |

| concepts[7].score | 0.493071049451828 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[7].display_name | Artificial intelligence |

| concepts[8].id | https://openalex.org/C2780451532 |

| concepts[8].level | 2 |

| concepts[8].score | 0.45594465732574463 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q759676 |

| concepts[8].display_name | Task (project management) |

| concepts[9].id | https://openalex.org/C26760741 |

| concepts[9].level | 2 |

| concepts[9].score | 0.4319363236427307 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q160402 |

| concepts[9].display_name | Perception |

| concepts[10].id | https://openalex.org/C20854674 |

| concepts[10].level | 3 |

| concepts[10].score | 0.4234405755996704 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q4386060 |

| concepts[10].display_name | Cognitive architecture |

| concepts[11].id | https://openalex.org/C169900460 |

| concepts[11].level | 2 |

| concepts[11].score | 0.39402949810028076 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q2200417 |

| concepts[11].display_name | Cognition |

| concepts[12].id | https://openalex.org/C188147891 |

| concepts[12].level | 1 |

| concepts[12].score | 0.3904590606689453 |

| concepts[12].wikidata | https://www.wikidata.org/wiki/Q147638 |

| concepts[12].display_name | Cognitive science |

| concepts[13].id | https://openalex.org/C15744967 |

| concepts[13].level | 0 |

| concepts[13].score | 0.11578688025474548 |

| concepts[13].wikidata | https://www.wikidata.org/wiki/Q9418 |

| concepts[13].display_name | Psychology |

| concepts[14].id | https://openalex.org/C199360897 |

| concepts[14].level | 1 |

| concepts[14].score | 0.11576735973358154 |

| concepts[14].wikidata | https://www.wikidata.org/wiki/Q9143 |

| concepts[14].display_name | Programming language |

| concepts[15].id | https://openalex.org/C162324750 |

| concepts[15].level | 0 |

| concepts[15].score | 0.0 |

| concepts[15].wikidata | https://www.wikidata.org/wiki/Q8134 |

| concepts[15].display_name | Economics |

| concepts[16].id | https://openalex.org/C169760540 |

| concepts[16].level | 1 |

| concepts[16].score | 0.0 |

| concepts[16].wikidata | https://www.wikidata.org/wiki/Q207011 |

| concepts[16].display_name | Neuroscience |

| concepts[17].id | https://openalex.org/C187736073 |

| concepts[17].level | 1 |

| concepts[17].score | 0.0 |

| concepts[17].wikidata | https://www.wikidata.org/wiki/Q2920921 |

| concepts[17].display_name | Management |

| keywords[0].id | https://openalex.org/keywords/computer-science |

| keywords[0].score | 0.7391957640647888 |

| keywords[0].display_name | Computer science |

| keywords[1].id | https://openalex.org/keywords/human–computer-interaction |

| keywords[1].score | 0.5757599472999573 |

| keywords[1].display_name | Human–computer interaction |

| keywords[2].id | https://openalex.org/keywords/robot |

| keywords[2].score | 0.5718104839324951 |

| keywords[2].display_name | Robot |

| keywords[3].id | https://openalex.org/keywords/soar |

| keywords[3].score | 0.5343419909477234 |

| keywords[3].display_name | Soar |

| keywords[4].id | https://openalex.org/keywords/embodied-cognition |

| keywords[4].score | 0.531401515007019 |

| keywords[4].display_name | Embodied cognition |

| keywords[5].id | https://openalex.org/keywords/set |

| keywords[5].score | 0.5165073275566101 |

| keywords[5].display_name | Set (abstract data type) |

| keywords[6].id | https://openalex.org/keywords/cognitive-robotics |

| keywords[6].score | 0.4979119300842285 |

| keywords[6].display_name | Cognitive robotics |

| keywords[7].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[7].score | 0.493071049451828 |

| keywords[7].display_name | Artificial intelligence |

| keywords[8].id | https://openalex.org/keywords/task |

| keywords[8].score | 0.45594465732574463 |

| keywords[8].display_name | Task (project management) |

| keywords[9].id | https://openalex.org/keywords/perception |

| keywords[9].score | 0.4319363236427307 |

| keywords[9].display_name | Perception |

| keywords[10].id | https://openalex.org/keywords/cognitive-architecture |

| keywords[10].score | 0.4234405755996704 |

| keywords[10].display_name | Cognitive architecture |

| keywords[11].id | https://openalex.org/keywords/cognition |

| keywords[11].score | 0.39402949810028076 |

| keywords[11].display_name | Cognition |

| keywords[12].id | https://openalex.org/keywords/cognitive-science |

| keywords[12].score | 0.3904590606689453 |

| keywords[12].display_name | Cognitive science |

| keywords[13].id | https://openalex.org/keywords/psychology |

| keywords[13].score | 0.11578688025474548 |

| keywords[13].display_name | Psychology |

| keywords[14].id | https://openalex.org/keywords/programming-language |

| keywords[14].score | 0.11576735973358154 |

| keywords[14].display_name | Programming language |

| language | en |

| locations[0].id | mag:2952295220 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400194 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | arXiv (Cornell University) |

| locations[0].source.host_organization | https://openalex.org/I205783295 |

| locations[0].source.host_organization_name | Cornell University |

| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[0].license | |

| locations[0].pdf_url | |

| locations[0].version | submittedVersion |

| locations[0].raw_type | |

| locations[0].license_id | |

| locations[0].is_accepted | False |

| locations[0].is_published | False |

| locations[0].raw_source_name | arXiv (Cornell University) |

| locations[0].landing_page_url | https://arxiv.org/abs/1812.02788 |

| locations[1].id | doi:10.48550/arxiv.1812.02788 |

| locations[1].is_oa | True |

| locations[1].source.id | https://openalex.org/S4306400194 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | True |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | arXiv (Cornell University) |

| locations[1].source.host_organization | https://openalex.org/I205783295 |

| locations[1].source.host_organization_name | Cornell University |

| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | |

| locations[1].raw_type | article |

| locations[1].license_id | |

| locations[1].is_accepted | False |

| locations[1].is_published | |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.1812.02788 |

| indexed_in | datacite |

| authorships[0].author.id | https://openalex.org/A5076182014 |

| authorships[0].author.orcid | https://orcid.org/0000-0002-4528-5084 |

| authorships[0].author.display_name | Miguel Lázaro-Gredilla |

| authorships[0].affiliations[0].raw_affiliation_string | Vicarious AI, CA, USA. |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Miguel Lázaro-Gredilla |

| authorships[0].is_corresponding | False |

| authorships[0].raw_affiliation_strings | Vicarious AI, CA, USA. |

| authorships[1].author.id | https://openalex.org/A5073265599 |

| authorships[1].author.orcid | |

| authorships[1].author.display_name | Dianhuan Lin |

| authorships[1].affiliations[0].raw_affiliation_string | Vicarious AI, CA, USA. |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Dianhuan Lin |

| authorships[1].is_corresponding | False |

| authorships[1].raw_affiliation_strings | Vicarious AI, CA, USA. |

| authorships[2].author.id | https://openalex.org/A5009263663 |

| authorships[2].author.orcid | https://orcid.org/0000-0002-0677-5590 |

| authorships[2].author.display_name | J. Swaroop Guntupalli |

| authorships[2].affiliations[0].raw_affiliation_string | Vicarious AI, CA, USA. |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | J. Swaroop Guntupalli |

| authorships[2].is_corresponding | False |

| authorships[2].raw_affiliation_strings | Vicarious AI, CA, USA. |

| authorships[3].author.id | https://openalex.org/A5101896611 |

| authorships[3].author.orcid | https://orcid.org/0000-0002-4948-6297 |

| authorships[3].author.display_name | Dileep George |

| authorships[3].affiliations[0].raw_affiliation_string | Vicarious AI, CA, USA. |

| authorships[3].author_position | last |

| authorships[3].raw_author_name | Dileep George |

| authorships[3].is_corresponding | False |

| authorships[3].raw_affiliation_strings | Vicarious AI, CA, USA. |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://arxiv.org/abs/1812.02788 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Beyond imitation: Zero-shot task transfer on robots by learning concepts as cognitive programs |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T06:51:31.235846 |

| primary_topic.id | https://openalex.org/T11714 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.9783999919891357 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1707 |

| primary_topic.subfield.display_name | Computer Vision and Pattern Recognition |

| primary_topic.display_name | Multimodal Machine Learning Applications |

| related_works | https://openalex.org/W2905304202, https://openalex.org/W2253442059, https://openalex.org/W2801033965, https://openalex.org/W2199045182, https://openalex.org/W3089903515, https://openalex.org/W2788927107, https://openalex.org/W3124628996, https://openalex.org/W3011910373, https://openalex.org/W145061141, https://openalex.org/W2185716157, https://openalex.org/W203070945, https://openalex.org/W2981063026, https://openalex.org/W3118914687, https://openalex.org/W2117111003, https://openalex.org/W2253509726, https://openalex.org/W3034230991, https://openalex.org/W2964313317, https://openalex.org/W3037075923, https://openalex.org/W3110393195, https://openalex.org/W81136467 |

| cited_by_count | 0 |

| locations_count | 2 |

| best_oa_location.id | mag:2952295220 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400194 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | arXiv (Cornell University) |

| best_oa_location.source.host_organization | https://openalex.org/I205783295 |

| best_oa_location.source.host_organization_name | Cornell University |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| best_oa_location.license | |

| best_oa_location.pdf_url | |

| best_oa_location.version | submittedVersion |

| best_oa_location.raw_type | |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | arXiv (Cornell University) |

| best_oa_location.landing_page_url | https://arxiv.org/abs/1812.02788 |

| primary_location.id | mag:2952295220 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400194 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | arXiv (Cornell University) |

| primary_location.source.host_organization | https://openalex.org/I205783295 |

| primary_location.source.host_organization_name | Cornell University |

| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| primary_location.license | |

| primary_location.pdf_url | |

| primary_location.version | submittedVersion |

| primary_location.raw_type | |

| primary_location.license_id | |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | arXiv (Cornell University) |

| primary_location.landing_page_url | https://arxiv.org/abs/1812.02788 |

| publication_date | 2018-12-06 |

| publication_year | 2018 |

| referenced_works_count | 0 |

| abstract_inverted_index.a | 15, 56, 72, 78, 98, 112, 121, 126, 158, 165 |

| abstract_inverted_index.By | 190 |

| abstract_inverted_index.If | 26 |

| abstract_inverted_index.To | 51 |

| abstract_inverted_index.We | 162 |

| abstract_inverted_index.as | 69, 178 |

| abstract_inverted_index.in | 10, 14, 186 |

| abstract_inverted_index.it | 34, 177 |

| abstract_inverted_index.of | 62, 77, 90, 111, 141, 151, 160, 208, 219 |

| abstract_inverted_index.on | 71, 195 |

| abstract_inverted_index.to | 40, 45, 124, 133, 171, 176, 216 |

| abstract_inverted_index.us | 214 |

| abstract_inverted_index.we | 54 |

| abstract_inverted_index.The | 87 |

| abstract_inverted_index.and | 7, 30, 44, 84, 103, 116, 143, 156, 181, 202, 226 |

| abstract_inverted_index.are | 67 |

| abstract_inverted_index.arm | 118 |

| abstract_inverted_index.can | 1, 129, 167 |

| abstract_inverted_index.for | 96 |

| abstract_inverted_index.has | 94 |

| abstract_inverted_index.how | 164 |

| abstract_inverted_index.new | 106 |

| abstract_inverted_index.our | 42, 211 |

| abstract_inverted_index.set | 89 |

| abstract_inverted_index.the | 11, 109, 131, 134, 139, 149, 206, 217 |

| abstract_inverted_index.use | 140, 168 |

| abstract_inverted_index.IKEA | 22 |

| abstract_inverted_index.Some | 136 |

| abstract_inverted_index.end, | 53 |

| abstract_inverted_index.from | 4, 24 |

| abstract_inverted_index.gaze | 102 |

| abstract_inverted_index.goal | 218 |

| abstract_inverted_index.have | 223 |

| abstract_inverted_index.into | 205 |

| abstract_inverted_index.like | 21 |

| abstract_inverted_index.more | 153 |

| abstract_inverted_index.that | 52, 59, 128, 222 |

| abstract_inverted_index.then | 182 |

| abstract_inverted_index.this | 91 |

| abstract_inverted_index.work | 212 |

| abstract_inverted_index.apply | 8, 183 |

| abstract_inverted_index.could | 28 |

| abstract_inverted_index.human | 63 |

| abstract_inverted_index.ideas | 194 |

| abstract_inverted_index.image | 5 |

| abstract_inverted_index.infer | 2, 31 |

| abstract_inverted_index.input | 132 |

| abstract_inverted_index.novel | 73, 173 |

| abstract_inverted_index.pairs | 6 |

| abstract_inverted_index.realm | 207 |

| abstract_inverted_index.robot | 166 |

| abstract_inverted_index.tasks | 20, 47 |

| abstract_inverted_index.their | 38 |

| abstract_inverted_index.these | 169 |

| abstract_inverted_index.those | 9, 184 |

| abstract_inverted_index.world | 13 |

| abstract_inverted_index.would | 35 |

| abstract_inverted_index.Humans | 0 |

| abstract_inverted_index.action | 85 |

| abstract_inverted_index.brings | 213 |

| abstract_inverted_index.closer | 215 |

| abstract_inverted_index.create | 157 |

| abstract_inverted_index.intent | 43 |

| abstract_inverted_index.mental | 196 |

| abstract_inverted_index.robots | 27, 221 |

| abstract_inverted_index.scene, | 100 |

| abstract_inverted_index.visual | 79, 99, 113 |

| abstract_inverted_index.ability | 39 |

| abstract_inverted_index.aspects | 61 |

| abstract_inverted_index.between | 48 |

| abstract_inverted_index.concept | 64, 122 |

| abstract_inverted_index.deictic | 203 |

| abstract_inverted_index.images, | 180 |

| abstract_inverted_index.improve | 37 |

| abstract_inverted_index.learned | 146 |

| abstract_inverted_index.machine | 209 |

| abstract_inverted_index.memory, | 83, 115 |

| abstract_inverted_index.output. | 135 |

| abstract_inverted_index.parsing | 97 |

| abstract_inverted_index.program | 127 |

| abstract_inverted_index.require | 138 |

| abstract_inverted_index.science | 193 |

| abstract_inverted_index.system, | 81 |

| abstract_inverted_index.working | 82, 114 |

| abstract_inverted_index.Concepts | 66 |

| abstract_inverted_index.assembly | 23 |

| abstract_inverted_index.bringing | 191 |

| abstract_inverted_index.building | 220 |

| abstract_inverted_index.commands | 95 |

| abstract_inverted_index.computer | 74 |

| abstract_inverted_index.concepts | 3, 137, 147, 174, 185 |

| abstract_inverted_index.contents | 110 |

| abstract_inverted_index.embodied | 200 |

| abstract_inverted_index.enabling | 19 |

| abstract_inverted_index.imagery, | 197 |

| abstract_inverted_index.inducing | 125 |

| abstract_inverted_index.learning | 150 |

| abstract_inverted_index.objects, | 107 |

| abstract_inverted_index.physical | 12 |

| abstract_inverted_index.programs | 70 |

| abstract_inverted_index.setting, | 18 |

| abstract_inverted_index.simplify | 148 |

| abstract_inverted_index.symbols, | 199 |

| abstract_inverted_index.transfer | 46 |

| abstract_inverted_index.Inferring | 120 |

| abstract_inverted_index.cognitive | 192 |

| abstract_inverted_index.computer" | 93 |

| abstract_inverted_index.concepts, | 33, 155 |

| abstract_inverted_index.diagrams. | 25 |

| abstract_inverted_index.different | 17, 49, 188 |

| abstract_inverted_index.directing | 101 |

| abstract_inverted_index.elaborate | 154 |

| abstract_inverted_index.framework | 58 |

| abstract_inverted_index.hierarchy | 159 |

| abstract_inverted_index.imagining | 105 |

| abstract_inverted_index.interpret | 172 |

| abstract_inverted_index.introduce | 55 |

| abstract_inverted_index.learning, | 210 |

| abstract_inverted_index.learning. | 65 |

| abstract_inverted_index.movement. | 119 |

| abstract_inverted_index.presented | 175 |

| abstract_inverted_index.represent | 29 |

| abstract_inverted_index.schematic | 179 |

| abstract_inverted_index.transform | 130 |

| abstract_inverted_index."cognitive | 92 |

| abstract_inverted_index.Previously | 145 |

| abstract_inverted_index.attention, | 104 |

| abstract_inverted_index.cognition, | 201 |

| abstract_inverted_index.completely | 16 |

| abstract_inverted_index.consisting | 76 |

| abstract_inverted_index.high-level | 32 |

| abstract_inverted_index.mechanisms | 204 |

| abstract_inverted_index.perception | 80 |

| abstract_inverted_index.perceptual | 198 |

| abstract_inverted_index.recursion. | 144 |

| abstract_inverted_index.replicates | 60 |

| abstract_inverted_index.subsequent | 152 |

| abstract_inverted_index.understand | 41 |

| abstract_inverted_index.controller. | 86 |

| abstract_inverted_index.controlling | 117 |

| abstract_inverted_index.corresponds | 123 |

| abstract_inverted_index.demonstrate | 163 |

| abstract_inverted_index.imagination | 142 |

| abstract_inverted_index.instruction | 88 |

| abstract_inverted_index.represented | 68 |

| abstract_inverted_index.situations. | 189 |

| abstract_inverted_index.abstractions | 170 |

| abstract_inverted_index.architecture | 75 |

| abstract_inverted_index.commonsense. | 227 |

| abstract_inverted_index.dramatically | 187 |

| abstract_inverted_index.manipulating | 108 |

| abstract_inverted_index.abstractions. | 161 |

| abstract_inverted_index.computational | 57 |

| abstract_inverted_index.environments. | 50 |

| abstract_inverted_index.interpretable | 224 |

| abstract_inverted_index.significantly | 36 |

| abstract_inverted_index.representations | 225 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 4 |

| sustainable_development_goals[0].id | https://metadata.un.org/sdg/4 |

| sustainable_development_goals[0].score | 0.4099999964237213 |

| sustainable_development_goals[0].display_name | Quality Education |

| citation_normalized_percentile |
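
The `abstract_inverted_index.*` rows in the payload above store the abstract as a mapping from each token to the word positions where it occurs. A minimal sketch of turning such a mapping back into running text follows. The function name `rebuild_abstract` and the `sample` dictionary are illustrative assumptions; `sample` keeps only positions 0 to 6 of the tokens listed in the payload, so it reproduces just the opening words of the abstract.

```python
def rebuild_abstract(inverted_index):
    """Invert a {token: [positions]} mapping back into a single string.

    Tokens are placed at their recorded positions and joined with
    single spaces in ascending position order.
    """
    by_position = {}
    for token, positions in inverted_index.items():
        for position in positions:
            by_position[position] = token
    return " ".join(by_position[i] for i in sorted(by_position))


# Hypothetical excerpt: positions 0-6 only, taken from the payload rows.
sample = {
    "Humans": [0],
    "can": [1],
    "infer": [2],
    "concepts": [3],
    "from": [4],
    "image": [5],
    "pairs": [6],
}

if __name__ == "__main__":
    print(rebuild_abstract(sample))
```

Punctuation stays attached to tokens in this representation (for example `scene,` and `output.` appear as separate keys from their bare forms), so joining on spaces is enough to recover the original sentence boundaries.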