DAGANFuse: Infrared and Visible Image Fusion Based on Differential Features Attention Generative Adversarial Networks

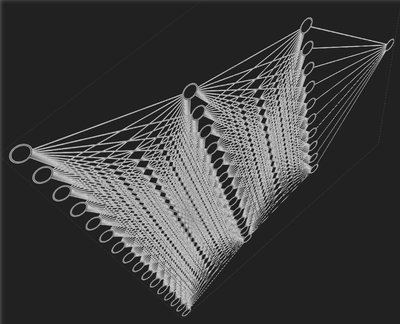

Multi-modal visual information fusion combines data from multiple sensors into a single image that has higher quality, richer information, and greater clarity. The fused image should contain more complementary information and fewer redundant features. Infrared sensors detect the thermal radiation emitted by objects, which depends on their temperature. Visible-light sensors form images by capturing light that interacts with objects through reflection, diffusion, and transmission. Because the two sensors work on different physical principles, the infrared and visible images they produce look very different, which makes complementary information difficult to extract. Existing methods generally fuse features at the fusion layer by simple concatenation or addition. They ignore the intrinsic features of each modality and the interaction of features across scales. Moreover, they consider only the correlation between modalities, whereas image fusion should focus more on their complementarity. To address this, we propose DAGANFuse, a cross-scale differential features attention generative adversarial fusion network. In the generator, we design a cross-modal differential features attention module that fuses the intrinsic content of images from different modalities. Attention weights are computed along two parallel paths, one for differential features and one for fused features, and spatial and channel attention weights are calculated in parallel on each path. In the discriminator, a dual discriminator maintains the information balance between the modalities and avoids common problems such as blurred information and lost texture details. Experimental results show that DAGANFuse achieves state-of-the-art (SOTA) fusion performance and outperforms existing methods.
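The abstract describes the generator's cross-modal differential features attention module only at a high level. The pure-Python sketch below shows one plausible reading of it. It rests on several assumptions. The differential path is the element-wise difference of the infrared and visible feature maps, and the fusion path is their element-wise sum. Each path computes channel attention (global average pooling) and spatial attention (mean over channels) in parallel. The two are combined additively before a sigmoid, similar to the Bottleneck Attention Module (BAM). The learned convolutions of a real network are omitted. All function names (`parallel_attention`, `differential_attention_fuse`, `elementwise`) are hypothetical and are not the authors' code.

```python
import math
from typing import Callable, List

# Feature maps are nested lists indexed as [channel][row][col].
FeatureMap = List[List[List[float]]]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def elementwise(f: Callable[[float, float], float],
                a: FeatureMap, b: FeatureMap) -> FeatureMap:
    # Apply f to matching elements of two equally shaped feature maps.
    return [[[f(p, q) for p, q in zip(row_a, row_b)]
             for row_a, row_b in zip(ch_a, ch_b)]
            for ch_a, ch_b in zip(a, b)]


def parallel_attention(x: FeatureMap) -> FeatureMap:
    # Hypothetical: channel and spatial weights are computed independently
    # (in parallel) and summed before the sigmoid; no learned layers here.
    n_c, n_h, n_w = len(x), len(x[0]), len(x[0][0])
    # Channel branch: global average pooling of each channel.
    channel = [sum(sum(row) for row in x[c]) / (n_h * n_w) for c in range(n_c)]
    # Spatial branch: mean over channels at every pixel.
    spatial = [[sum(x[c][i][j] for c in range(n_c)) / n_c for j in range(n_w)]
               for i in range(n_h)]
    return [[[sigmoid(channel[c] + spatial[i][j]) for j in range(n_w)]
             for i in range(n_h)]
            for c in range(n_c)]


def differential_attention_fuse(ir: FeatureMap, vis: FeatureMap) -> FeatureMap:
    # Differential path: what one modality has that the other lacks.
    diff = elementwise(lambda p, q: p - q, ir, vis)
    # Fusion path: content carried by both modalities together.
    fused = elementwise(lambda p, q: p + q, ir, vis)
    a_diff = parallel_attention(diff)
    a_fused = parallel_attention(fused)
    # Reweight each path with its own attention map, then sum the two paths.
    weighted_diff = elementwise(lambda p, w: p * w, diff, a_diff)
    weighted_fused = elementwise(lambda p, w: p * w, fused, a_fused)
    return elementwise(lambda p, q: p + q, weighted_fused, weighted_diff)
```

For example, `differential_attention_fuse([[[1.0, 0.0]]], [[[0.0, 0.0]]])` returns 0.0 at the second pixel, where both modalities are zero. At the first pixel only the infrared feature responds, so the pixel receives weight from both paths and the result is 2·σ(1.5) ≈ 1.635.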
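The loss terms alone are enough to illustrate the dual-discriminator idea. This sketch assumes a least-squares GAN objective and one discriminator per modality: one judges fused images against infrared images, and the other judges them against visible images. The abstract does not specify the loss form, so the objective, the `balance` weight, and all function names are assumptions made for illustration.

```python
from typing import Sequence, Tuple


def mean(xs: Sequence[float]) -> float:
    if not xs:
        raise ValueError("need at least one discriminator score")
    return sum(xs) / len(xs)


def lsgan_d_loss(real: Sequence[float], fake: Sequence[float]) -> float:
    # Discriminator pushes scores on real images toward 1 and fused toward 0.
    return 0.5 * mean([(s - 1.0) ** 2 for s in real]) + 0.5 * mean([s ** 2 for s in fake])


def lsgan_g_loss(fake: Sequence[float]) -> float:
    # Generator wants its fused images to be scored as real (1).
    return 0.5 * mean([(s - 1.0) ** 2 for s in fake])


def dual_discriminator_losses(
    d_ir_real: Sequence[float], d_ir_fused: Sequence[float],
    d_vis_real: Sequence[float], d_vis_fused: Sequence[float],
    balance: float = 1.0,
) -> Tuple[float, float, float]:
    """Return (infrared D loss, visible D loss, generator adversarial loss).

    d_ir_*  : scores from the hypothetical infrared discriminator.
    d_vis_* : scores from the hypothetical visible discriminator.
    balance : hypothetical weight on the visible adversarial term.
    """
    loss_d_ir = lsgan_d_loss(d_ir_real, d_ir_fused)
    loss_d_vis = lsgan_d_loss(d_vis_real, d_vis_fused)
    # The generator must fool both discriminators at once, so it cannot
    # trade one modality's information away for the other's.
    loss_g = lsgan_g_loss(d_ir_fused) + balance * lsgan_g_loss(d_vis_fused)
    return loss_d_ir, loss_d_vis, loss_g
```

The generator's adversarial term sums the losses from both discriminators. Fooling only one of them therefore still leaves a positive loss. That is the intuition behind using two discriminators to keep the modalities balanced.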

- Type: article
- Language: en
- Landing page: https://doi.org/10.3390/app15084560 (PDF: https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320)
- OA status: gold
- Cited by: 2
- References: 46
- Related works: 10
- OpenAlex ID: https://openalex.org/W4409621523

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4409621523
- DOI: https://doi.org/10.3390/app15084560
- Title: DAGANFuse: Infrared and Visible Image Fusion Based on Differential Features Attention Generative Adversarial Networks
- Type: article
- Language: en
- Publication year: 2025
- Publication date: 2025-04-21
- Authors: Yuxin Wen, Wenjun Liu
- Landing page: https://doi.org/10.3390/app15084560
- PDF URL: https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320
- Open access: Yes
- OA status: gold
- OA URL: https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320
- Concepts: Adversarial system, Computer science, Artificial intelligence, Generative grammar, Generative adversarial network, Computer vision, Image (mathematics)
- Cited by: 2
- Citations by year (recent): 2025: 2
- References (count): 46
- Related works (count): 10

Full payload

| Field | Value |
|---|---|
| id | https://openalex.org/W4409621523 |
| doi | https://doi.org/10.3390/app15084560 |
| ids.doi | https://doi.org/10.3390/app15084560 |
| ids.openalex | https://openalex.org/W4409621523 |
| fwci | 7.03394381 |
| type | article |
| title | DAGANFuse: Infrared and Visible Image Fusion Based on Differential Features Attention Generative Adversarial Networks |
| biblio.issue | 8 |
| biblio.volume | 15 |
| biblio.last_page | 4560 |
| biblio.first_page | 4560 |
| topics[0].id | https://openalex.org/T11659 |
| topics[0].field.id | https://openalex.org/fields/22 |
| topics[0].field.display_name | Engineering |
| topics[0].score | 1.0 |
| topics[0].domain.id | https://openalex.org/domains/3 |
| topics[0].domain.display_name | Physical Sciences |
| topics[0].subfield.id | https://openalex.org/subfields/2214 |
| topics[0].subfield.display_name | Media Technology |
| topics[0].display_name | Advanced Image Fusion Techniques |
| topics[1].id | https://openalex.org/T10689 |
| topics[1].field.id | https://openalex.org/fields/22 |
| topics[1].field.display_name | Engineering |
| topics[1].score | 0.995199978351593 |
| topics[1].domain.id | https://openalex.org/domains/3 |
| topics[1].domain.display_name | Physical Sciences |
| topics[1].subfield.id | https://openalex.org/subfields/2214 |
| topics[1].subfield.display_name | Media Technology |
| topics[1].display_name | Remote-Sensing Image Classification |
| topics[2].id | https://openalex.org/T11019 |
| topics[2].field.id | https://openalex.org/fields/17 |
| topics[2].field.display_name | Computer Science |
| topics[2].score | 0.9948999881744385 |
| topics[2].domain.id | https://openalex.org/domains/3 |
| topics[2].domain.display_name | Physical Sciences |
| topics[2].subfield.id | https://openalex.org/subfields/1707 |
| topics[2].subfield.display_name | Computer Vision and Pattern Recognition |
| topics[2].display_name | Image Enhancement Techniques |
| is_xpac | False |
| apc_list.value | 2300 |
| apc_list.currency | CHF |
| apc_list.value_usd | 2490 |
| apc_paid.value | 2300 |
| apc_paid.currency | CHF |
| apc_paid.value_usd | 2490 |
| concepts[0].id | https://openalex.org/C37736160 |
| concepts[0].level | 2 |
| concepts[0].score | 0.6041284203529358 |
| concepts[0].wikidata | https://www.wikidata.org/wiki/Q1801315 |
| concepts[0].display_name | Adversarial system |
| concepts[1].id | https://openalex.org/C41008148 |
| concepts[1].level | 0 |
| concepts[1].score | 0.5043076276779175 |
| concepts[1].wikidata | https://www.wikidata.org/wiki/Q21198 |
| concepts[1].display_name | Computer science |
| concepts[2].id | https://openalex.org/C154945302 |
| concepts[2].level | 1 |
| concepts[2].score | 0.49518099427223206 |
| concepts[2].wikidata | https://www.wikidata.org/wiki/Q11660 |
| concepts[2].display_name | Artificial intelligence |
| concepts[3].id | https://openalex.org/C39890363 |
| concepts[3].level | 2 |
| concepts[3].score | 0.4915200173854828 |
| concepts[3].wikidata | https://www.wikidata.org/wiki/Q36108 |
| concepts[3].display_name | Generative grammar |
| concepts[4].id | https://openalex.org/C2988773926 |
| concepts[4].level | 3 |
| concepts[4].score | 0.42859771847724915 |
| concepts[4].wikidata | https://www.wikidata.org/wiki/Q25104379 |
| concepts[4].display_name | Generative adversarial network |
| concepts[5].id | https://openalex.org/C31972630 |
| concepts[5].level | 1 |
| concepts[5].score | 0.4262318015098572 |
| concepts[5].wikidata | https://www.wikidata.org/wiki/Q844240 |
| concepts[5].display_name | Computer vision |
| concepts[6].id | https://openalex.org/C115961682 |
| concepts[6].level | 2 |
| concepts[6].score | 0.27507761120796204 |
| concepts[6].wikidata | https://www.wikidata.org/wiki/Q860623 |
| concepts[6].display_name | Image (mathematics) |
| keywords[0].id | https://openalex.org/keywords/adversarial-system |
| keywords[0].score | 0.6041284203529358 |
| keywords[0].display_name | Adversarial system |
| keywords[1].id | https://openalex.org/keywords/computer-science |
| keywords[1].score | 0.5043076276779175 |
| keywords[1].display_name | Computer science |
| keywords[2].id | https://openalex.org/keywords/artificial-intelligence |
| keywords[2].score | 0.49518099427223206 |
| keywords[2].display_name | Artificial intelligence |
| keywords[3].id | https://openalex.org/keywords/generative-grammar |
| keywords[3].score | 0.4915200173854828 |
| keywords[3].display_name | Generative grammar |
| keywords[4].id | https://openalex.org/keywords/generative-adversarial-network |
| keywords[4].score | 0.42859771847724915 |
| keywords[4].display_name | Generative adversarial network |
| keywords[5].id | https://openalex.org/keywords/computer-vision |
| keywords[5].score | 0.4262318015098572 |
| keywords[5].display_name | Computer vision |
| keywords[6].id | https://openalex.org/keywords/image |
| keywords[6].score | 0.27507761120796204 |
| keywords[6].display_name | Image (mathematics) |
| language | en |
| locations[0].id | doi:10.3390/app15084560 |
| locations[0].is_oa | True |
| locations[0].source.id | https://openalex.org/S4210205812 |
| locations[0].source.issn | 2076-3417 |
| locations[0].source.type | journal |
| locations[0].source.is_oa | True |
| locations[0].source.issn_l | 2076-3417 |
| locations[0].source.is_core | True |
| locations[0].source.is_in_doaj | True |
| locations[0].source.display_name | Applied Sciences |
| locations[0].source.host_organization | https://openalex.org/P4310310987 |
| locations[0].source.host_organization_name | Multidisciplinary Digital Publishing Institute |
| locations[0].source.host_organization_lineage | https://openalex.org/P4310310987 |
| locations[0].source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |
| locations[0].license | cc-by |
| locations[0].pdf_url | https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320 |
| locations[0].version | publishedVersion |
| locations[0].raw_type | journal-article |
| locations[0].license_id | https://openalex.org/licenses/cc-by |
| locations[0].is_accepted | True |
| locations[0].is_published | True |
| locations[0].raw_source_name | Applied Sciences |
| locations[0].landing_page_url | https://doi.org/10.3390/app15084560 |
| locations[1].id | pmh:oai:doaj.org/article:3950c0184e394e80bee911182323b097 |
| locations[1].is_oa | False |
| locations[1].source.id | https://openalex.org/S4306401280 |
| locations[1].source.issn | |
| locations[1].source.type | repository |
| locations[1].source.is_oa | False |
| locations[1].source.issn_l | |
| locations[1].source.is_core | False |
| locations[1].source.is_in_doaj | False |
| locations[1].source.display_name | DOAJ (DOAJ: Directory of Open Access Journals) |
| locations[1].source.host_organization | |
| locations[1].source.host_organization_name | |
| locations[1].license | |
| locations[1].pdf_url | |
| locations[1].version | submittedVersion |
| locations[1].raw_type | article |
| locations[1].license_id | |
| locations[1].is_accepted | False |
| locations[1].is_published | False |
| locations[1].raw_source_name | Applied Sciences, Vol 15, Iss 8, p 4560 (2025) |
| locations[1].landing_page_url | https://doaj.org/article/3950c0184e394e80bee911182323b097 |
| indexed_in | crossref, doaj |
| authorships[0].author.id | https://openalex.org/A5078840961 |
| authorships[0].author.orcid | |
| authorships[0].author.display_name | Yuxin Wen |
| authorships[0].countries | CN |
| authorships[0].affiliations[0].institution_ids | https://openalex.org/I4210144662 |
| authorships[0].affiliations[0].raw_affiliation_string | Xi’an Institute of Optics and Precision Mechanics of CAS, Xi’an 710119, China |
| authorships[0].affiliations[1].institution_ids | https://openalex.org/I4210165038 |
| authorships[0].affiliations[1].raw_affiliation_string | School of Optoelectronics, University of Chinese Academy of Sciences, Beijing 100049, China |
| authorships[0].institutions[0].id | https://openalex.org/I4210165038 |
| authorships[0].institutions[0].ror | https://ror.org/05qbk4x57 |
| authorships[0].institutions[0].type | education |
| authorships[0].institutions[0].lineage | https://openalex.org/I19820366, https://openalex.org/I4210165038 |
| authorships[0].institutions[0].country_code | CN |
| authorships[0].institutions[0].display_name | University of Chinese Academy of Sciences |
| authorships[0].institutions[1].id | https://openalex.org/I4210144662 |
| authorships[0].institutions[1].ror | https://ror.org/0444j5556 |
| authorships[0].institutions[1].type | facility |
| authorships[0].institutions[1].lineage | https://openalex.org/I19820366, https://openalex.org/I4210144662 |
| authorships[0].institutions[1].country_code | CN |
| authorships[0].institutions[1].display_name | Xi'an Institute of Optics and Precision Mechanics |
| authorships[0].author_position | first |
| authorships[0].raw_author_name | Yuxin Wen |
| authorships[0].is_corresponding | False |
| authorships[0].raw_affiliation_strings | School of Optoelectronics, University of Chinese Academy of Sciences, Beijing 100049, China, Xi’an Institute of Optics and Precision Mechanics of CAS, Xi’an 710119, China |
| authorships[1].author.id | https://openalex.org/A5052333801 |
| authorships[1].author.orcid | https://orcid.org/0000-0001-9380-2990 |
| authorships[1].author.display_name | Wenjun Liu |
| authorships[1].countries | CN |
| authorships[1].affiliations[0].institution_ids | https://openalex.org/I4210144662 |
| authorships[1].affiliations[0].raw_affiliation_string | Xi’an Institute of Optics and Precision Mechanics of CAS, Xi’an 710119, China |
| authorships[1].institutions[0].id | https://openalex.org/I4210144662 |
| authorships[1].institutions[0].ror | https://ror.org/0444j5556 |
| authorships[1].institutions[0].type | facility |
| authorships[1].institutions[0].lineage | https://openalex.org/I19820366, https://openalex.org/I4210144662 |
| authorships[1].institutions[0].country_code | CN |
| authorships[1].institutions[0].display_name | Xi'an Institute of Optics and Precision Mechanics |
| authorships[1].author_position | last |
| authorships[1].raw_author_name | Wen Liu |
| authorships[1].is_corresponding | False |
| authorships[1].raw_affiliation_strings | Xi’an Institute of Optics and Precision Mechanics of CAS, Xi’an 710119, China |
| has_content.pdf | True |
| has_content.grobid_xml | False |
| is_paratext | False |
| open_access.is_oa | True |
| open_access.oa_url | https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320 |
| open_access.oa_status | gold |
| open_access.any_repository_has_fulltext | False |
| created_date | 2025-10-10T00:00:00 |
| display_name | DAGANFuse: Infrared and Visible Image Fusion Based on Differential Features Attention Generative Adversarial Networks |
| has_fulltext | False |
| is_retracted | False |
| updated_date | 2025-11-06T03:46:38.306776 |
| primary_topic.id | https://openalex.org/T11659 |
| primary_topic.field.id | https://openalex.org/fields/22 |
| primary_topic.field.display_name | Engineering |
| primary_topic.score | 1.0 |
| primary_topic.domain.id | https://openalex.org/domains/3 |
| primary_topic.domain.display_name | Physical Sciences |
| primary_topic.subfield.id | https://openalex.org/subfields/2214 |
| primary_topic.subfield.display_name | Media Technology |
| primary_topic.display_name | Advanced Image Fusion Techniques |
| related_works | https://openalex.org/W2772917594, https://openalex.org/W2036807459, https://openalex.org/W2058170566, https://openalex.org/W2755342338, https://openalex.org/W2166024367, https://openalex.org/W3116076068, https://openalex.org/W2229312674, https://openalex.org/W2951359407, https://openalex.org/W2079911747, https://openalex.org/W1969923398 |
| cited_by_count | 2 |
| counts_by_year[0].year | 2025 |
| counts_by_year[0].cited_by_count | 2 |
| locations_count | 2 |
| best_oa_location.id | doi:10.3390/app15084560 |
| best_oa_location.is_oa | True |
| best_oa_location.source.id | https://openalex.org/S4210205812 |
| best_oa_location.source.issn | 2076-3417 |
| best_oa_location.source.type | journal |
| best_oa_location.source.is_oa | True |
| best_oa_location.source.issn_l | 2076-3417 |
| best_oa_location.source.is_core | True |
| best_oa_location.source.is_in_doaj | True |
| best_oa_location.source.display_name | Applied Sciences |
| best_oa_location.source.host_organization | https://openalex.org/P4310310987 |
| best_oa_location.source.host_organization_name | Multidisciplinary Digital Publishing Institute |
| best_oa_location.source.host_organization_lineage | https://openalex.org/P4310310987 |
| best_oa_location.source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |
| best_oa_location.license | cc-by |
| best_oa_location.pdf_url | https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320 |
| best_oa_location.version | publishedVersion |
| best_oa_location.raw_type | journal-article |
| best_oa_location.license_id | https://openalex.org/licenses/cc-by |
| best_oa_location.is_accepted | True |
| best_oa_location.is_published | True |
| best_oa_location.raw_source_name | Applied Sciences |
| best_oa_location.landing_page_url | https://doi.org/10.3390/app15084560 |
| primary_location.id | doi:10.3390/app15084560 |
| primary_location.is_oa | True |
| primary_location.source.id | https://openalex.org/S4210205812 |
| primary_location.source.issn | 2076-3417 |
| primary_location.source.type | journal |
| primary_location.source.is_oa | True |
| primary_location.source.issn_l | 2076-3417 |
| primary_location.source.is_core | True |
| primary_location.source.is_in_doaj | True |
| primary_location.source.display_name | Applied Sciences |
| primary_location.source.host_organization | https://openalex.org/P4310310987 |
| primary_location.source.host_organization_name | Multidisciplinary Digital Publishing Institute |
| primary_location.source.host_organization_lineage | https://openalex.org/P4310310987 |
| primary_location.source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |
| primary_location.license | cc-by |
| primary_location.pdf_url | https://www.mdpi.com/2076-3417/15/8/4560/pdf?version=1745223320 |
| primary_location.version | publishedVersion |
| primary_location.raw_type | journal-article |
| primary_location.license_id | https://openalex.org/licenses/cc-by |
| primary_location.is_accepted | True |
| primary_location.is_published | True |
| primary_location.raw_source_name | Applied Sciences |
| primary_location.landing_page_url | https://doi.org/10.3390/app15084560 |
| publication_date | 2025-04-21 |
| publication_year | 2025 |
| referenced_works | https://openalex.org/W2809795042, https://openalex.org/W2044557921, https://openalex.org/W2532801510, https://openalex.org/W2035848186, https://openalex.org/W2589745805, https://openalex.org/W2266694576, https://openalex.org/W2474462684, https://openalex.org/W3039415429, https://openalex.org/W3158080681, https://openalex.org/W2739748921, https://openalex.org/W3011768656, https://openalex.org/W4283461338, https://openalex.org/W2798987894, https://openalex.org/W3035467948, https://openalex.org/W3172595589, https://openalex.org/W3083923056, https://openalex.org/W6806473657, https://openalex.org/W3198582484, https://openalex.org/W2772136803, https://openalex.org/W2999838674, https://openalex.org/W3107998196, https://openalex.org/W2549139847, https://openalex.org/W2963446712, https://openalex.org/W4220893768, https://openalex.org/W4206713196, https://openalex.org/W3046194589, https://openalex.org/W2912147220, https://openalex.org/W2963134949, https://openalex.org/W6787015245, https://openalex.org/W3133692381, https://openalex.org/W4288045761, https://openalex.org/W3030921250, https://openalex.org/W3216678721, https://openalex.org/W2884585870, https://openalex.org/W3094253649, https://openalex.org/W3007891240, https://openalex.org/W3126855404, https://openalex.org/W1997596006, https://openalex.org/W3143068962, https://openalex.org/W2054273865, https://openalex.org/W2153777140, https://openalex.org/W3102411220, https://openalex.org/W4206390057, https://openalex.org/W3104771364, https://openalex.org/W3105639468, https://openalex.org/W3108042295 |
| referenced_works_count | 46 |
| cited_by_percentile_year.max | 97 |
| cited_by_percentile_year.min | 95 |
| countries_distinct_count | 1 |
| institutions_distinct_count | 2 |
| citation_normalized_percentile.value | 0.93157426 |
| citation_normalized_percentile.is_in_top_1_percent | False |
| citation_normalized_percentile.is_in_top_10_percent | True |