Efficient Application of Deep Neural Networks for Identifying Small and Multiple Weed Patches Using Drone Images

2024 · Open Access · DOI: https://doi.org/10.1109/access.2024.3402213


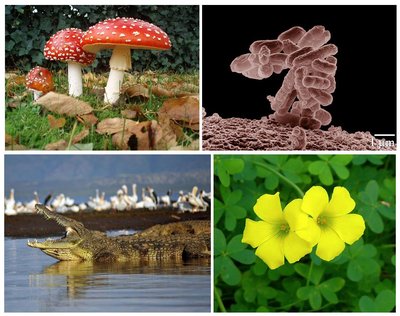

Deep learning (DL) based localization, classification, and detection of camouflage targets in UAV data is an evolving and promising area of digital image processing and computer vision. Weed, an undesirable plant that hinders crop growth, acts as a camouflage target with respect to the sugarcane crop (Saccharum officinarum). Weed merges with the crop through green-on-green coloring (background matching) and shows similar spectral behavior, which produces a visually complex camouflage habitat in which weed is difficult to distinguish from crop. This concealment in the sugarcane field is what qualifies weed as a camouflage target. In this scenario, weed is also distributed as multiple small patches across the UAV imagery, which complicates detection further and calls for pixel-based classification followed by target detection. The research problem is to detect weed (the camouflage target) at the smallest resolvable pixel size (2-4 cm/px) despite its merging color and the difficulty of differentiating it from the sugarcane crop (the background). To address this challenge, color and texture are exploited as the important feature representations. They are extracted from the UAV image(s) and used by a rich feature-based Deep Neural Network (DNN) to detect small and multiple weed patches. DNNs provide feature-based elastic transformations, expedited processing, improved accuracy, and optimized mapping and detection. These representation-based networks process color and texture feature representations as a unified component, pixel by pixel, and detect camouflage targets through their self-learning capabilities, in contrast to traditional classification approaches.
Because DNNs are well suited to camouflage target detection problems, we propose a methodology based on deep learning feature-based modelling that generates classified and localized image maps highlighting multiple small weed patches, at the smallest pixel level possible, against a merged green-on-green background. The methodology self-learns exhaustive features from the UAV imagery via feature transformations, which reduces feature bias and shows improved prospects with respect to sensitivity to the imagery background. This results in improved localization and classification of camouflage targets in natural backgrounds compared with traditional target detection and localization modelling techniques. The proposed methodology detects small weed patches, whose color behavior is similar to that of their background, with an accuracy of 90.5%.
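
The abstract names color and texture as the per-pixel feature representations but does not specify how they are computed or which network consumes them. The sketch below is therefore only an illustration of what hand-computed per-pixel color and texture cues can look like, not the authors' method. The Excess Green index (2g - r - b) and the local standard deviation are stand-ins chosen for this example, the function names are hypothetical, and the window size `k` is assumed to be odd.

```python
import numpy as np


def excess_green(rgb: np.ndarray) -> np.ndarray:
    """Per-pixel Excess Green index (2g - r - b) on chromaticity-normalised channels."""
    rgb = rgb.astype(np.float64)
    total = rgb.sum(axis=-1, keepdims=True)
    total[total == 0] = 1.0  # avoid division by zero on pure-black pixels
    r, g, b = np.moveaxis(rgb / total, -1, 0)
    return 2.0 * g - r - b


def local_std(gray: np.ndarray, k: int = 3) -> np.ndarray:
    """Standard deviation over a k x k window around each pixel (k odd, edge-padded)."""
    pad = k // 2
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k))
    return windows.std(axis=(-2, -1))


def pixel_features(rgb: np.ndarray, k: int = 3) -> np.ndarray:
    """Stack one color cue and one texture cue into an (H, W, 2) feature map."""
    gray = rgb.astype(np.float64).mean(axis=-1)
    return np.stack([excess_green(rgb), local_std(gray, k)], axis=-1)
```

In a green-on-green scene a color index alone is unlikely to separate weed from crop, which is one reason to pair it with a texture cue before handing the features to a pixel-wise classifier.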

Related Topics

- Type: article
- Language: en
- Landing Page: https://doi.org/10.1109/access.2024.3402213 (PDF: https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf)
- OA Status: gold
- Cited By: 8
- References: 48
- Related Works: 10
- OpenAlex ID: https://openalex.org/W4397026421

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4397026421 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.1109/access.2024.3402213 (Digital Object Identifier)
- Title: Efficient Application of Deep Neural Networks for Identifying Small and Multiple Weed Patches Using Drone Images (work title)
- Type: article (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2024 (year of publication)
- Publication date: 2024-01-01 (full publication date if available)
- Authors: Vyomika Singh, Dharmendra Singh, Harish Kumar (list of authors in order)
- Landing page: https://doi.org/10.1109/access.2024.3402213 (publisher landing page)
- PDF URL: https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf (direct link to full text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: gold (open access status per OpenAlex)
- OA URL: https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf (direct OA link when available)
- Concepts: Camouflage, Artificial intelligence, Computer science, Weed, Drone, Deep learning, Pixel, Feature (linguistics), Computer vision, Pattern recognition (psychology), Feature extraction, Ecology, Genetics, Linguistics, Biology, Philosophy (top concepts, i.e. fields/topics, attached by OpenAlex)
- Cited by: 8 (total citation count in OpenAlex)
- Citations by year (recent): 2025: 4, 2024: 4 (per-year citation counts, last 5 years)
- References (count): 48 (number of works referenced by this work)
- Related works (count): 10 (other works algorithmically related by OpenAlex)
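
The dotted keys used in the full payload on this page, such as `topics[0].field.id` or `authorships[0].author.display_name`, are consistent with flattening a nested JSON record into key/value rows. A minimal sketch of such a flattening follows. The function name `flatten` and the joining of scalar lists with `", "` are assumptions made to mirror how rows like `indexed_in` appear here; this is not a documented OpenAlex utility.

```python
from typing import Any, Dict


def flatten(node: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts/lists into dotted keys like 'topics[0].field.id'."""
    flat: Dict[str, Any] = {}
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{prefix}.{key}" if prefix else key
            flat.update(flatten(value, child))
    elif isinstance(node, list):
        if any(isinstance(item, dict) for item in node):
            # Lists of objects get an index per element.
            for i, item in enumerate(node):
                flat.update(flatten(item, f"{prefix}[{i}]"))
        else:
            # Lists of scalars are shown as one comma-separated value (assumption).
            flat[prefix] = ", ".join(str(item) for item in node)
    else:
        flat[prefix] = node
    return flat


# Hypothetical, heavily truncated record used only to exercise the function.
record = {
    "id": "https://openalex.org/W4397026421",
    "biblio": {"volume": "12"},
    "topics": [{"field": {"display_name": "Agricultural and Biological Sciences"}}],
    "indexed_in": ["crossref", "doaj"],
}
flat = flatten(record)
```

Each entry of `flat` then corresponds to one row of a two-column table.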

Full payload

| id | https://openalex.org/W4397026421 |

|---|---|

| doi | https://doi.org/10.1109/access.2024.3402213 |

| ids.doi | https://doi.org/10.1109/access.2024.3402213 |

| ids.openalex | https://openalex.org/W4397026421 |

| fwci | 6.25639262 |

| type | article |

| title | Efficient Application of Deep Neural Networks for Identifying Small and Multiple Weed Patches Using Drone Images |

| biblio.issue | |

| biblio.volume | 12 |

| biblio.last_page | 71996 |

| biblio.first_page | 71982 |

| topics[0].id | https://openalex.org/T10616 |

| topics[0].field.id | https://openalex.org/fields/11 |

| topics[0].field.display_name | Agricultural and Biological Sciences |

| topics[0].score | 0.9951000213623047 |

| topics[0].domain.id | https://openalex.org/domains/1 |

| topics[0].domain.display_name | Life Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1110 |

| topics[0].subfield.display_name | Plant Science |

| topics[0].display_name | Smart Agriculture and AI |

| topics[1].id | https://openalex.org/T10111 |

| topics[1].field.id | https://openalex.org/fields/23 |

| topics[1].field.display_name | Environmental Science |

| topics[1].score | 0.9751999974250793 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/2303 |

| topics[1].subfield.display_name | Ecology |

| topics[1].display_name | Remote Sensing in Agriculture |

| topics[2].id | https://openalex.org/T12894 |

| topics[2].field.id | https://openalex.org/fields/11 |

| topics[2].field.display_name | Agricultural and Biological Sciences |

| topics[2].score | 0.9656999707221985 |

| topics[2].domain.id | https://openalex.org/domains/1 |

| topics[2].domain.display_name | Life Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/1110 |

| topics[2].subfield.display_name | Plant Science |

| topics[2].display_name | Date Palm Research Studies |

| is_xpac | False |

| apc_list.value | 1850 |

| apc_list.currency | USD |

| apc_list.value_usd | 1850 |

| apc_paid.value | 1850 |

| apc_paid.currency | USD |

| apc_paid.value_usd | 1850 |

| concepts[0].id | https://openalex.org/C2776196576 |

| concepts[0].level | 2 |

| concepts[0].score | 0.9218447208404541 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q196113 |

| concepts[0].display_name | Camouflage |

| concepts[1].id | https://openalex.org/C154945302 |

| concepts[1].level | 1 |

| concepts[1].score | 0.7460101842880249 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[1].display_name | Artificial intelligence |

| concepts[2].id | https://openalex.org/C41008148 |

| concepts[2].level | 0 |

| concepts[2].score | 0.7212290167808533 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[2].display_name | Computer science |

| concepts[3].id | https://openalex.org/C2775891814 |

| concepts[3].level | 2 |

| concepts[3].score | 0.6817640066146851 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q101879 |

| concepts[3].display_name | Weed |

| concepts[4].id | https://openalex.org/C59519942 |

| concepts[4].level | 2 |

| concepts[4].score | 0.6473618149757385 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q650665 |

| concepts[4].display_name | Drone |

| concepts[5].id | https://openalex.org/C108583219 |

| concepts[5].level | 2 |

| concepts[5].score | 0.5897717475891113 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q197536 |

| concepts[5].display_name | Deep learning |

| concepts[6].id | https://openalex.org/C160633673 |

| concepts[6].level | 2 |

| concepts[6].score | 0.5438123941421509 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q355198 |

| concepts[6].display_name | Pixel |

| concepts[7].id | https://openalex.org/C2776401178 |

| concepts[7].level | 2 |

| concepts[7].score | 0.5046399831771851 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q12050496 |

| concepts[7].display_name | Feature (linguistics) |

| concepts[8].id | https://openalex.org/C31972630 |

| concepts[8].level | 1 |

| concepts[8].score | 0.4984703063964844 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q844240 |

| concepts[8].display_name | Computer vision |

| concepts[9].id | https://openalex.org/C153180895 |

| concepts[9].level | 2 |

| concepts[9].score | 0.486126184463501 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q7148389 |

| concepts[9].display_name | Pattern recognition (psychology) |

| concepts[10].id | https://openalex.org/C52622490 |

| concepts[10].level | 2 |

| concepts[10].score | 0.47570711374282837 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q1026626 |

| concepts[10].display_name | Feature extraction |

| concepts[11].id | https://openalex.org/C18903297 |

| concepts[11].level | 1 |

| concepts[11].score | 0.0874631404876709 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q7150 |

| concepts[11].display_name | Ecology |

| concepts[12].id | https://openalex.org/C54355233 |

| concepts[12].level | 1 |

| concepts[12].score | 0.0 |

| concepts[12].wikidata | https://www.wikidata.org/wiki/Q7162 |

| concepts[12].display_name | Genetics |

| concepts[13].id | https://openalex.org/C41895202 |

| concepts[13].level | 1 |

| concepts[13].score | 0.0 |

| concepts[13].wikidata | https://www.wikidata.org/wiki/Q8162 |

| concepts[13].display_name | Linguistics |

| concepts[14].id | https://openalex.org/C86803240 |

| concepts[14].level | 0 |

| concepts[14].score | 0.0 |

| concepts[14].wikidata | https://www.wikidata.org/wiki/Q420 |

| concepts[14].display_name | Biology |

| concepts[15].id | https://openalex.org/C138885662 |

| concepts[15].level | 0 |

| concepts[15].score | 0.0 |

| concepts[15].wikidata | https://www.wikidata.org/wiki/Q5891 |

| concepts[15].display_name | Philosophy |

| keywords[0].id | https://openalex.org/keywords/camouflage |

| keywords[0].score | 0.9218447208404541 |

| keywords[0].display_name | Camouflage |

| keywords[1].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[1].score | 0.7460101842880249 |

| keywords[1].display_name | Artificial intelligence |

| keywords[2].id | https://openalex.org/keywords/computer-science |

| keywords[2].score | 0.7212290167808533 |

| keywords[2].display_name | Computer science |

| keywords[3].id | https://openalex.org/keywords/weed |

| keywords[3].score | 0.6817640066146851 |

| keywords[3].display_name | Weed |

| keywords[4].id | https://openalex.org/keywords/drone |

| keywords[4].score | 0.6473618149757385 |

| keywords[4].display_name | Drone |

| keywords[5].id | https://openalex.org/keywords/deep-learning |

| keywords[5].score | 0.5897717475891113 |

| keywords[5].display_name | Deep learning |

| keywords[6].id | https://openalex.org/keywords/pixel |

| keywords[6].score | 0.5438123941421509 |

| keywords[6].display_name | Pixel |

| keywords[7].id | https://openalex.org/keywords/feature |

| keywords[7].score | 0.5046399831771851 |

| keywords[7].display_name | Feature (linguistics) |

| keywords[8].id | https://openalex.org/keywords/computer-vision |

| keywords[8].score | 0.4984703063964844 |

| keywords[8].display_name | Computer vision |

| keywords[9].id | https://openalex.org/keywords/pattern-recognition |

| keywords[9].score | 0.486126184463501 |

| keywords[9].display_name | Pattern recognition (psychology) |

| keywords[10].id | https://openalex.org/keywords/feature-extraction |

| keywords[10].score | 0.47570711374282837 |

| keywords[10].display_name | Feature extraction |

| keywords[11].id | https://openalex.org/keywords/ecology |

| keywords[11].score | 0.0874631404876709 |

| keywords[11].display_name | Ecology |

| language | en |

| locations[0].id | doi:10.1109/access.2024.3402213 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S2485537415 |

| locations[0].source.issn | 2169-3536 |

| locations[0].source.type | journal |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | 2169-3536 |

| locations[0].source.is_core | True |

| locations[0].source.is_in_doaj | True |

| locations[0].source.display_name | IEEE Access |

| locations[0].source.host_organization | https://openalex.org/P4310319808 |

| locations[0].source.host_organization_name | Institute of Electrical and Electronics Engineers |

| locations[0].source.host_organization_lineage | https://openalex.org/P4310319808 |

| locations[0].source.host_organization_lineage_names | Institute of Electrical and Electronics Engineers |

| locations[0].license | |

| locations[0].pdf_url | https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf |

| locations[0].version | publishedVersion |

| locations[0].raw_type | journal-article |

| locations[0].license_id | |

| locations[0].is_accepted | True |

| locations[0].is_published | True |

| locations[0].raw_source_name | IEEE Access |

| locations[0].landing_page_url | https://doi.org/10.1109/access.2024.3402213 |

| locations[1].id | pmh:oai:doaj.org/article:555aada4c8ef4fec82751df7096a4dba |

| locations[1].is_oa | False |

| locations[1].source.id | https://openalex.org/S4306401280 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | False |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | DOAJ (DOAJ: Directory of Open Access Journals) |

| locations[1].source.host_organization | |

| locations[1].source.host_organization_name | |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | submittedVersion |

| locations[1].raw_type | article |

| locations[1].license_id | |

| locations[1].is_accepted | False |

| locations[1].is_published | False |

| locations[1].raw_source_name | IEEE Access, Vol 12, Pp 71982-71996 (2024) |

| locations[1].landing_page_url | https://doaj.org/article/555aada4c8ef4fec82751df7096a4dba |

| indexed_in | crossref, doaj |

| authorships[0].author.id | https://openalex.org/A5004238445 |

| authorships[0].author.orcid | https://orcid.org/0009-0008-6563-3550 |

| authorships[0].author.display_name | Vyomika Singh |

| authorships[0].countries | IN |

| authorships[0].affiliations[0].institution_ids | https://openalex.org/I154851008 |

| authorships[0].affiliations[0].raw_affiliation_string | Department of Electronics and Communication Engineering, Indian Institute of Technology, Roorkee, Roorkee, India |

| authorships[0].institutions[0].id | https://openalex.org/I154851008 |

| authorships[0].institutions[0].ror | https://ror.org/00582g326 |

| authorships[0].institutions[0].type | education |

| authorships[0].institutions[0].lineage | https://openalex.org/I154851008 |

| authorships[0].institutions[0].country_code | IN |

| authorships[0].institutions[0].display_name | Indian Institute of Technology Roorkee |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Vyomika Singh |

| authorships[0].is_corresponding | False |

| authorships[0].raw_affiliation_strings | Department of Electronics and Communication Engineering, Indian Institute of Technology, Roorkee, Roorkee, India |

| authorships[1].author.id | https://openalex.org/A5075489586 |

| authorships[1].author.orcid | https://orcid.org/0000-0002-0396-1494 |

| authorships[1].author.display_name | Dharmendra Singh |

| authorships[1].countries | IN |

| authorships[1].affiliations[0].institution_ids | https://openalex.org/I154851008 |

| authorships[1].affiliations[0].raw_affiliation_string | Department of Electronics and Communication Engineering, Indian Institute of Technology, Roorkee, Roorkee, India |

| authorships[1].institutions[0].id | https://openalex.org/I154851008 |

| authorships[1].institutions[0].ror | https://ror.org/00582g326 |

| authorships[1].institutions[0].type | education |

| authorships[1].institutions[0].lineage | https://openalex.org/I154851008 |

| authorships[1].institutions[0].country_code | IN |

| authorships[1].institutions[0].display_name | Indian Institute of Technology Roorkee |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Dharmendra Singh |

| authorships[1].is_corresponding | False |

| authorships[1].raw_affiliation_strings | Department of Electronics and Communication Engineering, Indian Institute of Technology, Roorkee, Roorkee, India |

| authorships[2].author.id | https://openalex.org/A5025400168 |

| authorships[2].author.orcid | https://orcid.org/0000-0003-2302-5828 |

| authorships[2].author.display_name | Harish Kumar |

| authorships[2].countries | SA |

| authorships[2].affiliations[0].institution_ids | https://openalex.org/I82952536 |

| authorships[2].affiliations[0].raw_affiliation_string | Department of Computer Science, College of Computer Science, King Khalid University, Abha, Saudi Arabia |

| authorships[2].institutions[0].id | https://openalex.org/I82952536 |

| authorships[2].institutions[0].ror | https://ror.org/052kwzs30 |

| authorships[2].institutions[0].type | education |

| authorships[2].institutions[0].lineage | https://openalex.org/I82952536 |

| authorships[2].institutions[0].country_code | SA |

| authorships[2].institutions[0].display_name | King Khalid University |

| authorships[2].author_position | last |

| authorships[2].raw_author_name | Harish Kumar |

| authorships[2].is_corresponding | False |

| authorships[2].raw_affiliation_strings | Department of Computer Science, College of Computer Science, King Khalid University, Abha, Saudi Arabia |

| has_content.pdf | True |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf |

| open_access.oa_status | gold |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Efficient Application of Deep Neural Networks for Identifying Small and Multiple Weed Patches Using Drone Images |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T03:46:38.306776 |

| primary_topic.id | https://openalex.org/T10616 |

| primary_topic.field.id | https://openalex.org/fields/11 |

| primary_topic.field.display_name | Agricultural and Biological Sciences |

| primary_topic.score | 0.9951000213623047 |

| primary_topic.domain.id | https://openalex.org/domains/1 |

| primary_topic.domain.display_name | Life Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1110 |

| primary_topic.subfield.display_name | Plant Science |

| primary_topic.display_name | Smart Agriculture and AI |

| related_works | https://openalex.org/W2978048274, https://openalex.org/W2348329006, https://openalex.org/W2379031960, https://openalex.org/W2376458710, https://openalex.org/W2231217681, https://openalex.org/W2914759737, https://openalex.org/W2984158411, https://openalex.org/W4253283976, https://openalex.org/W4231347762, https://openalex.org/W374553806 |

| cited_by_count | 8 |

| counts_by_year[0].year | 2025 |

| counts_by_year[0].cited_by_count | 4 |

| counts_by_year[1].year | 2024 |

| counts_by_year[1].cited_by_count | 4 |

| locations_count | 2 |

| best_oa_location.id | doi:10.1109/access.2024.3402213 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S2485537415 |

| best_oa_location.source.issn | 2169-3536 |

| best_oa_location.source.type | journal |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | 2169-3536 |

| best_oa_location.source.is_core | True |

| best_oa_location.source.is_in_doaj | True |

| best_oa_location.source.display_name | IEEE Access |

| best_oa_location.source.host_organization | https://openalex.org/P4310319808 |

| best_oa_location.source.host_organization_name | Institute of Electrical and Electronics Engineers |

| best_oa_location.source.host_organization_lineage | https://openalex.org/P4310319808 |

| best_oa_location.source.host_organization_lineage_names | Institute of Electrical and Electronics Engineers |

| best_oa_location.license | |

| best_oa_location.pdf_url | https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf |

| best_oa_location.version | publishedVersion |

| best_oa_location.raw_type | journal-article |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | True |

| best_oa_location.is_published | True |

| best_oa_location.raw_source_name | IEEE Access |

| best_oa_location.landing_page_url | https://doi.org/10.1109/access.2024.3402213 |

| primary_location.id | doi:10.1109/access.2024.3402213 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S2485537415 |

| primary_location.source.issn | 2169-3536 |

| primary_location.source.type | journal |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | 2169-3536 |

| primary_location.source.is_core | True |

| primary_location.source.is_in_doaj | True |

| primary_location.source.display_name | IEEE Access |

| primary_location.source.host_organization | https://openalex.org/P4310319808 |

| primary_location.source.host_organization_name | Institute of Electrical and Electronics Engineers |

| primary_location.source.host_organization_lineage | https://openalex.org/P4310319808 |

| primary_location.source.host_organization_lineage_names | Institute of Electrical and Electronics Engineers |

| primary_location.license | |

| primary_location.pdf_url | https://ieeexplore.ieee.org/ielx7/6287639/6514899/10533244.pdf |

| primary_location.version | publishedVersion |

| primary_location.raw_type | journal-article |

| primary_location.license_id | |

| primary_location.is_accepted | True |

| primary_location.is_published | True |

| primary_location.raw_source_name | IEEE Access |

| primary_location.landing_page_url | https://doi.org/10.1109/access.2024.3402213 |

| publication_date | 2024-01-01 |

| publication_year | 2024 |

| referenced_works | https://openalex.org/W2112918833, https://openalex.org/W2419314515, https://openalex.org/W2123436086, https://openalex.org/W2396886742, https://openalex.org/W2736285137, https://openalex.org/W4285323682, https://openalex.org/W1687112550, https://openalex.org/W2500751094, https://openalex.org/W2919115771, https://openalex.org/W3161891919, https://openalex.org/W6683994600, https://openalex.org/W3013149063, https://openalex.org/W3197814671, https://openalex.org/W3091225957, https://openalex.org/W4316506832, https://openalex.org/W2295598076, https://openalex.org/W3047513525, https://openalex.org/W2532964518, https://openalex.org/W2424454650, https://openalex.org/W4380149986, https://openalex.org/W4223586001, https://openalex.org/W3165807380, https://openalex.org/W6628973269, https://openalex.org/W4317513060, https://openalex.org/W2154144021, https://openalex.org/W2059432853, https://openalex.org/W4283362312, https://openalex.org/W2037239062, https://openalex.org/W2964271185, https://openalex.org/W1975533357, https://openalex.org/W1984127314, https://openalex.org/W2740333758, https://openalex.org/W2151103935, https://openalex.org/W2102593897, https://openalex.org/W2154741421, https://openalex.org/W2951422189, https://openalex.org/W3093012524, https://openalex.org/W2792643794, https://openalex.org/W2180748755, https://openalex.org/W2997604048, https://openalex.org/W6631190155, https://openalex.org/W2911964244, https://openalex.org/W2151162785, https://openalex.org/W30070282, https://openalex.org/W2963037989, https://openalex.org/W4293416723, https://openalex.org/W1522301498, https://openalex.org/W2163922914 |

| referenced_works_count | 48 |

| abstract_inverted_index.a | 40, 66, 93, 121, 124, 200, 243, 278, 307, 317 |

| abstract_inverted_index.AI | 212 |

| abstract_inverted_index.In | 96, 207 |

| abstract_inverted_index.It | 371 |

| abstract_inverted_index.To | 169 |

| abstract_inverted_index.We | 312 |

| abstract_inverted_index.an | 15, 31, 393 |

| abstract_inverted_index.as | 39, 92, 178, 242 |

| abstract_inverted_index.at | 142, 300 |

| abstract_inverted_index.by | 198, 247, 254 |

| abstract_inverted_index.in | 20, 65, 86, 102, 151, 191, 258, 332, 350, 368 |

| abstract_inverted_index.is | 13, 100, 135, 372, 378 |

| abstract_inverted_index.it | 72, 91, 163 |

| abstract_inverted_index.of | 23, 84, 105, 123, 211, 344, 355, 380, 384, 395 |

| abstract_inverted_index.on | 281 |

| abstract_inverted_index.to | 74, 120, 136, 161, 260, 268, 360 |

| abstract_inverted_index.we | 275 |

| abstract_inverted_index.The | 81, 132 |

| abstract_inverted_index.UAV | 11, 111, 186, 326 |

| abstract_inverted_index.aid | 190 |

| abstract_inverted_index.and | 8, 17, 27, 56, 76, 127, 158, 174, 193, 213, 226, 229, 238, 289, 296, 315, 338, 353, 364 |

| abstract_inverted_index.are | 176, 183 |

| abstract_inverted_index.can | 189 |

| abstract_inverted_index.era | 210 |

| abstract_inverted_index.its | 159, 390 |

| abstract_inverted_index.the | 21, 61, 87, 103, 110, 114, 143, 153, 165, 251, 301, 325, 335, 375 |

| abstract_inverted_index.via | 185, 328 |

| abstract_inverted_index.(2-4 | 148 |

| abstract_inverted_index.(DL) | 2 |

| abstract_inverted_index.DNNs | 216, 264 |

| abstract_inverted_index.Deep | 0, 203 |

| abstract_inverted_index.They | 182 |

| abstract_inverted_index.This | 347 |

| abstract_inverted_index.Weed | 48 |

| abstract_inverted_index.acts | 38 |

| abstract_inverted_index.bias | 337 |

| abstract_inverted_index.crop | 36, 45, 167 |

| abstract_inverted_index.data | 12 |

| abstract_inverted_index.deep | 282 |

| abstract_inverted_index.even | 116 |

| abstract_inverted_index.form | 104 |

| abstract_inverted_index.from | 79, 164, 306, 324, 389 |

| abstract_inverted_index.good | 266 |

| abstract_inverted_index.have | 276, 313 |

| abstract_inverted_index.into | 236 |

| abstract_inverted_index.maps | 292 |

| abstract_inverted_index.mind | 152 |

| abstract_inverted_index.more | 117 |

| abstract_inverted_index.rich | 201 |

| abstract_inverted_index.self | 320 |

| abstract_inverted_index.size | 147 |

| abstract_inverted_index.that | 34, 293, 319, 374 |

| abstract_inverted_index.this | 97, 171, 208 |

| abstract_inverted_index.turn | 333 |

| abstract_inverted_index.weed | 78, 85, 99, 139, 195, 298, 385 |

| abstract_inverted_index.with | 60, 358, 392 |

| abstract_inverted_index.These | 231 |

| abstract_inverted_index.Weed, | 30 |

| abstract_inverted_index.based | 3, 219, 280 |

| abstract_inverted_index.color | 173, 237, 387 |

| abstract_inverted_index.crop, | 63 |

| abstract_inverted_index.crop. | 80 |

| abstract_inverted_index.field | 22 |

| abstract_inverted_index.green | 310 |

| abstract_inverted_index.image | 25, 291 |

| abstract_inverted_index.level | 304 |

| abstract_inverted_index.patch | 196 |

| abstract_inverted_index.pixel | 146, 246, 303 |

| abstract_inverted_index.plant | 33 |

| abstract_inverted_index.small | 107, 192, 297, 382 |

| abstract_inverted_index.solve | 269 |

| abstract_inverted_index.their | 255 |

| abstract_inverted_index.using | 10 |

| abstract_inverted_index.w.r.t | 43, 342 |

| abstract_inverted_index.which | 188, 286, 331 |

| abstract_inverted_index.(DNN). | 206 |

| abstract_inverted_index.Neural | 204 |

| abstract_inverted_index.across | 109 |

| abstract_inverted_index.color) | 157 |

| abstract_inverted_index.deeper | 235 |

| abstract_inverted_index.detect | 77, 138 |

| abstract_inverted_index.field, | 89 |

| abstract_inverted_index.learns | 321 |

| abstract_inverted_index.making | 71, 113 |

| abstract_inverted_index.merged | 308 |

| abstract_inverted_index.modern | 214 |

| abstract_inverted_index.nature | 83 |

| abstract_inverted_index.pixel, | 248 |

| abstract_inverted_index.target | 5, 42, 129, 271, 362 |

| abstract_inverted_index.Network | 205 |

| abstract_inverted_index.achieve | 170 |

| abstract_inverted_index.capable | 379 |

| abstract_inverted_index.cm/px), | 149 |

| abstract_inverted_index.complex | 68 |

| abstract_inverted_index.digital | 24 |

| abstract_inverted_index.drones, | 215 |

| abstract_inverted_index.elastic | 220 |

| abstract_inverted_index.exhibit | 265 |

| abstract_inverted_index.feature | 180, 218, 240, 329, 336 |

| abstract_inverted_index.growth, | 37 |

| abstract_inverted_index.hinders | 35 |

| abstract_inverted_index.hotspot | 19 |

| abstract_inverted_index.imagery | 327, 345 |

| abstract_inverted_index.keeping | 150 |

| abstract_inverted_index.leading | 119 |

| abstract_inverted_index.mapping | 228 |

| abstract_inverted_index.merging | 52 |

| abstract_inverted_index.minimal | 302 |

| abstract_inverted_index.natural | 369 |

| abstract_inverted_index.patches | 108, 299, 383 |

| abstract_inverted_index.problem | 134 |

| abstract_inverted_index.provide | 217 |

| abstract_inverted_index.reduces | 334 |

| abstract_inverted_index.respect | 359 |

| abstract_inverted_index.results | 349 |

| abstract_inverted_index.similar | 57, 154 |

| abstract_inverted_index.target) | 141 |

| abstract_inverted_index.target. | 95 |

| abstract_inverted_index.targets | 253, 357 |

| abstract_inverted_index.texture | 175, 239 |

| abstract_inverted_index.unified | 244 |

| abstract_inverted_index.vision. | 29 |

| abstract_inverted_index.(merging | 156 |

| abstract_inverted_index.(similar | 386 |

| abstract_inverted_index.accuracy | 394 |

| abstract_inverted_index.behavior | 59, 155 |

| abstract_inverted_index.computer | 28 |

| abstract_inverted_index.enhanced | 340 |

| abstract_inverted_index.evolving | 16 |

| abstract_inverted_index.exhibits | 49, 339 |

| abstract_inverted_index.explored | 314 |

| abstract_inverted_index.features | 323 |

| abstract_inverted_index.green-on | 309 |

| abstract_inverted_index.habitat, | 70 |

| abstract_inverted_index.imagery, | 112 |

| abstract_inverted_index.improved | 224, 351 |

| abstract_inverted_index.learning | 1, 283 |

| abstract_inverted_index.multiple | 106, 194, 295 |

| abstract_inverted_index.networks | 233 |

| abstract_inverted_index.observed | 373 |

| abstract_inverted_index.possible | 305 |

| abstract_inverted_index.proposed | 277, 316, 376 |

| abstract_inverted_index.research | 133 |

| abstract_inverted_index.smallest | 144 |

| abstract_inverted_index.spectral | 58 |

| abstract_inverted_index.traverse | 234 |

| abstract_inverted_index.visually | 67 |

| abstract_inverted_index.accuracy, | 225 |

| abstract_inverted_index.behavior) | 388 |

| abstract_inverted_index.concealed | 82 |

| abstract_inverted_index.currently | 14 |

| abstract_inverted_index.detecting | 250, 381 |

| abstract_inverted_index.detection | 9, 115, 130, 272, 363 |

| abstract_inverted_index.difficult | 73 |

| abstract_inverted_index.expedited | 222 |

| abstract_inverted_index.exploited | 177 |

| abstract_inverted_index.extracted | 184 |

| abstract_inverted_index.generates | 287 |

| abstract_inverted_index.highlight | 294 |

| abstract_inverted_index.image(s), | 187 |

| abstract_inverted_index.important | 179 |

| abstract_inverted_index.localized | 290 |

| abstract_inverted_index.matching) | 55 |

| abstract_inverted_index.modelling | 285, 366 |

| abstract_inverted_index.optimized | 227 |

| abstract_inverted_index.problems, | 273 |

| abstract_inverted_index.promising | 18 |

| abstract_inverted_index.prospects | 341 |

| abstract_inverted_index.qualifies | 90 |

| abstract_inverted_index.resulting | 64 |

| abstract_inverted_index.scenario, | 98 |

| abstract_inverted_index.sugarcane | 44, 62, 88, 166 |

| abstract_inverted_index.therefore | 274 |

| abstract_inverted_index.(Saccharum | 46 |

| abstract_inverted_index.background | 391 |

| abstract_inverted_index.camouflage | 4, 41, 69, 94, 252, 270, 356 |

| abstract_inverted_index.capability | 53 |

| abstract_inverted_index.challenge, | 172 |

| abstract_inverted_index.classified | 288 |

| abstract_inverted_index.comparison | 259 |

| abstract_inverted_index.component, | 245 |

| abstract_inverted_index.detection, | 197 |

| abstract_inverted_index.detection. | 230 |

| abstract_inverted_index.difficulty | 160 |

| abstract_inverted_index.eventually | 249, 348 |

| abstract_inverted_index.exhaustive | 322 |

| abstract_inverted_index.processing | 26 |

| abstract_inverted_index.resolvable | 145 |

| abstract_inverted_index.subsequent | 128 |

| abstract_inverted_index.technique. | 131 |

| abstract_inverted_index.techniques | 367 |

| abstract_inverted_index.(background | 54 |

| abstract_inverted_index.(camouflage | 140 |

| abstract_inverted_index.approaches. | 263 |

| abstract_inverted_index.background. | 311, 346, 370 |

| abstract_inverted_index.color-based | 51 |

| abstract_inverted_index.distinguish | 75 |

| abstract_inverted_index.distributed | 101 |

| abstract_inverted_index.methodology | 279, 318, 377 |

| abstract_inverted_index.pixel-based | 125 |

| abstract_inverted_index.processing, | 223 |

| abstract_inverted_index.requirement | 122 |

| abstract_inverted_index.sensitivity | 343 |

| abstract_inverted_index.traditional | 261, 361 |

| abstract_inverted_index.undesirable | 32 |

| abstract_inverted_index.capabilities | 257, 267 |

| abstract_inverted_index.complicated, | 118 |

| abstract_inverted_index.implementing | 199 |

| abstract_inverted_index.localization | 352, 365 |

| abstract_inverted_index.successfully | 137 |

| abstract_inverted_index.(background). | 168 |

| abstract_inverted_index.90.5%. | 396 |

| abstract_inverted_index.Officinarum). | 47 |

| abstract_inverted_index.differentiate | 162 |

| abstract_inverted_index.feature-based | 202, 284 |

| abstract_inverted_index.localization, | 6 |

| abstract_inverted_index.revolutionary | 209 |

| abstract_inverted_index.self-learning | 256 |

| abstract_inverted_index.classification | 7, 126, 262, 354 |

| abstract_inverted_index.green-on-green | 50 |

| abstract_inverted_index.representations | 241 |

| abstract_inverted_index.transformations | 330 |

| abstract_inverted_index.representations. | 181 |

| abstract_inverted_index.transformations, | 221 |

| abstract_inverted_index.representation-based | 232 |

| cited_by_percentile_year.max | 98 |

| cited_by_percentile_year.min | 97 |

| countries_distinct_count | 2 |

| institutions_distinct_count | 3 |

| citation_normalized_percentile.value | 0.95964456 |

| citation_normalized_percentile.is_in_top_1_percent | False |

| citation_normalized_percentile.is_in_top_10_percent | True |
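
The `abstract_inverted_index.*` rows above store the abstract as a map from each word to the positions where it occurs. A minimal sketch of turning such a map back into running text is shown below. The function name `rebuild_abstract` is hypothetical, and the sample dictionary keeps only the first six positions from the table, so it reconstructs just the opening words.

```python
from typing import Dict, List


def rebuild_abstract(inverted_index: Dict[str, List[int]]) -> str:
    """Rebuild text from a word -> positions map by sorting words by position."""
    by_position: Dict[int, str] = {}
    for word, positions in inverted_index.items():
        for position in positions:
            by_position[position] = word
    return " ".join(by_position[i] for i in sorted(by_position))


# Truncated sample: only positions 0-5 are kept for illustration.
sample = {
    "Deep": [0],
    "learning": [1],
    "(DL)": [2],
    "based": [3],
    "camouflage": [4],
    "target": [5],
}
opening = rebuild_abstract(sample)
```

Because words are joined with single spaces, the original line breaks are not recoverable from this representation; punctuation survives only because it is attached to the stored tokens.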