Evaluating commit message generation

2022 · Open Access · DOI: https://doi.org/10.1145/3510455.3512790 · OA: W4224313199

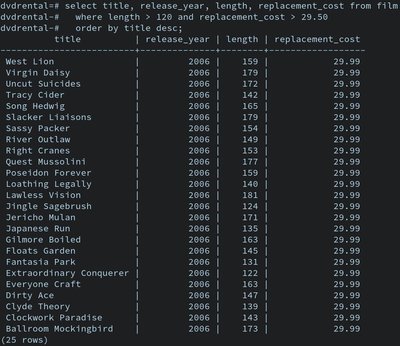

Commit messages play an important role in several software engineering tasks, such as program comprehension and understanding program evolution. However, programmers often neglect to write good commit messages. Hence, several Commit Message Generation (CMG) tools have been proposed. We observe that recent state-of-the-art CMG tools are evaluated with simple, easy-to-compute automated metrics such as BLEU4 or its variants. Advances in the field of Machine Translation (MT) have exposed several weaknesses of BLEU4 and its variants, and the MT literature has proposed several other metrics for evaluating Natural Language Generation (NLG) tools. In this work, we discuss the suitability of various MT metrics for the CMG task. Based on the insights from our experiments, we propose a new variant designed specifically for evaluating the CMG task. We re-evaluate the state-of-the-art CMG tools on our new metric. We believe that our work fills an important gap in the understanding of evaluation metrics for CMG research.