Heptapod: Language Modeling on Visual Signals

2025 · Open Access · DOI: https://doi.org/10.48550/arxiv.2510.06673

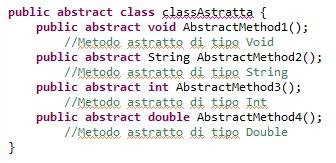

We introduce Heptapod, an image autoregressive model that adheres to the foundational principles of language modeling. Heptapod employs **causal attention**, **eliminates reliance on classifier-free guidance (CFG)**, and **eschews the trend toward semantic tokenizers**. Our key innovation is *next 2D distribution prediction*: a causal Transformer, paired with a reconstruction-focused visual tokenizer, learns to predict the distribution over the entire 2D spatial grid of the image at each timestep. This learning objective unifies the sequential modeling of the autoregressive framework with the holistic self-supervised learning of masked autoencoding, enabling the model to capture comprehensive image semantics through generative training. On the ImageNet generation benchmark, Heptapod achieves an FID of 2.70, significantly outperforming previous causal autoregressive approaches. We hope our work inspires a principled rethinking of language modeling on visual signals and beyond.
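The abstract describes *next 2D distribution prediction* only at a high level. The Python sketch below illustrates one possible reading of the objective; it is not the authors' implementation. Every specific here is an assumption rather than something stated in the abstract: the tokenizer has already turned an image into an H×W grid of discrete token ids flattened in raster order; `predict_grid` is a hypothetical stand-in for the causal Transformer that, given only the observed prefix, returns one row of logits per grid position; the loss is plain cross-entropy; and because the abstract does not say which positions are supervised at each step, the default supervises only the not-yet-observed positions (`include_observed=True` supervises the whole grid).

```python
import math
from typing import Callable, List, Sequence

# Hypothetical stand-in for a causal Transformer: given the observed prefix of
# token ids (raster order), return one row of vocabulary logits per grid position.
GridPredictor = Callable[[Sequence[int]], List[List[float]]]


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over a single vocabulary row."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def next_2d_distribution_loss(
    tokens: Sequence[int],
    predict_grid: GridPredictor,
    include_observed: bool = False,
) -> float:
    """Toy 'next 2D distribution prediction' loss (illustrative assumption).

    tokens: flattened H*W grid of discrete token ids in raster order.
    At timestep t the predictor sees only tokens[:t] (causal conditioning)
    and outputs a distribution for every grid position. By default only the
    unobserved positions t..N-1 are scored; include_observed=True scores all N.
    """
    n = len(tokens)
    total, count = 0.0, 0
    for t in range(n):  # t = number of tokens already observed
        grid_logits = predict_grid(tokens[:t])
        assert len(grid_logits) == n, "expected one logit row per grid position"
        start = 0 if include_observed else t
        for pos in range(start, n):
            # Negative log-likelihood of the true token at this grid position.
            total -= log_softmax(grid_logits[pos])[tokens[pos]]
            count += 1
    return total / count


if __name__ == "__main__":
    # Toy 2x2 grid, 4-token vocabulary, and a predictor that knows nothing:
    # every position gets uniform logits, so the average loss is log(4).
    def uniform_predictor(prefix: Sequence[int]) -> List[List[float]]:
        return [[0.0] * 4 for _ in range(4)]

    print(f"{next_2d_distribution_loss([0, 3, 1, 2], uniform_predictor):.4f}")  # prints 1.3863
```

Scoring only `pos = t` in the inner loop would recover ordinary next-token prediction; scoring every remaining position at every step is how this sketch interprets the abstract's link to masked autoencoding, since each causal step then has to reconstruct the unseen part of the grid. A real causal Transformer would compute all timesteps in one masked forward pass rather than N separate calls.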

- Type: preprint
- Language: en
- Landing Page: http://arxiv.org/abs/2510.06673 (PDF: https://arxiv.org/pdf/2510.06673)
- OA Status: green
- OpenAlex ID: https://openalex.org/W4415316600

- Publication date: 2025-10-08
- Authors: Yongxin Zhu, Jiawei Chen, Yuanzhe Chen, Zhuo Chen, Dongya Jia, Jian Cong, Xiaobin Zhuang, Yu‐Ping Wang, Yuxuan Wang
- Cited by (OpenAlex): 0

Full OpenAlex payload

| Field | Value |
|---|---|
| id | https://openalex.org/W4415316600 |
| doi | https://doi.org/10.48550/arxiv.2510.06673 |
| ids.doi | https://doi.org/10.48550/arxiv.2510.06673 |
| ids.openalex | https://openalex.org/W4415316600 |
| fwci | |
| type | preprint |
| title | Heptapod: Language Modeling on Visual Signals |
| biblio.issue | |
| biblio.volume | |
| biblio.last_page | |
| biblio.first_page | |
| topics[0].id | https://openalex.org/T11714 |
| topics[0].field.id | https://openalex.org/fields/17 |
| topics[0].field.display_name | Computer Science |
| topics[0].score | 0.10899999737739563 |
| topics[0].domain.id | https://openalex.org/domains/3 |
| topics[0].domain.display_name | Physical Sciences |
| topics[0].subfield.id | https://openalex.org/subfields/1707 |
| topics[0].subfield.display_name | Computer Vision and Pattern Recognition |
| topics[0].display_name | Multimodal Machine Learning Applications |
| is_xpac | False |
| apc_list | |
| apc_paid | |
| language | en |
| locations[0].id | pmh:oai:arXiv.org:2510.06673 |
| locations[0].is_oa | True |
| locations[0].source.id | https://openalex.org/S4306400194 |
| locations[0].source.issn | |
| locations[0].source.type | repository |
| locations[0].source.is_oa | True |
| locations[0].source.issn_l | |
| locations[0].source.is_core | False |
| locations[0].source.is_in_doaj | False |
| locations[0].source.display_name | arXiv (Cornell University) |
| locations[0].source.host_organization | https://openalex.org/I205783295 |
| locations[0].source.host_organization_name | Cornell University |
| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[0].license | cc-by |
| locations[0].pdf_url | https://arxiv.org/pdf/2510.06673 |
| locations[0].version | submittedVersion |
| locations[0].raw_type | text |
| locations[0].license_id | https://openalex.org/licenses/cc-by |
| locations[0].is_accepted | False |
| locations[0].is_published | False |
| locations[0].raw_source_name | |
| locations[0].landing_page_url | http://arxiv.org/abs/2510.06673 |
| locations[1].id | doi:10.48550/arxiv.2510.06673 |
| locations[1].is_oa | True |
| locations[1].source.id | https://openalex.org/S4306400194 |
| locations[1].source.issn | |
| locations[1].source.type | repository |
| locations[1].source.is_oa | True |
| locations[1].source.issn_l | |
| locations[1].source.is_core | False |
| locations[1].source.is_in_doaj | False |
| locations[1].source.display_name | arXiv (Cornell University) |
| locations[1].source.host_organization | https://openalex.org/I205783295 |
| locations[1].source.host_organization_name | Cornell University |
| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[1].license | cc-by |
| locations[1].pdf_url | |
| locations[1].version | |
| locations[1].raw_type | article |
| locations[1].license_id | https://openalex.org/licenses/cc-by |
| locations[1].is_accepted | False |
| locations[1].is_published | |
| locations[1].raw_source_name | |
| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2510.06673 |
| indexed_in | arxiv, datacite |
| authorships[0].author.id | https://openalex.org/A5086260915 |
| authorships[0].author.orcid | https://orcid.org/0000-0002-1813-1792 |
| authorships[0].author.display_name | Yongxin Zhu |
| authorships[0].author_position | first |
| authorships[0].raw_author_name | Zhu, Yongxin |
| authorships[0].is_corresponding | False |
| authorships[1].author.id | https://openalex.org/A5100362822 |
| authorships[1].author.orcid | https://orcid.org/0000-0002-8195-1582 |
| authorships[1].author.display_name | Jiawei Chen |
| authorships[1].author_position | middle |
| authorships[1].raw_author_name | Chen, Jiawei |
| authorships[1].is_corresponding | False |
| authorships[2].author.id | https://openalex.org/A5055175414 |
| authorships[2].author.orcid | |
| authorships[2].author.display_name | Yuanzhe Chen |
| authorships[2].author_position | middle |
| authorships[2].raw_author_name | Chen, Yuanzhe |
| authorships[2].is_corresponding | False |
| authorships[3].author.id | https://openalex.org/A5100345062 |
| authorships[3].author.orcid | https://orcid.org/0000-0001-9991-6892 |
| authorships[3].author.display_name | Zhuo Chen |
| authorships[3].author_position | middle |
| authorships[3].raw_author_name | Chen, Zhuo |
| authorships[3].is_corresponding | False |
| authorships[4].author.id | https://openalex.org/A5060687079 |
| authorships[4].author.orcid | https://orcid.org/0000-0002-6307-8580 |
| authorships[4].author.display_name | Dongya Jia |
| authorships[4].author_position | middle |
| authorships[4].raw_author_name | Jia, Dongya |
| authorships[4].is_corresponding | False |
| authorships[5].author.id | https://openalex.org/A5110372538 |
| authorships[5].author.orcid | https://orcid.org/0000-0003-3775-6883 |
| authorships[5].author.display_name | Jian Cong |
| authorships[5].author_position | middle |
| authorships[5].raw_author_name | Cong, Jian |
| authorships[5].is_corresponding | False |
| authorships[6].author.id | https://openalex.org/A5001776951 |
| authorships[6].author.orcid | https://orcid.org/0000-0002-0285-6705 |
| authorships[6].author.display_name | Xiaobin Zhuang |
| authorships[6].author_position | middle |
| authorships[6].raw_author_name | Zhuang, Xiaobin |
| authorships[6].is_corresponding | False |
| authorships[7].author.id | https://openalex.org/A5100339106 |
| authorships[7].author.orcid | https://orcid.org/0000-0001-9340-5864 |
| authorships[7].author.display_name | Yu‐Ping Wang |
| authorships[7].author_position | middle |
| authorships[7].raw_author_name | Wang, Yuping |
| authorships[7].is_corresponding | False |
| authorships[8].author.id | https://openalex.org/A5100375979 |
| authorships[8].author.orcid | https://orcid.org/0000-0002-1649-6974 |
| authorships[8].author.display_name | Yuxuan Wang |
| authorships[8].author_position | last |
| authorships[8].raw_author_name | Wang, Yuxuan |
| authorships[8].is_corresponding | False |
| has_content.pdf | True |
| has_content.grobid_xml | False |
| is_paratext | False |
| open_access.is_oa | True |
| open_access.oa_url | https://arxiv.org/pdf/2510.06673 |
| open_access.oa_status | green |
| open_access.any_repository_has_fulltext | False |
| created_date | 2025-10-18T00:00:00 |
| display_name | Heptapod: Language Modeling on Visual Signals |
| has_fulltext | False |
| is_retracted | False |
| updated_date | 2025-11-06T06:51:31.235846 |
| primary_topic.id | https://openalex.org/T11714 |
| primary_topic.field.id | https://openalex.org/fields/17 |
| primary_topic.field.display_name | Computer Science |
| primary_topic.score | 0.10899999737739563 |
| primary_topic.domain.id | https://openalex.org/domains/3 |
| primary_topic.domain.display_name | Physical Sciences |
| primary_topic.subfield.id | https://openalex.org/subfields/1707 |
| primary_topic.subfield.display_name | Computer Vision and Pattern Recognition |
| primary_topic.display_name | Multimodal Machine Learning Applications |
| cited_by_count | 0 |
| locations_count | 2 |
| best_oa_location.id | pmh:oai:arXiv.org:2510.06673 |
| best_oa_location.is_oa | True |
| best_oa_location.source.id | https://openalex.org/S4306400194 |
| best_oa_location.source.issn | |
| best_oa_location.source.type | repository |
| best_oa_location.source.is_oa | True |
| best_oa_location.source.issn_l | |
| best_oa_location.source.is_core | False |
| best_oa_location.source.is_in_doaj | False |
| best_oa_location.source.display_name | arXiv (Cornell University) |
| best_oa_location.source.host_organization | https://openalex.org/I205783295 |
| best_oa_location.source.host_organization_name | Cornell University |
| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| best_oa_location.license | cc-by |
| best_oa_location.pdf_url | https://arxiv.org/pdf/2510.06673 |
| best_oa_location.version | submittedVersion |
| best_oa_location.raw_type | text |
| best_oa_location.license_id | https://openalex.org/licenses/cc-by |
| best_oa_location.is_accepted | False |
| best_oa_location.is_published | False |
| best_oa_location.raw_source_name | |
| best_oa_location.landing_page_url | http://arxiv.org/abs/2510.06673 |
| primary_location.id | pmh:oai:arXiv.org:2510.06673 |
| primary_location.is_oa | True |
| primary_location.source.id | https://openalex.org/S4306400194 |
| primary_location.source.issn | |
| primary_location.source.type | repository |
| primary_location.source.is_oa | True |
| primary_location.source.issn_l | |
| primary_location.source.is_core | False |
| primary_location.source.is_in_doaj | False |
| primary_location.source.display_name | arXiv (Cornell University) |
| primary_location.source.host_organization | https://openalex.org/I205783295 |
| primary_location.source.host_organization_name | Cornell University |
| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| primary_location.license | cc-by |
| primary_location.pdf_url | https://arxiv.org/pdf/2510.06673 |
| primary_location.version | submittedVersion |
| primary_location.raw_type | text |
| primary_location.license_id | https://openalex.org/licenses/cc-by |
| primary_location.is_accepted | False |
| primary_location.is_published | False |
| primary_location.raw_source_name | |
| primary_location.landing_page_url | http://arxiv.org/abs/2510.06673 |
| publication_date | 2025-10-08 |
| publication_year | 2025 |
| referenced_works_count | 0 |
| cited_by_percentile_year | |
| countries_distinct_count | 0 |
| institutions_distinct_count | 9 |
| citation_normalized_percentile | |