Linearized Kernel Dictionary Learning

2016 · Open Access · DOI: https://doi.org/10.1109/jstsp.2016.2555241 · OA: W1927762903

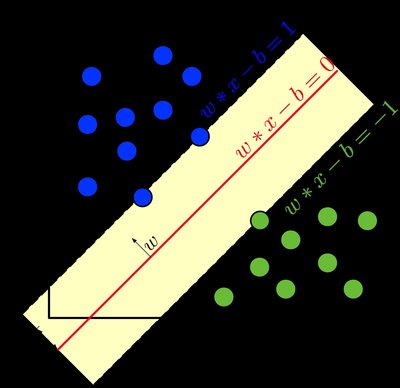

In this paper we present a new approach to incorporating kernels into dictionary learning. The recently introduced kernel K-SVD algorithm (KKSVD) improves classification performance relative to its linear counterpart, K-SVD. However, this algorithm requires storing and handling a very large kernel matrix, which leads to high computational cost and limits its use to setups with a small number of training examples. We address these problems by combining two ideas: first, we approximate the kernel matrix from a cleverly sampled subset of its columns using the Nyström method; second, as we wish to avoid using this matrix altogether, we decompose it by SVD to form new "virtual samples," on which any linear dictionary learning can be employed. Our method, termed "Linearized Kernel Dictionary Learning" (LKDL), can be seamlessly applied as a pre-processing stage on top of any efficient off-the-shelf dictionary learning scheme, effectively "kernelizing" it. We demonstrate the effectiveness of our method on several supervised and unsupervised classification tasks and show the efficiency of the proposed scheme, its easy integration, and its performance-boosting properties.
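The two ideas above (Nyström approximation of the kernel matrix, then a decomposition that yields "virtual samples") can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. Several choices are assumptions made only for illustration: the Gaussian (RBF) kernel and its `gamma` value, uniform random landmark selection (the abstract mentions a more careful sampling scheme that is not detailed here), samples stored as rows, and the hypothetical function names `rbf_kernel` and `virtual_samples`. With `C = K(X, landmarks)` and `W = K(landmarks, landmarks)`, the Nyström approximation is `K ≈ C W⁺ Cᵀ`; factoring `W = V Σ Vᵀ` gives features `F = C V Σ^(-1/2)` whose inner products `F Fᵀ` reproduce that approximation without forming the full kernel matrix.

```python
import numpy as np


def rbf_kernel(A, B, gamma=0.5):
    """Gaussian (RBF) kernel between the rows of A and the rows of B."""
    sq_dists = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq_dists, 0.0))


def virtual_samples(X, n_landmarks, rank, gamma=0.5, seed=0):
    """Map each row of X to a `rank`-dimensional virtual sample.

    Assumption: landmarks are drawn uniformly at random; other sampling
    schemes can be substituted by changing how `idx` is chosen.
    """
    rng = np.random.default_rng(seed)
    idx = rng.choice(X.shape[0], size=n_landmarks, replace=False)
    landmarks = X[idx]

    C = rbf_kernel(X, landmarks, gamma)          # N x c block of kernel columns
    W = rbf_kernel(landmarks, landmarks, gamma)  # c x c landmark kernel

    # W is symmetric positive semidefinite, so its SVD coincides with its
    # eigendecomposition; keep the `rank` largest eigenpairs.
    evals, evecs = np.linalg.eigh(W)
    order = np.argsort(evals)[::-1][:rank]
    evals, evecs = evals[order], evecs[:, order]

    # Drop numerically negligible directions before inverting.
    keep = evals > 1e-10 * evals[0]
    evals, evecs = evals[keep], evecs[:, keep]

    # F @ F.T equals C @ pinv(W_rank) @ C.T, the Nystrom approximation of K.
    F = (C @ evecs) / np.sqrt(evals)
    return F, idx
```

The rows of `F` can then be passed to any linear dictionary learning routine in place of the original samples, which is the pre-processing role the abstract describes. Memory use scales with `N x c` rather than `N x N`, where `c` is the number of landmarks.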