Essentially No Barriers in Neural Network Energy Landscape


Felix Draxler, Kambis Veschgini, Manfred Salmhofer, Fred A. Hamprecht

2018 · Open Access · DOI: https://doi.org/10.48550/arxiv.1803.00885 · OA: W2793333878


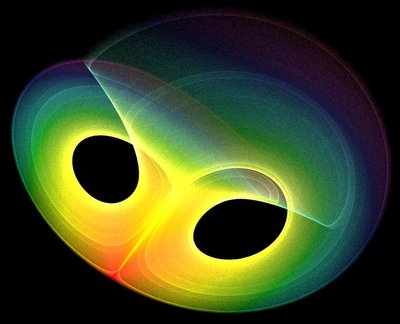

Training neural networks involves finding minima of a high-dimensional non-convex loss function, yet little is known about the structure of this energy landscape. Starting from linear interpolations and relaxing them, we construct continuous paths between minima of recent neural network architectures on CIFAR10 and CIFAR100. Surprisingly, the paths are essentially flat in both the training and test landscapes. This implies that neural networks have enough capacity for structural changes, or that these changes are small between minima. Also, each minimum has at least one vanishing Hessian eigenvalue in addition to those resulting from trivial invariances.
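The abstract reduces the method to a single clause: paths are obtained by relaxing linear interpolations. The Python sketch below illustrates that idea on a hypothetical toy problem. Every part of it is an assumption and does not reproduce the paper's setup. A two-dimensional `loss`, whose minima fill the ellipse x² + 2y² = 1, stands in for a network's training loss. A plain nudged elastic band (NEB) relaxation stands in for the authors' path-refinement procedure. The names `loss`, `grad`, `linear_path`, `relax_neb`, `barrier` and `hessian_eigenvalues`, and the parameters `k`, `lr`, `steps` and `samples`, are all hypothetical.

In this toy, the straight line between the two chosen minima peaks at a loss of 0.25 at its midpoint. After relaxation, the images sit in the valley of minima and the remaining barrier is orders of magnitude smaller. The finite-difference Hessian at an endpoint has one eigenvalue near 8 and one near 0, and the near-zero eigenvalue points along the valley. This shows why a minimum lying on a connected set of equally low loss must have a vanishing Hessian eigenvalue in that direction.

```python
import math

# Hypothetical toy loss standing in for a network's training loss (assumption, not from the paper):
# every point on the ellipse x^2 + 2*y^2 = 1 is a global minimum with loss 0.
def loss(p):
    x, y = p
    g = x * x + 2 * y * y - 1
    return g * g

def grad(p):
    # Analytic gradient of loss = g^2, i.e. 2*g*(2x, 4y).
    x, y = p
    g = x * x + 2 * y * y - 1
    return (4 * g * x, 8 * g * y)

def linear_path(a, b, n):
    # n + 1 evenly spaced images on the straight segment from a to b, endpoints included.
    path = []
    for i in range(n + 1):
        t = i / n
        path.append((a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1])))
    return path

def relax_neb(path, k=1.0, lr=0.05, steps=2000):
    # Plain nudged elastic band (NEB), a stand-in for the paper's procedure: endpoints stay
    # fixed; each interior image moves under the loss gradient with its tangential part
    # removed, plus a spring force acting only along the tangent to keep images evenly spaced.
    path = [tuple(p) for p in path]
    for _ in range(steps):
        new = list(path)
        for i in range(1, len(path) - 1):
            prev, cur, nxt = path[i - 1], path[i], path[i + 1]
            # Tangent estimate: unit vector from the previous to the next image.
            tx, ty = nxt[0] - prev[0], nxt[1] - prev[1]
            norm = math.hypot(tx, ty)
            tx, ty = tx / norm, ty / norm
            gx, gy = grad(cur)
            along = gx * tx + gy * ty
            spring = k * (math.dist(nxt, cur) - math.dist(cur, prev))
            fx = -(gx - along * tx) + spring * tx
            fy = -(gy - along * ty) + spring * ty
            new[i] = (cur[0] + lr * fx, cur[1] + lr * fy)
        path = new
    return path

def barrier(path, samples=20):
    # Highest loss on the piecewise-linear path through the images, minus the higher endpoint loss.
    peak = max(
        loss((p[0] + s / samples * (q[0] - p[0]), p[1] + s / samples * (q[1] - p[1])))
        for p, q in zip(path, path[1:])
        for s in range(samples + 1)
    )
    return peak - max(loss(path[0]), loss(path[-1]))

def hessian_eigenvalues(p, h=1e-4):
    # Central finite-difference Hessian of the 2-D loss, then the closed-form
    # eigenvalues of the symmetric 2x2 matrix, smallest first.
    x, y = p
    f0 = loss((x, y))
    fxx = (loss((x + h, y)) - 2 * f0 + loss((x - h, y))) / h**2
    fyy = (loss((x, y + h)) - 2 * f0 + loss((x, y - h))) / h**2
    fxy = (loss((x + h, y + h)) - loss((x + h, y - h))
           - loss((x - h, y + h)) + loss((x - h, y - h))) / (4 * h**2)
    mean = (fxx + fyy) / 2
    radius = math.hypot((fxx - fyy) / 2, fxy)
    return mean - radius, mean + radius

a, b = (1.0, 0.0), (0.0, 1.0 / math.sqrt(2.0))  # two minima on the ellipse
straight = linear_path(a, b, 10)
relaxed = relax_neb(straight)
print(f"barrier on the straight line: {barrier(straight):.4f}")
print(f"barrier on the relaxed path:  {barrier(relaxed):.2e}")
print("Hessian eigenvalues at a:", hessian_eigenvalues(a))
```

The spring acts only along the path tangent, and the loss gradient acts only perpendicular to it. This "nudging" keeps the images from sliding into the endpoint minima while they settle into the valley. The barrier is measured on the piecewise-linear path between images rather than at the images alone, because a path is only flat if the segments connecting its images are flat as well.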
