GANSpace: Discovering Interpretable GAN Controls

Related Concepts

Generative grammar

Computer science

Principal component analysis

Pattern recognition (psychology)

Artificial intelligence

Feature (linguistics)

Adversarial system

Feature vector

Layer (electronics)

Space (punctuation)

Simple (philosophy)

Principal (computer security)

Image (mathematics)

Machine learning

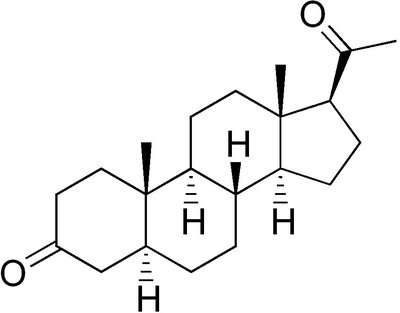

Chemistry

Philosophy

Organic chemistry

Operating system

Epistemology

Linguistics

Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, Sylvain Paris

2020 · Open Access · DOI: https://doi.org/10.48550/arxiv.2004.02546 · OA: W3014852036

This paper describes a simple technique to analyze Generative Adversarial Networks (GANs) and create interpretable controls for image synthesis, such as change of viewpoint, aging, lighting, and time of day. We identify important latent directions based on Principal Components Analysis (PCA) applied either in latent space or feature space. Then, we show that a large number of interpretable controls can be defined by layer-wise perturbation along the principal directions. Moreover, we show that BigGAN can be controlled with layer-wise inputs in a StyleGAN-like manner. We show results on different GANs trained on various datasets, and demonstrate good qualitative matches to edit directions found through earlier supervised approaches.
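The latent-space variant of the idea can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the random linear `mapping` stands in for a real generator's mapping network, and the function names `principal_directions` and `edit_layerwise`, the layer count, and the edit scale are all hypothetical choices made for illustration. The sketch samples latent vectors, finds principal directions with an SVD, and then shifts the latent along one direction for a chosen range of layers only.

```python
import numpy as np


def principal_directions(latents, k):
    """Return the mean, the top-k unit-norm principal directions, and their std devs."""
    mean = latents.mean(axis=0)
    centered = latents - mean
    # Rows of vt are the principal directions, ordered by decreasing variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    std = s[:k] / np.sqrt(len(latents) - 1)
    return mean, vt[:k], std


def edit_layerwise(w, direction, sigma, scale, num_layers, layers):
    """Feed w to every layer, shifting only the chosen layers along `direction`."""
    per_layer = np.tile(w, (num_layers, 1))
    per_layer[list(layers)] += scale * sigma * direction
    return per_layer


rng = np.random.default_rng(0)

# Assumption: a fixed random linear map stands in for a trained mapping network.
mapping = rng.normal(size=(16, 16))
z = rng.normal(size=(2000, 16))
w_samples = z @ mapping

mean, dirs, std = principal_directions(w_samples, k=3)

# Move one sample two standard deviations along the first direction,
# but only for layers 2 to 4 of a hypothetical 8-layer generator.
w = w_samples[0]
edited = edit_layerwise(w, dirs[0], std[0], scale=2.0, num_layers=8, layers=range(2, 5))
```

In a real setting, each row of `edited` would be passed to the corresponding generator layer; restricting the shift to a subset of layers is what limits an edit to, say, coarse structure or fine appearance.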
