Instability in clinical risk stratification models using deep learning

Related Concepts

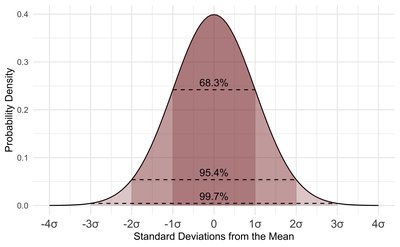

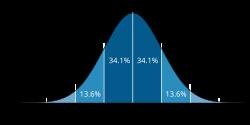

Randomness

Deep learning

Stability (learning theory)

Artificial intelligence

Computer science

Machine learning

Training set

Risk stratification

Set (abstract data type)

Instability

Health records

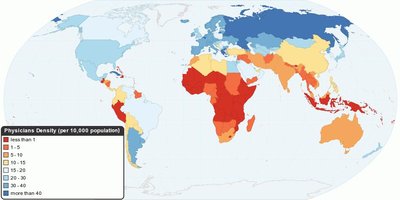

Health care

Statistics

Mathematics

Medicine

Cardiology

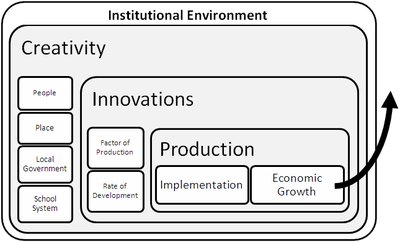

Economic growth

Physics

Programming language

Economics

Mechanics

Daniel Lopez-Martinez, Alex Yakubovich, Martin Seneviratne, Adam D. Lelkes, Akshit Tyagi, Jonas Kemp, Ethan Steinberg, N. Lance Downing, Ron Li, Keith Morse, Nigam H. Shah, Mingjun Chen

2022 · Open Access · DOI: https://doi.org/10.48550/arxiv.2211.10828 · OA: W4309799379

While it is well known in the ML community that deep learning models suffer from instability, the consequences for healthcare deployments are under-characterised. We study the stability of different model architectures trained on electronic health records, using a set of outpatient prediction tasks as a case study. We show that repeated training runs of the same deep learning model on the same training data can produce significantly different outcomes at the patient level, even though global performance metrics remain stable. We propose two stability metrics for measuring the effect of randomness in model training, as well as mitigation strategies for improving model stability.
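
The abstract does not define the two proposed stability metrics, so the following is only a minimal sketch of how patient-level instability across repeated training runs might be quantified. The function names `per_patient_spread` and `flip_rate`, the 0.5 decision threshold, and the toy scores are all assumptions made for illustration; they are not the authors' metrics or data.

```python
from statistics import pstdev


def per_patient_spread(run_scores):
    """Standard deviation of each patient's risk score across runs.

    run_scores[r][p] is the predicted risk for patient p from training run r.
    (Hypothetical helper, not taken from the paper.)
    """
    per_patient = zip(*run_scores)  # regroup scores by patient
    return [pstdev(scores) for scores in per_patient]


def flip_rate(run_scores, threshold=0.5):
    """Fraction of patients whose thresholded label differs between runs.

    The threshold is an assumed operating point, not one from the paper.
    """
    per_patient = list(zip(*run_scores))
    flipped = sum(
        1
        for scores in per_patient
        if len({score >= threshold for score in scores}) > 1
    )
    return flipped / len(per_patient)


# Toy scores for 4 patients from 3 runs that differ only in random seed.
runs = [
    [0.10, 0.48, 0.90, 0.55],
    [0.12, 0.53, 0.88, 0.45],
    [0.09, 0.51, 0.91, 0.60],
]

spread = per_patient_spread(runs)
rate = flip_rate(runs, threshold=0.5)
print("per-patient spread:", spread)
print("flip rate:", rate)
```

In this toy data the average score per run barely moves, yet two of the four patients cross the threshold depending on the run, which is the kind of patient-level disagreement the abstract describes alongside stable global metrics.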
