Just a Few Glances: Open-Set Visual Perception with Image Prompt Paradigm

2024 · Open Access · DOI: https://doi.org/10.48550/arxiv.2412.10719

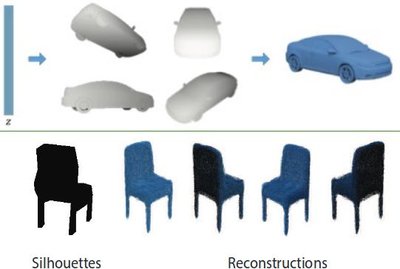

To break through the limitations of models pre-trained on fixed categories, Open-Set Object Detection (OSOD) and Open-Set Segmentation (OSS) have attracted a surge of interest from researchers. Inspired by large language models, mainstream OSOD and OSS methods generally use text as a prompt and achieve remarkable performance. Following the SAM paradigm, some researchers use visual prompts, such as points, boxes, and masks that cover detection or segmentation targets. Although these two prompt paradigms exhibit excellent performance, they also reveal inherent limitations. On the one hand, it is difficult to accurately describe the characteristics of a specialized category using a textual description. On the other hand, existing visual prompt paradigms rely heavily on multi-round human interaction, which hinders their application to fully automated pipelines. To address these issues, we propose a novel prompt paradigm for OSOD and OSS: the **Image Prompt Paradigm**. This new prompt paradigm makes it possible to detect or segment specialized categories without multi-round human intervention. To achieve this goal, the proposed image prompt paradigm uses just a few image instances as prompts, and we propose a novel framework named **MI Grounding** for it. In this framework, high-quality image prompts are automatically encoded, selected, and fused, achieving single-stage, non-interactive inference. We conduct extensive experiments on public datasets, showing that MI Grounding achieves competitive performance on OSOD and OSS benchmarks compared with text prompt paradigm methods and visual prompt paradigm methods. Moreover, MI Grounding can greatly outperform existing methods on our constructed specialized ADR50K dataset.

Related Topics

- Type: preprint

- Language: en

- Landing Page: http://arxiv.org/abs/2412.10719, https://arxiv.org/pdf/2412.10719

- OA Status: green

- Related Works: 10

- OpenAlex ID: https://openalex.org/W4405468534

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4405468534 (canonical identifier for this work in OpenAlex)

- DOI: https://doi.org/10.48550/arxiv.2412.10719 (Digital Object Identifier)

- Title: Just a Few Glances: Open-Set Visual Perception with Image Prompt Paradigm (work title)

- Type: preprint (OpenAlex work type)

- Language: en (primary language)

- Publication year: 2024 (year of publication)

- Publication date: 2024-12-14 (full publication date if available)

- Authors: Jinrong Zhang, Penghui Wang, Chun‐Xiao Liu, Wei Liu, Dian Jin, Qiong Zhang, Erli Meng, Zhengnan Hu (list of authors in order)

- Landing page: https://arxiv.org/abs/2412.10719 (publisher landing page)

- PDF URL: https://arxiv.org/pdf/2412.10719 (direct link to full text PDF)

- Open access: Yes (whether a free full text is available)

- OA status: green (open access status per OpenAlex)

- OA URL: https://arxiv.org/pdf/2412.10719 (direct OA link when available)

- Concepts: Perception, Set (abstract data type), Image (mathematics), Computer science, Cognitive psychology, Psychology, Computer vision, Neuroscience, Programming language (top concepts (fields/topics) attached by OpenAlex)

- Cited by: 0 (total citation count in OpenAlex)

- Related works (count): 10 (other works algorithmically related by OpenAlex)

Full payload
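
The payload below stores the abstract as `abstract_inverted_index.*` rows: each row maps one token to the zero-based positions where it occurs in the abstract. As a minimal sketch of how such an index can be turned back into running text, the following Python places every token at its positions and joins them in order. The function name `rebuild_abstract` is hypothetical, and the sample dictionary is only a hand-copied subset of the rows in this payload (positions 0 to 10), not the full index.

```python
def rebuild_abstract(inverted_index: dict[str, list[int]]) -> str:
    """Rebuild text from a token -> positions mapping (hypothetical helper)."""
    by_position: dict[int, str] = {}
    for token, positions in inverted_index.items():
        for pos in positions:
            by_position[pos] = token
    # Join the tokens in ascending position order.
    return " ".join(by_position[pos] for pos in sorted(by_position))


# Subset of the rows in this payload, restricted to positions 0..10.
sample = {
    "To": [0],
    "break": [1],
    "through": [2],
    "the": [3],
    "limitations": [4],
    "of": [5],
    "pre-training": [6],
    "models": [7],
    "on": [8],
    "fixed": [9],
    "categories,": [10],
}

print(rebuild_abstract(sample))
```

Because tokens keep their original punctuation and capitalization (for example `categories,`), joining on single spaces is enough to recover the abstract as it appears at the top of this page.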

| id | https://openalex.org/W4405468534 |

|---|---|

| doi | https://doi.org/10.48550/arxiv.2412.10719 |

| ids.doi | https://doi.org/10.48550/arxiv.2412.10719 |

| ids.openalex | https://openalex.org/W4405468534 |

| fwci | |

| type | preprint |

| title | Just a Few Glances: Open-Set Visual Perception with Image Prompt Paradigm |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T11605 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.98580002784729 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1707 |

| topics[0].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[0].display_name | Visual Attention and Saliency Detection |

| topics[1].id | https://openalex.org/T10627 |

| topics[1].field.id | https://openalex.org/fields/17 |

| topics[1].field.display_name | Computer Science |

| topics[1].score | 0.9829000234603882 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/1707 |

| topics[1].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[1].display_name | Advanced Image and Video Retrieval Techniques |

| topics[2].id | https://openalex.org/T13114 |

| topics[2].field.id | https://openalex.org/fields/22 |

| topics[2].field.display_name | Engineering |

| topics[2].score | 0.9815000295639038 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/2214 |

| topics[2].subfield.display_name | Media Technology |

| topics[2].display_name | Image Processing Techniques and Applications |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C26760741 |

| concepts[0].level | 2 |

| concepts[0].score | 0.692821204662323 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q160402 |

| concepts[0].display_name | Perception |

| concepts[1].id | https://openalex.org/C177264268 |

| concepts[1].level | 2 |

| concepts[1].score | 0.6092901229858398 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q1514741 |

| concepts[1].display_name | Set (abstract data type) |

| concepts[2].id | https://openalex.org/C115961682 |

| concepts[2].level | 2 |

| concepts[2].score | 0.5646311640739441 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q860623 |

| concepts[2].display_name | Image (mathematics) |

| concepts[3].id | https://openalex.org/C41008148 |

| concepts[3].level | 0 |

| concepts[3].score | 0.4199643135070801 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[3].display_name | Computer science |

| concepts[4].id | https://openalex.org/C180747234 |

| concepts[4].level | 1 |

| concepts[4].score | 0.4150051176548004 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q23373 |

| concepts[4].display_name | Cognitive psychology |

| concepts[5].id | https://openalex.org/C15744967 |

| concepts[5].level | 0 |

| concepts[5].score | 0.4119730293750763 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q9418 |

| concepts[5].display_name | Psychology |

| concepts[6].id | https://openalex.org/C31972630 |

| concepts[6].level | 1 |

| concepts[6].score | 0.3372005820274353 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q844240 |

| concepts[6].display_name | Computer vision |

| concepts[7].id | https://openalex.org/C169760540 |

| concepts[7].level | 1 |

| concepts[7].score | 0.10942471027374268 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q207011 |

| concepts[7].display_name | Neuroscience |

| concepts[8].id | https://openalex.org/C199360897 |

| concepts[8].level | 1 |

| concepts[8].score | 0.0 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q9143 |

| concepts[8].display_name | Programming language |

| keywords[0].id | https://openalex.org/keywords/perception |

| keywords[0].score | 0.692821204662323 |

| keywords[0].display_name | Perception |

| keywords[1].id | https://openalex.org/keywords/set |

| keywords[1].score | 0.6092901229858398 |

| keywords[1].display_name | Set (abstract data type) |

| keywords[2].id | https://openalex.org/keywords/image |

| keywords[2].score | 0.5646311640739441 |

| keywords[2].display_name | Image (mathematics) |

| keywords[3].id | https://openalex.org/keywords/computer-science |

| keywords[3].score | 0.4199643135070801 |

| keywords[3].display_name | Computer science |

| keywords[4].id | https://openalex.org/keywords/cognitive-psychology |

| keywords[4].score | 0.4150051176548004 |

| keywords[4].display_name | Cognitive psychology |

| keywords[5].id | https://openalex.org/keywords/psychology |

| keywords[5].score | 0.4119730293750763 |

| keywords[5].display_name | Psychology |

| keywords[6].id | https://openalex.org/keywords/computer-vision |

| keywords[6].score | 0.3372005820274353 |

| keywords[6].display_name | Computer vision |

| keywords[7].id | https://openalex.org/keywords/neuroscience |

| keywords[7].score | 0.10942471027374268 |

| keywords[7].display_name | Neuroscience |

| language | en |

| locations[0].id | pmh:oai:arXiv.org:2412.10719 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400194 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | arXiv (Cornell University) |

| locations[0].source.host_organization | https://openalex.org/I205783295 |

| locations[0].source.host_organization_name | Cornell University |

| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[0].license | |

| locations[0].pdf_url | https://arxiv.org/pdf/2412.10719 |

| locations[0].version | submittedVersion |

| locations[0].raw_type | text |

| locations[0].license_id | |

| locations[0].is_accepted | False |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | http://arxiv.org/abs/2412.10719 |

| locations[1].id | doi:10.48550/arxiv.2412.10719 |

| locations[1].is_oa | True |

| locations[1].source.id | https://openalex.org/S4306400194 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | True |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | arXiv (Cornell University) |

| locations[1].source.host_organization | https://openalex.org/I205783295 |

| locations[1].source.host_organization_name | Cornell University |

| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | |

| locations[1].raw_type | article |

| locations[1].license_id | |

| locations[1].is_accepted | False |

| locations[1].is_published | |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2412.10719 |

| indexed_in | arxiv, datacite |

| authorships[0].author.id | https://openalex.org/A5089295072 |

| authorships[0].author.orcid | https://orcid.org/0000-0003-0774-8479 |

| authorships[0].author.display_name | Jinrong Zhang |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Zhang, Jinrong |

| authorships[0].is_corresponding | False |

| authorships[1].author.id | https://openalex.org/A5109012690 |

| authorships[1].author.orcid | |

| authorships[1].author.display_name | Penghui Wang |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Wang, Penghui |

| authorships[1].is_corresponding | False |

| authorships[2].author.id | https://openalex.org/A5054470348 |

| authorships[2].author.orcid | |

| authorships[2].author.display_name | Chun‐Xiao Liu |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Liu, Chunxiao |

| authorships[2].is_corresponding | False |

| authorships[3].author.id | https://openalex.org/A5100431969 |

| authorships[3].author.orcid | https://orcid.org/0000-0003-2860-1783 |

| authorships[3].author.display_name | Wei Liu |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Liu, Wei |

| authorships[3].is_corresponding | False |

| authorships[4].author.id | https://openalex.org/A5102751739 |

| authorships[4].author.orcid | https://orcid.org/0000-0003-2560-0179 |

| authorships[4].author.display_name | Dian Jin |

| authorships[4].author_position | middle |

| authorships[4].raw_author_name | Jin, Dian |

| authorships[4].is_corresponding | False |

| authorships[5].author.id | https://openalex.org/A5100407288 |

| authorships[5].author.orcid | https://orcid.org/0000-0002-5360-3951 |

| authorships[5].author.display_name | Qiong Zhang |

| authorships[5].author_position | middle |

| authorships[5].raw_author_name | Zhang, Qiong |

| authorships[5].is_corresponding | False |

| authorships[6].author.id | https://openalex.org/A5044390981 |

| authorships[6].author.orcid | |

| authorships[6].author.display_name | Erli Meng |

| authorships[6].author_position | middle |

| authorships[6].raw_author_name | Meng, Erli |

| authorships[6].is_corresponding | False |

| authorships[7].author.id | https://openalex.org/A5101359991 |

| authorships[7].author.orcid | |

| authorships[7].author.display_name | Zhengnan Hu |

| authorships[7].author_position | last |

| authorships[7].raw_author_name | Hu, Zhengnan |

| authorships[7].is_corresponding | False |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://arxiv.org/pdf/2412.10719 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2024-12-18T00:00:00 |

| display_name | Just a Few Glances: Open-Set Visual Perception with Image Prompt Paradigm |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T06:51:31.235846 |

| primary_topic.id | https://openalex.org/T11605 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.98580002784729 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1707 |

| primary_topic.subfield.display_name | Computer Vision and Pattern Recognition |

| primary_topic.display_name | Visual Attention and Saliency Detection |

| related_works | https://openalex.org/W2628861693, https://openalex.org/W3203087560, https://openalex.org/W4361279463, https://openalex.org/W4232814730, https://openalex.org/W2975814312, https://openalex.org/W4387697615, https://openalex.org/W2568121504, https://openalex.org/W2483836897, https://openalex.org/W2049668268, https://openalex.org/W2087303720 |

| cited_by_count | 0 |

| locations_count | 2 |

| best_oa_location.id | pmh:oai:arXiv.org:2412.10719 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400194 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | arXiv (Cornell University) |

| best_oa_location.source.host_organization | https://openalex.org/I205783295 |

| best_oa_location.source.host_organization_name | Cornell University |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| best_oa_location.license | |

| best_oa_location.pdf_url | https://arxiv.org/pdf/2412.10719 |

| best_oa_location.version | submittedVersion |

| best_oa_location.raw_type | text |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | http://arxiv.org/abs/2412.10719 |

| primary_location.id | pmh:oai:arXiv.org:2412.10719 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400194 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | arXiv (Cornell University) |

| primary_location.source.host_organization | https://openalex.org/I205783295 |

| primary_location.source.host_organization_name | Cornell University |

| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| primary_location.license | |

| primary_location.pdf_url | https://arxiv.org/pdf/2412.10719 |

| primary_location.version | submittedVersion |

| primary_location.raw_type | text |

| primary_location.license_id | |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | http://arxiv.org/abs/2412.10719 |

| publication_date | 2024-12-14 |

| publication_year | 2024 |

| referenced_works_count | 0 |

| abstract_inverted_index.a | 21, 41, 126, 166, 175 |

| abstract_inverted_index.In | 185 |

| abstract_inverted_index.MI | 212, 234 |

| abstract_inverted_index.On | 79, 96 |

| abstract_inverted_index.To | 0, 119, 155 |

| abstract_inverted_index.We | 203 |

| abstract_inverted_index.as | 40, 55, 170 |

| abstract_inverted_index.by | 28 |

| abstract_inverted_index.in | 130 |

| abstract_inverted_index.is | 84 |

| abstract_inverted_index.it | 83 |

| abstract_inverted_index.of | 5, 23, 90 |

| abstract_inverted_index.on | 8, 106, 207, 217, 241 |

| abstract_inverted_index.or | 63, 147 |

| abstract_inverted_index.to | 86, 115, 145, 223 |

| abstract_inverted_index.we | 124, 173 |

| abstract_inverted_index.OSS | 35, 220 |

| abstract_inverted_index.SAM | 47 |

| abstract_inverted_index.and | 15, 34, 58, 132, 172, 195, 200, 219, 228 |

| abstract_inverted_index.are | 191 |

| abstract_inverted_index.can | 236 |

| abstract_inverted_index.few | 167 |

| abstract_inverted_index.for | 181 |

| abstract_inverted_index.is, | 135 |

| abstract_inverted_index.new | 141, 183 |

| abstract_inverted_index.one | 81 |

| abstract_inverted_index.our | 242 |

| abstract_inverted_index.the | 3, 80, 97, 121, 159, 198 |

| abstract_inverted_index.two | 68 |

| abstract_inverted_index.use | 51 |

| abstract_inverted_index.OSOD | 33, 131, 218 |

| abstract_inverted_index.OSS, | 133 |

| abstract_inverted_index.This | 139 |

| abstract_inverted_index.also | 75 |

| abstract_inverted_index.from | 25 |

| abstract_inverted_index.have | 19 |

| abstract_inverted_index.just | 165 |

| abstract_inverted_index.rely | 105 |

| abstract_inverted_index.some | 49 |

| abstract_inverted_index.such | 54 |

| abstract_inverted_index.text | 39, 224 |

| abstract_inverted_index.that | 60, 134, 211 |

| abstract_inverted_index.them | 112 |

| abstract_inverted_index.they | 74 |

| abstract_inverted_index.this | 157, 182, 186 |

| abstract_inverted_index.uses | 164 |

| abstract_inverted_index.(OSS) | 18 |

| abstract_inverted_index.above | 122 |

| abstract_inverted_index.being | 113 |

| abstract_inverted_index.brand | 140 |

| abstract_inverted_index.break | 1 |

| abstract_inverted_index.cover | 61 |

| abstract_inverted_index.fixed | 9 |

| abstract_inverted_index.fully | 116 |

| abstract_inverted_index.goal, | 158 |

| abstract_inverted_index.hand, | 82, 99 |

| abstract_inverted_index.human | 108, 153 |

| abstract_inverted_index.image | 161, 168, 189 |

| abstract_inverted_index.large | 29 |

| abstract_inverted_index.masks | 59 |

| abstract_inverted_index.named | 178 |

| abstract_inverted_index.novel | 127, 176 |

| abstract_inverted_index.other | 98 |

| abstract_inverted_index.surge | 22 |

| abstract_inverted_index.these | 67 |

| abstract_inverted_index.using | 93 |

| abstract_inverted_index.which | 110 |

| abstract_inverted_index.(OSOD) | 14 |

| abstract_inverted_index.ADR50K | 245 |

| abstract_inverted_index.Object | 12 |

| abstract_inverted_index.Prompt | 137 |

| abstract_inverted_index.boxes, | 57 |

| abstract_inverted_index.detect | 146 |

| abstract_inverted_index.fused, | 196 |

| abstract_inverted_index.method | 240 |

| abstract_inverted_index.models | 7 |

| abstract_inverted_index.prompt | 69, 102, 128, 142, 162, 225, 230 |

| abstract_inverted_index.public | 208 |

| abstract_inverted_index.reveal | 76 |

| abstract_inverted_index.visual | 52, 101, 229 |

| abstract_inverted_index.Despite | 66 |

| abstract_inverted_index.achieve | 156 |

| abstract_inverted_index.address | 120 |

| abstract_inverted_index.applied | 114 |

| abstract_inverted_index.conduct | 204 |

| abstract_inverted_index.enables | 144 |

| abstract_inverted_index.exhibit | 71 |

| abstract_inverted_index.greatly | 237 |

| abstract_inverted_index.heavily | 104 |

| abstract_inverted_index.hinders | 111 |

| abstract_inverted_index.issues, | 123 |

| abstract_inverted_index.methods | 36, 227 |

| abstract_inverted_index.models, | 31 |

| abstract_inverted_index.points, | 56 |

| abstract_inverted_index.prompt, | 42 |

| abstract_inverted_index.prompts | 190 |

| abstract_inverted_index.propose | 125, 174 |

| abstract_inverted_index.segment | 148 |

| abstract_inverted_index.showing | 210 |

| abstract_inverted_index.textual | 94 |

| abstract_inverted_index.through | 2 |

| abstract_inverted_index.utilize | 38 |

| abstract_inverted_index.without | 151 |

| abstract_inverted_index.Inspired | 27 |

| abstract_inverted_index.Open-Set | 11, 16 |

| abstract_inverted_index.achieves | 214 |

| abstract_inverted_index.category | 92 |

| abstract_inverted_index.compared | 222 |

| abstract_inverted_index.dataset. | 246 |

| abstract_inverted_index.describe | 88 |

| abstract_inverted_index.encoded, | 193 |

| abstract_inverted_index.existing | 100, 239 |

| abstract_inverted_index.inherent | 77 |

| abstract_inverted_index.interest | 24 |

| abstract_inverted_index.language | 30 |

| abstract_inverted_index.methods. | 232 |

| abstract_inverted_index.paradigm | 129, 143, 163, 226, 231 |

| abstract_inverted_index.prompts, | 53, 171 |

| abstract_inverted_index.proposed | 160 |

| abstract_inverted_index.selected | 194 |

| abstract_inverted_index.targets. | 65 |

| abstract_inverted_index.Detection | 13 |

| abstract_inverted_index.Following | 46 |

| abstract_inverted_index.Grounding | 213, 235 |

| abstract_inverted_index.Moreover, | 233 |

| abstract_inverted_index.achieving | 43, 197 |

| abstract_inverted_index.attracted | 20 |

| abstract_inverted_index.automated | 117 |

| abstract_inverted_index.datasets, | 209 |

| abstract_inverted_index.detection | 62 |

| abstract_inverted_index.difficult | 85 |

| abstract_inverted_index.excellent | 72 |

| abstract_inverted_index.extensive | 205 |

| abstract_inverted_index.framework | 177 |

| abstract_inverted_index.generally | 37 |

| abstract_inverted_index.instances | 169 |

| abstract_inverted_index.paradigm, | 48 |

| abstract_inverted_index.paradigm. | 184 |

| abstract_inverted_index.paradigms | 70, 103 |

| abstract_inverted_index.pipeline. | 118 |

| abstract_inverted_index.Grounding} | 180 |

| abstract_inverted_index.Paradigm}. | 138 |

| abstract_inverted_index.\textbf{MI | 179 |

| abstract_inverted_index.accurately | 87 |

| abstract_inverted_index.benchmarks | 221 |

| abstract_inverted_index.categories | 150 |

| abstract_inverted_index.framework, | 187 |

| abstract_inverted_index.inference. | 202 |

| abstract_inverted_index.mainstream | 32 |

| abstract_inverted_index.outperform | 238 |

| abstract_inverted_index.remarkable | 44 |

| abstract_inverted_index.categories, | 10 |

| abstract_inverted_index.competitive | 215 |

| abstract_inverted_index.constructed | 243 |

| abstract_inverted_index.experiments | 206 |

| abstract_inverted_index.limitations | 4 |

| abstract_inverted_index.multi-round | 107, 152 |

| abstract_inverted_index.performance | 216 |

| abstract_inverted_index.researchers | 50 |

| abstract_inverted_index.specialized | 91, 149, 244 |

| abstract_inverted_index.Segmentation | 17 |

| abstract_inverted_index.description. | 95 |

| abstract_inverted_index.high-quality | 188 |

| abstract_inverted_index.interaction, | 109 |

| abstract_inverted_index.limitations. | 78 |

| abstract_inverted_index.performance, | 73 |

| abstract_inverted_index.performance. | 45 |

| abstract_inverted_index.pre-training | 6 |

| abstract_inverted_index.researchers. | 26 |

| abstract_inverted_index.segmentation | 64 |

| abstract_inverted_index.single-stage | 199 |

| abstract_inverted_index.\textbf{Image | 136 |

| abstract_inverted_index.automatically | 192 |

| abstract_inverted_index.intervention. | 154 |

| abstract_inverted_index.characteristics | 89 |

| abstract_inverted_index.non-interactive | 201 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 8 |

| citation_normalized_percentile |