KV-Cache Compression via Attention Pattern Pruning for Latency-Constrained LLMs

2025 · Open Access · DOI: https://doi.org/10.5281/zenodo.17817217

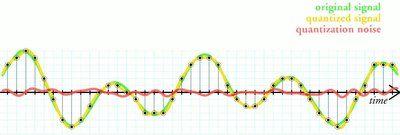

Large Language Models (LLMs) have achieved remarkable success across diverse natural language processing tasks. However, their autoregressive inference, particularly with long input sequences, is significantly bottlenecked by the Key-Value (KV) cache. The KV cache, which avoids redundant computation in the self-attention mechanism, grows linearly with sequence length, leading to substantial memory consumption and bandwidth demands. This overhead translates directly into increased inference latency, which is particularly critical for real-time, latency-constrained applications. Existing KV-cache compression techniques often resort to quantization or simple heuristic-based token eviction, which can either sacrifice accuracy through lossy compression or discard vital context. This paper proposes a novel KV-cache compression method rooted in attention pattern pruning. By dynamically analyzing the self-attention weights across transformer layers and heads, our approach identifies and prunes redundant or less important key-value pairs that contribute minimally to subsequent token generation. This pruning strategy is designed to retain only the most semantically relevant context in the cache, thereby reducing its memory footprint and alleviating bandwidth limitations without significant degradation in model performance. We demonstrate that this method leads to a substantial reduction in inference latency and memory usage, enabling more efficient and responsive deployment of LLMs, especially in scenarios that demand low-latency outputs.
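The abstract describes the idea only at a high level, and this record carries no full text, so the following is a minimal sketch of what attention-based KV pruning could look like, not the paper's algorithm. The scoring rule (attention mass summed over heads and queries), the `budget` and `recent` parameters, and the function names `select_kv_to_keep` and `prune_kv` are all assumptions made for illustration.

```python
import numpy as np

def select_kv_to_keep(attn, budget, recent=0):
    """Return sorted indices of cached positions to keep.

    attn:   array of shape (heads, queries, keys) holding softmax attention weights.
    budget: total number of key-value pairs to retain (hypothetical parameter).
    recent: number of most recent positions that are always kept (hypothetical).
    """
    num_keys = attn.shape[-1]
    budget = min(budget, num_keys)
    recent = min(recent, budget)
    # Importance of each cached position: the attention mass it received,
    # summed over heads and over query positions (an assumed scoring rule).
    scores = attn.sum(axis=(0, 1))
    # Always keep the most recent positions.
    keep = set(range(num_keys - recent, num_keys))
    # Fill the remaining budget with the highest-scoring older positions.
    older = np.argsort(-scores[: num_keys - recent], kind="stable")
    keep.update(int(i) for i in older[: budget - recent])
    return np.array(sorted(keep), dtype=np.int64)

def prune_kv(keys, values, keep):
    """Drop pruned positions from (heads, seq, dim) key and value arrays."""
    return keys[:, keep, :], values[:, keep, :]

# Toy example: 1 head, 2 queries, 5 cached positions.
attn = np.array([[[0.5, 0.1, 0.1, 0.2, 0.1],
                  [0.4, 0.05, 0.05, 0.3, 0.2]]])
keep = select_kv_to_keep(attn, budget=3, recent=1)
print(keep.tolist())  # [0, 3, 4]
```

In this toy case positions 0 and 3 receive the most attention and position 4 is kept as the most recent token, so the cache shrinks from five entries to three. A real system would also have to decide whether to score per head or per layer and how often to re-evaluate; the abstract does not say which choices the paper makes.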

Related Topics

- Type: article

- Landing Page: https://doi.org/10.5281/zenodo.17817217

- OA Status: green

- OpenAlex ID: https://openalex.org/W7108609355

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W7108609355

- DOI: https://doi.org/10.5281/zenodo.17817217

- Title: KV-Cache Compression via Attention Pattern Pruning for Latency-Constrained LLMs

- Type: article

- Publication year: 2025

- Publication date: 2025-12-04

- Authors: Revista, Zen; IA, 10

- Landing page: https://doi.org/10.5281/zenodo.17817217

- Open access: Yes

- OA status: green

- OA URL: https://doi.org/10.5281/zenodo.17817217

- Concepts: Computer science, Cache, Quantization (signal processing), Lossy compression, Inference, Memory footprint, Data compression, Transformer, Latency (audio), Artificial intelligence, Pruning, Security token, Language model, Bandwidth (computing), Autoregressive model, Context (archaeology), Real-time computing, Algorithm, Overhead (engineering), Compression ratio, Lossless compression, Recall, Computation, Memory bandwidth, Context management, Machine learning, Arithmetic coding, Reduction (mathematics)

- Cited by: 0

Full payload

| Field | Value |

|---|---|

| id | https://openalex.org/W7108609355 |

| doi | https://doi.org/10.5281/zenodo.17817217 |

| ids.doi | https://doi.org/10.5281/zenodo.17817217 |

| ids.openalex | https://openalex.org/W7108609355 |

| fwci | 0.0 |

| type | article |

| title | KV-Cache Compression via Attention Pattern Pruning for Latency-Constrained LLMs |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T10181 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.2714828848838806 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1702 |

| topics[0].subfield.display_name | Artificial Intelligence |

| topics[0].display_name | Natural Language Processing Techniques |

| topics[1].id | https://openalex.org/T10028 |

| topics[1].field.id | https://openalex.org/fields/17 |

| topics[1].field.display_name | Computer Science |

| topics[1].score | 0.16633792221546173 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/1702 |

| topics[1].subfield.display_name | Artificial Intelligence |

| topics[1].display_name | Topic Modeling |

| topics[2].id | https://openalex.org/T14347 |

| topics[2].field.id | https://openalex.org/fields/17 |

| topics[2].field.display_name | Computer Science |

| topics[2].score | 0.07438474148511887 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/1710 |

| topics[2].subfield.display_name | Information Systems |

| topics[2].display_name | Big Data and Digital Economy |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C41008148 |

| concepts[0].level | 0 |

| concepts[0].score | 0.7909771800041199 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[0].display_name | Computer science |

| concepts[1].id | https://openalex.org/C115537543 |

| concepts[1].level | 2 |

| concepts[1].score | 0.499234676361084 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q165596 |

| concepts[1].display_name | Cache |

| concepts[2].id | https://openalex.org/C28855332 |

| concepts[2].level | 2 |

| concepts[2].score | 0.48441416025161743 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q198099 |

| concepts[2].display_name | Quantization (signal processing) |

| concepts[3].id | https://openalex.org/C165021410 |

| concepts[3].level | 2 |

| concepts[3].score | 0.4607539474964142 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q55564 |

| concepts[3].display_name | Lossy compression |

| concepts[4].id | https://openalex.org/C2776214188 |

| concepts[4].level | 2 |

| concepts[4].score | 0.444996178150177 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q408386 |

| concepts[4].display_name | Inference |

| concepts[5].id | https://openalex.org/C74912251 |

| concepts[5].level | 2 |

| concepts[5].score | 0.42210114002227783 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q6815727 |

| concepts[5].display_name | Memory footprint |

| concepts[6].id | https://openalex.org/C78548338 |

| concepts[6].level | 2 |

| concepts[6].score | 0.41121795773506165 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q2493 |

| concepts[6].display_name | Data compression |

| concepts[7].id | https://openalex.org/C66322947 |

| concepts[7].level | 3 |

| concepts[7].score | 0.4105891287326813 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q11658 |

| concepts[7].display_name | Transformer |

| concepts[8].id | https://openalex.org/C82876162 |

| concepts[8].level | 2 |

| concepts[8].score | 0.37780696153640747 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q17096504 |

| concepts[8].display_name | Latency (audio) |

| concepts[9].id | https://openalex.org/C154945302 |

| concepts[9].level | 1 |

| concepts[9].score | 0.37083956599235535 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[9].display_name | Artificial intelligence |

| concepts[10].id | https://openalex.org/C108010975 |

| concepts[10].level | 2 |

| concepts[10].score | 0.3463610112667084 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q500094 |

| concepts[10].display_name | Pruning |

| concepts[11].id | https://openalex.org/C48145219 |

| concepts[11].level | 2 |

| concepts[11].score | 0.3408026397228241 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q1335365 |

| concepts[11].display_name | Security token |

| concepts[12].id | https://openalex.org/C137293760 |

| concepts[12].level | 2 |

| concepts[12].score | 0.33625078201293945 |

| concepts[12].wikidata | https://www.wikidata.org/wiki/Q3621696 |

| concepts[12].display_name | Language model |

| concepts[13].id | https://openalex.org/C2776257435 |

| concepts[13].level | 2 |

| concepts[13].score | 0.32025864720344543 |

| concepts[13].wikidata | https://www.wikidata.org/wiki/Q1576430 |

| concepts[13].display_name | Bandwidth (computing) |

| concepts[14].id | https://openalex.org/C159877910 |

| concepts[14].level | 2 |

| concepts[14].score | 0.31896623969078064 |

| concepts[14].wikidata | https://www.wikidata.org/wiki/Q2202883 |

| concepts[14].display_name | Autoregressive model |

| concepts[15].id | https://openalex.org/C2779343474 |

| concepts[15].level | 2 |

| concepts[15].score | 0.3140846788883209 |

| concepts[15].wikidata | https://www.wikidata.org/wiki/Q3109175 |

| concepts[15].display_name | Context (archaeology) |

| concepts[16].id | https://openalex.org/C79403827 |

| concepts[16].level | 1 |

| concepts[16].score | 0.3118276596069336 |

| concepts[16].wikidata | https://www.wikidata.org/wiki/Q3988 |

| concepts[16].display_name | Real-time computing |

| concepts[17].id | https://openalex.org/C11413529 |

| concepts[17].level | 1 |

| concepts[17].score | 0.30393800139427185 |

| concepts[17].wikidata | https://www.wikidata.org/wiki/Q8366 |

| concepts[17].display_name | Algorithm |

| concepts[18].id | https://openalex.org/C2779960059 |

| concepts[18].level | 2 |

| concepts[18].score | 0.30319663882255554 |

| concepts[18].wikidata | https://www.wikidata.org/wiki/Q7113681 |

| concepts[18].display_name | Overhead (engineering) |

| concepts[19].id | https://openalex.org/C25797200 |

| concepts[19].level | 3 |

| concepts[19].score | 0.2997397780418396 |

| concepts[19].wikidata | https://www.wikidata.org/wiki/Q828137 |

| concepts[19].display_name | Compression ratio |

| concepts[20].id | https://openalex.org/C81081738 |

| concepts[20].level | 3 |

| concepts[20].score | 0.29833346605300903 |

| concepts[20].wikidata | https://www.wikidata.org/wiki/Q55542 |

| concepts[20].display_name | Lossless compression |

| concepts[21].id | https://openalex.org/C100660578 |

| concepts[21].level | 2 |

| concepts[21].score | 0.29504960775375366 |

| concepts[21].wikidata | https://www.wikidata.org/wiki/Q18733 |

| concepts[21].display_name | Recall |

| concepts[22].id | https://openalex.org/C45374587 |

| concepts[22].level | 2 |

| concepts[22].score | 0.27742746472358704 |

| concepts[22].wikidata | https://www.wikidata.org/wiki/Q12525525 |

| concepts[22].display_name | Computation |

| concepts[23].id | https://openalex.org/C188045654 |

| concepts[23].level | 2 |

| concepts[23].score | 0.2740260660648346 |

| concepts[23].wikidata | https://www.wikidata.org/wiki/Q17148339 |

| concepts[23].display_name | Memory bandwidth |

| concepts[24].id | https://openalex.org/C2776285913 |

| concepts[24].level | 3 |

| concepts[24].score | 0.26715096831321716 |

| concepts[24].wikidata | https://www.wikidata.org/wiki/Q5165183 |

| concepts[24].display_name | Context management |

| concepts[25].id | https://openalex.org/C119857082 |

| concepts[25].level | 1 |

| concepts[25].score | 0.26102688908576965 |

| concepts[25].wikidata | https://www.wikidata.org/wiki/Q2539 |

| concepts[25].display_name | Machine learning |

| concepts[26].id | https://openalex.org/C153338461 |

| concepts[26].level | 4 |

| concepts[26].score | 0.25436297059059143 |

| concepts[26].wikidata | https://www.wikidata.org/wiki/Q2651 |

| concepts[26].display_name | Arithmetic coding |

| concepts[27].id | https://openalex.org/C111335779 |

| concepts[27].level | 2 |

| concepts[27].score | 0.25231385231018066 |

| concepts[27].wikidata | https://www.wikidata.org/wiki/Q3454686 |

| concepts[27].display_name | Reduction (mathematics) |

| keywords[0].id | https://openalex.org/keywords/cache |

| keywords[0].score | 0.499234676361084 |

| keywords[0].display_name | Cache |

| keywords[1].id | https://openalex.org/keywords/quantization |

| keywords[1].score | 0.48441416025161743 |

| keywords[1].display_name | Quantization (signal processing) |

| keywords[2].id | https://openalex.org/keywords/lossy-compression |

| keywords[2].score | 0.4607539474964142 |

| keywords[2].display_name | Lossy compression |

| keywords[3].id | https://openalex.org/keywords/inference |

| keywords[3].score | 0.444996178150177 |

| keywords[3].display_name | Inference |

| keywords[4].id | https://openalex.org/keywords/memory-footprint |

| keywords[4].score | 0.42210114002227783 |

| keywords[4].display_name | Memory footprint |

| keywords[5].id | https://openalex.org/keywords/data-compression |

| keywords[5].score | 0.41121795773506165 |

| keywords[5].display_name | Data compression |

| keywords[6].id | https://openalex.org/keywords/transformer |

| keywords[6].score | 0.4105891287326813 |

| keywords[6].display_name | Transformer |

| keywords[7].id | https://openalex.org/keywords/latency |

| keywords[7].score | 0.37780696153640747 |

| keywords[7].display_name | Latency (audio) |

| keywords[8].id | https://openalex.org/keywords/pruning |

| keywords[8].score | 0.3463610112667084 |

| keywords[8].display_name | Pruning |

| language | |

| locations[0].id | doi:10.5281/zenodo.17817217 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400562 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | Zenodo (CERN European Organization for Nuclear Research) |

| locations[0].source.host_organization | https://openalex.org/I67311998 |

| locations[0].source.host_organization_name | European Organization for Nuclear Research |

| locations[0].source.host_organization_lineage | https://openalex.org/I67311998 |

| locations[0].license | cc-by |

| locations[0].pdf_url | |

| locations[0].version | |

| locations[0].raw_type | article-journal |

| locations[0].license_id | https://openalex.org/licenses/cc-by |

| locations[0].is_accepted | False |

| locations[0].is_published | |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | https://doi.org/10.5281/zenodo.17817217 |

| indexed_in | datacite |

| authorships[0].author.id | |

| authorships[0].author.orcid | |

| authorships[0].author.display_name | Revista, Zen |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Revista, Zen |

| authorships[0].is_corresponding | True |

| authorships[1].author.id | |

| authorships[1].author.orcid | |

| authorships[1].author.display_name | IA, 10 |

| authorships[1].author_position | last |

| authorships[1].raw_author_name | IA, 10 |

| authorships[1].is_corresponding | False |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://doi.org/10.5281/zenodo.17817217 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-12-05T00:00:00 |

| display_name | KV-Cache Compression via Attention Pattern Pruning for Latency-Constrained LLMs |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-12-05T23:25:22.460635 |

| primary_topic.id | https://openalex.org/T10181 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.2714828848838806 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1702 |

| primary_topic.subfield.display_name | Artificial Intelligence |

| primary_topic.display_name | Natural Language Processing Techniques |

| cited_by_count | 0 |

| locations_count | 1 |

| best_oa_location.id | doi:10.5281/zenodo.17817217 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400562 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | Zenodo (CERN European Organization for Nuclear Research) |

| best_oa_location.source.host_organization | https://openalex.org/I67311998 |

| best_oa_location.source.host_organization_name | European Organization for Nuclear Research |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I67311998 |

| best_oa_location.license | cc-by |

| best_oa_location.pdf_url | |

| best_oa_location.version | |

| best_oa_location.raw_type | article-journal |

| best_oa_location.license_id | https://openalex.org/licenses/cc-by |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | https://doi.org/10.5281/zenodo.17817217 |

| primary_location.id | doi:10.5281/zenodo.17817217 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400562 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | Zenodo (CERN European Organization for Nuclear Research) |

| primary_location.source.host_organization | https://openalex.org/I67311998 |

| primary_location.source.host_organization_name | European Organization for Nuclear Research |

| primary_location.source.host_organization_lineage | https://openalex.org/I67311998 |

| primary_location.license | cc-by |

| primary_location.pdf_url | |

| primary_location.version | |

| primary_location.raw_type | article-journal |

| primary_location.license_id | https://openalex.org/licenses/cc-by |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | https://doi.org/10.5281/zenodo.17817217 |

| publication_date | 2025-12-04 |

| publication_year | 2025 |

| referenced_works_count | 0 |

| abstract_inverted_index.a | 99, 178 |

| abstract_inverted_index.By | 109 |

| abstract_inverted_index.KV | 32, 71 |

| abstract_inverted_index.We | 171 |

| abstract_inverted_index.by | 26 |

| abstract_inverted_index.in | 39, 105, 153, 168, 181, 196 |

| abstract_inverted_index.is | 23, 151 |

| abstract_inverted_index.of | 193 |

| abstract_inverted_index.or | 79, 92, 126 |

| abstract_inverted_index.to | 49, 77, 134, 177 |

| abstract_inverted_index.The | 31 |

| abstract_inverted_index.and | 53, 118, 123, 161, 184, 190 |

| abstract_inverted_index.can | 85 |

| abstract_inverted_index.for | 35, 66 |

| abstract_inverted_index.its | 158 |

| abstract_inverted_index.our | 120 |

| abstract_inverted_index.the | 27, 40, 112, 135, 146, 154 |

| abstract_inverted_index.(KV) | 29 |

| abstract_inverted_index.This | 56, 96, 139 |

| abstract_inverted_index.have | 4 |

| abstract_inverted_index.into | 60 |

| abstract_inverted_index.less | 127 |

| abstract_inverted_index.long | 20 |

| abstract_inverted_index.more | 188 |

| abstract_inverted_index.most | 147 |

| abstract_inverted_index.only | 145 |

| abstract_inverted_index.that | 131, 144, 173 |

| abstract_inverted_index.this | 174 |

| abstract_inverted_index.with | 19, 45 |

| abstract_inverted_index.LLMs, | 194 |

| abstract_inverted_index.Large | 0 |

| abstract_inverted_index.cache | 72 |

| abstract_inverted_index.grows | 43 |

| abstract_inverted_index.input | 21 |

| abstract_inverted_index.leads | 176 |

| abstract_inverted_index.lossy | 90 |

| abstract_inverted_index.model | 169 |

| abstract_inverted_index.novel | 100 |

| abstract_inverted_index.often | 75 |

| abstract_inverted_index.pairs | 130 |

| abstract_inverted_index.paper | 97 |

| abstract_inverted_index.their | 15 |

| abstract_inverted_index.token | 82, 137 |

| abstract_inverted_index.vital | 94 |

| abstract_inverted_index.which | 84 |

| abstract_inverted_index.(LLMs) | 3 |

| abstract_inverted_index.Models | 2 |

| abstract_inverted_index.across | 8, 115 |

| abstract_inverted_index.cache, | 33, 155 |

| abstract_inverted_index.cache. | 30 |

| abstract_inverted_index.either | 86 |

| abstract_inverted_index.heads, | 119 |

| abstract_inverted_index.layers | 117 |

| abstract_inverted_index.memory | 51, 159, 185 |

| abstract_inverted_index.method | 103, 175 |

| abstract_inverted_index.prunes | 124 |

| abstract_inverted_index.resort | 76 |

| abstract_inverted_index.rooted | 104 |

| abstract_inverted_index.simple | 80 |

| abstract_inverted_index.tasks. | 13 |

| abstract_inverted_index.usage, | 186 |

| abstract_inverted_index.context | 150 |

| abstract_inverted_index.discard | 93 |

| abstract_inverted_index.diverse | 9 |

| abstract_inverted_index.ensures | 143 |

| abstract_inverted_index.latency | 183 |

| abstract_inverted_index.leading | 48 |

| abstract_inverted_index.length, | 47 |

| abstract_inverted_index.natural | 10 |

| abstract_inverted_index.pattern | 107 |

| abstract_inverted_index.pruning | 141 |

| abstract_inverted_index.success | 7 |

| abstract_inverted_index.thereby | 156 |

| abstract_inverted_index.through | 89 |

| abstract_inverted_index.weights | 114 |

| abstract_inverted_index.without | 165 |

| abstract_inverted_index.Existing | 70 |

| abstract_inverted_index.However, | 14 |

| abstract_inverted_index.KV-cache | 101 |

| abstract_inverted_index.Language | 1 |

| abstract_inverted_index.accuracy | 88 |

| abstract_inverted_index.achieved | 5 |

| abstract_inverted_index.approach | 121 |

| abstract_inverted_index.avoiding | 36 |

| abstract_inverted_index.context. | 95 |

| abstract_inverted_index.critical | 65 |

| abstract_inverted_index.demands. | 55 |

| abstract_inverted_index.directly | 59 |

| abstract_inverted_index.enabling | 187 |

| abstract_inverted_index.language | 11 |

| abstract_inverted_index.latency, | 63 |

| abstract_inverted_index.linearly | 44 |

| abstract_inverted_index.outputs. | 200 |

| abstract_inverted_index.overhead | 57 |

| abstract_inverted_index.proposes | 98 |

| abstract_inverted_index.pruning. | 108 |

| abstract_inverted_index.reducing | 157 |

| abstract_inverted_index.relevant | 149 |

| abstract_inverted_index.retained | 152 |

| abstract_inverted_index.sequence | 46 |

| abstract_inverted_index.strategy | 142 |

| abstract_inverted_index.Key-Value | 28 |

| abstract_inverted_index.analyzing | 111 |

| abstract_inverted_index.attention | 106 |

| abstract_inverted_index.bandwidth | 54, 163 |

| abstract_inverted_index.demanding | 198 |

| abstract_inverted_index.efficient | 189 |

| abstract_inverted_index.essential | 34 |

| abstract_inverted_index.eviction, | 83 |

| abstract_inverted_index.footprint | 160 |

| abstract_inverted_index.important | 128 |

| abstract_inverted_index.increased | 61 |

| abstract_inverted_index.inference | 62, 182 |

| abstract_inverted_index.key-value | 129 |

| abstract_inverted_index.minimally | 133 |

| abstract_inverted_index.reduction | 180 |

| abstract_inverted_index.redundant | 37, 125 |

| abstract_inverted_index.sacrifice | 87 |

| abstract_inverted_index.scenarios | 197 |

| abstract_inverted_index.contribute | 132 |

| abstract_inverted_index.deployment | 192 |

| abstract_inverted_index.especially | 195 |

| abstract_inverted_index.identifies | 122 |

| abstract_inverted_index.inference, | 17 |

| abstract_inverted_index.mechanism, | 42 |

| abstract_inverted_index.processing | 12 |

| abstract_inverted_index.real-time, | 67 |

| abstract_inverted_index.remarkable | 6 |

| abstract_inverted_index.responsive | 191 |

| abstract_inverted_index.sequences, | 22 |

| abstract_inverted_index.subsequent | 136 |

| abstract_inverted_index.techniques | 74 |

| abstract_inverted_index.translates | 58 |

| abstract_inverted_index.alleviating | 162 |

| abstract_inverted_index.compression | 73, 91, 102 |

| abstract_inverted_index.consumption | 52 |

| abstract_inverted_index.degradation | 167 |

| abstract_inverted_index.demonstrate | 172 |

| abstract_inverted_index.dynamically | 110 |

| abstract_inverted_index.generation. | 138 |

| abstract_inverted_index.intelligent | 140 |

| abstract_inverted_index.limitations | 164 |

| abstract_inverted_index.low-latency | 199 |

| abstract_inverted_index.significant | 166 |

| abstract_inverted_index.substantial | 50, 179 |

| abstract_inverted_index.transformer | 116 |

| abstract_inverted_index.bottlenecked | 25 |

| abstract_inverted_index.computations | 38 |

| abstract_inverted_index.particularly | 18, 64 |

| abstract_inverted_index.performance. | 170 |

| abstract_inverted_index.quantization | 78 |

| abstract_inverted_index.semantically | 148 |

| abstract_inverted_index.applications. | 69 |

| abstract_inverted_index.significantly | 24 |

| abstract_inverted_index.autoregressive | 16 |

| abstract_inverted_index.self-attention | 41, 113 |

| abstract_inverted_index.heuristic-based | 81 |

| abstract_inverted_index.latency-constrained | 68 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 2 |

| citation_normalized_percentile.value | 0.92002677 |

| citation_normalized_percentile.is_in_top_1_percent | False |

| citation_normalized_percentile.is_in_top_10_percent | True |
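
The `abstract_inverted_index.*` rows in the payload above store the abstract as a map from each word to the positions where it occurs (for example `Large` at 0 and `Language` at 1). As a rough sketch, the plain text can be recovered by placing every word at its positions and joining them in order. The function name `rebuild_abstract` is hypothetical, and the sample dictionary copies only the first eight entries from the table.

```python
def rebuild_abstract(inverted_index):
    """Rebuild plain text from a {word: [positions]} inverted index."""
    placed = {}
    for word, positions in inverted_index.items():
        for pos in positions:
            placed[pos] = word
    # Join the words in ascending position order.
    return " ".join(placed[pos] for pos in sorted(placed))

# First eight entries taken from the payload rows above.
sample = {
    "Large": [0], "Language": [1], "Models": [2], "(LLMs)": [3],
    "have": [4], "achieved": [5], "remarkable": [6], "success": [7],
}
print(rebuild_abstract(sample))
# Large Language Models (LLMs) have achieved remarkable success
```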