Leveraging Segment Anything Model for Source-Free Domain Adaptation via Dual Feature Guided Auto-Prompting

2025 · Open Access · DOI: https://doi.org/10.48550/arxiv.2505.08527

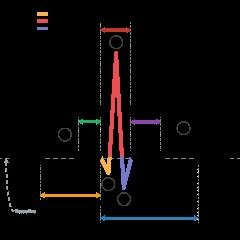

Source-free domain adaptation (SFDA) for segmentation aims to adapt a model trained in the source domain so that it performs well in the target domain, given only the source model and unlabeled target data. Inspired by the recent success of the Segment Anything Model (SAM), which can segment images of various modalities and domains given human-annotated prompts such as bounding boxes or points, we explore, for the first time, the potential of SAM for SFDA by automatically finding an accurate bounding box prompt. We find that bounding boxes generated directly with existing SFDA approaches are defective due to the domain gap. To tackle this issue, we propose a novel Dual Feature Guided (DFG) auto-prompting approach to search for the box prompt. Specifically, the source model is first trained in a feature aggregation phase, which not only preliminarily adapts the source model to the target domain but also builds a feature distribution well prepared for box prompt search. In the second phase, based on two feature distribution observations, we gradually expand the box prompt under the guidance of the target model feature and the SAM feature, to handle class-wise clustered target features and class-wise dispersed target features, respectively. To remove potentially enlarged false positive regions caused by over-confident predictions of the target model, the refined pseudo-labels produced by SAM are further post-processed using connectivity analysis. Experiments on 3D and 2D datasets indicate that our approach yields superior performance compared to conventional methods. Code is available at https://github.com/xmed-lab/DFG.
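Two mask-level operations mentioned in the abstract, deriving a bounding box prompt from a predicted mask and cleaning a pseudo-label by connectivity analysis, can be illustrated with a minimal sketch. This is not the authors' implementation (see the linked repository for that). The function names `mask_to_box`, `expand_box`, and `keep_largest_component`, the fixed-margin expansion, and the rule of keeping only the largest 4-connected component are all assumptions made for illustration; the feature-guided criterion that decides how far the box is expanded is not reproduced here.

```python
from collections import deque


def mask_to_box(mask):
    """Tight box (x_min, y_min, x_max, y_max) around nonzero pixels, or None if empty."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))


def expand_box(box, margin, width, height):
    """Grow a box by `margin` pixels per side, clipped to the image bounds.

    Hypothetical stand-in for one expansion step; a fixed margin is an assumption.
    """
    x0, y0, x1, y1 = box
    return (max(0, x0 - margin), max(0, y0 - margin),
            min(width - 1, x1 + margin), min(height - 1, y1 + margin))


def keep_largest_component(mask):
    """Keep only the largest 4-connected foreground region of a non-empty 2D mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    best = []
    for sy in range(height):
        for sx in range(width):
            if not mask[sy][sx] or seen[sy][sx]:
                continue
            # Breadth-first search over one connected component.
            seen[sy][sx] = True
            queue = deque([(sx, sy)])
            component = []
            while queue:
                x, y = queue.popleft()
                component.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(component) > len(best):
                best = component
    cleaned = [[0] * width for _ in range(height)]
    for x, y in best:
        cleaned[y][x] = 1
    return cleaned


# Toy pseudo-label: a 2x2 object plus one isolated false-positive pixel.
pseudo_label = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0, 0],
]
cleaned = keep_largest_component(pseudo_label)
box = mask_to_box(cleaned)
prompt = expand_box(box, margin=1, width=6, height=4)
```

In this toy example the isolated pixel stretches the raw box across the image, while the box computed after connectivity filtering hugs the object; the resulting tuple is in the `(x_min, y_min, x_max, y_max)` layout commonly used for box prompts.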

Related Topics

- Type: preprint
- Language: en
- Landing Page:
  - http://arxiv.org/abs/2505.08527
  - https://arxiv.org/pdf/2505.08527
- OA Status: green
- OpenAlex ID: https://openalex.org/W4414937510

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4414937510 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.48550/arxiv.2505.08527 (Digital Object Identifier)
- Title: Leveraging Segment Anything Model for Source-Free Domain Adaptation via Dual Feature Guided Auto-Prompting (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2025 (year of publication)
- Publication date: 2025-05-13 (full publication date if available)
- Authors: Zheang Huai, Hui Tang, Yi Li, Zhuangzhuang Chen, Xiaomeng Li (list of authors in order)
- Landing page: https://arxiv.org/abs/2505.08527 (publisher landing page)
- PDF URL: https://arxiv.org/pdf/2505.08527 (direct link to full text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: green (open access status per OpenAlex)
- OA URL: https://arxiv.org/pdf/2505.08527 (direct OA link when available)
- Cited by: 0 (total citation count in OpenAlex)

Full payload

| id | https://openalex.org/W4414937510 |
|---|---|
| doi | https://doi.org/10.48550/arxiv.2505.08527 |
| ids.doi | https://doi.org/10.48550/arxiv.2505.08527 |
| ids.openalex | https://openalex.org/W4414937510 |
| fwci | |
| type | preprint |
| title | Leveraging Segment Anything Model for Source-Free Domain Adaptation via Dual Feature Guided Auto-Prompting |
| biblio.issue | |
| biblio.volume | |
| biblio.last_page | |
| biblio.first_page | |
| topics[0].id | https://openalex.org/T11307 |
| topics[0].field.id | https://openalex.org/fields/17 |
| topics[0].field.display_name | Computer Science |
| topics[0].score | 0.957099974155426 |
| topics[0].domain.id | https://openalex.org/domains/3 |
| topics[0].domain.display_name | Physical Sciences |
| topics[0].subfield.id | https://openalex.org/subfields/1702 |
| topics[0].subfield.display_name | Artificial Intelligence |
| topics[0].display_name | Domain Adaptation and Few-Shot Learning |
| topics[1].id | https://openalex.org/T12535 |
| topics[1].field.id | https://openalex.org/fields/17 |
| topics[1].field.display_name | Computer Science |
| topics[1].score | 0.9506999850273132 |
| topics[1].domain.id | https://openalex.org/domains/3 |
| topics[1].domain.display_name | Physical Sciences |
| topics[1].subfield.id | https://openalex.org/subfields/1702 |
| topics[1].subfield.display_name | Artificial Intelligence |
| topics[1].display_name | Machine Learning and Data Classification |
| topics[2].id | https://openalex.org/T12761 |
| topics[2].field.id | https://openalex.org/fields/17 |
| topics[2].field.display_name | Computer Science |
| topics[2].score | 0.9405999779701233 |
| topics[2].domain.id | https://openalex.org/domains/3 |
| topics[2].domain.display_name | Physical Sciences |
| topics[2].subfield.id | https://openalex.org/subfields/1702 |
| topics[2].subfield.display_name | Artificial Intelligence |
| topics[2].display_name | Data Stream Mining Techniques |
| is_xpac | False |
| apc_list | |
| apc_paid | |
| language | en |
| locations[0].id | pmh:oai:arXiv.org:2505.08527 |
| locations[0].is_oa | True |
| locations[0].source.id | https://openalex.org/S4306400194 |
| locations[0].source.issn | |
| locations[0].source.type | repository |
| locations[0].source.is_oa | True |
| locations[0].source.issn_l | |
| locations[0].source.is_core | False |
| locations[0].source.is_in_doaj | False |
| locations[0].source.display_name | arXiv (Cornell University) |
| locations[0].source.host_organization | https://openalex.org/I205783295 |
| locations[0].source.host_organization_name | Cornell University |
| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[0].license | |
| locations[0].pdf_url | https://arxiv.org/pdf/2505.08527 |
| locations[0].version | submittedVersion |
| locations[0].raw_type | text |
| locations[0].license_id | |
| locations[0].is_accepted | False |
| locations[0].is_published | False |
| locations[0].raw_source_name | |
| locations[0].landing_page_url | http://arxiv.org/abs/2505.08527 |
| locations[1].id | doi:10.48550/arxiv.2505.08527 |
| locations[1].is_oa | True |
| locations[1].source.id | https://openalex.org/S4306400194 |
| locations[1].source.issn | |
| locations[1].source.type | repository |
| locations[1].source.is_oa | True |
| locations[1].source.issn_l | |
| locations[1].source.is_core | False |
| locations[1].source.is_in_doaj | False |
| locations[1].source.display_name | arXiv (Cornell University) |
| locations[1].source.host_organization | https://openalex.org/I205783295 |
| locations[1].source.host_organization_name | Cornell University |
| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[1].license | |
| locations[1].pdf_url | |
| locations[1].version | |
| locations[1].raw_type | article |
| locations[1].license_id | |
| locations[1].is_accepted | False |
| locations[1].is_published | |
| locations[1].raw_source_name | |
| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2505.08527 |
| indexed_in | arxiv, datacite |
| authorships[0].author.id | https://openalex.org/A5034966866 |
| authorships[0].author.orcid | https://orcid.org/0000-0001-6164-1977 |
| authorships[0].author.display_name | Zheang Huai |
| authorships[0].author_position | first |
| authorships[0].raw_author_name | Huai, Zheang |
| authorships[0].is_corresponding | False |
| authorships[1].author.id | https://openalex.org/A5024350063 |
| authorships[1].author.orcid | https://orcid.org/0000-0001-8856-9127 |
| authorships[1].author.display_name | Hui Tang |
| authorships[1].author_position | middle |
| authorships[1].raw_author_name | Tang, Hui |
| authorships[1].is_corresponding | False |
| authorships[2].author.id | https://openalex.org/A5100421812 |
| authorships[2].author.orcid | https://orcid.org/0000-0003-4562-8208 |
| authorships[2].author.display_name | Yi Li |
| authorships[2].author_position | middle |
| authorships[2].raw_author_name | Li, Yi |
| authorships[2].is_corresponding | False |
| authorships[3].author.id | https://openalex.org/A5008344915 |
| authorships[3].author.orcid | https://orcid.org/0000-0002-0336-1181 |
| authorships[3].author.display_name | Zhuangzhuang Chen |
| authorships[3].author_position | middle |
| authorships[3].raw_author_name | Chen, Zhuangzhuang |
| authorships[3].is_corresponding | False |
| authorships[4].author.id | https://openalex.org/A5100427643 |
| authorships[4].author.orcid | https://orcid.org/0000-0003-1105-8083 |
| authorships[4].author.display_name | Xiaomeng Li |
| authorships[4].author_position | last |
| authorships[4].raw_author_name | Li, Xiaomeng |
| authorships[4].is_corresponding | False |
| has_content.pdf | False |
| has_content.grobid_xml | False |
| is_paratext | False |
| open_access.is_oa | True |
| open_access.oa_url | https://arxiv.org/pdf/2505.08527 |
| open_access.oa_status | green |
| open_access.any_repository_has_fulltext | False |
| created_date | 2025-10-10T00:00:00 |
| display_name | Leveraging Segment Anything Model for Source-Free Domain Adaptation via Dual Feature Guided Auto-Prompting |
| has_fulltext | False |
| is_retracted | False |
| updated_date | 2025-11-06T06:51:31.235846 |
| primary_topic.id | https://openalex.org/T11307 |
| primary_topic.field.id | https://openalex.org/fields/17 |
| primary_topic.field.display_name | Computer Science |
| primary_topic.score | 0.957099974155426 |
| primary_topic.domain.id | https://openalex.org/domains/3 |
| primary_topic.domain.display_name | Physical Sciences |
| primary_topic.subfield.id | https://openalex.org/subfields/1702 |
| primary_topic.subfield.display_name | Artificial Intelligence |
| primary_topic.display_name | Domain Adaptation and Few-Shot Learning |
| cited_by_count | 0 |
| locations_count | 2 |
| best_oa_location.id | pmh:oai:arXiv.org:2505.08527 |
| best_oa_location.is_oa | True |
| best_oa_location.source.id | https://openalex.org/S4306400194 |
| best_oa_location.source.issn | |
| best_oa_location.source.type | repository |
| best_oa_location.source.is_oa | True |
| best_oa_location.source.issn_l | |
| best_oa_location.source.is_core | False |
| best_oa_location.source.is_in_doaj | False |
| best_oa_location.source.display_name | arXiv (Cornell University) |
| best_oa_location.source.host_organization | https://openalex.org/I205783295 |
| best_oa_location.source.host_organization_name | Cornell University |
| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| best_oa_location.license | |
| best_oa_location.pdf_url | https://arxiv.org/pdf/2505.08527 |
| best_oa_location.version | submittedVersion |
| best_oa_location.raw_type | text |
| best_oa_location.license_id | |
| best_oa_location.is_accepted | False |
| best_oa_location.is_published | False |
| best_oa_location.raw_source_name | |
| best_oa_location.landing_page_url | http://arxiv.org/abs/2505.08527 |
| primary_location.id | pmh:oai:arXiv.org:2505.08527 |
| primary_location.is_oa | True |
| primary_location.source.id | https://openalex.org/S4306400194 |
| primary_location.source.issn | |
| primary_location.source.type | repository |
| primary_location.source.is_oa | True |
| primary_location.source.issn_l | |
| primary_location.source.is_core | False |
| primary_location.source.is_in_doaj | False |
| primary_location.source.display_name | arXiv (Cornell University) |
| primary_location.source.host_organization | https://openalex.org/I205783295 |
| primary_location.source.host_organization_name | Cornell University |
| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| primary_location.license | |
| primary_location.pdf_url | https://arxiv.org/pdf/2505.08527 |
| primary_location.version | submittedVersion |
| primary_location.raw_type | text |
| primary_location.license_id | |
| primary_location.is_accepted | False |
| primary_location.is_published | False |
| primary_location.raw_source_name | |
| primary_location.landing_page_url | http://arxiv.org/abs/2505.08527 |
| publication_date | 2025-05-13 |
| publication_year | 2025 |
| referenced_works_count | 0 |
| abstract_inverted_index.a | 9, 111, 133, 152 |
| abstract_inverted_index.2D | 236 |
| abstract_inverted_index.3D | 234 |
| abstract_inverted_index.In | 160 |
| abstract_inverted_index.To | 105, 202 |
| abstract_inverted_index.We | 86 |
| abstract_inverted_index.an | 81 |
| abstract_inverted_index.at | 7, 252 |
| abstract_inverted_index.by | 33, 211, 223 |
| abstract_inverted_index.in | 12, 19, 53, 132 |
| abstract_inverted_index.is | 129, 250 |
| abstract_inverted_index.of | 37, 46, 49, 72, 179, 215 |
| abstract_inverted_index.on | 165, 229, 233 |
| abstract_inverted_index.or | 62 |
| abstract_inverted_index.to | 16, 101, 119, 145, 188, 246 |
| abstract_inverted_index.we | 64, 109, 170 |
| abstract_inverted_index.SAM | 186, 224 |
| abstract_inverted_index.and | 28, 52, 184, 195, 235 |
| abstract_inverted_index.are | 98, 225 |
| abstract_inverted_index.box | 84, 123, 157, 174 |
| abstract_inverted_index.but | 149 |
| abstract_inverted_index.due | 100 |
| abstract_inverted_index.for | 4, 65, 76, 121, 156 |
| abstract_inverted_index.not | 138 |
| abstract_inverted_index.our | 240 |
| abstract_inverted_index.the | 13, 20, 25, 34, 44, 66, 70, 89, 102, 122, 126, 142, 146, 161, 173, 177, 180, 185, 190, 196, 204, 212, 216, 219 |
| abstract_inverted_index.two | 166 |
| abstract_inverted_index.via | 78 |
| abstract_inverted_index.Code | 249 |
| abstract_inverted_index.Dual | 113 |
| abstract_inverted_index.SFDA | 77, 96 |
| abstract_inverted_index.aims | 6 |
| abstract_inverted_index.also | 150 |
| abstract_inverted_index.find | 87 |
| abstract_inverted_index.gap. | 104 |
| abstract_inverted_index.like | 59 |
| abstract_inverted_index.only | 24, 139 |
| abstract_inverted_index.that | 88, 239 |
| abstract_inverted_index.this | 107 |
| abstract_inverted_index.time | 68 |
| abstract_inverted_index.well | 18 |
| abstract_inverted_index.with | 23, 94, 176 |
| abstract_inverted_index.(DFG) | 116 |
| abstract_inverted_index.(SAM) | 41 |
| abstract_inverted_index.Model | 40, 75 |
| abstract_inverted_index.based | 164, 228 |
| abstract_inverted_index.boxes | 61, 91 |
| abstract_inverted_index.data. | 31 |
| abstract_inverted_index.false | 207 |
| abstract_inverted_index.first | 67, 130 |
| abstract_inverted_index.given | 56 |
| abstract_inverted_index.model | 10, 27, 128, 144, 182 |
| abstract_inverted_index.novel | 112 |
| abstract_inverted_index.which | 42, 137 |
| abstract_inverted_index.(SFDA) | 3 |
| abstract_inverted_index.Guided | 115 |
| abstract_inverted_index.adapts | 141 |
| abstract_inverted_index.builds | 151 |
| abstract_inverted_index.caused | 210 |
| abstract_inverted_index.domain | 1, 15, 22, 103, 148 |
| abstract_inverted_index.expand | 172 |
| abstract_inverted_index.handle | 189 |
| abstract_inverted_index.images | 48 |
| abstract_inverted_index.issue, | 108 |
| abstract_inverted_index.model, | 218 |
| abstract_inverted_index.phase, | 136, 163 |
| abstract_inverted_index.prompt | 158, 175 |
| abstract_inverted_index.recent | 35 |
| abstract_inverted_index.remove | 203 |
| abstract_inverted_index.search | 120 |
| abstract_inverted_index.second | 162 |
| abstract_inverted_index.source | 14, 26, 127, 143 |
| abstract_inverted_index.tackle | 106 |
| abstract_inverted_index.target | 21, 30, 147, 181, 193, 199, 217 |
| abstract_inverted_index.yields | 242 |
| abstract_inverted_index.Feature | 114 |
| abstract_inverted_index.Segment | 38, 73 |
| abstract_inverted_index.domains | 55 |
| abstract_inverted_index.explore | 69 |
| abstract_inverted_index.feature | 134, 153, 167, 183, 187 |
| abstract_inverted_index.finding | 80 |
| abstract_inverted_index.further | 226 |
| abstract_inverted_index.perform | 17 |
| abstract_inverted_index.points, | 63 |
| abstract_inverted_index.prompt. | 85, 124 |
| abstract_inverted_index.prompts | 58 |
| abstract_inverted_index.propose | 110 |
| abstract_inverted_index.refined | 220 |
| abstract_inverted_index.regions | 209 |
| abstract_inverted_index.search. | 159 |
| abstract_inverted_index.success | 36 |
| abstract_inverted_index.trained | 11, 131 |
| abstract_inverted_index.various | 50 |
| abstract_inverted_index.Anything | 39, 74 |
| abstract_inverted_index.Inspired | 32 |
| abstract_inverted_index.accurate | 82 |
| abstract_inverted_index.adapting | 8 |
| abstract_inverted_index.approach | 118, 241 |
| abstract_inverted_index.bounding | 60, 83, 90 |
| abstract_inverted_index.compared | 245 |
| abstract_inverted_index.datasets | 237 |
| abstract_inverted_index.directly | 92 |
| abstract_inverted_index.enlarged | 206 |
| abstract_inverted_index.exhibits | 43 |
| abstract_inverted_index.existing | 95 |
| abstract_inverted_index.features | 194 |
| abstract_inverted_index.guidance | 178 |
| abstract_inverted_index.indicate | 238 |
| abstract_inverted_index.methods. | 248 |
| abstract_inverted_index.positive | 208 |
| abstract_inverted_index.produced | 222 |
| abstract_inverted_index.superior | 243 |
| abstract_inverted_index.analysis. | 231 |
| abstract_inverted_index.available | 251 |
| abstract_inverted_index.clustered | 192 |
| abstract_inverted_index.defective | 99 |
| abstract_inverted_index.different | 54 |
| abstract_inverted_index.dispersed | 198 |
| abstract_inverted_index.features, | 200 |
| abstract_inverted_index.generated | 93 |
| abstract_inverted_index.gradually | 171 |
| abstract_inverted_index.unlabeled | 29 |
| abstract_inverted_index.adaptation | 2 |
| abstract_inverted_index.approaches | 97 |
| abstract_inverted_index.class-wise | 191, 197 |
| abstract_inverted_index.generality | 45 |
| abstract_inverted_index.modalities | 51 |
| abstract_inverted_index.potentials | 71 |
| abstract_inverted_index.prediction | 214 |
| abstract_inverted_index.segmenting | 47 |
| abstract_inverted_index.Experiments | 232 |
| abstract_inverted_index.Source-free | 0 |
| abstract_inverted_index.aggregation | 135 |
| abstract_inverted_index.automatedly | 79 |
| abstract_inverted_index.performance | 244 |
| abstract_inverted_index.potentially | 205 |
| abstract_inverted_index.connectivity | 230 |
| abstract_inverted_index.conventional | 247 |
| abstract_inverted_index.distribution | 154, 168 |
| abstract_inverted_index.segmentation | 5 |
| abstract_inverted_index.Specifically, | 125 |
| abstract_inverted_index.observations, | 169 |
| abstract_inverted_index.postprocessed | 227 |
| abstract_inverted_index.preliminarily | 140 |
| abstract_inverted_index.pseudo-labels | 221 |
| abstract_inverted_index.respectively. | 201 |
| abstract_inverted_index.well-prepared | 155 |
| abstract_inverted_index.auto-prompting | 117 |
| abstract_inverted_index.over-confident | 213 |
| abstract_inverted_index.human-annotated | 57 |
| abstract_inverted_index.https://github.com/xmed-lab/DFG. | 253 |
| cited_by_percentile_year | |
| countries_distinct_count | 0 |
| institutions_distinct_count | 5 |
| citation_normalized_percentile |