Mega-TTS 2: Boosting Prompting Mechanisms for Zero-Shot Speech Synthesis

2023 · Open Access · DOI: https://doi.org/10.48550/arxiv.2307.07218


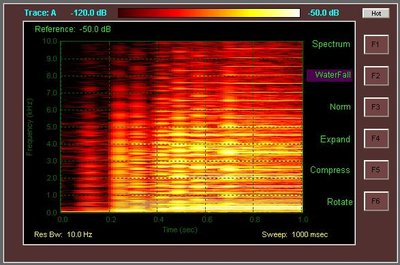

Zero-shot text-to-speech (TTS) aims to synthesize voices from unseen speech prompts, which significantly reduces the data and computation requirements for voice cloning by skipping the fine-tuning process. However, the prompting mechanisms of zero-shot TTS still face two challenges: 1) previous zero-shot TTS models are typically trained with single-sentence prompts, which significantly limits their performance when relatively abundant prompt data is available at inference time; 2) the prosodic information in prompts is highly coupled with timbre, so prosody and timbre cannot be transferred independently of each other. This paper introduces Mega-TTS 2, a generic prompting mechanism for zero-shot TTS, to tackle these challenges. Specifically, we design a powerful acoustic autoencoder that separately encodes prosody and timbre information into a compressed latent space while providing high-quality reconstructions. Then, we propose a multi-reference timbre encoder and a prosody latent language model (P-LLM) to extract useful information from multi-sentence prompts. We further leverage the probabilities derived from multiple P-LLM outputs to produce transferable and controllable prosody. Experimental results demonstrate that Mega-TTS 2 can not only synthesize identity-preserving speech from a short prompt of an unseen speaker from arbitrary sources, but also consistently outperform the fine-tuning method when the amount of data ranges from 10 seconds to 5 minutes. Furthermore, our method can transfer various speaking styles to the target timbre in a fine-grained and controllable manner. Audio samples are available at https://boostprompt.github.io/boostprompt/.
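
The abstract states that Mega-TTS 2 combines the probabilities from multiple P-LLM outputs to make prosody transferable and controllable. The sketch below illustrates one plausible reading of that idea under stated assumptions: at a single decoding step, two next-code distributions over discrete prosody codes (one from a P-LLM conditioned on the target speaker's prompt, one conditioned on a style reference) are linearly mixed with a weight `gamma` before sampling. The helper names, the linear mixing rule, and the toy logits are all hypothetical illustrations, not the paper's code; consult the PDF for the exact formulation.

```python
import math
import random
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mix_prosody_distributions(
    logits_a: Sequence[float], logits_b: Sequence[float], gamma: float
) -> List[float]:
    """Linearly interpolate two next-code distributions (hypothetical helper).

    logits_a: assumed P-LLM logits conditioned on the target speaker's prompt.
    logits_b: assumed P-LLM logits conditioned on a style-reference prompt.
    gamma = 1.0 keeps only A; gamma = 0.0 keeps only B.
    """
    if len(logits_a) != len(logits_b):
        raise ValueError("both distributions must cover the same codebook")
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    p_a = softmax(logits_a)
    p_b = softmax(logits_b)
    # A convex combination of two distributions is itself a valid distribution.
    return [gamma * a + (1.0 - gamma) * b for a, b in zip(p_a, p_b)]


def sample_code(probs: Sequence[float], rng: random.Random) -> int:
    """Draw one discrete prosody-code index from a probability vector."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Toy 4-code vocabulary: the speaker prompt favours code 0, the style
    # reference favours code 3 (made-up numbers for illustration only).
    logits_speaker = [3.0, 1.0, 0.0, 0.0]
    logits_style = [0.0, 0.0, 1.0, 3.0]
    rng = random.Random(0)
    for gamma in (1.0, 0.5, 0.0):
        probs = mix_prosody_distributions(logits_speaker, logits_style, gamma)
        print(gamma, [round(p, 3) for p in probs], sample_code(probs, rng))
```

Sweeping `gamma` from 1.0 to 0.0 moves the sampled prosody codes from the speaker's own style toward the reference style, which is the kind of fine-grained control the abstract describes; an autoregressive model would repeat this mixing at every step.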

- Type: preprint
- Language: en
- Landing page: http://arxiv.org/abs/2307.07218 (PDF: https://arxiv.org/pdf/2307.07218)
- OA status: green
- Cited by: 8
- Related works: 10
- OpenAlex ID: https://openalex.org/W4384615685

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4384615685 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.48550/arxiv.2307.07218 (Digital Object Identifier)
- Title: Mega-TTS 2: Boosting Prompting Mechanisms for Zero-Shot Speech Synthesis (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2023 (year of publication)
- Publication date: 2023-07-14 (full publication date if available)
- Authors: Ziyue Karen Jiang, Jinglin Liu, Yi Ren, Jinzheng He, Chen Zhang, Zhenhui Ye, Pengfei Wei, Chunfeng Wang, Xiang Yin, Zejun Ma, Zhou Zhao (list of authors in order)
- Landing page: https://arxiv.org/abs/2307.07218 (publisher landing page)
- PDF URL: https://arxiv.org/pdf/2307.07218 (direct link to full-text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: green (open-access status per OpenAlex)
- OA URL: https://arxiv.org/pdf/2307.07218 (direct OA link when available)
- Concepts: Prosody, Computer science, Timbre, Speech recognition, Sentence, Autoencoder, Natural language processing, Artificial intelligence, Visual arts, Art, Deep learning, Musical (top concepts attached by OpenAlex)
- Cited by: 8 (total citation count in OpenAlex)
- Citations by year (recent): 2025: 2, 2024: 6 (per-year citation counts, last 5 years)
- Related works (count): 10 (other works algorithmically related by OpenAlex)

Full payload

| id | https://openalex.org/W4384615685 |
|---|---|
| doi | https://doi.org/10.48550/arxiv.2307.07218 |
| ids.doi | https://doi.org/10.48550/arxiv.2307.07218 |
| ids.openalex | https://openalex.org/W4384615685 |
| fwci | |
| type | preprint |
| title | Mega-TTS 2: Boosting Prompting Mechanisms for Zero-Shot Speech Synthesis |
| biblio.issue | |
| biblio.volume | |
| biblio.last_page | |
| biblio.first_page | |
| topics[0].id | https://openalex.org/T10201 |
| topics[0].field.id | https://openalex.org/fields/17 |
| topics[0].field.display_name | Computer Science |
| topics[0].score | 0.9987999796867371 |
| topics[0].domain.id | https://openalex.org/domains/3 |
| topics[0].domain.display_name | Physical Sciences |
| topics[0].subfield.id | https://openalex.org/subfields/1702 |
| topics[0].subfield.display_name | Artificial Intelligence |
| topics[0].display_name | Speech Recognition and Synthesis |
| topics[1].id | https://openalex.org/T10860 |
| topics[1].field.id | https://openalex.org/fields/17 |
| topics[1].field.display_name | Computer Science |
| topics[1].score | 0.9876999855041504 |
| topics[1].domain.id | https://openalex.org/domains/3 |
| topics[1].domain.display_name | Physical Sciences |
| topics[1].subfield.id | https://openalex.org/subfields/1711 |
| topics[1].subfield.display_name | Signal Processing |
| topics[1].display_name | Speech and Audio Processing |
| topics[2].id | https://openalex.org/T11309 |
| topics[2].field.id | https://openalex.org/fields/17 |
| topics[2].field.display_name | Computer Science |
| topics[2].score | 0.9764000177383423 |
| topics[2].domain.id | https://openalex.org/domains/3 |
| topics[2].domain.display_name | Physical Sciences |
| topics[2].subfield.id | https://openalex.org/subfields/1711 |
| topics[2].subfield.display_name | Signal Processing |
| topics[2].display_name | Music and Audio Processing |
| is_xpac | False |
| apc_list | |
| apc_paid | |
| concepts[0].id | https://openalex.org/C542774811 |
| concepts[0].level | 2 |
| concepts[0].score | 0.7492033243179321 |
| concepts[0].wikidata | https://www.wikidata.org/wiki/Q10880526 |
| concepts[0].display_name | Prosody |
| concepts[1].id | https://openalex.org/C41008148 |
| concepts[1].level | 0 |
| concepts[1].score | 0.7480801343917847 |
| concepts[1].wikidata | https://www.wikidata.org/wiki/Q21198 |
| concepts[1].display_name | Computer science |
| concepts[2].id | https://openalex.org/C2776539107 |
| concepts[2].level | 3 |
| concepts[2].score | 0.6937741041183472 |
| concepts[2].wikidata | https://www.wikidata.org/wiki/Q176501 |
| concepts[2].display_name | Timbre |
| concepts[3].id | https://openalex.org/C28490314 |
| concepts[3].level | 1 |
| concepts[3].score | 0.6711801886558533 |
| concepts[3].wikidata | https://www.wikidata.org/wiki/Q189436 |
| concepts[3].display_name | Speech recognition |
| concepts[4].id | https://openalex.org/C2777530160 |
| concepts[4].level | 2 |
| concepts[4].score | 0.558725118637085 |
| concepts[4].wikidata | https://www.wikidata.org/wiki/Q41796 |
| concepts[4].display_name | Sentence |
| concepts[5].id | https://openalex.org/C101738243 |
| concepts[5].level | 3 |
| concepts[5].score | 0.4356156885623932 |
| concepts[5].wikidata | https://www.wikidata.org/wiki/Q786435 |
| concepts[5].display_name | Autoencoder |
| concepts[6].id | https://openalex.org/C204321447 |
| concepts[6].level | 1 |
| concepts[6].score | 0.3689311146736145 |
| concepts[6].wikidata | https://www.wikidata.org/wiki/Q30642 |
| concepts[6].display_name | Natural language processing |
| concepts[7].id | https://openalex.org/C154945302 |
| concepts[7].level | 1 |
| concepts[7].score | 0.35630184412002563 |
| concepts[7].wikidata | https://www.wikidata.org/wiki/Q11660 |
| concepts[7].display_name | Artificial intelligence |
| concepts[8].id | https://openalex.org/C153349607 |
| concepts[8].level | 1 |
| concepts[8].score | 0.0 |
| concepts[8].wikidata | https://www.wikidata.org/wiki/Q36649 |
| concepts[8].display_name | Visual arts |
| concepts[9].id | https://openalex.org/C142362112 |
| concepts[9].level | 0 |
| concepts[9].score | 0.0 |
| concepts[9].wikidata | https://www.wikidata.org/wiki/Q735 |
| concepts[9].display_name | Art |
| concepts[10].id | https://openalex.org/C108583219 |
| concepts[10].level | 2 |
| concepts[10].score | 0.0 |
| concepts[10].wikidata | https://www.wikidata.org/wiki/Q197536 |
| concepts[10].display_name | Deep learning |
| concepts[11].id | https://openalex.org/C558565934 |
| concepts[11].level | 2 |
| concepts[11].score | 0.0 |
| concepts[11].wikidata | https://www.wikidata.org/wiki/Q2743 |
| concepts[11].display_name | Musical |
| keywords[0].id | https://openalex.org/keywords/prosody |
| keywords[0].score | 0.7492033243179321 |
| keywords[0].display_name | Prosody |
| keywords[1].id | https://openalex.org/keywords/computer-science |
| keywords[1].score | 0.7480801343917847 |
| keywords[1].display_name | Computer science |
| keywords[2].id | https://openalex.org/keywords/timbre |
| keywords[2].score | 0.6937741041183472 |
| keywords[2].display_name | Timbre |
| keywords[3].id | https://openalex.org/keywords/speech-recognition |
| keywords[3].score | 0.6711801886558533 |
| keywords[3].display_name | Speech recognition |
| keywords[4].id | https://openalex.org/keywords/sentence |
| keywords[4].score | 0.558725118637085 |
| keywords[4].display_name | Sentence |
| keywords[5].id | https://openalex.org/keywords/autoencoder |
| keywords[5].score | 0.4356156885623932 |
| keywords[5].display_name | Autoencoder |
| keywords[6].id | https://openalex.org/keywords/natural-language-processing |
| keywords[6].score | 0.3689311146736145 |
| keywords[6].display_name | Natural language processing |
| keywords[7].id | https://openalex.org/keywords/artificial-intelligence |
| keywords[7].score | 0.35630184412002563 |
| keywords[7].display_name | Artificial intelligence |
| language | en |
| locations[0].id | pmh:oai:arXiv.org:2307.07218 |
| locations[0].is_oa | True |
| locations[0].source.id | https://openalex.org/S4306400194 |
| locations[0].source.issn | |
| locations[0].source.type | repository |
| locations[0].source.is_oa | True |
| locations[0].source.issn_l | |
| locations[0].source.is_core | False |
| locations[0].source.is_in_doaj | False |
| locations[0].source.display_name | arXiv (Cornell University) |
| locations[0].source.host_organization | https://openalex.org/I205783295 |
| locations[0].source.host_organization_name | Cornell University |
| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[0].license | |
| locations[0].pdf_url | https://arxiv.org/pdf/2307.07218 |
| locations[0].version | submittedVersion |
| locations[0].raw_type | text |
| locations[0].license_id | |
| locations[0].is_accepted | False |
| locations[0].is_published | False |
| locations[0].raw_source_name | |
| locations[0].landing_page_url | http://arxiv.org/abs/2307.07218 |
| locations[1].id | doi:10.48550/arxiv.2307.07218 |
| locations[1].is_oa | True |
| locations[1].source.id | https://openalex.org/S4306400194 |
| locations[1].source.issn | |
| locations[1].source.type | repository |
| locations[1].source.is_oa | True |
| locations[1].source.issn_l | |
| locations[1].source.is_core | False |
| locations[1].source.is_in_doaj | False |
| locations[1].source.display_name | arXiv (Cornell University) |
| locations[1].source.host_organization | https://openalex.org/I205783295 |
| locations[1].source.host_organization_name | Cornell University |
| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[1].license | |
| locations[1].pdf_url | |
| locations[1].version | |
| locations[1].raw_type | article |
| locations[1].license_id | |
| locations[1].is_accepted | False |
| locations[1].is_published | |
| locations[1].raw_source_name | |
| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2307.07218 |
| indexed_in | arxiv, datacite |
| authorships[0].author.id | https://openalex.org/A5083611896 |
| authorships[0].author.orcid | https://orcid.org/0009-0007-4158-4689 |
| authorships[0].author.display_name | Ziyue Karen Jiang |
| authorships[0].author_position | first |
| authorships[0].raw_author_name | Jiang, Ziyue |
| authorships[0].is_corresponding | False |
| authorships[1].author.id | https://openalex.org/A5065126806 |
| authorships[1].author.orcid | https://orcid.org/0000-0002-9905-3887 |
| authorships[1].author.display_name | Jinglin Liu |
| authorships[1].author_position | middle |
| authorships[1].raw_author_name | Liu, Jinglin |
| authorships[1].is_corresponding | False |
| authorships[2].author.id | https://openalex.org/A5075486968 |
| authorships[2].author.orcid | https://orcid.org/0000-0002-3665-700X |
| authorships[2].author.display_name | Yi Ren |
| authorships[2].author_position | middle |
| authorships[2].raw_author_name | Ren, Yi |
| authorships[2].is_corresponding | False |
| authorships[3].author.id | https://openalex.org/A5043581249 |
| authorships[3].author.orcid | https://orcid.org/0009-0003-3024-9624 |
| authorships[3].author.display_name | Jinzheng He |
| authorships[3].author_position | middle |
| authorships[3].raw_author_name | He, Jinzheng |
| authorships[3].is_corresponding | False |
| authorships[4].author.id | https://openalex.org/A5100374198 |
| authorships[4].author.orcid | https://orcid.org/0009-0004-3596-4683 |
| authorships[4].author.display_name | Chen Zhang |
| authorships[4].author_position | middle |
| authorships[4].raw_author_name | Zhang, Chen |
| authorships[4].is_corresponding | False |
| authorships[5].author.id | https://openalex.org/A5016502904 |
| authorships[5].author.orcid | https://orcid.org/0000-0002-7105-014X |
| authorships[5].author.display_name | Zhenhui Ye |
| authorships[5].author_position | middle |
| authorships[5].raw_author_name | Ye, Zhenhui |
| authorships[5].is_corresponding | False |
| authorships[6].author.id | https://openalex.org/A5015951797 |
| authorships[6].author.orcid | https://orcid.org/0000-0002-1155-6085 |
| authorships[6].author.display_name | Pengfei Wei |
| authorships[6].author_position | middle |
| authorships[6].raw_author_name | Wei, Pengfei |
| authorships[6].is_corresponding | False |
| authorships[7].author.id | https://openalex.org/A5100601920 |
| authorships[7].author.orcid | https://orcid.org/0000-0001-9676-8425 |
| authorships[7].author.display_name | Chunfeng Wang |
| authorships[7].author_position | middle |
| authorships[7].raw_author_name | Wang, Chunfeng |
| authorships[7].is_corresponding | False |
| authorships[8].author.id | https://openalex.org/A5100446849 |
| authorships[8].author.orcid | https://orcid.org/0000-0002-6096-9943 |
| authorships[8].author.display_name | Xiang Yin |
| authorships[8].author_position | middle |
| authorships[8].raw_author_name | Yin, Xiang |
| authorships[8].is_corresponding | False |
| authorships[9].author.id | https://openalex.org/A5101108160 |
| authorships[9].author.orcid | |
| authorships[9].author.display_name | Zejun Ma |
| authorships[9].author_position | middle |
| authorships[9].raw_author_name | Ma, Zejun |
| authorships[9].is_corresponding | False |
| authorships[10].author.id | https://openalex.org/A5079260216 |
| authorships[10].author.orcid | https://orcid.org/0000-0001-6121-0384 |
| authorships[10].author.display_name | Zhou Zhao |
| authorships[10].author_position | last |
| authorships[10].raw_author_name | Zhao, Zhou |
| authorships[10].is_corresponding | False |
| has_content.pdf | False |
| has_content.grobid_xml | False |
| is_paratext | False |
| open_access.is_oa | True |
| open_access.oa_url | https://arxiv.org/pdf/2307.07218 |
| open_access.oa_status | green |
| open_access.any_repository_has_fulltext | False |
| created_date | 2023-07-18T00:00:00 |
| display_name | Mega-TTS 2: Boosting Prompting Mechanisms for Zero-Shot Speech Synthesis |
| has_fulltext | False |
| is_retracted | False |
| updated_date | 2025-11-06T06:51:31.235846 |
| primary_topic.id | https://openalex.org/T10201 |
| primary_topic.field.id | https://openalex.org/fields/17 |
| primary_topic.field.display_name | Computer Science |
| primary_topic.score | 0.9987999796867371 |
| primary_topic.domain.id | https://openalex.org/domains/3 |
| primary_topic.domain.display_name | Physical Sciences |
| primary_topic.subfield.id | https://openalex.org/subfields/1702 |
| primary_topic.subfield.display_name | Artificial Intelligence |
| primary_topic.display_name | Speech Recognition and Synthesis |
| related_works | https://openalex.org/W3013693939, https://openalex.org/W2159052453, https://openalex.org/W2566616303, https://openalex.org/W3131327266, https://openalex.org/W2734887215, https://openalex.org/W4297051394, https://openalex.org/W2752972570, https://openalex.org/W2406877384, https://openalex.org/W2145836866, https://openalex.org/W2803255133 |
| cited_by_count | 8 |
| counts_by_year[0].year | 2025 |
| counts_by_year[0].cited_by_count | 2 |
| counts_by_year[1].year | 2024 |
| counts_by_year[1].cited_by_count | 6 |
| locations_count | 2 |
| best_oa_location.id | pmh:oai:arXiv.org:2307.07218 |
| best_oa_location.is_oa | True |
| best_oa_location.source.id | https://openalex.org/S4306400194 |
| best_oa_location.source.issn | |
| best_oa_location.source.type | repository |
| best_oa_location.source.is_oa | True |
| best_oa_location.source.issn_l | |
| best_oa_location.source.is_core | False |
| best_oa_location.source.is_in_doaj | False |
| best_oa_location.source.display_name | arXiv (Cornell University) |
| best_oa_location.source.host_organization | https://openalex.org/I205783295 |
| best_oa_location.source.host_organization_name | Cornell University |
| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| best_oa_location.license | |
| best_oa_location.pdf_url | https://arxiv.org/pdf/2307.07218 |
| best_oa_location.version | submittedVersion |
| best_oa_location.raw_type | text |
| best_oa_location.license_id | |
| best_oa_location.is_accepted | False |
| best_oa_location.is_published | False |
| best_oa_location.raw_source_name | |
| best_oa_location.landing_page_url | http://arxiv.org/abs/2307.07218 |
| primary_location.id | pmh:oai:arXiv.org:2307.07218 |
| primary_location.is_oa | True |
| primary_location.source.id | https://openalex.org/S4306400194 |
| primary_location.source.issn | |
| primary_location.source.type | repository |
| primary_location.source.is_oa | True |
| primary_location.source.issn_l | |
| primary_location.source.is_core | False |
| primary_location.source.is_in_doaj | False |
| primary_location.source.display_name | arXiv (Cornell University) |
| primary_location.source.host_organization | https://openalex.org/I205783295 |
| primary_location.source.host_organization_name | Cornell University |
| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| primary_location.license | |
| primary_location.pdf_url | https://arxiv.org/pdf/2307.07218 |
| primary_location.version | submittedVersion |
| primary_location.raw_type | text |
| primary_location.license_id | |
| primary_location.is_accepted | False |
| primary_location.is_published | False |
| primary_location.raw_source_name | |
| primary_location.landing_page_url | http://arxiv.org/abs/2307.07218 |
| publication_date | 2023-07-14 |
| publication_year | 2023 |
| referenced_works_count | 0 |
| abstract_inverted_index.2 | 168 |
| abstract_inverted_index.5 | 202 |
| abstract_inverted_index.a | 90, 105, 129, 134, 176, 218 |
| abstract_inverted_index.1) | 41 |
| abstract_inverted_index.10 | 199 |
| abstract_inverted_index.2) | 68 |
| abstract_inverted_index.2, | 89 |
| abstract_inverted_index.We | 147 |
| abstract_inverted_index.an | 180 |
| abstract_inverted_index.be | 226 |
| abstract_inverted_index.by | 22 |
| abstract_inverted_index.in | 37, 72, 217, 228 |
| abstract_inverted_index.is | 61, 74 |
| abstract_inverted_index.it | 80 |
| abstract_inverted_index.of | 31, 44, 179, 195 |
| abstract_inverted_index.to | 4, 82, 97, 140, 157, 201, 208, 213 |
| abstract_inverted_index.we | 103, 127 |
| abstract_inverted_index.TTS | 33, 46 |
| abstract_inverted_index.The | 69 |
| abstract_inverted_index.and | 16, 114, 133, 160, 220 |
| abstract_inverted_index.are | 47 |
| abstract_inverted_index.but | 186 |
| abstract_inverted_index.can | 225 |
| abstract_inverted_index.for | 19, 94 |
| abstract_inverted_index.not | 170 |
| abstract_inverted_index.our | 205 |
| abstract_inverted_index.the | 14, 24, 28, 38, 59, 65, 99, 112, 118, 150, 189, 193, 214 |
| abstract_inverted_index.TTS, | 96 |
| abstract_inverted_index.This | 85 |
| abstract_inverted_index.aims | 3 |
| abstract_inverted_index.data | 15, 60, 196 |
| abstract_inverted_index.each | 83 |
| abstract_inverted_index.face | 35 |
| abstract_inverted_index.from | 144, 153, 183, 198 |
| abstract_inverted_index.into | 117 |
| abstract_inverted_index.only | 171 |
| abstract_inverted_index.that | 109, 166 |
| abstract_inverted_index.when | 58, 192 |
| abstract_inverted_index.with | 7, 50, 77, 175 |
| abstract_inverted_index.(TTS) | 2 |
| abstract_inverted_index.Audio | 223 |
| abstract_inverted_index.P-LLM | 155 |
| abstract_inverted_index.Then, | 126 |
| abstract_inverted_index.could | 169 |
| abstract_inverted_index.found | 227 |
| abstract_inverted_index.model | 138 |
| abstract_inverted_index.paper | 86 |
| abstract_inverted_index.short | 177 |
| abstract_inverted_index.space | 121 |
| abstract_inverted_index.still | 34 |
| abstract_inverted_index.their | 56 |
| abstract_inverted_index.voice | 20 |
| abstract_inverted_index.which | 11, 53 |
| abstract_inverted_index.while | 122 |
| abstract_inverted_index.works | 43 |
| abstract_inverted_index.design | 104 |
| abstract_inverted_index.during | 64 |
| abstract_inverted_index.highly | 75 |
| abstract_inverted_index.latent | 120, 136 |
| abstract_inverted_index.making | 79 |
| abstract_inverted_index.method | 191, 206 |
| abstract_inverted_index.other. | 84 |
| abstract_inverted_index.prompt | 178 |
| abstract_inverted_index.ranges | 197 |
| abstract_inverted_index.speech | 9, 174 |
| abstract_inverted_index.stage. | 67 |
| abstract_inverted_index.styles | 212 |
| abstract_inverted_index.tackle | 98 |
| abstract_inverted_index.target | 215 |
| abstract_inverted_index.timbre | 115, 131, 216 |
| abstract_inverted_index.unseen | 8, 181 |
| abstract_inverted_index.useful | 142 |
| abstract_inverted_index.voices | 6 |
| abstract_inverted_index.volume | 194 |
| abstract_inverted_index.(P-LLM) | 139 |
| abstract_inverted_index.cloning | 21 |
| abstract_inverted_index.coupled | 76 |
| abstract_inverted_index.derived | 152 |
| abstract_inverted_index.enables | 207 |
| abstract_inverted_index.encoder | 132 |
| abstract_inverted_index.encodes | 111 |
| abstract_inverted_index.extract | 141 |
| abstract_inverted_index.further | 148 |
| abstract_inverted_index.generic | 91 |
| abstract_inverted_index.manner. | 222 |
| abstract_inverted_index.outputs | 156 |
| abstract_inverted_index.produce | 158 |
| abstract_inverted_index.prompts | 73 |
| abstract_inverted_index.propose | 128 |
| abstract_inverted_index.prosody | 113, 135 |
| abstract_inverted_index.reduces | 13 |
| abstract_inverted_index.results | 164 |
| abstract_inverted_index.samples | 224 |
| abstract_inverted_index.seconds | 200 |
| abstract_inverted_index.sources | 185 |
| abstract_inverted_index.speaker | 182 |
| abstract_inverted_index.timbre, | 78 |
| abstract_inverted_index.trained | 49 |
| abstract_inverted_index.various | 210 |
| abstract_inverted_index.However, | 27 |
| abstract_inverted_index.Mega-TTS | 88, 167 |
| abstract_inverted_index.acoustic | 107 |
| abstract_inverted_index.aspects: | 40 |
| abstract_inverted_index.language | 137 |
| abstract_inverted_index.leverage | 149 |
| abstract_inverted_index.minutes. | 203 |
| abstract_inverted_index.multiple | 154 |
| abstract_inverted_index.powerful | 106 |
| abstract_inverted_index.previous | 42 |
| abstract_inverted_index.process. | 26 |
| abstract_inverted_index.prompts, | 10, 52 |
| abstract_inverted_index.prompts. | 146 |
| abstract_inverted_index.prosodic | 70 |
| abstract_inverted_index.prosody. | 162 |
| abstract_inverted_index.skipping | 23 |
| abstract_inverted_index.speaking | 211 |
| abstract_inverted_index.transfer | 209 |
| abstract_inverted_index.Zero-shot | 0 |
| abstract_inverted_index.arbitrary | 184 |
| abstract_inverted_index.following | 39 |
| abstract_inverted_index.inference | 66 |
| abstract_inverted_index.mechanism | 93 |
| abstract_inverted_index.prompting | 29, 92 |
| abstract_inverted_index.providing | 123 |
| abstract_inverted_index.restricts | 55 |
| abstract_inverted_index.typically | 48 |
| abstract_inverted_index.zero-shot | 32, 45, 95 |
| abstract_inverted_index.challenges | 36 |
| abstract_inverted_index.compressed | 119 |
| abstract_inverted_index.controlled | 221 |
| abstract_inverted_index.introduces | 87 |
| abstract_inverted_index.mechanisms | 30 |
| abstract_inverted_index.outperform | 188 |
| abstract_inverted_index.relatively | 62 |
| abstract_inverted_index.separately | 110 |
| abstract_inverted_index.sufficient | 63 |
| abstract_inverted_index.synthesize | 5, 172 |
| abstract_inverted_index.autoencoder | 108 |
| abstract_inverted_index.challenges. | 101 |
| abstract_inverted_index.computation | 17 |
| abstract_inverted_index.demonstrate | 165 |
| abstract_inverted_index.fine-tuning | 25, 190 |
| abstract_inverted_index.information | 71, 116, 143 |
| abstract_inverted_index.performance | 57 |
| abstract_inverted_index.Experimental | 163 |
| abstract_inverted_index.Furthermore, | 204 |
| abstract_inverted_index.consistently | 187 |
| abstract_inverted_index.controllable | 161 |
| abstract_inverted_index.fine-grained | 219 |
| abstract_inverted_index.high-quality | 124 |
| abstract_inverted_index.requirements | 18 |
| abstract_inverted_index.transferable | 159 |
| abstract_inverted_index.Specifically, | 102 |
| abstract_inverted_index.probabilities | 151 |
| abstract_inverted_index.significantly | 12, 54 |
| abstract_inverted_index.aforementioned | 100 |
| abstract_inverted_index.multi-sentence | 145 |
| abstract_inverted_index.text-to-speech | 1 |
| abstract_inverted_index.untransferable | 81 |
| abstract_inverted_index.multi-reference | 130 |
| abstract_inverted_index.single-sentence | 51 |
| abstract_inverted_index.reconstructions. | 125 |
| abstract_inverted_index.identity-preserving | 173 |
| abstract_inverted_index.https://boostprompt.github.io/boostprompt/. | 229 |
| cited_by_percentile_year | |
| countries_distinct_count | 0 |
| institutions_distinct_count | 11 |
| sustainable_development_goals[0].id | https://metadata.un.org/sdg/16 |
| sustainable_development_goals[0].score | 0.6200000047683716 |
| sustainable_development_goals[0].display_name | Peace, Justice and strong institutions |
| citation_normalized_percentile |
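
The `abstract_inverted_index.*` rows in the payload flatten OpenAlex's inverted-index encoding of the abstract: each key is a whitespace-delimited token (with punctuation still attached, e.g. `prompts,`) and each value lists the 0-based word positions where that token occurs. A minimal Python sketch that rebuilds readable text from such a mapping follows; it assumes the rows have been parsed back into a `dict[str, list[int]]` like the one in OpenAlex's JSON, and `rebuild_abstract` is a hypothetical helper name.

```python
from typing import Dict, List


def rebuild_abstract(inverted_index: Dict[str, List[int]]) -> str:
    """Rebuild plain text from an inverted index of {token: [positions]}."""
    by_position: Dict[int, str] = {}
    for token, positions in inverted_index.items():
        for pos in positions:
            by_position[pos] = token
    # Tokens were split on whitespace, so rejoining them with single spaces
    # restores the text (original line breaks and repeated spaces are lost).
    return " ".join(by_position[pos] for pos in sorted(by_position))


# Entries restricted to positions 0-10, copied from the payload table.
sample_index = {
    "Zero-shot": [0],
    "text-to-speech": [1],
    "(TTS)": [2],
    "aims": [3],
    "to": [4],
    "synthesize": [5],
    "voices": [6],
    "with": [7],
    "unseen": [8],
    "speech": [9],
    "prompts,": [10],
}
print(rebuild_abstract(sample_index))
# prints: Zero-shot text-to-speech (TTS) aims to synthesize voices with unseen speech prompts,
```

Sorting the positions rather than assuming they are contiguous keeps the function working on partial indexes like the sample above.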