Modeling the Internal and Contextual Attention for Self-Supervised Skeleton-Based Action Recognition

2025 · Open Access · DOI: https://doi.org/10.3390/s25216532

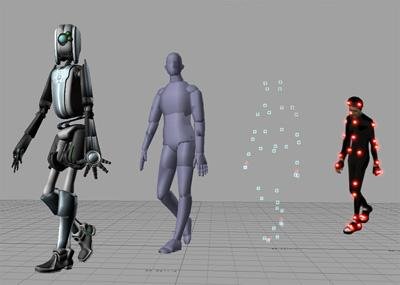

Multimodal contrastive learning has achieved significant performance gains in self-supervised skeleton-based action recognition. Previous methods, however, are limited by modality imbalance, which reduces alignment accuracy and makes it difficult to combine important spatial–temporal frequency patterns. The result is confusion between modalities and weaker feature representations. To overcome these problems, we explore intra-modality feature-wise self-similarity and inter-modality instance-wise cross-consistency, and identify two inherent correlations that benefit recognition: (i) Global Perspective, which expresses how action semantics carry a broad, high-level understanding and thus supports the use of globally discriminative feature representations; and (ii) Focus Adaptation, which refers to the role of the frequency spectrum in guiding attention toward key joints by emphasizing compact and salient signal patterns. Building on these insights, we propose a novel language–skeleton contrastive learning framework with two key components: (a) Feature Modulation, which constructs a skeleton–language action conceptual domain to minimize the expected information gain between the vision and language modalities; and (b) Frequency Feature Learning, which introduces a Frequency-domain Spatial–Temporal block (FreST) that focuses on sparse key human joints whose signal energy is compact in the frequency domain. Extensive experiments show that our method, MICA, achieves remarkable action recognition performance on widely used benchmark datasets, including NTU RGB+D 60 and NTU RGB+D 120. On the challenging PKU-MMD dataset in particular, MICA improves on classical methods such as CrosSCLR and AimCLR by at least 4.6%, demonstrating its ability to capture internal and contextual attention information.
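
The abstract does not give the loss used for language–skeleton alignment. As a rough illustration of what instance-wise contrastive alignment between two modalities usually looks like, here is a minimal sketch of a symmetric InfoNCE loss over paired skeleton and text embeddings. The function names, the temperature value of 0.07, and the use of InfoNCE itself are assumptions made for illustration; they are not taken from the paper.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def symmetric_info_nce(skeleton_embs, text_embs, temperature=0.07):
    """Hypothetical symmetric InfoNCE loss for a batch of paired embeddings.

    Row i of skeleton_embs is assumed to be the positive match for row i of
    text_embs; every other row in the batch acts as a negative.
    """
    s = [l2_normalize(v) for v in skeleton_embs]
    t = [l2_normalize(v) for v in text_embs]
    n = len(s)

    # Cosine similarity matrix scaled by the temperature.
    logits = [
        [sum(a * b for a, b in zip(s[i], t[j])) / temperature for j in range(n)]
        for i in range(n)
    ]

    def mean_cross_entropy(matrix):
        # Cross-entropy with the diagonal entry as the target class,
        # using the log-sum-exp trick for numerical stability.
        total = 0.0
        for i, row in enumerate(matrix):
            row_max = max(row)
            log_sum = row_max + math.log(sum(math.exp(x - row_max) for x in row))
            total += log_sum - row[i]
        return total / len(matrix)

    transposed = [list(col) for col in zip(*logits)]
    # Average the skeleton-to-text and text-to-skeleton directions.
    return 0.5 * (mean_cross_entropy(logits) + mean_cross_entropy(transposed))


aligned = symmetric_info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = symmetric_info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < shuffled)
```

The loss is small when each skeleton embedding is closest to its own text embedding and large when the pairs are mismatched, which is the behaviour an alignment objective of this kind is meant to reward.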

Related Topics

- Type: article

- Language: en

- Landing Page: https://doi.org/10.3390/s25216532, https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961

- OA Status: gold

- References: 60

- OpenAlex ID: https://openalex.org/W4415489811

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4415489811 (canonical identifier for this work in OpenAlex)

- DOI: https://doi.org/10.3390/s25216532 (Digital Object Identifier)

- Title: Modeling the Internal and Contextual Attention for Self-Supervised Skeleton-Based Action Recognition (work title)

- Type: article (OpenAlex work type)

- Language: en (primary language)

- Publication year: 2025 (year of publication)

- Publication date: 2025-10-23 (full publication date if available)

- Authors: Wentian Xin, Yue Teng, Jicai Zhang, Yi Liu, Ruyi Liu, Yuzhi Hu, Qiguang Miao (list of authors in order)

- Landing page: https://doi.org/10.3390/s25216532 (publisher landing page)

- PDF URL: https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 (direct link to full text PDF)

- Open access: Yes (whether a free full text is available)

- OA status: gold (open access status per OpenAlex)

- OA URL: https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 (direct OA link when available)

- Cited by: 0 (total citation count in OpenAlex)

- References (count): 60 (number of works referenced by this work)

Full payload

| id | https://openalex.org/W4415489811 |

|---|---|

| doi | https://doi.org/10.3390/s25216532 |

| ids.doi | https://doi.org/10.3390/s25216532 |

| ids.pmid | https://pubmed.ncbi.nlm.nih.gov/41228754 |

| ids.openalex | https://openalex.org/W4415489811 |

| fwci | 0.0 |

| type | article |

| title | Modeling the Internal and Contextual Attention for Self-Supervised Skeleton-Based Action Recognition |

| biblio.issue | 21 |

| biblio.volume | 25 |

| biblio.last_page | 6532 |

| biblio.first_page | 6532 |

| topics[0].id | https://openalex.org/T10812 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.9933000206947327 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1707 |

| topics[0].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[0].display_name | Human Pose and Action Recognition |

| topics[1].id | https://openalex.org/T11512 |

| topics[1].field.id | https://openalex.org/fields/17 |

| topics[1].field.display_name | Computer Science |

| topics[1].score | 0.9878000020980835 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/1702 |

| topics[1].subfield.display_name | Artificial Intelligence |

| topics[1].display_name | Anomaly Detection Techniques and Applications |

| topics[2].id | https://openalex.org/T10667 |

| topics[2].field.id | https://openalex.org/fields/32 |

| topics[2].field.display_name | Psychology |

| topics[2].score | 0.906499981880188 |

| topics[2].domain.id | https://openalex.org/domains/2 |

| topics[2].domain.display_name | Social Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/3205 |

| topics[2].subfield.display_name | Experimental and Cognitive Psychology |

| topics[2].display_name | Emotion and Mood Recognition |

| is_xpac | False |

| apc_list.value | 2400 |

| apc_list.currency | CHF |

| apc_list.value_usd | 2598 |

| apc_paid.value | 2400 |

| apc_paid.currency | CHF |

| apc_paid.value_usd | 2598 |

| language | en |

| locations[0].id | doi:10.3390/s25216532 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S101949793 |

| locations[0].source.issn | 1424-8220 |

| locations[0].source.type | journal |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | 1424-8220 |

| locations[0].source.is_core | True |

| locations[0].source.is_in_doaj | True |

| locations[0].source.display_name | Sensors |

| locations[0].source.host_organization | https://openalex.org/P4310310987 |

| locations[0].source.host_organization_name | Multidisciplinary Digital Publishing Institute |

| locations[0].source.host_organization_lineage | https://openalex.org/P4310310987 |

| locations[0].source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |

| locations[0].license | cc-by |

| locations[0].pdf_url | https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 |

| locations[0].version | publishedVersion |

| locations[0].raw_type | journal-article |

| locations[0].license_id | https://openalex.org/licenses/cc-by |

| locations[0].is_accepted | True |

| locations[0].is_published | True |

| locations[0].raw_source_name | Sensors |

| locations[0].landing_page_url | https://doi.org/10.3390/s25216532 |

| locations[1].id | pmid:41228754 |

| locations[1].is_oa | False |

| locations[1].source.id | https://openalex.org/S4306525036 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | False |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | PubMed |

| locations[1].source.host_organization | https://openalex.org/I1299303238 |

| locations[1].source.host_organization_name | National Institutes of Health |

| locations[1].source.host_organization_lineage | https://openalex.org/I1299303238 |

| locations[1].license | |

| locations[1].pdf_url | |

| locations[1].version | publishedVersion |

| locations[1].raw_type | |

| locations[1].license_id | |

| locations[1].is_accepted | True |

| locations[1].is_published | True |

| locations[1].raw_source_name | Sensors (Basel, Switzerland) |

| locations[1].landing_page_url | https://pubmed.ncbi.nlm.nih.gov/41228754 |

| locations[2].id | pmh:oai:doaj.org/article:5da24f6cec704f3db128ca8577da635c |

| locations[2].is_oa | False |

| locations[2].source.id | https://openalex.org/S4306401280 |

| locations[2].source.issn | |

| locations[2].source.type | repository |

| locations[2].source.is_oa | False |

| locations[2].source.issn_l | |

| locations[2].source.is_core | False |

| locations[2].source.is_in_doaj | False |

| locations[2].source.display_name | DOAJ (DOAJ: Directory of Open Access Journals) |

| locations[2].source.host_organization | |

| locations[2].source.host_organization_name | |

| locations[2].license | |

| locations[2].pdf_url | |

| locations[2].version | submittedVersion |

| locations[2].raw_type | article |

| locations[2].license_id | |

| locations[2].is_accepted | False |

| locations[2].is_published | False |

| locations[2].raw_source_name | Sensors, Vol 25, Iss 21, p 6532 (2025) |

| locations[2].landing_page_url | https://doaj.org/article/5da24f6cec704f3db128ca8577da635c |

| locations[3].id | pmh:oai:europepmc.org:11419315 |

| locations[3].is_oa | True |

| locations[3].source.id | https://openalex.org/S4306400806 |

| locations[3].source.issn | |

| locations[3].source.type | repository |

| locations[3].source.is_oa | False |

| locations[3].source.issn_l | |

| locations[3].source.is_core | False |

| locations[3].source.is_in_doaj | False |

| locations[3].source.display_name | Europe PMC (PubMed Central) |

| locations[3].source.host_organization | https://openalex.org/I1303153112 |

| locations[3].source.host_organization_name | European Bioinformatics Institute |

| locations[3].source.host_organization_lineage | https://openalex.org/I1303153112 |

| locations[3].license | other-oa |

| locations[3].pdf_url | |

| locations[3].version | submittedVersion |

| locations[3].raw_type | Text |

| locations[3].license_id | https://openalex.org/licenses/other-oa |

| locations[3].is_accepted | False |

| locations[3].is_published | False |

| locations[3].raw_source_name | |

| locations[3].landing_page_url | https://www.ncbi.nlm.nih.gov/pmc/articles/12608129 |

| indexed_in | crossref, doaj, pubmed |

| authorships[0].author.id | https://openalex.org/A5019315893 |

| authorships[0].author.orcid | https://orcid.org/0000-0002-0849-6093 |

| authorships[0].author.display_name | Wentian Xin |

| authorships[0].countries | CN |

| authorships[0].affiliations[0].institution_ids | https://openalex.org/I43313876 |

| authorships[0].affiliations[0].raw_affiliation_string | School of Information Science and Technology, Dalian Maritime University, Dalian 116026, China |

| authorships[0].institutions[0].id | https://openalex.org/I43313876 |

| authorships[0].institutions[0].ror | https://ror.org/002b7nr53 |

| authorships[0].institutions[0].type | education |

| authorships[0].institutions[0].lineage | https://openalex.org/I43313876 |

| authorships[0].institutions[0].country_code | CN |

| authorships[0].institutions[0].display_name | Dalian Maritime University |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Wentian Xin |

| authorships[0].is_corresponding | False |

| authorships[0].raw_affiliation_strings | School of Information Science and Technology, Dalian Maritime University, Dalian 116026, China |

| authorships[1].author.id | https://openalex.org/A5001946688 |

| authorships[1].author.orcid | https://orcid.org/0009-0009-0345-1433 |

| authorships[1].author.display_name | Yue Teng |

| authorships[1].affiliations[0].raw_affiliation_string | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Yue Teng |

| authorships[1].is_corresponding | True |

| authorships[1].raw_affiliation_strings | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[2].author.id | https://openalex.org/A5111692142 |

| authorships[2].author.orcid | |

| authorships[2].author.display_name | Jicai Zhang |

| authorships[2].affiliations[0].raw_affiliation_string | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Jikang Zhang |

| authorships[2].is_corresponding | False |

| authorships[2].raw_affiliation_strings | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[3].author.id | https://openalex.org/A5101797028 |

| authorships[3].author.orcid | https://orcid.org/0009-0000-7044-9514 |

| authorships[3].author.display_name | Yi Liu |

| authorships[3].affiliations[0].raw_affiliation_string | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Yi Liu |

| authorships[3].is_corresponding | False |

| authorships[3].raw_affiliation_strings | Institute of Dataspace, Hefei Comprehensive National Science Center, Hefei 231283, China |

| authorships[4].author.id | https://openalex.org/A5101751409 |

| authorships[4].author.orcid | https://orcid.org/0000-0002-6882-9566 |

| authorships[4].author.display_name | Ruyi Liu |

| authorships[4].countries | CN |

| authorships[4].affiliations[0].institution_ids | https://openalex.org/I149594827 |

| authorships[4].affiliations[0].raw_affiliation_string | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| authorships[4].institutions[0].id | https://openalex.org/I149594827 |

| authorships[4].institutions[0].ror | https://ror.org/05s92vm98 |

| authorships[4].institutions[0].type | education |

| authorships[4].institutions[0].lineage | https://openalex.org/I149594827 |

| authorships[4].institutions[0].country_code | CN |

| authorships[4].institutions[0].display_name | Xidian University |

| authorships[4].author_position | middle |

| authorships[4].raw_author_name | Ruyi Liu |

| authorships[4].is_corresponding | False |

| authorships[4].raw_affiliation_strings | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| authorships[5].author.id | https://openalex.org/A5057854042 |

| authorships[5].author.orcid | |

| authorships[5].author.display_name | Yuzhi Hu |

| authorships[5].countries | CN |

| authorships[5].affiliations[0].institution_ids | https://openalex.org/I149594827 |

| authorships[5].affiliations[0].raw_affiliation_string | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| authorships[5].institutions[0].id | https://openalex.org/I149594827 |

| authorships[5].institutions[0].ror | https://ror.org/05s92vm98 |

| authorships[5].institutions[0].type | education |

| authorships[5].institutions[0].lineage | https://openalex.org/I149594827 |

| authorships[5].institutions[0].country_code | CN |

| authorships[5].institutions[0].display_name | Xidian University |

| authorships[5].author_position | middle |

| authorships[5].raw_author_name | Yuzhi Hu |

| authorships[5].is_corresponding | False |

| authorships[5].raw_affiliation_strings | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| authorships[6].author.id | https://openalex.org/A5007404362 |

| authorships[6].author.orcid | https://orcid.org/0000-0002-2872-388X |

| authorships[6].author.display_name | Qiguang Miao |

| authorships[6].countries | CN |

| authorships[6].affiliations[0].institution_ids | https://openalex.org/I149594827 |

| authorships[6].affiliations[0].raw_affiliation_string | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| authorships[6].institutions[0].id | https://openalex.org/I149594827 |

| authorships[6].institutions[0].ror | https://ror.org/05s92vm98 |

| authorships[6].institutions[0].type | education |

| authorships[6].institutions[0].lineage | https://openalex.org/I149594827 |

| authorships[6].institutions[0].country_code | CN |

| authorships[6].institutions[0].display_name | Xidian University |

| authorships[6].author_position | last |

| authorships[6].raw_author_name | Qiguang Miao |

| authorships[6].is_corresponding | False |

| authorships[6].raw_affiliation_strings | School of Computer Science and Technology, Xidian University, Xi'an 710071, China |

| has_content.pdf | True |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 |

| open_access.oa_status | gold |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-24T00:00:00 |

| display_name | Modeling the Internal and Contextual Attention for Self-Supervised Skeleton-Based Action Recognition |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T03:46:38.306776 |

| primary_topic.id | https://openalex.org/T10812 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.9933000206947327 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1707 |

| primary_topic.subfield.display_name | Computer Vision and Pattern Recognition |

| primary_topic.display_name | Human Pose and Action Recognition |

| cited_by_count | 0 |

| locations_count | 4 |

| best_oa_location.id | doi:10.3390/s25216532 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S101949793 |

| best_oa_location.source.issn | 1424-8220 |

| best_oa_location.source.type | journal |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | 1424-8220 |

| best_oa_location.source.is_core | True |

| best_oa_location.source.is_in_doaj | True |

| best_oa_location.source.display_name | Sensors |

| best_oa_location.source.host_organization | https://openalex.org/P4310310987 |

| best_oa_location.source.host_organization_name | Multidisciplinary Digital Publishing Institute |

| best_oa_location.source.host_organization_lineage | https://openalex.org/P4310310987 |

| best_oa_location.source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |

| best_oa_location.license | cc-by |

| best_oa_location.pdf_url | https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 |

| best_oa_location.version | publishedVersion |

| best_oa_location.raw_type | journal-article |

| best_oa_location.license_id | https://openalex.org/licenses/cc-by |

| best_oa_location.is_accepted | True |

| best_oa_location.is_published | True |

| best_oa_location.raw_source_name | Sensors |

| best_oa_location.landing_page_url | https://doi.org/10.3390/s25216532 |

| primary_location.id | doi:10.3390/s25216532 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S101949793 |

| primary_location.source.issn | 1424-8220 |

| primary_location.source.type | journal |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | 1424-8220 |

| primary_location.source.is_core | True |

| primary_location.source.is_in_doaj | True |

| primary_location.source.display_name | Sensors |

| primary_location.source.host_organization | https://openalex.org/P4310310987 |

| primary_location.source.host_organization_name | Multidisciplinary Digital Publishing Institute |

| primary_location.source.host_organization_lineage | https://openalex.org/P4310310987 |

| primary_location.source.host_organization_lineage_names | Multidisciplinary Digital Publishing Institute |

| primary_location.license | cc-by |

| primary_location.pdf_url | https://www.mdpi.com/1424-8220/25/21/6532/pdf?version=1761220961 |

| primary_location.version | publishedVersion |

| primary_location.raw_type | journal-article |

| primary_location.license_id | https://openalex.org/licenses/cc-by |

| primary_location.is_accepted | True |

| primary_location.is_published | True |

| primary_location.raw_source_name | Sensors |

| primary_location.landing_page_url | https://doi.org/10.3390/s25216532 |

| publication_date | 2025-10-23 |

| publication_year | 2025 |

| referenced_works | https://openalex.org/W3011934803, https://openalex.org/W4400410064, https://openalex.org/W4205427072, https://openalex.org/W4221057901, https://openalex.org/W3127089628, https://openalex.org/W2935497007, https://openalex.org/W2918629098, https://openalex.org/W6884631020, https://openalex.org/W4402753559, https://openalex.org/W4402703028, https://openalex.org/W3170480636, https://openalex.org/W4400188636, https://openalex.org/W4312245820, https://openalex.org/W4296478337, https://openalex.org/W4410444066, https://openalex.org/W3034999503, https://openalex.org/W3119171349, https://openalex.org/W2963076818, https://openalex.org/W2948058585, https://openalex.org/W4399812200, https://openalex.org/W4388073267, https://openalex.org/W4390871852, https://openalex.org/W4399800460, https://openalex.org/W4404035381, https://openalex.org/W3169413442, https://openalex.org/W4382240124, https://openalex.org/W4399404947, https://openalex.org/W4386412320, https://openalex.org/W4410398086, https://openalex.org/W4406857689, https://openalex.org/W4410384582, https://openalex.org/W3092424783, https://openalex.org/W2958360136, https://openalex.org/W4402727681, https://openalex.org/W3103184573, https://openalex.org/W4382459184, https://openalex.org/W4405755225, https://openalex.org/W4386172468, https://openalex.org/W4386065445, https://openalex.org/W4408121553, https://openalex.org/W4406559860, https://openalex.org/W4386215080, https://openalex.org/W4387789756, https://openalex.org/W4406046873, https://openalex.org/W4401009299, https://openalex.org/W7084098655, https://openalex.org/W4401018833, https://openalex.org/W3195429019, https://openalex.org/W4413146761, https://openalex.org/W4389000614, https://openalex.org/W4408534934, https://openalex.org/W3034771037, https://openalex.org/W2944006115, https://openalex.org/W2765433083, https://openalex.org/W4313185874, https://openalex.org/W4390872019, https://openalex.org/W4385768200, https://openalex.org/W4389459415, https://openalex.org/W4312387119, https://openalex.org/W4323897056 |

| referenced_works_count | 60 |

| abstract_inverted_index.a | 72, 116, 131, 153, 210 |

| abstract_inverted_index.60 | 194 |

| abstract_inverted_index.To | 43 |

| abstract_inverted_index.as | 217 |

| abstract_inverted_index.at | 208 |

| abstract_inverted_index.by | 17, 103 |

| abstract_inverted_index.in | 8, 97, 165 |

| abstract_inverted_index.it | 26 |

| abstract_inverted_index.of | 81, 93, 178 |

| abstract_inverted_index.on | 160, 186, 200 |

| abstract_inverted_index.to | 28, 35, 90, 136, 225 |

| abstract_inverted_index.we | 47, 114 |

| abstract_inverted_index.(a) | 126 |

| abstract_inverted_index.(b) | 147 |

| abstract_inverted_index.(i) | 64 |

| abstract_inverted_index.NTU | 192, 196 |

| abstract_inverted_index.and | 24, 39, 52, 56, 74, 106, 144, 195, 219, 228 |

| abstract_inverted_index.are | 15 |

| abstract_inverted_index.has | 3, 206 |

| abstract_inverted_index.how | 68 |

| abstract_inverted_index.its | 223 |

| abstract_inverted_index.key | 101, 124, 162 |

| abstract_inverted_index.our | 179 |

| abstract_inverted_index.the | 79, 91, 94, 138, 166, 176, 201 |

| abstract_inverted_index.two | 58, 123 |

| abstract_inverted_index.use | 80 |

| abstract_inverted_index.(ii) | 86 |

| abstract_inverted_index.120. | 198 |

| abstract_inverted_index.4.6% | 211 |

| abstract_inverted_index.MICA | 205 |

| abstract_inverted_index.gain | 141 |

| abstract_inverted_index.over | 213 |

| abstract_inverted_index.role | 92 |

| abstract_inverted_index.such | 216 |

| abstract_inverted_index.that | 61, 158 |

| abstract_inverted_index.upon | 111 |

| abstract_inverted_index.used | 188 |

| abstract_inverted_index.with | 169 |

| abstract_inverted_index.Focus | 87 |

| abstract_inverted_index.RGB+D | 193, 197 |

| abstract_inverted_index.block | 156 |

| abstract_inverted_index.broad | 73 |

| abstract_inverted_index.carry | 71 |

| abstract_inverted_index.human | 163 |

| abstract_inverted_index.least | 209 |

| abstract_inverted_index.makes | 25 |

| abstract_inverted_index.novel | 117 |

| abstract_inverted_index.these | 45, 112 |

| abstract_inverted_index.which | 20, 77, 129, 151 |

| abstract_inverted_index.Global | 65 |

| abstract_inverted_index.action | 11, 69, 133, 183 |

| abstract_inverted_index.domain | 135, 168 |

| abstract_inverted_index.joints | 102, 164 |

| abstract_inverted_index.method | 180 |

| abstract_inverted_index.refers | 89 |

| abstract_inverted_index.signal | 108, 171 |

| abstract_inverted_index.sparse | 161 |

| abstract_inverted_index.toward | 100 |

| abstract_inverted_index.vision | 143 |

| abstract_inverted_index.weaker | 40 |

| abstract_inverted_index.widely | 187 |

| abstract_inverted_index.(FreST) | 157 |

| abstract_inverted_index.AimCLR, | 220 |

| abstract_inverted_index.Feature | 127, 149 |

| abstract_inverted_index.PKU-MMD | 203 |

| abstract_inverted_index.ability | 224 |

| abstract_inverted_index.benefit | 62 |

| abstract_inverted_index.between | 37, 142 |

| abstract_inverted_index.capture | 226 |

| abstract_inverted_index.combine | 29 |

| abstract_inverted_index.compact | 105, 170 |

| abstract_inverted_index.energy. | 172 |

| abstract_inverted_index.explore | 48 |

| abstract_inverted_index.feature | 41, 84 |

| abstract_inverted_index.focuses | 159 |

| abstract_inverted_index.guiding | 98 |

| abstract_inverted_index.leading | 34 |

| abstract_inverted_index.limited | 16 |

| abstract_inverted_index.methods | 14, 215 |

| abstract_inverted_index.propose | 115 |

| abstract_inverted_index.reduces | 21 |

| abstract_inverted_index.salient | 107 |

| abstract_inverted_index.Building | 110 |

| abstract_inverted_index.CrosSCLR | 218 |

| abstract_inverted_index.Previous | 13 |

| abstract_inverted_index.accuracy | 23 |

| abstract_inverted_index.achieved | 4, 207 |

| abstract_inverted_index.achieves | 181 |

| abstract_inverted_index.dataset, | 204 |

| abstract_inverted_index.discover | 57 |

| abstract_inverted_index.expected | 139 |

| abstract_inverted_index.globally | 82 |

| abstract_inverted_index.inherent | 59 |

| abstract_inverted_index.internal | 227 |

| abstract_inverted_index.language | 145 |

| abstract_inverted_index.learning | 2, 120 |

| abstract_inverted_index.minimize | 137 |

| abstract_inverted_index.modality | 18 |

| abstract_inverted_index.overcome | 44 |

| abstract_inverted_index.spectrum | 96 |

| abstract_inverted_index.supports | 78 |

| abstract_inverted_index.Extensive | 173 |

| abstract_inverted_index.Frequency | 148 |

| abstract_inverted_index.Learning, | 150 |

| abstract_inverted_index.alignment | 22 |

| abstract_inverted_index.attention | 99, 230 |

| abstract_inverted_index.benchmark | 189 |

| abstract_inverted_index.classical | 214 |

| abstract_inverted_index.confusion | 36 |

| abstract_inverted_index.datasets, | 190 |

| abstract_inverted_index.difficult | 27 |

| abstract_inverted_index.expresses | 67 |

| abstract_inverted_index.framework | 121 |

| abstract_inverted_index.frequency | 32, 95, 167 |

| abstract_inverted_index.important | 30 |

| abstract_inverted_index.including | 191 |

| abstract_inverted_index.insights, | 113 |

| abstract_inverted_index.patterns, | 33 |

| abstract_inverted_index.patterns. | 109 |

| abstract_inverted_index.problems, | 46 |

| abstract_inverted_index.semantics | 70 |

| abstract_inverted_index.Adaptation | 88 |

| abstract_inverted_index.Especially | 199 |

| abstract_inverted_index.Multimodal | 0 |

| abstract_inverted_index.advantages | 7 |

| abstract_inverted_index.comprising | 122 |

| abstract_inverted_index.conceptual | 134 |

| abstract_inverted_index.constructs | 130 |

| abstract_inverted_index.contextual | 229 |

| abstract_inverted_index.high-level | 75 |

| abstract_inverted_index.imbalance, | 19 |

| abstract_inverted_index.introduces | 152 |

| abstract_inverted_index.modalities | 38 |

| abstract_inverted_index.remarkable | 182 |

| abstract_inverted_index.Modulation, | 128 |

| abstract_inverted_index.Perspective | 66 |

| abstract_inverted_index.challenging | 202 |

| abstract_inverted_index.components: | 125 |

| abstract_inverted_index.contrastive | 1, 119 |

| abstract_inverted_index.demonstrate | 175 |

| abstract_inverted_index.effectively | 221 |

| abstract_inverted_index.emphasizing | 104 |

| abstract_inverted_index.experiments | 174 |

| abstract_inverted_index.improvement | 212 |

| abstract_inverted_index.information | 140 |

| abstract_inverted_index.modalities. | 146 |

| abstract_inverted_index.performance | 6, 185 |

| abstract_inverted_index.recognition | 184 |

| abstract_inverted_index.significant | 5 |

| abstract_inverted_index.correlations | 60 |

| abstract_inverted_index.feature-wise | 50 |

| abstract_inverted_index.information. | 231 |

| abstract_inverted_index.recognition. | 12 |

| abstract_inverted_index.recognition: | 63 |

| abstract_inverted_index.demonstrating | 222 |

| abstract_inverted_index.effectiveness | 177 |

| abstract_inverted_index.instance-wise | 54 |

| abstract_inverted_index.discriminative | 83 |

| abstract_inverted_index.inter-modality | 53 |

| abstract_inverted_index.intra-modality | 49 |

| abstract_inverted_index.skeleton-based | 10 |

| abstract_inverted_index.understanding, | 76 |

| abstract_inverted_index.self-similarity | 51 |

| abstract_inverted_index.self-supervised | 9 |

| abstract_inverted_index.Frequency-domain | 154 |

| abstract_inverted_index.representations. | 42, 85 |

| abstract_inverted_index.Spatial–Temporal | 155 |

| abstract_inverted_index.cross-consistency, | 55 |

| abstract_inverted_index.spatial–temporal | 31 |

| abstract_inverted_index.language–skeleton | 118 |

| abstract_inverted_index.skeleton–language | 132 |

| cited_by_percentile_year | |

| corresponding_author_ids | https://openalex.org/A5001946688 |

| countries_distinct_count | 1 |

| institutions_distinct_count | 7 |

| citation_normalized_percentile.value | 0.53758804 |

| citation_normalized_percentile.is_in_top_1_percent | False |

| citation_normalized_percentile.is_in_top_10_percent | False |
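
The `abstract_inverted_index.*` rows in the payload store the abstract as a mapping from each token to the word positions where it occurs. The following is a minimal sketch of how that mapping can be turned back into running text; the function name `rebuild_abstract` is hypothetical, and the sample dictionary keeps only positions 0 to 7 from the table for brevity.

```python
def rebuild_abstract(inverted_index):
    """Rebuild text from a token -> list-of-positions mapping."""
    by_position = {}
    for token, positions in inverted_index.items():
        for position in positions:
            by_position[position] = token
    # Emit tokens in ascending position order, separated by single spaces.
    return " ".join(by_position[p] for p in sorted(by_position))


# Tokens at positions 0-7, copied from the abstract_inverted_index rows.
sample = {
    "Multimodal": [0],
    "contrastive": [1],
    "learning": [2],
    "has": [3],
    "achieved": [4],
    "significant": [5],
    "performance": [6],
    "advantages": [7],
}

print(rebuild_abstract(sample))
# prints "Multimodal contrastive learning has achieved significant performance advantages"
```

Punctuation is attached to tokens in the index (for example `recognition.` and `recognition:` are separate keys), so joining on spaces is enough to recover the original sentence boundaries.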