Online Micro-gesture Recognition Using Data Augmentation and Spatial-Temporal Attention

2025 · Open Access · DOI: https://doi.org/10.48550/arxiv.2507.09512

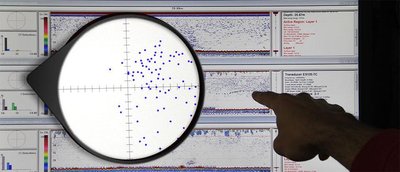

In this paper, we introduce the latest solution developed by our team, HFUT-VUT, for the Micro-gesture Online Recognition track of the IJCAI 2025 MiGA Challenge. The Micro-gesture Online Recognition task is a highly challenging problem that aims to locate the temporal positions and recognize the categories of multiple micro-gesture instances in untrimmed videos. Compared to traditional temporal action detection, this task places greater emphasis on distinguishing between micro-gesture categories and on precisely identifying the start and end times of each instance. Moreover, micro-gestures are typically spontaneous human actions and exhibit greater variation than other human actions. To address these challenges, we propose hand-crafted data augmentation and spatial-temporal attention to help the model classify and localize micro-gestures more accurately. Our solution achieved an F1 score of 38.03, outperforming the previous state of the art by 37.9%. As a result, our method ranked first in the Micro-gesture Online Recognition track.
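The abstract reports an F1 score for a task that combines temporal localization with classification. As an illustration only, the sketch below shows one common way such a score can be computed for detection outputs: each predicted segment is greedily matched to at most one unmatched ground-truth instance of the same class whose temporal IoU (tIoU) meets a threshold, and F1 is the harmonic mean of the resulting precision and recall. The matching rule, the threshold of 0.5, the `(start, end, label)` segment layout, and the names `temporal_iou` and `detection_f1` are all assumptions for this example. The abstract does not specify how the MiGA online recognition track scores predictions, and this is not the authors' evaluation code.

```python
from typing import List, Tuple

# Hypothetical segment layout: (start_time, end_time, class_label).
Segment = Tuple[float, float, int]


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection-over-union of two 1-D time intervals (labels ignored)."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def detection_f1(preds: List[Segment], gts: List[Segment],
                 iou_thr: float = 0.5) -> float:
    """F1 under an assumed greedy one-to-one matching rule.

    A prediction counts as a true positive if it shares its label with a
    not-yet-matched ground-truth instance and their tIoU is >= iou_thr.
    """
    matched = [False] * len(gts)
    tp = 0
    for p in preds:
        best_j, best_iou = -1, iou_thr
        for j, g in enumerate(gts):
            # Skip instances already claimed or of a different class.
            if matched[j] or g[2] != p[2]:
                continue
            iou = temporal_iou(p, g)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

For example, with ground truth `[(0.0, 2.0, 1), (5.0, 7.0, 3)]` and predictions `[(0.2, 2.1, 1), (5.0, 6.0, 2), (10.0, 11.0, 1)]`, only the first prediction matches (tIoU about 0.86), giving precision 1/3, recall 1/2, and F1 0.4. The strict one-to-one matching means duplicate detections of the same instance are penalized as false positives, which is why localizing start and end times precisely matters for this kind of score.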

- Type: preprint
- Language: en
- Landing Page: http://arxiv.org/abs/2507.09512 (PDF: https://arxiv.org/pdf/2507.09512)
- OA Status: green
- OpenAlex ID: https://openalex.org/W4414696231

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4414696231 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.48550/arxiv.2507.09512 (Digital Object Identifier)
- Title: Online Micro-gesture Recognition Using Data Augmentation and Spatial-Temporal Attention (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2025 (year of publication)
- Publication date: 2025-07-13 (full publication date if available)
- Authors: Pengyu Liu, Kun Li, Fei Wang, Yanyan Wei, Junhui She, Dan Guo (list of authors in order)
- Landing page: https://arxiv.org/abs/2507.09512 (publisher landing page)
- PDF URL: https://arxiv.org/pdf/2507.09512 (direct link to full-text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: green (open access status per OpenAlex)
- OA URL: https://arxiv.org/pdf/2507.09512 (direct OA link when available)
- Cited by: 0 (total citation count in OpenAlex)

Full payload

| Field | Value |
|---|---|
| id | https://openalex.org/W4414696231 |
| doi | https://doi.org/10.48550/arxiv.2507.09512 |
| ids.doi | https://doi.org/10.48550/arxiv.2507.09512 |
| ids.openalex | https://openalex.org/W4414696231 |
| fwci | |
| type | preprint |
| title | Online Micro-gesture Recognition Using Data Augmentation and Spatial-Temporal Attention |
| biblio.issue | |
| biblio.volume | |
| biblio.last_page | |
| biblio.first_page | |
| topics[0].id | https://openalex.org/T11398 |
| topics[0].field.id | https://openalex.org/fields/17 |
| topics[0].field.display_name | Computer Science |
| topics[0].score | 0.9986000061035156 |
| topics[0].domain.id | https://openalex.org/domains/3 |
| topics[0].domain.display_name | Physical Sciences |
| topics[0].subfield.id | https://openalex.org/subfields/1709 |
| topics[0].subfield.display_name | Human-Computer Interaction |
| topics[0].display_name | Hand Gesture Recognition Systems |
| topics[1].id | https://openalex.org/T10812 |
| topics[1].field.id | https://openalex.org/fields/17 |
| topics[1].field.display_name | Computer Science |
| topics[1].score | 0.9805999994277954 |
| topics[1].domain.id | https://openalex.org/domains/3 |
| topics[1].domain.display_name | Physical Sciences |
| topics[1].subfield.id | https://openalex.org/subfields/1707 |
| topics[1].subfield.display_name | Computer Vision and Pattern Recognition |
| topics[1].display_name | Human Pose and Action Recognition |
| topics[2].id | https://openalex.org/T12290 |
| topics[2].field.id | https://openalex.org/fields/22 |
| topics[2].field.display_name | Engineering |
| topics[2].score | 0.95169997215271 |
| topics[2].domain.id | https://openalex.org/domains/3 |
| topics[2].domain.display_name | Physical Sciences |
| topics[2].subfield.id | https://openalex.org/subfields/2207 |
| topics[2].subfield.display_name | Control and Systems Engineering |
| topics[2].display_name | Human Motion and Animation |
| is_xpac | False |
| apc_list | |
| apc_paid | |
| language | en |
| locations[0].id | pmh:oai:arXiv.org:2507.09512 |
| locations[0].is_oa | True |
| locations[0].source.id | https://openalex.org/S4306400194 |
| locations[0].source.issn | |
| locations[0].source.type | repository |
| locations[0].source.is_oa | True |
| locations[0].source.issn_l | |
| locations[0].source.is_core | False |
| locations[0].source.is_in_doaj | False |
| locations[0].source.display_name | arXiv (Cornell University) |
| locations[0].source.host_organization | https://openalex.org/I205783295 |
| locations[0].source.host_organization_name | Cornell University |
| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[0].license | |
| locations[0].pdf_url | https://arxiv.org/pdf/2507.09512 |
| locations[0].version | submittedVersion |
| locations[0].raw_type | text |
| locations[0].license_id | |
| locations[0].is_accepted | False |
| locations[0].is_published | False |
| locations[0].raw_source_name | |
| locations[0].landing_page_url | http://arxiv.org/abs/2507.09512 |
| locations[1].id | doi:10.48550/arxiv.2507.09512 |
| locations[1].is_oa | True |
| locations[1].source.id | https://openalex.org/S4306400194 |
| locations[1].source.issn | |
| locations[1].source.type | repository |
| locations[1].source.is_oa | True |
| locations[1].source.issn_l | |
| locations[1].source.is_core | False |
| locations[1].source.is_in_doaj | False |
| locations[1].source.display_name | arXiv (Cornell University) |
| locations[1].source.host_organization | https://openalex.org/I205783295 |
| locations[1].source.host_organization_name | Cornell University |
| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |
| locations[1].license | |
| locations[1].pdf_url | |
| locations[1].version | |
| locations[1].raw_type | article |
| locations[1].license_id | |
| locations[1].is_accepted | False |
| locations[1].is_published | |
| locations[1].raw_source_name | |
| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2507.09512 |
| indexed_in | arxiv, datacite |
| authorships[0].author.id | https://openalex.org/A5108144130 |
| authorships[0].author.orcid | |
| authorships[0].author.display_name | Pengyu Liu |
| authorships[0].author_position | first |
| authorships[0].raw_author_name | Liu, Pengyu |
| authorships[0].is_corresponding | False |
| authorships[1].author.id | https://openalex.org/A5100377578 |
| authorships[1].author.orcid | https://orcid.org/0000-0003-2326-0166 |
| authorships[1].author.display_name | Kun Li |
| authorships[1].author_position | middle |
| authorships[1].raw_author_name | Li, Kun |
| authorships[1].is_corresponding | False |
| authorships[2].author.id | https://openalex.org/A5100455750 |
| authorships[2].author.orcid | https://orcid.org/0000-0001-8432-0009 |
| authorships[2].author.display_name | Fei Wang |
| authorships[2].author_position | middle |
| authorships[2].raw_author_name | Wang, Fei |
| authorships[2].is_corresponding | False |
| authorships[3].author.id | https://openalex.org/A5101143136 |
| authorships[3].author.orcid | |
| authorships[3].author.display_name | Yanyan Wei |
| authorships[3].author_position | middle |
| authorships[3].raw_author_name | Wei, Yanyan |
| authorships[3].is_corresponding | False |
| authorships[4].author.id | https://openalex.org/A5101390823 |
| authorships[4].author.orcid | |
| authorships[4].author.display_name | Junhui She |
| authorships[4].author_position | middle |
| authorships[4].raw_author_name | She, Junhui |
| authorships[4].is_corresponding | False |
| authorships[5].author.id | https://openalex.org/A5059530979 |
| authorships[5].author.orcid | https://orcid.org/0000-0003-2594-254X |
| authorships[5].author.display_name | Dan Guo |
| authorships[5].author_position | last |
| authorships[5].raw_author_name | Guo, Dan |
| authorships[5].is_corresponding | False |
| has_content.pdf | False |
| has_content.grobid_xml | False |
| is_paratext | False |
| open_access.is_oa | True |
| open_access.oa_url | https://arxiv.org/pdf/2507.09512 |
| open_access.oa_status | green |
| open_access.any_repository_has_fulltext | False |
| created_date | 2025-10-10T00:00:00 |
| display_name | Online Micro-gesture Recognition Using Data Augmentation and Spatial-Temporal Attention |
| has_fulltext | False |
| is_retracted | False |
| updated_date | 2025-11-06T06:51:31.235846 |
| primary_topic.id | https://openalex.org/T11398 |
| primary_topic.field.id | https://openalex.org/fields/17 |
| primary_topic.field.display_name | Computer Science |
| primary_topic.score | 0.9986000061035156 |
| primary_topic.domain.id | https://openalex.org/domains/3 |
| primary_topic.domain.display_name | Physical Sciences |
| primary_topic.subfield.id | https://openalex.org/subfields/1709 |
| primary_topic.subfield.display_name | Human-Computer Interaction |
| primary_topic.display_name | Hand Gesture Recognition Systems |
| cited_by_count | 0 |
| locations_count | 2 |
| best_oa_location.id | pmh:oai:arXiv.org:2507.09512 |
| best_oa_location.is_oa | True |
| best_oa_location.source.id | https://openalex.org/S4306400194 |
| best_oa_location.source.issn | |
| best_oa_location.source.type | repository |
| best_oa_location.source.is_oa | True |
| best_oa_location.source.issn_l | |
| best_oa_location.source.is_core | False |
| best_oa_location.source.is_in_doaj | False |
| best_oa_location.source.display_name | arXiv (Cornell University) |
| best_oa_location.source.host_organization | https://openalex.org/I205783295 |
| best_oa_location.source.host_organization_name | Cornell University |
| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| best_oa_location.license | |
| best_oa_location.pdf_url | https://arxiv.org/pdf/2507.09512 |
| best_oa_location.version | submittedVersion |
| best_oa_location.raw_type | text |
| best_oa_location.license_id | |
| best_oa_location.is_accepted | False |
| best_oa_location.is_published | False |
| best_oa_location.raw_source_name | |
| best_oa_location.landing_page_url | http://arxiv.org/abs/2507.09512 |
| primary_location.id | pmh:oai:arXiv.org:2507.09512 |
| primary_location.is_oa | True |
| primary_location.source.id | https://openalex.org/S4306400194 |
| primary_location.source.issn | |
| primary_location.source.type | repository |
| primary_location.source.is_oa | True |
| primary_location.source.issn_l | |
| primary_location.source.is_core | False |
| primary_location.source.is_in_doaj | False |
| primary_location.source.display_name | arXiv (Cornell University) |
| primary_location.source.host_organization | https://openalex.org/I205783295 |
| primary_location.source.host_organization_name | Cornell University |
| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |
| primary_location.license | |
| primary_location.pdf_url | https://arxiv.org/pdf/2507.09512 |
| primary_location.version | submittedVersion |
| primary_location.raw_type | text |
| primary_location.license_id | |
| primary_location.is_accepted | False |
| primary_location.is_published | False |
| primary_location.raw_source_name | |
| primary_location.landing_page_url | http://arxiv.org/abs/2507.09512 |
| publication_date | 2025-07-13 |
| publication_year | 2025 |
| referenced_works_count | 0 |
| abstract_inverted_index.a | 31, 136 |
| abstract_inverted_index.As | 135 |
| abstract_inverted_index.F1 | 125 |
| abstract_inverted_index.In | 0 |
| abstract_inverted_index.To | 97 |
| abstract_inverted_index.an | 124 |
| abstract_inverted_index.by | 9, 133 |
| abstract_inverted_index.in | 50, 93, 142 |
| abstract_inverted_index.is | 30 |
| abstract_inverted_index.of | 19, 46, 77, 127 |
| abstract_inverted_index.on | 64 |
| abstract_inverted_index.to | 37, 54, 109, 114 |
| abstract_inverted_index.we | 3, 101 |
| abstract_inverted_index.Our | 121 |
| abstract_inverted_index.The | 25 |
| abstract_inverted_index.and | 42, 69, 74, 106, 116 |
| abstract_inverted_index.are | 82 |
| abstract_inverted_index.end | 75 |
| abstract_inverted_index.for | 13 |
| abstract_inverted_index.our | 10, 138 |
| abstract_inverted_index.the | 5, 14, 20, 39, 44, 72, 111, 130, 143 |
| abstract_inverted_index.2025 | 22 |
| abstract_inverted_index.MiGA | 23 |
| abstract_inverted_index.aims | 36 |
| abstract_inverted_index.data | 104 |
| abstract_inverted_index.each | 78 |
| abstract_inverted_index.more | 119 |
| abstract_inverted_index.task | 29, 60 |
| abstract_inverted_index.than | 90 |
| abstract_inverted_index.that | 35 |
| abstract_inverted_index.this | 1, 59 |
| abstract_inverted_index.with | 87 |
| abstract_inverted_index.IJCAI | 21 |
| abstract_inverted_index.first | 141 |
| abstract_inverted_index.found | 92 |
| abstract_inverted_index.human | 85, 95 |
| abstract_inverted_index.other | 94 |
| abstract_inverted_index.score | 126 |
| abstract_inverted_index.start | 73 |
| abstract_inverted_index.team, | 11 |
| abstract_inverted_index.these | 99 |
| abstract_inverted_index.those | 91 |
| abstract_inverted_index.times | 76 |
| abstract_inverted_index.track | 18 |
| abstract_inverted_index.37.9%. | 134 |
| abstract_inverted_index.38.03, | 128 |
| abstract_inverted_index.Online | 16, 27, 145 |
| abstract_inverted_index.action | 57 |
| abstract_inverted_index.highly | 32 |
| abstract_inverted_index.latest | 6 |
| abstract_inverted_index.locate | 38 |
| abstract_inverted_index.method | 139 |
| abstract_inverted_index.paper, | 2 |
| abstract_inverted_index.places | 61 |
| abstract_inverted_index.ranked | 140 |
| abstract_inverted_index.track. | 147 |
| abstract_inverted_index.ability | 113 |
| abstract_inverted_index.address | 98 |
| abstract_inverted_index.between | 66 |
| abstract_inverted_index.enhance | 110 |
| abstract_inverted_index.greater | 62, 88 |
| abstract_inverted_index.model's | 112 |
| abstract_inverted_index.problem | 34 |
| abstract_inverted_index.propose | 102 |
| abstract_inverted_index.result, | 137 |
| abstract_inverted_index.videos. | 52 |
| abstract_inverted_index.Compared | 53 |
| abstract_inverted_index.achieved | 123 |
| abstract_inverted_index.actions, | 86 |
| abstract_inverted_index.actions. | 96 |
| abstract_inverted_index.classify | 115 |
| abstract_inverted_index.emphasis | 63 |
| abstract_inverted_index.localize | 117 |
| abstract_inverted_index.multiple | 47 |
| abstract_inverted_index.previous | 131 |
| abstract_inverted_index.solution | 7, 122 |
| abstract_inverted_index.temporal | 40, 56 |
| abstract_inverted_index.HFUT-VUT, | 12 |
| abstract_inverted_index.Moreover, | 80 |
| abstract_inverted_index.attention | 108 |
| abstract_inverted_index.developed | 8 |
| abstract_inverted_index.instance. | 79 |
| abstract_inverted_index.instances | 49 |
| abstract_inverted_index.introduce | 4 |
| abstract_inverted_index.positions | 41 |
| abstract_inverted_index.precisely | 70 |
| abstract_inverted_index.recognize | 43 |
| abstract_inverted_index.typically | 83 |
| abstract_inverted_index.untrimmed | 51 |
| abstract_inverted_index.Challenge. | 24 |
| abstract_inverted_index.categories | 45, 68 |
| abstract_inverted_index.detection, | 58 |
| abstract_inverted_index.Recognition | 17, 28, 146 |
| abstract_inverted_index.accurately. | 120 |
| abstract_inverted_index.challenges, | 100 |
| abstract_inverted_index.challenging | 33 |
| abstract_inverted_index.differences | 89 |
| abstract_inverted_index.identifying | 71 |
| abstract_inverted_index.spontaneous | 84 |
| abstract_inverted_index.traditional | 55 |
| abstract_inverted_index.augmentation | 105 |
| abstract_inverted_index.hand-crafted | 103 |
| abstract_inverted_index.Micro-gesture | 15, 26, 144 |
| abstract_inverted_index.micro-gesture | 48, 67 |
| abstract_inverted_index.outperforming | 129 |
| abstract_inverted_index.distinguishing | 65 |
| abstract_inverted_index.micro-gestures | 81, 118 |
| abstract_inverted_index.spatial-temporal | 107 |
| abstract_inverted_index.state-of-the-art | 132 |
| cited_by_percentile_year | |
| countries_distinct_count | 0 |
| institutions_distinct_count | 6 |
| citation_normalized_percentile |