Predicting brain activity using Transformers

· 2023

· Open Access

· DOI: https://doi.org/10.1101/2023.08.02.551743

· OA: W4385604837

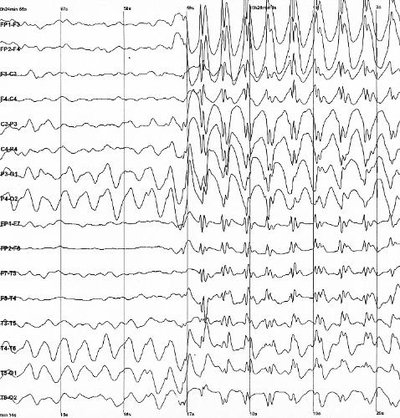

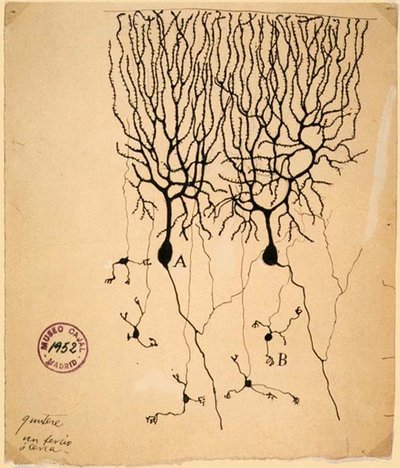

The Algonauts challenge [Gifford et al., 2023] called on the community to provide novel solutions for predicting the brain activity of humans viewing natural scenes. This report provides an overview and technical details of our submitted solution. We use a general transformer encoder-decoder model to map images to fMRI responses. The encoder is a vision transformer trained with self-supervised methods (DINOv2). The decoder uses queries corresponding to different brain regions of interest (ROIs) in different hemispheres to gather relevant information from the encoder output for predicting neural activity in each ROI. The output tokens from the decoder are then linearly mapped to fMRI activity. The predictive success (challenge score: 63.5229, rank 2) suggests that features from self-supervised transformers may deserve consideration as models of human visual brain representations, and it shows the effectiveness of transformer mechanisms (self- and cross-attention) for learning the mapping from features to brain responses. Code is available in this GitHub repository.
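The ROI-query decoding step described above can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a single cross-attention layer with one head, random weights, and random arrays standing in for the DINOv2 patch tokens, and it omits self-attention among queries, normalization, and training. All names (`roi_query_readout`, `roi_sizes`, `w_k`, `w_v`, `readouts`) and all dimensions are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def roi_query_readout(patch_tokens, roi_queries, w_k, w_v, readouts):
    """One cross-attention step followed by a per-ROI linear readout.

    patch_tokens: (num_patches, dim) encoder output (stand-in for DINOv2 features)
    roi_queries:  (num_rois, dim) one learned query per ROI/hemisphere pair
    w_k, w_v:     (dim, dim) key and value projections
    readouts:     list of (dim, num_vertices_in_roi) linear maps, one per ROI
    """
    keys = patch_tokens @ w_k
    values = patch_tokens @ w_v
    # Each ROI query scores every image patch (scaled dot-product attention).
    scores = roi_queries @ keys.T / np.sqrt(keys.shape[-1])
    attn = softmax(scores, axis=-1)      # (num_rois, num_patches)
    roi_tokens = attn @ values           # (num_rois, dim)
    # Each decoder output token is linearly mapped to that ROI's fMRI responses.
    preds = [roi_tokens[i] @ readouts[i] for i in range(len(readouts))]
    return preds, attn

rng = np.random.default_rng(0)
dim, num_patches = 16, 49
roi_sizes = [5, 8, 3]  # hypothetical number of vertices per ROI

patch_tokens = rng.normal(size=(num_patches, dim))
roi_queries = rng.normal(size=(len(roi_sizes), dim))
w_k = rng.normal(size=(dim, dim)) / np.sqrt(dim)
w_v = rng.normal(size=(dim, dim)) / np.sqrt(dim)
readouts = [rng.normal(size=(dim, n)) for n in roi_sizes]

preds, attn = roi_query_readout(patch_tokens, roi_queries, w_k, w_v, readouts)
```

In this sketch each row of `attn` is a distribution over image patches for one ROI query, so it can be inspected to see which patches a given ROI draws on. In a trained model the queries, projections, and readouts would be learned jointly from image and fMRI pairs.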