Time Series Human Motion Prediction Using RGB Camera and OpenPose

2020 · Open Access · DOI: https://doi.org/10.5057/isase.2020-c000037

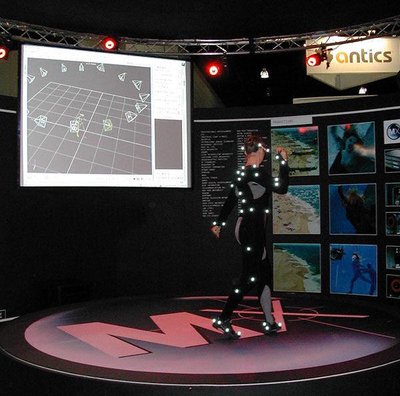

The report projects that by 2050, the population aged 60 and above will reach 2.1 billion. This aging society is more likely to suffer from locomotive syndrome. To reduce the spread of locomotive syndrome, it is best to increase awareness before citizens become elderly. We propose a system that predicts human motion as a first step toward locomotive syndrome estimation. Previous research used the Kinect camera, whose depth sensor is used to detect the pose of a human body. In this research, however, we use an RGB camera as a reliable alternative. We set the goal of predicting motion 1 second ahead, covering simple motions such as hand gestures and walking. We used OpenPose to extract the features of a human body pose, comprising 14 points. YOLOv3 is used to crop the main feature in each frame before OpenPose processes it. Distance and direction, calculated from the features by comparing two consecutive frames, serve as the input to a Recurrent Neural Network Long Short-Term Memory (RNN-LSTM) model and a Kalman filter. In most cases the Kalman filter shows better accuracy than the RNN-LSTM. Among the motions, a hand gesture combined with moving to the right side is easier to predict than the more complex hand gesture combined with moving to the left side. The results confirm the validity of the RGB-camera-based method for simple human motion.
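The feature step and the one-second-ahead prediction described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the `(14, 2)` array layout for the keypoints, the function names `motion_features` and `kalman_predict_ahead`, the constant-velocity motion model, the noise parameters `q` and `r`, and the assumption that 30 frames correspond to 1 second are all hypothetical choices made for illustration. The abstract states that distance and direction are the filter inputs; for simplicity this sketch filters a single coordinate track instead.

```python
import numpy as np

def motion_features(prev_pts, curr_pts):
    """Per-keypoint distance and direction between two consecutive frames.

    prev_pts, curr_pts: arrays of shape (14, 2) with (x, y) pixel coordinates
    (assumed layout; the paper's exact representation is not given here).
    """
    prev_pts = np.asarray(prev_pts, dtype=float)
    curr_pts = np.asarray(curr_pts, dtype=float)
    delta = curr_pts - prev_pts
    distance = np.hypot(delta[:, 0], delta[:, 1])      # Euclidean displacement
    direction = np.arctan2(delta[:, 1], delta[:, 0])   # angle in radians
    return distance, direction

def kalman_predict_ahead(track, horizon_frames=30, q=1e-2, r=1.0):
    """Filter one coordinate track with a constant-velocity Kalman filter,
    then extrapolate `horizon_frames` steps ahead (time unit: one frame)."""
    F = np.array([[1.0, 1.0], [0.0, 1.0]])  # state transition for [pos, vel]
    H = np.array([[1.0, 0.0]])              # only the position is observed
    Q = q * np.eye(2)                       # process noise (assumed value)
    R = np.array([[r]])                     # measurement noise (assumed value)
    x = np.array([float(track[0]), 0.0])    # initial state: zero velocity
    P = 1e3 * np.eye(2)                     # large initial uncertainty
    for z in track[1:]:
        # Predict step
        x = F @ x
        P = F @ P @ F.T + Q
        # Update step
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
    # Extrapolate without further measurements
    x_future = np.linalg.matrix_power(F, horizon_frames) @ x
    return float(x_future[0])

# Usage: every keypoint shifts by (3, 4) pixels between two frames.
prev = np.zeros((14, 2))
curr = prev + np.array([3.0, 4.0])
distance, direction = motion_features(prev, curr)

# Usage: a coordinate moving 2 pixels per frame for 60 frames.
track = [2.0 * t for t in range(60)]
pred = kalman_predict_ahead(track, horizon_frames=30)
```

In the first usage example each keypoint moves 5 pixels. In the second, the extrapolated position should land close to the straight-line continuation of the track, since the synthetic data matches the constant-velocity model exactly.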

Related Topics

- Type: article
- Language: en
- Landing Page: https://doi.org/10.5057/isase.2020-c000037, https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf
- OA Status: diamond
- Cited By: 4
- References: 8
- Related Works: 10
- OpenAlex ID: https://openalex.org/W3023489980

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W3023489980
- DOI: https://doi.org/10.5057/isase.2020-c000037
- Title: Time Series Human Motion Prediction Using RGB Camera and OpenPose
- Type: article
- Language: en
- Publication year: 2020
- Publication date: 2020-01-01
- Authors: Andi Prademon Yunus, Nobu C. Shirai, Kento Morita, Tetsushi Wakabayashi
- Landing page: https://doi.org/10.5057/isase.2020-c000037
- PDF URL: https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf
- Open access: Yes
- OA status: diamond
- OA URL: https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf
- Concepts: Artificial intelligence, Computer science, Computer vision, RGB color model, Kalman filter, Gesture, Motion (physics), Gesture recognition, Motion capture, Motion estimation, Frame (networking), Telecommunications
- Cited by: 4
- Citations by year (recent): 2025: 1, 2023: 1, 2022: 2
- References (count): 8
- Related works (count): 10

Full payload

| field | value |
|---|---|
| id | https://openalex.org/W3023489980 |

| doi | https://doi.org/10.5057/isase.2020-c000037 |

| ids.doi | https://doi.org/10.5057/isase.2020-c000037 |

| ids.mag | 3023489980 |

| ids.openalex | https://openalex.org/W3023489980 |

| fwci | 0.31490195 |

| type | article |

| title | Time Series Human Motion Prediction Using RGB Camera and OpenPose |

| biblio.issue | 0 |

| biblio.volume | ISASE2020 |

| biblio.last_page | 4 |

| biblio.first_page | 1 |

| topics[0].id | https://openalex.org/T10812 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.9959999918937683 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1707 |

| topics[0].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[0].display_name | Human Pose and Action Recognition |

| topics[1].id | https://openalex.org/T11775 |

| topics[1].field.id | https://openalex.org/fields/27 |

| topics[1].field.display_name | Medicine |

| topics[1].score | 0.9937999844551086 |

| topics[1].domain.id | https://openalex.org/domains/4 |

| topics[1].domain.display_name | Health Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/2741 |

| topics[1].subfield.display_name | Radiology, Nuclear Medicine and Imaging |

| topics[1].display_name | COVID-19 diagnosis using AI |

| topics[2].id | https://openalex.org/T14510 |

| topics[2].field.id | https://openalex.org/fields/22 |

| topics[2].field.display_name | Engineering |

| topics[2].score | 0.993399977684021 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/2204 |

| topics[2].subfield.display_name | Biomedical Engineering |

| topics[2].display_name | Medical Imaging and Analysis |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C154945302 |

| concepts[0].level | 1 |

| concepts[0].score | 0.7718614339828491 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[0].display_name | Artificial intelligence |

| concepts[1].id | https://openalex.org/C41008148 |

| concepts[1].level | 0 |

| concepts[1].score | 0.7582510709762573 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[1].display_name | Computer science |

| concepts[2].id | https://openalex.org/C31972630 |

| concepts[2].level | 1 |

| concepts[2].score | 0.7377495765686035 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q844240 |

| concepts[2].display_name | Computer vision |

| concepts[3].id | https://openalex.org/C82990744 |

| concepts[3].level | 2 |

| concepts[3].score | 0.6398991346359253 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q166194 |

| concepts[3].display_name | RGB color model |

| concepts[4].id | https://openalex.org/C157286648 |

| concepts[4].level | 2 |

| concepts[4].score | 0.5715863108634949 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q846780 |

| concepts[4].display_name | Kalman filter |

| concepts[5].id | https://openalex.org/C207347870 |

| concepts[5].level | 2 |

| concepts[5].score | 0.5602632164955139 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q371174 |

| concepts[5].display_name | Gesture |

| concepts[6].id | https://openalex.org/C104114177 |

| concepts[6].level | 2 |

| concepts[6].score | 0.5453135967254639 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q79782 |

| concepts[6].display_name | Motion (physics) |

| concepts[7].id | https://openalex.org/C159437735 |

| concepts[7].level | 3 |

| concepts[7].score | 0.4917295277118683 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q1519524 |

| concepts[7].display_name | Gesture recognition |

| concepts[8].id | https://openalex.org/C48007421 |

| concepts[8].level | 3 |

| concepts[8].score | 0.4686878025531769 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q676252 |

| concepts[8].display_name | Motion capture |

| concepts[9].id | https://openalex.org/C10161872 |

| concepts[9].level | 2 |

| concepts[9].score | 0.44072359800338745 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q557891 |

| concepts[9].display_name | Motion estimation |

| concepts[10].id | https://openalex.org/C126042441 |

| concepts[10].level | 2 |

| concepts[10].score | 0.41963496804237366 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q1324888 |

| concepts[10].display_name | Frame (networking) |

| concepts[11].id | https://openalex.org/C76155785 |

| concepts[11].level | 1 |

| concepts[11].score | 0.0 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q418 |

| concepts[11].display_name | Telecommunications |

| keywords[0].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[0].score | 0.7718614339828491 |

| keywords[0].display_name | Artificial intelligence |

| keywords[1].id | https://openalex.org/keywords/computer-science |

| keywords[1].score | 0.7582510709762573 |

| keywords[1].display_name | Computer science |

| keywords[2].id | https://openalex.org/keywords/computer-vision |

| keywords[2].score | 0.7377495765686035 |

| keywords[2].display_name | Computer vision |

| keywords[3].id | https://openalex.org/keywords/rgb-color-model |

| keywords[3].score | 0.6398991346359253 |

| keywords[3].display_name | RGB color model |

| keywords[4].id | https://openalex.org/keywords/kalman-filter |

| keywords[4].score | 0.5715863108634949 |

| keywords[4].display_name | Kalman filter |

| keywords[5].id | https://openalex.org/keywords/gesture |

| keywords[5].score | 0.5602632164955139 |

| keywords[5].display_name | Gesture |

| keywords[6].id | https://openalex.org/keywords/motion |

| keywords[6].score | 0.5453135967254639 |

| keywords[6].display_name | Motion (physics) |

| keywords[7].id | https://openalex.org/keywords/gesture-recognition |

| keywords[7].score | 0.4917295277118683 |

| keywords[7].display_name | Gesture recognition |

| keywords[8].id | https://openalex.org/keywords/motion-capture |

| keywords[8].score | 0.4686878025531769 |

| keywords[8].display_name | Motion capture |

| keywords[9].id | https://openalex.org/keywords/motion-estimation |

| keywords[9].score | 0.44072359800338745 |

| keywords[9].display_name | Motion estimation |

| keywords[10].id | https://openalex.org/keywords/frame |

| keywords[10].score | 0.41963496804237366 |

| keywords[10].display_name | Frame (networking) |

| language | en |

| locations[0].id | doi:10.5057/isase.2020-c000037 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4210221424 |

| locations[0].source.issn | 2433-5428 |

| locations[0].source.type | journal |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | 2433-5428 |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | International Symposium on Affective Science and Engineering |

| locations[0].source.host_organization | |

| locations[0].source.host_organization_name | |

| locations[0].license | |

| locations[0].pdf_url | https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf |

| locations[0].version | publishedVersion |

| locations[0].raw_type | journal-article |

| locations[0].license_id | |

| locations[0].is_accepted | True |

| locations[0].is_published | True |

| locations[0].raw_source_name | International Symposium on Affective Science and Engineering |

| locations[0].landing_page_url | https://doi.org/10.5057/isase.2020-c000037 |

| indexed_in | crossref |

| authorships[0].author.id | https://openalex.org/A5006976140 |

| authorships[0].author.orcid | https://orcid.org/0000-0002-1185-5763 |

| authorships[0].author.display_name | Andi Prademon Yunus |

| authorships[0].countries | JP |

| authorships[0].affiliations[0].institution_ids | https://openalex.org/I178574317 |

| authorships[0].affiliations[0].raw_affiliation_string | Mie University |

| authorships[0].institutions[0].id | https://openalex.org/I178574317 |

| authorships[0].institutions[0].ror | https://ror.org/01529vy56 |

| authorships[0].institutions[0].type | education |

| authorships[0].institutions[0].lineage | https://openalex.org/I178574317 |

| authorships[0].institutions[0].country_code | JP |

| authorships[0].institutions[0].display_name | Mie University |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Andi Prademon YUNUS |

| authorships[0].is_corresponding | False |

| authorships[0].raw_affiliation_strings | Mie University |

| authorships[1].author.id | https://openalex.org/A5012209696 |

| authorships[1].author.orcid | https://orcid.org/0000-0001-7641-6190 |

| authorships[1].author.display_name | Nobu C. Shirai |

| authorships[1].countries | JP |

| authorships[1].affiliations[0].institution_ids | https://openalex.org/I178574317 |

| authorships[1].affiliations[0].raw_affiliation_string | Mie University |

| authorships[1].institutions[0].id | https://openalex.org/I178574317 |

| authorships[1].institutions[0].ror | https://ror.org/01529vy56 |

| authorships[1].institutions[0].type | education |

| authorships[1].institutions[0].lineage | https://openalex.org/I178574317 |

| authorships[1].institutions[0].country_code | JP |

| authorships[1].institutions[0].display_name | Mie University |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Nobu C. SHIRAI |

| authorships[1].is_corresponding | False |

| authorships[1].raw_affiliation_strings | Mie University |

| authorships[2].author.id | https://openalex.org/A5040874965 |

| authorships[2].author.orcid | https://orcid.org/0000-0002-7171-8197 |

| authorships[2].author.display_name | Kento Morita |

| authorships[2].countries | JP |

| authorships[2].affiliations[0].institution_ids | https://openalex.org/I178574317 |

| authorships[2].affiliations[0].raw_affiliation_string | Mie University |

| authorships[2].institutions[0].id | https://openalex.org/I178574317 |

| authorships[2].institutions[0].ror | https://ror.org/01529vy56 |

| authorships[2].institutions[0].type | education |

| authorships[2].institutions[0].lineage | https://openalex.org/I178574317 |

| authorships[2].institutions[0].country_code | JP |

| authorships[2].institutions[0].display_name | Mie University |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Kento MORITA |

| authorships[2].is_corresponding | False |

| authorships[2].raw_affiliation_strings | Mie University |

| authorships[3].author.id | https://openalex.org/A5111490360 |

| authorships[3].author.orcid | |

| authorships[3].author.display_name | Tetsushi Wakabayashi |

| authorships[3].countries | JP |

| authorships[3].affiliations[0].institution_ids | https://openalex.org/I178574317 |

| authorships[3].affiliations[0].raw_affiliation_string | Mie University |

| authorships[3].institutions[0].id | https://openalex.org/I178574317 |

| authorships[3].institutions[0].ror | https://ror.org/01529vy56 |

| authorships[3].institutions[0].type | education |

| authorships[3].institutions[0].lineage | https://openalex.org/I178574317 |

| authorships[3].institutions[0].country_code | JP |

| authorships[3].institutions[0].display_name | Mie University |

| authorships[3].author_position | last |

| authorships[3].raw_author_name | Tetsushi WAKABAYASHI |

| authorships[3].is_corresponding | False |

| authorships[3].raw_affiliation_strings | Mie University |

| has_content.pdf | True |

| has_content.grobid_xml | True |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf |

| open_access.oa_status | diamond |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Time Series Human Motion Prediction Using RGB Camera and OpenPose |

| has_fulltext | True |

| is_retracted | False |

| updated_date | 2025-11-06T03:46:38.306776 |

| primary_topic.id | https://openalex.org/T10812 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.9959999918937683 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1707 |

| primary_topic.subfield.display_name | Computer Vision and Pattern Recognition |

| primary_topic.display_name | Human Pose and Action Recognition |

| related_works | https://openalex.org/W2066003895, https://openalex.org/W2902873204, https://openalex.org/W2185750513, https://openalex.org/W4312416068, https://openalex.org/W3147379364, https://openalex.org/W2010878661, https://openalex.org/W2026258298, https://openalex.org/W3204639664, https://openalex.org/W2970836791, https://openalex.org/W2805039731 |

| cited_by_count | 4 |

| counts_by_year[0].year | 2025 |

| counts_by_year[0].cited_by_count | 1 |

| counts_by_year[1].year | 2023 |

| counts_by_year[1].cited_by_count | 1 |

| counts_by_year[2].year | 2022 |

| counts_by_year[2].cited_by_count | 2 |

| locations_count | 1 |

| best_oa_location.id | doi:10.5057/isase.2020-c000037 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4210221424 |

| best_oa_location.source.issn | 2433-5428 |

| best_oa_location.source.type | journal |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | 2433-5428 |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | International Symposium on Affective Science and Engineering |

| best_oa_location.source.host_organization | |

| best_oa_location.source.host_organization_name | |

| best_oa_location.license | |

| best_oa_location.pdf_url | https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf |

| best_oa_location.version | publishedVersion |

| best_oa_location.raw_type | journal-article |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | True |

| best_oa_location.is_published | True |

| best_oa_location.raw_source_name | International Symposium on Affective Science and Engineering |

| best_oa_location.landing_page_url | https://doi.org/10.5057/isase.2020-c000037 |

| primary_location.id | doi:10.5057/isase.2020-c000037 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4210221424 |

| primary_location.source.issn | 2433-5428 |

| primary_location.source.type | journal |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | 2433-5428 |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | International Symposium on Affective Science and Engineering |

| primary_location.source.host_organization | |

| primary_location.source.host_organization_name | |

| primary_location.license | |

| primary_location.pdf_url | https://www.jstage.jst.go.jp/article/isase/ISASE2020/0/ISASE2020_1_5/_pdf |

| primary_location.version | publishedVersion |

| primary_location.raw_type | journal-article |

| primary_location.license_id | |

| primary_location.is_accepted | True |

| primary_location.is_published | True |

| primary_location.raw_source_name | International Symposium on Affective Science and Engineering |

| primary_location.landing_page_url | https://doi.org/10.5057/isase.2020-c000037 |

| publication_date | 2020-01-01 |

| publication_year | 2020 |

| referenced_works | https://openalex.org/W2802025241, https://openalex.org/W2903317782, https://openalex.org/W2510046892, https://openalex.org/W2559085405, https://openalex.org/W2064675550, https://openalex.org/W2964010366, https://openalex.org/W2774513877, https://openalex.org/W2075628905 |

| referenced_works_count | 8 |

| abstract_inverted_index.1 | 109 |

| abstract_inverted_index.a | 74, 86, 100, 105, 134 |

| abstract_inverted_index.14 | 139 |

| abstract_inverted_index.60 | 9 |

| abstract_inverted_index.In | 27 |

| abstract_inverted_index.We | 47, 103, 126, 226 |

| abstract_inverted_index.an | 96 |

| abstract_inverted_index.as | 55, 99, 120, 171, 202 |

| abstract_inverted_index.by | 4, 166 |

| abstract_inverted_index.in | 90, 149, 234 |

| abstract_inverted_index.is | 19, 37, 142 |

| abstract_inverted_index.it | 36 |

| abstract_inverted_index.of | 33, 85, 112, 133, 174, 230 |

| abstract_inverted_index.on | 196 |

| abstract_inverted_index.to | 22, 29, 39, 51, 59, 81, 107, 129, 144, 207, 222 |

| abstract_inverted_index.we | 93 |

| abstract_inverted_index.2.1 | 14 |

| abstract_inverted_index.RGB | 97 |

| abstract_inverted_index.The | 0 |

| abstract_inverted_index.and | 10, 123, 158, 183, 194, 205, 220 |

| abstract_inverted_index.are | 94, 161, 211 |

| abstract_inverted_index.has | 73 |

| abstract_inverted_index.set | 104 |

| abstract_inverted_index.the | 6, 31, 43, 49, 56, 61, 69, 78, 83, 113, 131, 146, 150, 155, 164, 172, 197, 208, 223, 228, 235, 241 |

| abstract_inverted_index.two | 168 |

| abstract_inverted_index.Long | 178 |

| abstract_inverted_index.This | 16 |

| abstract_inverted_index.aged | 8 |

| abstract_inverted_index.best | 38 |

| abstract_inverted_index.body | 136 |

| abstract_inverted_index.case | 239 |

| abstract_inverted_index.crop | 145 |

| abstract_inverted_index.from | 24, 163, 240 |

| abstract_inverted_index.goal | 106 |

| abstract_inverted_index.hand | 121, 203, 218 |

| abstract_inverted_index.left | 224 |

| abstract_inverted_index.like | 217 |

| abstract_inverted_index.main | 147 |

| abstract_inverted_index.more | 20, 214 |

| abstract_inverted_index.pose | 84, 137 |

| abstract_inverted_index.show | 189 |

| abstract_inverted_index.side | 210 |

| abstract_inverted_index.step | 58 |

| abstract_inverted_index.such | 119, 201 |

| abstract_inverted_index.than | 213 |

| abstract_inverted_index.that | 3, 77 |

| abstract_inverted_index.then | 192 |

| abstract_inverted_index.this | 91 |

| abstract_inverted_index.used | 80, 127, 143 |

| abstract_inverted_index.were | 67 |

| abstract_inverted_index.will | 12 |

| abstract_inverted_index.2050, | 5 |

| abstract_inverted_index.above | 11 |

| abstract_inverted_index.aging | 17 |

| abstract_inverted_index.ahead | 111 |

| abstract_inverted_index.based | 195, 232 |

| abstract_inverted_index.body. | 88 |

| abstract_inverted_index.depth | 75 |

| abstract_inverted_index.first | 57 |

| abstract_inverted_index.human | 53, 87, 135, 198, 237 |

| abstract_inverted_index.input | 173 |

| abstract_inverted_index.model | 182 |

| abstract_inverted_index.order | 28 |

| abstract_inverted_index.reach | 13 |

| abstract_inverted_index.right | 209 |

| abstract_inverted_index.side. | 225 |

| abstract_inverted_index.using | 68, 95 |

| abstract_inverted_index.which | 72, 115, 160 |

| abstract_inverted_index.Filter | 188 |

| abstract_inverted_index.Kalman | 184, 187 |

| abstract_inverted_index.Kinect | 70 |

| abstract_inverted_index.Memory | 180 |

| abstract_inverted_index.Neural | 176 |

| abstract_inverted_index.YOLOv3 | 141 |

| abstract_inverted_index.become | 45 |

| abstract_inverted_index.before | 42, 152 |

| abstract_inverted_index.better | 190 |

| abstract_inverted_index.camera | 71, 79, 98 |

| abstract_inverted_index.detect | 82 |

| abstract_inverted_index.easier | 212 |

| abstract_inverted_index.frame. | 156 |

| abstract_inverted_index.frames | 151, 170 |

| abstract_inverted_index.likely | 21 |

| abstract_inverted_index.method | 233 |

| abstract_inverted_index.motion | 54, 114, 200, 216, 238 |

| abstract_inverted_index.moving | 206, 221 |

| abstract_inverted_index.reduce | 30 |

| abstract_inverted_index.report | 1 |

| abstract_inverted_index.second | 110 |

| abstract_inverted_index.sensor | 76 |

| abstract_inverted_index.simple | 117, 236 |

| abstract_inverted_index.spread | 32 |

| abstract_inverted_index.suffer | 23 |

| abstract_inverted_index.system | 50 |

| abstract_inverted_index.Filter. | 185 |

| abstract_inverted_index.Mostly, | 186 |

| abstract_inverted_index.Network | 177 |

| abstract_inverted_index.complex | 215 |

| abstract_inverted_index.extract | 130 |

| abstract_inverted_index.feature | 148 |

| abstract_inverted_index.gesture | 122, 204, 219 |

| abstract_inverted_index.motions | 118 |

| abstract_inverted_index.points. | 140 |

| abstract_inverted_index.predict | 52, 108 |

| abstract_inverted_index.process | 154 |

| abstract_inverted_index.propose | 48 |

| abstract_inverted_index.realize | 60 |

| abstract_inverted_index.result. | 242 |

| abstract_inverted_index.society | 18 |

| abstract_inverted_index.walking | 124 |

| abstract_inverted_index.Distance | 157 |

| abstract_inverted_index.However, | 89 |

| abstract_inverted_index.OpenPose | 128, 153 |

| abstract_inverted_index.Previous | 65 |

| abstract_inverted_index.RNN-LSTM | 193 |

| abstract_inverted_index.accuracy | 191 |

| abstract_inverted_index.billion. | 15 |

| abstract_inverted_index.citizens | 44 |

| abstract_inverted_index.elderly. | 46 |

| abstract_inverted_index.features | 132, 165 |

| abstract_inverted_index.includes | 116 |

| abstract_inverted_index.increase | 40 |

| abstract_inverted_index.motions, | 199 |

| abstract_inverted_index.projects | 2 |

| abstract_inverted_index.reliable | 101 |

| abstract_inverted_index.research | 92 |

| abstract_inverted_index.syndrome | 63 |

| abstract_inverted_index.validity | 229 |

| abstract_inverted_index.Recurrent | 175 |

| abstract_inverted_index.awareness | 41 |

| abstract_inverted_index.comparing | 167 |

| abstract_inverted_index.confirmed | 227 |

| abstract_inverted_index.direction | 159 |

| abstract_inverted_index.including | 138 |

| abstract_inverted_index.movement. | 125 |

| abstract_inverted_index.syndrome, | 35 |

| abstract_inverted_index.syndrome. | 26 |

| abstract_inverted_index.(RNN-LSTM) | 181 |

| abstract_inverted_index.RGB-camera | 231 |

| abstract_inverted_index.Short-term | 179 |

| abstract_inverted_index.calculated | 162 |

| abstract_inverted_index.locomotive | 25, 34, 62 |

| abstract_inverted_index.population | 7 |

| abstract_inverted_index.researches | 66 |

| abstract_inverted_index.consecutive | 169 |

| abstract_inverted_index.estimation. | 64 |

| abstract_inverted_index.alternative. | 102 |

| cited_by_percentile_year.max | 96 |

| cited_by_percentile_year.min | 89 |

| countries_distinct_count | 1 |

| institutions_distinct_count | 4 |

| citation_normalized_percentile.value | 0.56468179 |

| citation_normalized_percentile.is_in_top_1_percent | False |

| citation_normalized_percentile.is_in_top_10_percent | False |
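
The `abstract_inverted_index` rows in the payload above map each word of the abstract to the positions where it occurs. As a minimal sketch, assuming the rows are loaded into a plain dictionary of word to position list (the function name `rebuild_abstract` and the truncated `sample` are illustrative, not part of any library), the running text can be recovered like this:

```python
def rebuild_abstract(inverted_index):
    """Rebuild running text from a {word: [positions]} mapping."""
    by_position = {}
    for word, positions in inverted_index.items():
        for pos in positions:
            by_position[pos] = word
    # Join the words in ascending position order.
    return " ".join(by_position[pos] for pos in sorted(by_position))

# Truncated sample: only the first six positions from the table above.
sample = {
    "The": [0],
    "report": [1],
    "projects": [2],
    "that": [3],
    "by": [4],
    "2050,": [5],
}
print(rebuild_abstract(sample))  # prints "The report projects that by 2050,"
```

Punctuation stays attached to the words, so the index entries such as `2050,` and `billion.` are distinct keys from their unpunctuated forms.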