Towards Unified Benchmark and Models for Multi-Modal Perceptual Metrics

2024 · Open Access · DOI: https://doi.org/10.48550/arxiv.2412.10594

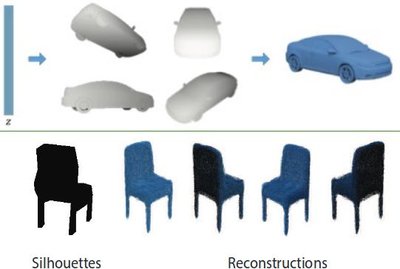

Human perception of similarity across uni- and multimodal inputs is highly complex, making it challenging to develop automated metrics that accurately mimic it. General-purpose vision-language models, such as CLIP and large multi-modal models (LMMs), can be applied as zero-shot perceptual metrics, and several recent works have developed models specialized in narrow perceptual tasks. However, the extent to which existing perceptual metrics align with human perception remains unclear. To investigate this question, we introduce UniSim-Bench, a benchmark encompassing 7 multi-modal perceptual similarity tasks, with a total of 25 datasets. Our evaluation reveals that while general-purpose models perform reasonably well on average, they often lag behind specialized models on individual tasks. Conversely, metrics fine-tuned for specific tasks fail to generalize well to unseen, though related, tasks. As a first step towards a unified multi-task perceptual similarity metric, we fine-tune both encoder-based and generative vision-language models on a subset of the UniSim-Bench tasks. This approach yields the highest average performance, and in some cases, even surpasses task-specific models. Nevertheless, these models still struggle with generalization to unseen tasks, highlighting the ongoing challenge of learning a robust, unified perceptual similarity metric capable of capturing the human notion of similarity. The code and models are available at https://github.com/SaraGhazanfari/UniSim.
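The abstract does not spell out how an embedding model is scored as a perceptual metric, so the following is only a minimal sketch under stated assumptions. It assumes a two-alternative forced-choice (2AFC) setup, a common protocol for perceptual similarity, in which the metric must pick which of two candidates is closer to a reference and is scored by agreement with the human choice. The function names and the toy embedding vectors are hypothetical; in practice the vectors would come from an encoder such as CLIP.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def two_afc_choice(ref, cand_a, cand_b):
    """Return 0 if candidate A is at least as similar to the reference as B, else 1."""
    sim_a = cosine_similarity(ref, cand_a)
    sim_b = cosine_similarity(ref, cand_b)
    return 0 if sim_a >= sim_b else 1


def agreement_with_humans(triplets, human_choices):
    """Fraction of triplets where the metric picks the same candidate as the human."""
    matches = sum(
        two_afc_choice(ref, a, b) == choice
        for (ref, a, b), choice in zip(triplets, human_choices)
    )
    return matches / len(triplets)


# Toy, made-up embeddings: (reference, candidate A, candidate B).
triplets = [
    ([1.0, 0.0], [0.9, 0.1], [0.0, 1.0]),
    ([0.0, 1.0], [1.0, 0.0], [0.1, 0.9]),
]
human_choices = [0, 1]  # hypothetical human labels

print(agreement_with_humans(triplets, human_choices))
```

Because the score depends only on which candidate ranks higher, any similarity function can be swapped in for `cosine_similarity` without changing the rest of the sketch.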

Related Topics

- Type: preprint
- Language: en
- Landing Page: http://arxiv.org/abs/2412.10594, https://arxiv.org/pdf/2412.10594
- OA Status: green
- Related Works: 10
- OpenAlex ID: https://openalex.org/W4405468211

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4405468211 (canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.48550/arxiv.2412.10594 (Digital Object Identifier)
- Title: Towards Unified Benchmark and Models for Multi-Modal Perceptual Metrics (work title)
- Type: preprint (OpenAlex work type)
- Language: en (primary language)
- Publication year: 2024 (year of publication)
- Publication date: 2024-12-13 (full publication date if available)
- Authors: Sara Ghazanfari, Siddharth Garg, Nicolas Flammarion, P. Krishnamurthy, Farshad Khorrami, Francesco Croce (list of authors in order)
- Landing page: https://arxiv.org/abs/2412.10594 (publisher landing page)
- PDF URL: https://arxiv.org/pdf/2412.10594 (direct link to full text PDF)
- Open access: Yes (whether a free full text is available)
- OA status: green (open access status per OpenAlex)
- OA URL: https://arxiv.org/pdf/2412.10594 (direct OA link when available)
- Concepts: Benchmark (surveying), Modal, Perception, Computer science, Artificial intelligence, Psychology, Geography, Cartography, Neuroscience, Chemistry, Polymer chemistry (top concepts, i.e. fields/topics, attached by OpenAlex)
- Cited by: 0 (total citation count in OpenAlex)
- Related works (count): 10 (other works algorithmically related by OpenAlex)

Full payload

| id | https://openalex.org/W4405468211 |

|---|---|

| doi | https://doi.org/10.48550/arxiv.2412.10594 |

| ids.doi | https://doi.org/10.48550/arxiv.2412.10594 |

| ids.openalex | https://openalex.org/W4405468211 |

| fwci | |

| type | preprint |

| title | Towards Unified Benchmark and Models for Multi-Modal Perceptual Metrics |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T11666 |

| topics[0].field.id | https://openalex.org/fields/31 |

| topics[0].field.display_name | Physics and Astronomy |

| topics[0].score | 0.6862999796867371 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/3107 |

| topics[0].subfield.display_name | Atomic and Molecular Physics, and Optics |

| topics[0].display_name | Color Science and Applications |

| topics[1].id | https://openalex.org/T12111 |

| topics[1].field.id | https://openalex.org/fields/22 |

| topics[1].field.display_name | Engineering |

| topics[1].score | 0.6675000190734863 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/2209 |

| topics[1].subfield.display_name | Industrial and Manufacturing Engineering |

| topics[1].display_name | Industrial Vision Systems and Defect Detection |

| topics[2].id | https://openalex.org/T10824 |

| topics[2].field.id | https://openalex.org/fields/17 |

| topics[2].field.display_name | Computer Science |

| topics[2].score | 0.6110000014305115 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/1707 |

| topics[2].subfield.display_name | Computer Vision and Pattern Recognition |

| topics[2].display_name | Image Retrieval and Classification Techniques |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C185798385 |

| concepts[0].level | 2 |

| concepts[0].score | 0.8062610626220703 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q1161707 |

| concepts[0].display_name | Benchmark (surveying) |

| concepts[1].id | https://openalex.org/C71139939 |

| concepts[1].level | 2 |

| concepts[1].score | 0.7153476476669312 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q910194 |

| concepts[1].display_name | Modal |

| concepts[2].id | https://openalex.org/C26760741 |

| concepts[2].level | 2 |

| concepts[2].score | 0.6377725005149841 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q160402 |

| concepts[2].display_name | Perception |

| concepts[3].id | https://openalex.org/C41008148 |

| concepts[3].level | 0 |

| concepts[3].score | 0.603603720664978 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[3].display_name | Computer science |

| concepts[4].id | https://openalex.org/C154945302 |

| concepts[4].level | 1 |

| concepts[4].score | 0.37194520235061646 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[4].display_name | Artificial intelligence |

| concepts[5].id | https://openalex.org/C15744967 |

| concepts[5].level | 0 |

| concepts[5].score | 0.1999177634716034 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q9418 |

| concepts[5].display_name | Psychology |

| concepts[6].id | https://openalex.org/C205649164 |

| concepts[6].level | 0 |

| concepts[6].score | 0.19574445486068726 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q1071 |

| concepts[6].display_name | Geography |

| concepts[7].id | https://openalex.org/C58640448 |

| concepts[7].level | 1 |

| concepts[7].score | 0.14734384417533875 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q42515 |

| concepts[7].display_name | Cartography |

| concepts[8].id | https://openalex.org/C169760540 |

| concepts[8].level | 1 |

| concepts[8].score | 0.0 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q207011 |

| concepts[8].display_name | Neuroscience |

| concepts[9].id | https://openalex.org/C185592680 |

| concepts[9].level | 0 |

| concepts[9].score | 0.0 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q2329 |

| concepts[9].display_name | Chemistry |

| concepts[10].id | https://openalex.org/C188027245 |

| concepts[10].level | 1 |

| concepts[10].score | 0.0 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q750446 |

| concepts[10].display_name | Polymer chemistry |

| keywords[0].id | https://openalex.org/keywords/benchmark |

| keywords[0].score | 0.8062610626220703 |

| keywords[0].display_name | Benchmark (surveying) |

| keywords[1].id | https://openalex.org/keywords/modal |

| keywords[1].score | 0.7153476476669312 |

| keywords[1].display_name | Modal |

| keywords[2].id | https://openalex.org/keywords/perception |

| keywords[2].score | 0.6377725005149841 |

| keywords[2].display_name | Perception |

| keywords[3].id | https://openalex.org/keywords/computer-science |

| keywords[3].score | 0.603603720664978 |

| keywords[3].display_name | Computer science |

| keywords[4].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[4].score | 0.37194520235061646 |

| keywords[4].display_name | Artificial intelligence |

| keywords[5].id | https://openalex.org/keywords/psychology |

| keywords[5].score | 0.1999177634716034 |

| keywords[5].display_name | Psychology |

| keywords[6].id | https://openalex.org/keywords/geography |

| keywords[6].score | 0.19574445486068726 |

| keywords[6].display_name | Geography |

| keywords[7].id | https://openalex.org/keywords/cartography |

| keywords[7].score | 0.14734384417533875 |

| keywords[7].display_name | Cartography |

| language | en |

| locations[0].id | pmh:oai:arXiv.org:2412.10594 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400194 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | arXiv (Cornell University) |

| locations[0].source.host_organization | https://openalex.org/I205783295 |

| locations[0].source.host_organization_name | Cornell University |

| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[0].license | |

| locations[0].pdf_url | https://arxiv.org/pdf/2412.10594 |

| locations[0].version | submittedVersion |

| locations[0].raw_type | text |

| locations[0].license_id | |

| locations[0].is_accepted | False |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | http://arxiv.org/abs/2412.10594 |

| locations[1].id | doi:10.48550/arxiv.2412.10594 |

| locations[1].is_oa | True |

| locations[1].source.id | https://openalex.org/S4306400194 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | True |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | arXiv (Cornell University) |

| locations[1].source.host_organization | https://openalex.org/I205783295 |

| locations[1].source.host_organization_name | Cornell University |

| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[1].license | cc-by |

| locations[1].pdf_url | |

| locations[1].version | |

| locations[1].raw_type | article |

| locations[1].license_id | https://openalex.org/licenses/cc-by |

| locations[1].is_accepted | False |

| locations[1].is_published | |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2412.10594 |

| indexed_in | arxiv, datacite |

| authorships[0].author.id | https://openalex.org/A5017092536 |

| authorships[0].author.orcid | |

| authorships[0].author.display_name | Sara Ghazanfari |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Ghazanfari, Sara |

| authorships[0].is_corresponding | False |

| authorships[1].author.id | https://openalex.org/A5010950688 |

| authorships[1].author.orcid | https://orcid.org/0000-0002-6158-9512 |

| authorships[1].author.display_name | Siddharth Garg |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Garg, Siddharth |

| authorships[1].is_corresponding | False |

| authorships[2].author.id | https://openalex.org/A5061093552 |

| authorships[2].author.orcid | |

| authorships[2].author.display_name | Nicolas Flammarion |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Flammarion, Nicolas |

| authorships[2].is_corresponding | False |

| authorships[3].author.id | https://openalex.org/A5054769060 |

| authorships[3].author.orcid | https://orcid.org/0000-0001-8264-7972 |

| authorships[3].author.display_name | P. Krishnamurthy |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Krishnamurthy, Prashanth |

| authorships[3].is_corresponding | False |

| authorships[4].author.id | https://openalex.org/A5082413942 |

| authorships[4].author.orcid | https://orcid.org/0000-0002-8418-004X |

| authorships[4].author.display_name | Farshad Khorrami |

| authorships[4].author_position | middle |

| authorships[4].raw_author_name | Khorrami, Farshad |

| authorships[4].is_corresponding | False |

| authorships[5].author.id | https://openalex.org/A5069087915 |

| authorships[5].author.orcid | |

| authorships[5].author.display_name | Francesco Croce |

| authorships[5].author_position | last |

| authorships[5].raw_author_name | Croce, Francesco |

| authorships[5].is_corresponding | False |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://arxiv.org/pdf/2412.10594 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Towards Unified Benchmark and Models for Multi-Modal Perceptual Metrics |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T06:51:31.235846 |

| primary_topic.id | https://openalex.org/T11666 |

| primary_topic.field.id | https://openalex.org/fields/31 |

| primary_topic.field.display_name | Physics and Astronomy |

| primary_topic.score | 0.6862999796867371 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/3107 |

| primary_topic.subfield.display_name | Atomic and Molecular Physics, and Optics |

| primary_topic.display_name | Color Science and Applications |

| related_works | https://openalex.org/W4391375266, https://openalex.org/W2899084033, https://openalex.org/W2748952813, https://openalex.org/W2378211422, https://openalex.org/W4321353415, https://openalex.org/W2745001401, https://openalex.org/W2130974462, https://openalex.org/W2028665553, https://openalex.org/W2086519370, https://openalex.org/W4246352526 |

| cited_by_count | 0 |

| locations_count | 2 |

| best_oa_location.id | pmh:oai:arXiv.org:2412.10594 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400194 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | arXiv (Cornell University) |

| best_oa_location.source.host_organization | https://openalex.org/I205783295 |

| best_oa_location.source.host_organization_name | Cornell University |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| best_oa_location.license | |

| best_oa_location.pdf_url | https://arxiv.org/pdf/2412.10594 |

| best_oa_location.version | submittedVersion |

| best_oa_location.raw_type | text |

| best_oa_location.license_id | |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | http://arxiv.org/abs/2412.10594 |

| primary_location.id | pmh:oai:arXiv.org:2412.10594 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400194 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | arXiv (Cornell University) |

| primary_location.source.host_organization | https://openalex.org/I205783295 |

| primary_location.source.host_organization_name | Cornell University |

| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| primary_location.license | |

| primary_location.pdf_url | https://arxiv.org/pdf/2412.10594 |

| primary_location.version | submittedVersion |

| primary_location.raw_type | text |

| primary_location.license_id | |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | http://arxiv.org/abs/2412.10594 |

| publication_date | 2024-12-13 |

| publication_year | 2024 |

| referenced_works_count | 0 |

| abstract_inverted_index.7 | 78 |

| abstract_inverted_index.a | 75, 84, 126, 130, 145, 182 |

| abstract_inverted_index.25 | 87 |

| abstract_inverted_index.As | 125 |

| abstract_inverted_index.To | 68 |

| abstract_inverted_index.as | 28, 38 |

| abstract_inverted_index.at | 202 |

| abstract_inverted_index.be | 36 |

| abstract_inverted_index.in | 50, 159 |

| abstract_inverted_index.is | 9 |

| abstract_inverted_index.it | 13 |

| abstract_inverted_index.of | 2, 86, 147, 180, 189, 194 |

| abstract_inverted_index.on | 99, 107, 144 |

| abstract_inverted_index.to | 15, 57, 117, 120, 173 |

| abstract_inverted_index.we | 72, 136 |

| abstract_inverted_index.Our | 89 |

| abstract_inverted_index.The | 196 |

| abstract_inverted_index.and | 6, 30, 42, 140, 158, 198 |

| abstract_inverted_index.are | 200 |

| abstract_inverted_index.can | 35 |

| abstract_inverted_index.for | 113 |

| abstract_inverted_index.it. | 22 |

| abstract_inverted_index.lag | 103 |

| abstract_inverted_index.the | 55, 148, 154, 177, 191 |

| abstract_inverted_index.CLIP | 29 |

| abstract_inverted_index.This | 151 |

| abstract_inverted_index.both | 138 |

| abstract_inverted_index.code | 197 |

| abstract_inverted_index.even | 162 |

| abstract_inverted_index.fail | 116 |

| abstract_inverted_index.have | 46 |

| abstract_inverted_index.some | 160 |

| abstract_inverted_index.step | 128 |

| abstract_inverted_index.such | 27 |

| abstract_inverted_index.that | 19, 92 |

| abstract_inverted_index.they | 101 |

| abstract_inverted_index.this | 70 |

| abstract_inverted_index.uni- | 5 |

| abstract_inverted_index.well | 98, 119 |

| abstract_inverted_index.with | 63, 83, 171 |

| abstract_inverted_index.Human | 0 |

| abstract_inverted_index.align | 62 |

| abstract_inverted_index.first | 127 |

| abstract_inverted_index.human | 64, 192 |

| abstract_inverted_index.large | 31 |

| abstract_inverted_index.mimic | 21 |

| abstract_inverted_index.often | 102 |

| abstract_inverted_index.still | 169 |

| abstract_inverted_index.tasks | 115 |

| abstract_inverted_index.these | 167 |

| abstract_inverted_index.total | 85 |

| abstract_inverted_index.which | 58 |

| abstract_inverted_index.while | 93 |

| abstract_inverted_index.works | 45 |

| abstract_inverted_index.across | 4 |

| abstract_inverted_index.behind | 104 |

| abstract_inverted_index.cases, | 161 |

| abstract_inverted_index.extent | 56 |

| abstract_inverted_index.highly | 10 |

| abstract_inverted_index.inputs | 8 |

| abstract_inverted_index.making | 12 |

| abstract_inverted_index.metric | 187 |

| abstract_inverted_index.models | 33, 48, 95, 106, 143, 168, 199 |

| abstract_inverted_index.narrow | 51 |

| abstract_inverted_index.notion | 193 |

| abstract_inverted_index.recent | 44 |

| abstract_inverted_index.subset | 146 |

| abstract_inverted_index.tasks, | 82, 175 |

| abstract_inverted_index.tasks. | 53, 109, 124, 150 |

| abstract_inverted_index.though | 122 |

| abstract_inverted_index.unseen | 174 |

| abstract_inverted_index.yields | 153 |

| abstract_inverted_index.(LMMs), | 34 |

| abstract_inverted_index.General | 23 |

| abstract_inverted_index.applied | 37 |

| abstract_inverted_index.average | 156 |

| abstract_inverted_index.capable | 188 |

| abstract_inverted_index.develop | 16 |

| abstract_inverted_index.highest | 155 |

| abstract_inverted_index.metric, | 135 |

| abstract_inverted_index.metrics | 18, 61, 111 |

| abstract_inverted_index.models, | 26 |

| abstract_inverted_index.models. | 165 |

| abstract_inverted_index.ongoing | 178 |

| abstract_inverted_index.perform | 96 |

| abstract_inverted_index.purpose | 24 |

| abstract_inverted_index.remains | 66 |

| abstract_inverted_index.reveals | 91 |

| abstract_inverted_index.robust, | 183 |

| abstract_inverted_index.several | 43 |

| abstract_inverted_index.towards | 129 |

| abstract_inverted_index.unified | 131, 184 |

| abstract_inverted_index.unseen, | 121 |

| abstract_inverted_index.However, | 54 |

| abstract_inverted_index.approach | 152 |

| abstract_inverted_index.average, | 100 |

| abstract_inverted_index.complex, | 11 |

| abstract_inverted_index.existing | 59 |

| abstract_inverted_index.learning | 181 |

| abstract_inverted_index.metrics, | 41 |

| abstract_inverted_index.related, | 123 |

| abstract_inverted_index.specific | 114 |

| abstract_inverted_index.struggle | 170 |

| abstract_inverted_index.unclear. | 67 |

| abstract_inverted_index.automated | 17 |

| abstract_inverted_index.available | 201 |

| abstract_inverted_index.benchmark | 76 |

| abstract_inverted_index.capturing | 190 |

| abstract_inverted_index.challenge | 179 |

| abstract_inverted_index.datasets. | 88 |

| abstract_inverted_index.developed | 47 |

| abstract_inverted_index.fine-tune | 137 |

| abstract_inverted_index.introduce | 73 |

| abstract_inverted_index.question, | 71 |

| abstract_inverted_index.surpasses | 163 |

| abstract_inverted_index.zero-shot | 39 |

| abstract_inverted_index.accurately | 20 |

| abstract_inverted_index.evaluation | 90 |

| abstract_inverted_index.fine-tuned | 112 |

| abstract_inverted_index.generalize | 118 |

| abstract_inverted_index.generative | 141 |

| abstract_inverted_index.individual | 108 |

| abstract_inverted_index.multi-task | 132 |

| abstract_inverted_index.multimodal | 7 |

| abstract_inverted_index.perception | 1, 65 |

| abstract_inverted_index.perceptual | 40, 52, 60, 80, 133, 185 |

| abstract_inverted_index.reasonably | 97 |

| abstract_inverted_index.similarity | 3, 81, 134, 186 |

| abstract_inverted_index.Conversely, | 110 |

| abstract_inverted_index.challenging | 14 |

| abstract_inverted_index.investigate | 69 |

| abstract_inverted_index.multi-modal | 32, 79 |

| abstract_inverted_index.similarity. | 195 |

| abstract_inverted_index.specialized | 49, 105 |

| abstract_inverted_index.UniSim-Bench | 149 |

| abstract_inverted_index.encompassing | 77 |

| abstract_inverted_index.highlighting | 176 |

| abstract_inverted_index.performance, | 157 |

| abstract_inverted_index.taskspecific | 164 |

| abstract_inverted_index.Nevertheless, | 166 |

| abstract_inverted_index.UniSim-Bench, | 74 |

| abstract_inverted_index.encoder-based | 139 |

| abstract_inverted_index.generalization | 172 |

| abstract_inverted_index.general-purpose | 94 |

| abstract_inverted_index.vision-language | 25, 142 |

| abstract_inverted_index.https://github.com/SaraGhazanfari/UniSim. | 203 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 6 |

| citation_normalized_percentile |
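
The `abstract_inverted_index.*` rows in the payload store the abstract as a mapping from each token to the word positions where it occurs. As a minimal sketch of how such a mapping can be turned back into running text, the snippet below inverts it and joins the tokens in position order. The function name `rebuild_abstract` is hypothetical, and the dictionary is a hand-copied excerpt of the rows above, restricted to positions 0 to 13.

```python
def rebuild_abstract(inverted_index):
    """Rebuild text from a mapping of token -> list of word positions."""
    position_to_token = {}
    for token, positions in inverted_index.items():
        for position in positions:
            position_to_token[position] = token
    # Join tokens in ascending position order.
    return " ".join(position_to_token[p] for p in sorted(position_to_token))


# Excerpt of the payload rows, limited to positions 0-13.
excerpt = {
    "Human": [0],
    "perception": [1],
    "of": [2],
    "similarity": [3],
    "across": [4],
    "uni-": [5],
    "and": [6],
    "multimodal": [7],
    "inputs": [8],
    "is": [9],
    "highly": [10],
    "complex,": [11],
    "making": [12],
    "it": [13],
}

print(rebuild_abstract(excerpt))
# prints: Human perception of similarity across uni- and multimodal inputs is highly complex, making it
```

Applied to the full set of rows, the same function should reproduce the abstract shown at the top of the record, since every position from 0 to 203 appears exactly once in the index.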