Use of U-Net Convolutional Neural Networks for Automated Segmentation of Fecal Material to Objective Evaluation of Bowel Preparation Quality in Colonoscopy (Preprint)

2021 · Open Access · DOI: https://doi.org/10.2196/preprints.29326

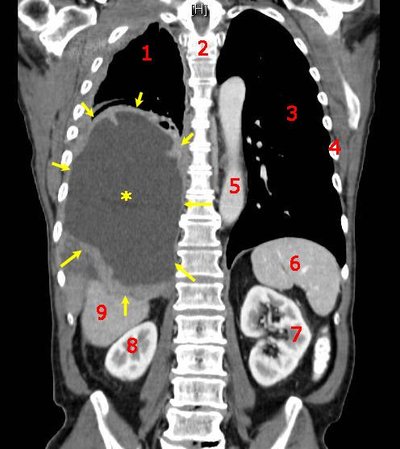

BACKGROUND Adequate bowel cleansing is important for a complete examination of the colon mucosa during colonoscopy. Current bowel cleansing evaluation scales are subjective, with wide variation in consistency among physicians and a low reporting rate. Artificial intelligence (AI) has been increasingly used in endoscopy.

OBJECTIVE We aim to use machine learning to develop a fully automatic segmentation method that marks the fecal residue-coated mucosa for objective evaluation of the adequacy of colon preparation.

METHODS Colonoscopy videos were retrieved from a video data cohort and converted into qualified images, which were randomly divided into training, validation, and verification datasets. The fecal residue was manually segmented by skilled technicians. A deep learning model based on the U-Net convolutional network architecture was developed to perform automatic segmentation. The performance of the automatic segmentation was evaluated by its overlap area with the manual segmentation.

RESULTS A total of 10,118 qualified images from 119 videos were captured and manually labelled. The model took an average of 0.3634 seconds to segment one image automatically. The model's output overlapped strongly with the manual segmentation (94.7% ± 0.67%), with an intersection over union (IOU) of 0.607 ± 0.17. The area predicted by our AI model correlated well with the area measured manually (r=0.915, p<0.001). The AI system can be applied in real time to display, qualitatively and quantitatively, the mucosa covered by fecal residue.

CONCLUSIONS We used machine learning to establish a fully automatic segmentation method that rapidly and accurately marks the fecal residue-coated mucosa for objective evaluation of colon preparation.
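The abstract reports agreement between automatic and manual segmentation as an intersection over union (IOU) and compares predicted and manually measured areas. The sketch below shows the standard IoU definition on binary masks, plus the fraction of pixels marked as residue. It is illustrative only: the abstract does not describe how the authors computed their metrics, and the function names and the tiny masks are hypothetical.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]  # rows of 0/1 pixels; 1 marks fecal residue


def intersection_over_union(pred: Mask, truth: Mask) -> float:
    """Return |pred AND truth| / |pred OR truth| for two same-shaped binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of rows")
    inter = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        if len(pred_row) != len(truth_row):
            raise ValueError("mask rows must have the same length")
        for p, t in zip(pred_row, truth_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Treating two empty masks as perfect agreement is an assumption.
    return inter / union if union else 1.0


def covered_fraction(mask: Mask) -> float:
    """Return the share of pixels marked as residue."""
    total = sum(len(row) for row in mask)
    marked = sum(1 for row in mask for value in row if value)
    return marked / total if total else 0.0


# Hypothetical 3x4 masks, invented for illustration only.
manual = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
predicted = [
    [0, 1, 1, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]

print(f"IoU = {intersection_over_union(predicted, manual):.3f}")
print(f"manual area = {covered_fraction(manual):.3f}")
print(f"predicted area = {covered_fraction(predicted):.3f}")
```

In this toy case the two masks mark the same number of pixels, so their areas match even though the IoU is well below 1. Area agreement and pixel-level overlap measure different things, which may be why the abstract reports both.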

Related Topics

- Type: preprint
- Language: en
- Landing Page: https://doi.org/10.2196/preprints.29326
- OA Status: gold
- References: 42
- Related Works: 10
- OpenAlex ID: https://openalex.org/W4205146942

Raw OpenAlex JSON

- OpenAlex ID: https://openalex.org/W4205146942 (Canonical identifier for this work in OpenAlex)
- DOI: https://doi.org/10.2196/preprints.29326 (Digital Object Identifier)
- Title: Use of U-Net Convolutional Neural Networks for Automated Segmentation of Fecal Material to Objective Evaluation of Bowel Preparation Quality in Colonoscopy (Preprint) (Work title)
- Type: preprint (OpenAlex work type)
- Language: en (Primary language)
- Publication year: 2021 (Year of publication)
- Publication date: 2021-04-02 (Full publication date if available)
- Authors: Yen‐Po Wang, Ying-Chun Jheng, Kuang‐Yi Sung, Hung-En Lin, I‐Fang Hsin, Ping‐Hsien Chen, Yuan-Chia Chu, David Lu, Yuan‐Jen Wang, Ming‐Chih Hou, Fa‐Yauh Lee, Ching‐Liang Lu (List of authors in order)
- Landing page: https://doi.org/10.2196/preprints.29326 (Publisher landing page)
- Open access: Yes (Whether a free full text is available)
- OA status: gold (Open access status per OpenAlex)
- OA URL: https://doi.org/10.2196/preprints.29326 (Direct OA link when available)
- Concepts: Artificial intelligence, Segmentation, Computer science, Convolutional neural network, Colonoscopy, Deep learning, Pattern recognition (psychology), Computer vision, Medicine, Colorectal cancer, Internal medicine, Cancer (Top concepts (fields/topics) attached by OpenAlex)
- Cited by: 0 (Total citation count in OpenAlex)
- References (count): 42 (Number of works referenced by this work)
- Related works (count): 10 (Other works algorithmically related by OpenAlex)

Full payload

| id | https://openalex.org/W4205146942 |

|---|---|

| doi | https://doi.org/10.2196/preprints.29326 |

| ids.doi | https://doi.org/10.2196/preprints.29326 |

| ids.openalex | https://openalex.org/W4205146942 |

| fwci | 0.0 |

| type | preprint |

| title | Use of U-Net Convolutional Neural Networks for Automated Segmentation of Fecal Material to Objective Evaluation of Bowel Preparation Quality in Colonoscopy (Preprint) |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T10552 |

| topics[0].field.id | https://openalex.org/fields/27 |

| topics[0].field.display_name | Medicine |

| topics[0].score | 0.9998999834060669 |

| topics[0].domain.id | https://openalex.org/domains/4 |

| topics[0].domain.display_name | Health Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/2730 |

| topics[0].subfield.display_name | Oncology |

| topics[0].display_name | Colorectal Cancer Screening and Detection |

| topics[1].id | https://openalex.org/T10696 |

| topics[1].field.id | https://openalex.org/fields/27 |

| topics[1].field.display_name | Medicine |

| topics[1].score | 0.9940999746322632 |

| topics[1].domain.id | https://openalex.org/domains/4 |

| topics[1].domain.display_name | Health Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/2740 |

| topics[1].subfield.display_name | Pulmonary and Respiratory Medicine |

| topics[1].display_name | Gastric Cancer Management and Outcomes |

| topics[2].id | https://openalex.org/T12422 |

| topics[2].field.id | https://openalex.org/fields/27 |

| topics[2].field.display_name | Medicine |

| topics[2].score | 0.9865999817848206 |

| topics[2].domain.id | https://openalex.org/domains/4 |

| topics[2].domain.display_name | Health Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/2741 |

| topics[2].subfield.display_name | Radiology, Nuclear Medicine and Imaging |

| topics[2].display_name | Radiomics and Machine Learning in Medical Imaging |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C154945302 |

| concepts[0].level | 1 |

| concepts[0].score | 0.7378981113433838 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[0].display_name | Artificial intelligence |

| concepts[1].id | https://openalex.org/C89600930 |

| concepts[1].level | 2 |

| concepts[1].score | 0.7155000567436218 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q1423946 |

| concepts[1].display_name | Segmentation |

| concepts[2].id | https://openalex.org/C41008148 |

| concepts[2].level | 0 |

| concepts[2].score | 0.6809830069541931 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[2].display_name | Computer science |

| concepts[3].id | https://openalex.org/C81363708 |

| concepts[3].level | 2 |

| concepts[3].score | 0.645759105682373 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q17084460 |

| concepts[3].display_name | Convolutional neural network |

| concepts[4].id | https://openalex.org/C2778435480 |

| concepts[4].level | 4 |

| concepts[4].score | 0.525102972984314 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q840387 |

| concepts[4].display_name | Colonoscopy |

| concepts[5].id | https://openalex.org/C108583219 |

| concepts[5].level | 2 |

| concepts[5].score | 0.44716182351112366 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q197536 |

| concepts[5].display_name | Deep learning |

| concepts[6].id | https://openalex.org/C153180895 |

| concepts[6].level | 2 |

| concepts[6].score | 0.4222366213798523 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q7148389 |

| concepts[6].display_name | Pattern recognition (psychology) |

| concepts[7].id | https://openalex.org/C31972630 |

| concepts[7].level | 1 |

| concepts[7].score | 0.4075758457183838 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q844240 |

| concepts[7].display_name | Computer vision |

| concepts[8].id | https://openalex.org/C71924100 |

| concepts[8].level | 0 |

| concepts[8].score | 0.20718088746070862 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q11190 |

| concepts[8].display_name | Medicine |

| concepts[9].id | https://openalex.org/C526805850 |

| concepts[9].level | 3 |

| concepts[9].score | 0.14142706990242004 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q188874 |

| concepts[9].display_name | Colorectal cancer |

| concepts[10].id | https://openalex.org/C126322002 |

| concepts[10].level | 1 |

| concepts[10].score | 0.08139440417289734 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q11180 |

| concepts[10].display_name | Internal medicine |

| concepts[11].id | https://openalex.org/C121608353 |

| concepts[11].level | 2 |

| concepts[11].score | 0.0 |

| concepts[11].wikidata | https://www.wikidata.org/wiki/Q12078 |

| concepts[11].display_name | Cancer |

| keywords[0].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[0].score | 0.7378981113433838 |

| keywords[0].display_name | Artificial intelligence |

| keywords[1].id | https://openalex.org/keywords/segmentation |

| keywords[1].score | 0.7155000567436218 |

| keywords[1].display_name | Segmentation |

| keywords[2].id | https://openalex.org/keywords/computer-science |

| keywords[2].score | 0.6809830069541931 |

| keywords[2].display_name | Computer science |

| keywords[3].id | https://openalex.org/keywords/convolutional-neural-network |

| keywords[3].score | 0.645759105682373 |

| keywords[3].display_name | Convolutional neural network |

| keywords[4].id | https://openalex.org/keywords/colonoscopy |

| keywords[4].score | 0.525102972984314 |

| keywords[4].display_name | Colonoscopy |

| keywords[5].id | https://openalex.org/keywords/deep-learning |

| keywords[5].score | 0.44716182351112366 |

| keywords[5].display_name | Deep learning |

| keywords[6].id | https://openalex.org/keywords/pattern-recognition |

| keywords[6].score | 0.4222366213798523 |

| keywords[6].display_name | Pattern recognition (psychology) |

| keywords[7].id | https://openalex.org/keywords/computer-vision |

| keywords[7].score | 0.4075758457183838 |

| keywords[7].display_name | Computer vision |

| keywords[8].id | https://openalex.org/keywords/medicine |

| keywords[8].score | 0.20718088746070862 |

| keywords[8].display_name | Medicine |

| keywords[9].id | https://openalex.org/keywords/colorectal-cancer |

| keywords[9].score | 0.14142706990242004 |

| keywords[9].display_name | Colorectal cancer |

| keywords[10].id | https://openalex.org/keywords/internal-medicine |

| keywords[10].score | 0.08139440417289734 |

| keywords[10].display_name | Internal medicine |

| language | en |

| locations[0].id | doi:10.2196/preprints.29326 |

| locations[0].is_oa | True |

| locations[0].source | |

| locations[0].license | cc-by |

| locations[0].pdf_url | |

| locations[0].version | acceptedVersion |

| locations[0].raw_type | posted-content |

| locations[0].license_id | https://openalex.org/licenses/cc-by |

| locations[0].is_accepted | True |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | https://doi.org/10.2196/preprints.29326 |

| indexed_in | crossref |

| authorships[0].author.id | https://openalex.org/A5025513270 |

| authorships[0].author.orcid | https://orcid.org/0000-0002-3769-0020 |

| authorships[0].author.display_name | Yen‐Po Wang |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Yen-Po Wang |

| authorships[0].is_corresponding | False |

| authorships[1].author.id | https://openalex.org/A5072833206 |

| authorships[1].author.orcid | https://orcid.org/0000-0002-2883-4432 |

| authorships[1].author.display_name | Ying-Chun Jheng |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Ying-Chun Jheng |

| authorships[1].is_corresponding | False |

| authorships[2].author.id | https://openalex.org/A5089641395 |

| authorships[2].author.orcid | https://orcid.org/0000-0003-1100-6152 |

| authorships[2].author.display_name | Kuang‐Yi Sung |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Kuang-Yi Sung |

| authorships[2].is_corresponding | False |

| authorships[3].author.id | https://openalex.org/A5102010003 |

| authorships[3].author.orcid | https://orcid.org/0000-0001-7590-8791 |

| authorships[3].author.display_name | Hung-En Lin |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Hung-En Lin |

| authorships[3].is_corresponding | False |

| authorships[4].author.id | https://openalex.org/A5113808750 |

| authorships[4].author.orcid | |

| authorships[4].author.display_name | I‐Fang Hsin |

| authorships[4].author_position | middle |

| authorships[4].raw_author_name | I-Fang Hsin |

| authorships[4].is_corresponding | False |

| authorships[5].author.id | https://openalex.org/A5026715232 |

| authorships[5].author.orcid | |

| authorships[5].author.display_name | Ping‐Hsien Chen |

| authorships[5].author_position | middle |

| authorships[5].raw_author_name | Ping-Hsien Chen |

| authorships[5].is_corresponding | False |

| authorships[6].author.id | https://openalex.org/A5059739856 |

| authorships[6].author.orcid | https://orcid.org/0000-0002-0997-5168 |

| authorships[6].author.display_name | Yuan-Chia Chu |

| authorships[6].author_position | middle |

| authorships[6].raw_author_name | Yuan-Chia Chu |

| authorships[6].is_corresponding | False |

| authorships[7].author.id | https://openalex.org/A5034317065 |

| authorships[7].author.orcid | https://orcid.org/0000-0001-8594-1384 |

| authorships[7].author.display_name | David Lu |

| authorships[7].author_position | middle |

| authorships[7].raw_author_name | David Lu |

| authorships[7].is_corresponding | False |

| authorships[8].author.id | https://openalex.org/A5023577493 |

| authorships[8].author.orcid | |

| authorships[8].author.display_name | Yuan‐Jen Wang |

| authorships[8].author_position | middle |

| authorships[8].raw_author_name | Yuan-Jen Wang |

| authorships[8].is_corresponding | False |

| authorships[9].author.id | https://openalex.org/A5111816794 |

| authorships[9].author.orcid | |

| authorships[9].author.display_name | Ming‐Chih Hou |

| authorships[9].author_position | middle |

| authorships[9].raw_author_name | Ming-Chih Hou |

| authorships[9].is_corresponding | False |

| authorships[10].author.id | https://openalex.org/A5109963985 |

| authorships[10].author.orcid | |

| authorships[10].author.display_name | Fa‐Yauh Lee |

| authorships[10].author_position | middle |

| authorships[10].raw_author_name | Fa-Yauh Lee |

| authorships[10].is_corresponding | False |

| authorships[11].author.id | https://openalex.org/A5087221607 |

| authorships[11].author.orcid | https://orcid.org/0000-0001-9335-7241 |

| authorships[11].author.display_name | Ching‐Liang Lu |

| authorships[11].author_position | last |

| authorships[11].raw_author_name | Ching-Liang Lu |

| authorships[11].is_corresponding | False |

| has_content.pdf | False |

| has_content.grobid_xml | False |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://doi.org/10.2196/preprints.29326 |

| open_access.oa_status | gold |

| open_access.any_repository_has_fulltext | False |

| created_date | 2025-10-10T00:00:00 |

| display_name | Use of U-Net Convolutional Neural Networks for Automated Segmentation of Fecal Material to Objective Evaluation of Bowel Preparation Quality in Colonoscopy (Preprint) |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T03:46:38.306776 |

| primary_topic.id | https://openalex.org/T10552 |

| primary_topic.field.id | https://openalex.org/fields/27 |

| primary_topic.field.display_name | Medicine |

| primary_topic.score | 0.9998999834060669 |

| primary_topic.domain.id | https://openalex.org/domains/4 |

| primary_topic.domain.display_name | Health Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/2730 |

| primary_topic.subfield.display_name | Oncology |

| primary_topic.display_name | Colorectal Cancer Screening and Detection |

| related_works | https://openalex.org/W2381279477, https://openalex.org/W3016587774, https://openalex.org/W2999920852, https://openalex.org/W4226493464, https://openalex.org/W4312417841, https://openalex.org/W3193565141, https://openalex.org/W3133861977, https://openalex.org/W3167935049, https://openalex.org/W3029198973, https://openalex.org/W4315434538 |

| cited_by_count | 0 |

| locations_count | 1 |

| best_oa_location.id | doi:10.2196/preprints.29326 |

| best_oa_location.is_oa | True |

| best_oa_location.source | |

| best_oa_location.license | cc-by |

| best_oa_location.pdf_url | |

| best_oa_location.version | acceptedVersion |

| best_oa_location.raw_type | posted-content |

| best_oa_location.license_id | https://openalex.org/licenses/cc-by |

| best_oa_location.is_accepted | True |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | https://doi.org/10.2196/preprints.29326 |

| primary_location.id | doi:10.2196/preprints.29326 |

| primary_location.is_oa | True |

| primary_location.source | |

| primary_location.license | cc-by |

| primary_location.pdf_url | |

| primary_location.version | acceptedVersion |

| primary_location.raw_type | posted-content |

| primary_location.license_id | https://openalex.org/licenses/cc-by |

| primary_location.is_accepted | True |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | https://doi.org/10.2196/preprints.29326 |

| publication_date | 2021-04-02 |

| publication_year | 2021 |

| referenced_works | https://openalex.org/W2109453679, https://openalex.org/W2290774643, https://openalex.org/W2066194774, https://openalex.org/W2138141964, https://openalex.org/W2161185314, https://openalex.org/W1971606269, https://openalex.org/W2130543432, https://openalex.org/W2138134327, https://openalex.org/W2105961063, https://openalex.org/W2089290423, https://openalex.org/W2117920692, https://openalex.org/W2105410691, https://openalex.org/W2851918126, https://openalex.org/W1940447055, https://openalex.org/W2654136388, https://openalex.org/W2973140425, https://openalex.org/W3002021892, https://openalex.org/W2762406675, https://openalex.org/W2765527079, https://openalex.org/W2809105978, https://openalex.org/W2990396600, https://openalex.org/W2909244736, https://openalex.org/W2165188436, https://openalex.org/W1901129140, https://openalex.org/W2291833043, https://openalex.org/W2048326268, https://openalex.org/W2792546526, https://openalex.org/W2613456556, https://openalex.org/W2886281300, https://openalex.org/W2011237852, https://openalex.org/W2884530895, https://openalex.org/W2989631244, https://openalex.org/W3012192396, https://openalex.org/W2979829558, https://openalex.org/W3007028022, https://openalex.org/W2345368424, https://openalex.org/W2557738935, https://openalex.org/W2797069348, https://openalex.org/W2904843285, https://openalex.org/W2410282112, https://openalex.org/W2110693908, https://openalex.org/W2894010682 |

| referenced_works_count | 42 |

| abstract_inverted_index.A | 228 |

| abstract_inverted_index.a | 8, 25, 56, 84, 155, 255, 319 |

| abstract_inverted_index.AI | 181, 193, 281, 293 |

| abstract_inverted_index.We | 48, 313 |

| abstract_inverted_index.an | 167, 267 |

| abstract_inverted_index.be | 196, 296 |

| abstract_inverted_index.by | 109, 179, 207, 279, 307 |

| abstract_inverted_index.in | 28, 43 |

| abstract_inverted_index.is | 5 |

| abstract_inverted_index.of | 11, 70, 73, 130, 172, 211, 230, 272, 336 |

| abstract_inverted_index.on | 116, 217 |

| abstract_inverted_index.to | 50, 54, 61, 90, 124, 147, 162, 199, 247, 262, 299, 317, 324 |

| abstract_inverted_index.± | 164, 174, 264, 274 |

| abstract_inverted_index.119 | 135, 235 |

| abstract_inverted_index.The | 103, 142, 152, 176, 192, 242, 252, 276, 292 |

| abstract_inverted_index.aim | 49 |

| abstract_inverted_index.and | 32, 88, 100, 139, 201, 239, 301, 326 |

| abstract_inverted_index.are | 22 |

| abstract_inverted_index.can | 195, 295 |

| abstract_inverted_index.for | 7, 67, 333 |

| abstract_inverted_index.has | 39 |

| abstract_inverted_index.low | 33 |

| abstract_inverted_index.one | 149, 249 |

| abstract_inverted_index.our | 180, 280 |

| abstract_inverted_index.the | 12, 63, 71, 117, 186, 204, 212, 218, 222, 286, 304, 329 |

| abstract_inverted_index.use | 51 |

| abstract_inverted_index.was | 106, 122, 215 |

| abstract_inverted_index.(AI) | 38 |

| abstract_inverted_index.Deep | 112 |

| abstract_inverted_index.TheA | 128 |

| abstract_inverted_index.area | 158, 177, 187, 220, 258, 277, 287 |

| abstract_inverted_index.been | 40 |

| abstract_inverted_index.data | 86 |

| abstract_inverted_index.from | 83, 134, 234 |

| abstract_inverted_index.into | 97 |

| abstract_inverted_index.mark | 62, 328 |

| abstract_inverted_index.over | 169, 269 |

| abstract_inverted_index.used | 42, 314 |

| abstract_inverted_index.well | 184, 284 |

| abstract_inverted_index.were | 81, 94, 137, 237 |

| abstract_inverted_index.wide | 26 |

| abstract_inverted_index.with | 24, 159, 166, 185, 221, 259, 266, 285 |

| abstract_inverted_index.(IOU) | 171, 271 |

| abstract_inverted_index.0.17. | 175, 275 |

| abstract_inverted_index.0.607 | 173, 273 |

| abstract_inverted_index.0.67% | 165, 265 |

| abstract_inverted_index.94.7% | 163, 263 |

| abstract_inverted_index.<sec> | 0, 46, 77, 226, 311 |

| abstract_inverted_index.U-Net | 118 |

| abstract_inverted_index.among | 30 |

| abstract_inverted_index.based | 115 |

| abstract_inverted_index.bowel | 3, 18 |

| abstract_inverted_index.colon | 13, 74, 337 |

| abstract_inverted_index.fecal | 64, 104, 208, 308, 330 |

| abstract_inverted_index.fully | 57, 320 |

| abstract_inverted_index.image | 150, 250 |

| abstract_inverted_index.model | 114, 143, 182, 243, 282 |

| abstract_inverted_index.rate. | 35 |

| abstract_inverted_index.total | 129, 229 |

| abstract_inverted_index.union | 170, 270 |

| abstract_inverted_index.video | 85 |

| abstract_inverted_index.which | 93 |

| abstract_inverted_index.0.3634 | 145, 245 |

| abstract_inverted_index.10,118 | 131, 231 |

| abstract_inverted_index.</sec> | 45, 76, 225, 310, 339 |

| abstract_inverted_index.cohort | 87 |

| abstract_inverted_index.during | 15 |

| abstract_inverted_index.images | 133, 233 |

| abstract_inverted_index.manual | 160, 223, 260 |

| abstract_inverted_index.method | 60, 323 |

| abstract_inverted_index.models | 153, 253 |

| abstract_inverted_index.mucosa | 14, 66, 205, 305, 332 |

| abstract_inverted_index.scales | 21 |

| abstract_inverted_index.strong | 156, 256 |

| abstract_inverted_index.system | 194, 294 |

| abstract_inverted_index.videos | 80, 136, 236 |

| abstract_inverted_index.Current | 17 |

| abstract_inverted_index.applied | 197, 297 |

| abstract_inverted_index.covered | 206, 306 |

| abstract_inverted_index.develop | 55 |

| abstract_inverted_index.display | 203, 303 |

| abstract_inverted_index.divided | 96 |

| abstract_inverted_index.images, | 92 |

| abstract_inverted_index.machine | 52, 315 |

| abstract_inverted_index.network | 120 |

| abstract_inverted_index.overlap | 219 |

| abstract_inverted_index.perform | 125 |

| abstract_inverted_index.rapidly | 325 |

| abstract_inverted_index.residue | 105 |

| abstract_inverted_index.seconds | 146, 246 |

| abstract_inverted_index.skilled | 110 |

| abstract_inverted_index.Adequate | 2 |

| abstract_inverted_index.adequacy | 72 |

| abstract_inverted_index.averaged | 144, 244 |

| abstract_inverted_index.complete | 9 |

| abstract_inverted_index.labelled | 140, 240 |

| abstract_inverted_index.learning | 53, 113, 316 |

| abstract_inverted_index.manually | 107, 189, 289 |

| abstract_inverted_index.measured | 188, 288 |

| abstract_inverted_index.produced | 154, 254 |

| abstract_inverted_index.randomly | 95 |

| abstract_inverted_index.reported | 34 |

| abstract_inverted_index.residue. | 209, 309 |

| abstract_inverted_index.(r=0.915, | 190, 290 |

| abstract_inverted_index.automatic | 58, 126, 213, 321 |

| abstract_inverted_index.captured, | 138, 238 |

| abstract_inverted_index.cleansing | 4, 19 |

| abstract_inverted_index.datasets. | 102 |

| abstract_inverted_index.developed | 123 |

| abstract_inverted_index.establish | 318 |

| abstract_inverted_index.evaluated | 216 |

| abstract_inverted_index.important | 6 |

| abstract_inverted_index.manually. | 141, 241 |

| abstract_inverted_index.objective | 68, 334 |

| abstract_inverted_index.predicted | 178, 278 |

| abstract_inverted_index.qualified | 91, 132, 232 |

| abstract_inverted_index.real-time | 198, 298 |

| abstract_inverted_index.retrieved | 82 |

| abstract_inverted_index.segmented | 108 |

| abstract_inverted_index.training, | 98 |

| abstract_inverted_index.variation | 27 |

| abstract_inverted_index.Artificial | 36 |

| abstract_inverted_index.accurately | 327 |

| abstract_inverted_index.correlated | 183, 283 |

| abstract_inverted_index.endoscopy. | 44 |

| abstract_inverted_index.evaluation | 20, 69, 335 |

| abstract_inverted_index.physicians | 31 |

| abstract_inverted_index.segmentate | 148, 248 |

| abstract_inverted_index.subjective | 23 |

| abstract_inverted_index.validation | 99 |

| abstract_inverted_index.Colonoscopy | 79 |

| abstract_inverted_index.consistency | 29 |

| abstract_inverted_index.examination | 10 |

| abstract_inverted_index.performance | 210 |

| abstract_inverted_index.transferred | 89 |

| abstract_inverted_index.architecture | 121 |

| abstract_inverted_index.colonoscopy. | 16 |

| abstract_inverted_index.high-overlap | 157, 257 |

| abstract_inverted_index.increasingly | 41 |

| abstract_inverted_index.intelligence | 37 |

| abstract_inverted_index.intersection | 168, 268 |

| abstract_inverted_index.p<0.001). | 191, 291 |

| abstract_inverted_index.preparation. | 75, 338 |

| abstract_inverted_index.segmentation | 59, 161, 214, 261, 322 |

| abstract_inverted_index.technicians. | 111 |

| abstract_inverted_index.verification | 101 |

| abstract_inverted_index.convolutional | 119 |

| abstract_inverted_index.qualitatively | 200, 300 |

| abstract_inverted_index.segmentation. | 127, 224 |

| abstract_inverted_index.automatically. | 151, 251 |

| abstract_inverted_index.quantitatively | 202, 302 |

| abstract_inverted_index.residue-coated | 65, 331 |

| abstract_inverted_index.<title>METHODS</title> | 78 |

| abstract_inverted_index.<title>RESULTS</title> | 227 |

| abstract_inverted_index.<title>OBJECTIVE</title> | 47 |

| abstract_inverted_index.<title>BACKGROUND</title> | 1 |

| abstract_inverted_index.<title>CONCLUSIONS</title> | 312 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 12 |

| citation_normalized_percentile.value | 0.24862946 |

| citation_normalized_percentile.is_in_top_1_percent | False |

| citation_normalized_percentile.is_in_top_10_percent | False |
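The `abstract_inverted_index.*` rows in the payload store the abstract as a map from each token to the word positions where it occurs. A minimal sketch of turning such a map back into running text follows. The function name is hypothetical, and `sample` is a truncated excerpt covering only positions 2 to 10 of the index shown in the payload, not the full record.

```python
from typing import Dict, List


def rebuild_abstract(inverted_index: Dict[str, List[int]]) -> str:
    """Rebuild text from a token -> positions map by ordering tokens by position."""
    by_position: Dict[int, str] = {}
    for token, positions in inverted_index.items():
        for position in positions:
            by_position[position] = token
    return " ".join(by_position[i] for i in sorted(by_position))


# Truncated excerpt of the payload (positions 2-10 only), for illustration.
sample = {
    "Adequate": [2],
    "bowel": [3],
    "cleansing": [4],
    "is": [5],
    "important": [6],
    "for": [7],
    "a": [8],
    "complete": [9],
    "examination": [10],
}

print(rebuild_abstract(sample))
```

The full index for this record also contains markup tokens such as `<sec>` and `<title>BACKGROUND</title>`, so a complete reconstruction would need to strip or interpret those tags separately.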