VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models

2024 · Open Access · DOI: https://doi.org/10.48550/arxiv.2409.17066

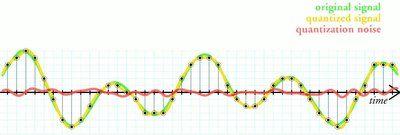

Scaling model size significantly challenges the deployment and inference of Large Language Models (LLMs). Because LLM weights are redundant, recent research has focused on pushing weight-only quantization to extremely low bit-widths (even down to 2 bits). Doing so reduces memory requirements, lowers storage costs, and decreases memory bandwidth needs during inference. However, due to the limitations of numerical representation, traditional scalar-based weight quantization struggles to reach such extremely low bit-widths. Recent research on Vector Quantization (VQ) for LLMs has demonstrated the potential for extremely low-bit model quantization by compressing vectors into indices using lookup tables. In this paper, we introduce Vector Post-Training Quantization (VPTQ) for extremely low-bit quantization of LLMs. We use Second-Order Optimization to formulate the LLM VQ problem and guide our quantization algorithm design by solving the optimization. We further refine the weights using Channel-Independent Second-Order Optimization for a granular VQ. In addition, by decomposing the optimization problem, we propose a brief and effective codebook initialization algorithm. We also extend VPTQ to support residual and outlier quantization, which enhances model accuracy and further compresses the model. Our experimental results show that, at 2-bit, VPTQ reduces model quantization perplexity over SOTA by $0.01$-$0.34$ on LLaMA-2, $0.38$-$0.68$ on Mistral-7B, and $4.41$-$7.34$ on LLaMA-3, with an average accuracy improvement on QA tasks of $0.79$-$1.5\%$ on LLaMA-2, $1\%$ on Mistral-7B, and $11$-$22\%$ on LLaMA-3. VPTQ uses only $10.4$-$18.6\%$ of the quantization algorithm execution time and achieves a $1.6$-$1.8\times$ increase in inference throughput compared to SOTA.
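The abstract's core idea, compressing weight vectors into indices that point into a lookup table, can be illustrated with a minimal sketch. It is not the VPTQ algorithm: the codebook here comes from plain k-means, used as a stand-in for the second-order optimization the paper describes, and the function names (`quantize`, `dequantize`, `index_bits_per_weight`), the vector length `v`, and the codebook size `k` are assumptions chosen for illustration.

```python
import numpy as np


def assign(vectors, codebook):
    """Return, for each vector, the index of its nearest centroid."""
    # Pairwise squared Euclidean distances, shape (num_vectors, k).
    diff = vectors[:, None, :] - codebook[None, :, :]
    return (diff ** 2).sum(axis=-1).argmin(axis=1)


def build_codebook(vectors, k, iters=10, seed=0):
    """Plain k-means (Lloyd's algorithm). ASSUMPTION: a stand-in only;
    it is not the second-order optimization described in the abstract."""
    rng = np.random.default_rng(seed)
    start = rng.choice(len(vectors), size=k, replace=False)
    codebook = vectors[start].copy()
    for _ in range(iters):
        idx = assign(vectors, codebook)
        for j in range(k):
            members = vectors[idx == j]
            if len(members):  # keep the old centroid if a cluster is empty
                codebook[j] = members.mean(axis=0)
    return codebook


def quantize(weight, v, k):
    """Split a weight matrix into length-v vectors and store one index each."""
    if weight.size % v != 0:
        raise ValueError("weight size must be divisible by v")
    vectors = weight.reshape(-1, v)
    codebook = build_codebook(vectors, k)
    return assign(vectors, codebook), codebook


def dequantize(indices, codebook, shape):
    """Rebuild an approximate weight matrix by table lookup."""
    return codebook[indices].reshape(shape)


def index_bits_per_weight(v, k):
    """Bits spent on indices per weight; codebook storage is extra."""
    return float(np.log2(k)) / v


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    weight = rng.standard_normal((64, 64))
    indices, codebook = quantize(weight, v=8, k=16)
    restored = dequantize(indices, codebook, weight.shape)
    print("mean squared error:", float(((weight - restored) ** 2).mean()))
    print("index bits per weight:", index_bits_per_weight(8, 16))
```

The index cost is $\log_2(k)/v$ bits per weight, so a hypothetical setting of `v=8` with `k=65536` spends 2 bits per weight on indices. The codebook itself adds a storage overhead that this count ignores.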

Related Topics

- Type: preprint

- Language: en

- Landing Page: http://arxiv.org/abs/2409.17066

- PDF URL: https://arxiv.org/pdf/2409.17066

- OA Status: green

- Related Works: 10

- OpenAlex ID: https://openalex.org/W4403785034

Raw OpenAlex JSON

| Field | Value | Description |
|---|---|---|
| OpenAlex ID | https://openalex.org/W4403785034 | Canonical identifier for this work in OpenAlex |
| DOI | https://doi.org/10.48550/arxiv.2409.17066 | Digital Object Identifier |
| Title | VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models | Work title |
| Type | preprint | OpenAlex work type |
| Language | en | Primary language |
| Publication year | 2024 | Year of publication |
| Publication date | 2024-09-25 | Full publication date if available |
| Authors | Yifei Liu, Jicheng Wen, Yang Wang, Shengyu Ye, Li Lyna Zhang, Ting Cao, Cheng Li, Mao Yang | List of authors in order |
| Landing page | https://arxiv.org/abs/2409.17066 | Publisher landing page |
| PDF URL | https://arxiv.org/pdf/2409.17066 | Direct link to full text PDF |
| Open access | Yes | Whether a free full text is available |
| OA status | green | Open access status per OpenAlex |
| OA URL | https://arxiv.org/pdf/2409.17066 | Direct OA link when available |
| Concepts | Vector quantization, Bit (key), Computer science, Quantization (signal processing), Training (meteorology), Artificial intelligence, Theoretical computer science, Algorithm, Physics, Computer security, Meteorology | Top concepts (fields/topics) attached by OpenAlex |
| Cited by | 0 | Total citation count in OpenAlex |
| Related works (count) | 10 | Other works algorithmically related by OpenAlex |

Full payload

| id | https://openalex.org/W4403785034 |

|---|---|

| doi | https://doi.org/10.48550/arxiv.2409.17066 |

| ids.doi | https://doi.org/10.48550/arxiv.2409.17066 |

| ids.openalex | https://openalex.org/W4403785034 |

| fwci | |

| type | preprint |

| title | VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models |

| biblio.issue | |

| biblio.volume | |

| biblio.last_page | |

| biblio.first_page | |

| topics[0].id | https://openalex.org/T10201 |

| topics[0].field.id | https://openalex.org/fields/17 |

| topics[0].field.display_name | Computer Science |

| topics[0].score | 0.9836999773979187 |

| topics[0].domain.id | https://openalex.org/domains/3 |

| topics[0].domain.display_name | Physical Sciences |

| topics[0].subfield.id | https://openalex.org/subfields/1702 |

| topics[0].subfield.display_name | Artificial Intelligence |

| topics[0].display_name | Speech Recognition and Synthesis |

| topics[1].id | https://openalex.org/T10028 |

| topics[1].field.id | https://openalex.org/fields/17 |

| topics[1].field.display_name | Computer Science |

| topics[1].score | 0.9460999965667725 |

| topics[1].domain.id | https://openalex.org/domains/3 |

| topics[1].domain.display_name | Physical Sciences |

| topics[1].subfield.id | https://openalex.org/subfields/1702 |

| topics[1].subfield.display_name | Artificial Intelligence |

| topics[1].display_name | Topic Modeling |

| topics[2].id | https://openalex.org/T10181 |

| topics[2].field.id | https://openalex.org/fields/17 |

| topics[2].field.display_name | Computer Science |

| topics[2].score | 0.9172999858856201 |

| topics[2].domain.id | https://openalex.org/domains/3 |

| topics[2].domain.display_name | Physical Sciences |

| topics[2].subfield.id | https://openalex.org/subfields/1702 |

| topics[2].subfield.display_name | Artificial Intelligence |

| topics[2].display_name | Natural Language Processing Techniques |

| is_xpac | False |

| apc_list | |

| apc_paid | |

| concepts[0].id | https://openalex.org/C199833920 |

| concepts[0].level | 2 |

| concepts[0].score | 0.6332188844680786 |

| concepts[0].wikidata | https://www.wikidata.org/wiki/Q612536 |

| concepts[0].display_name | Vector quantization |

| concepts[1].id | https://openalex.org/C117011727 |

| concepts[1].level | 2 |

| concepts[1].score | 0.6312629580497742 |

| concepts[1].wikidata | https://www.wikidata.org/wiki/Q1278488 |

| concepts[1].display_name | Bit (key) |

| concepts[2].id | https://openalex.org/C41008148 |

| concepts[2].level | 0 |

| concepts[2].score | 0.5909942984580994 |

| concepts[2].wikidata | https://www.wikidata.org/wiki/Q21198 |

| concepts[2].display_name | Computer science |

| concepts[3].id | https://openalex.org/C28855332 |

| concepts[3].level | 2 |

| concepts[3].score | 0.5302079916000366 |

| concepts[3].wikidata | https://www.wikidata.org/wiki/Q198099 |

| concepts[3].display_name | Quantization (signal processing) |

| concepts[4].id | https://openalex.org/C2777211547 |

| concepts[4].level | 2 |

| concepts[4].score | 0.42140626907348633 |

| concepts[4].wikidata | https://www.wikidata.org/wiki/Q17141490 |

| concepts[4].display_name | Training (meteorology) |

| concepts[5].id | https://openalex.org/C154945302 |

| concepts[5].level | 1 |

| concepts[5].score | 0.3923777639865875 |

| concepts[5].wikidata | https://www.wikidata.org/wiki/Q11660 |

| concepts[5].display_name | Artificial intelligence |

| concepts[6].id | https://openalex.org/C80444323 |

| concepts[6].level | 1 |

| concepts[6].score | 0.32251912355422974 |

| concepts[6].wikidata | https://www.wikidata.org/wiki/Q2878974 |

| concepts[6].display_name | Theoretical computer science |

| concepts[7].id | https://openalex.org/C11413529 |

| concepts[7].level | 1 |

| concepts[7].score | 0.3011298179626465 |

| concepts[7].wikidata | https://www.wikidata.org/wiki/Q8366 |

| concepts[7].display_name | Algorithm |

| concepts[8].id | https://openalex.org/C121332964 |

| concepts[8].level | 0 |

| concepts[8].score | 0.10586628317832947 |

| concepts[8].wikidata | https://www.wikidata.org/wiki/Q413 |

| concepts[8].display_name | Physics |

| concepts[9].id | https://openalex.org/C38652104 |

| concepts[9].level | 1 |

| concepts[9].score | 0.07354468107223511 |

| concepts[9].wikidata | https://www.wikidata.org/wiki/Q3510521 |

| concepts[9].display_name | Computer security |

| concepts[10].id | https://openalex.org/C153294291 |

| concepts[10].level | 1 |

| concepts[10].score | 0.0 |

| concepts[10].wikidata | https://www.wikidata.org/wiki/Q25261 |

| concepts[10].display_name | Meteorology |

| keywords[0].id | https://openalex.org/keywords/vector-quantization |

| keywords[0].score | 0.6332188844680786 |

| keywords[0].display_name | Vector quantization |

| keywords[1].id | https://openalex.org/keywords/bit |

| keywords[1].score | 0.6312629580497742 |

| keywords[1].display_name | Bit (key) |

| keywords[2].id | https://openalex.org/keywords/computer-science |

| keywords[2].score | 0.5909942984580994 |

| keywords[2].display_name | Computer science |

| keywords[3].id | https://openalex.org/keywords/quantization |

| keywords[3].score | 0.5302079916000366 |

| keywords[3].display_name | Quantization (signal processing) |

| keywords[4].id | https://openalex.org/keywords/training |

| keywords[4].score | 0.42140626907348633 |

| keywords[4].display_name | Training (meteorology) |

| keywords[5].id | https://openalex.org/keywords/artificial-intelligence |

| keywords[5].score | 0.3923777639865875 |

| keywords[5].display_name | Artificial intelligence |

| keywords[6].id | https://openalex.org/keywords/theoretical-computer-science |

| keywords[6].score | 0.32251912355422974 |

| keywords[6].display_name | Theoretical computer science |

| keywords[7].id | https://openalex.org/keywords/algorithm |

| keywords[7].score | 0.3011298179626465 |

| keywords[7].display_name | Algorithm |

| keywords[8].id | https://openalex.org/keywords/physics |

| keywords[8].score | 0.10586628317832947 |

| keywords[8].display_name | Physics |

| keywords[9].id | https://openalex.org/keywords/computer-security |

| keywords[9].score | 0.07354468107223511 |

| keywords[9].display_name | Computer security |

| language | en |

| locations[0].id | pmh:oai:arXiv.org:2409.17066 |

| locations[0].is_oa | True |

| locations[0].source.id | https://openalex.org/S4306400194 |

| locations[0].source.issn | |

| locations[0].source.type | repository |

| locations[0].source.is_oa | True |

| locations[0].source.issn_l | |

| locations[0].source.is_core | False |

| locations[0].source.is_in_doaj | False |

| locations[0].source.display_name | arXiv (Cornell University) |

| locations[0].source.host_organization | https://openalex.org/I205783295 |

| locations[0].source.host_organization_name | Cornell University |

| locations[0].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[0].license | cc-by |

| locations[0].pdf_url | https://arxiv.org/pdf/2409.17066 |

| locations[0].version | submittedVersion |

| locations[0].raw_type | text |

| locations[0].license_id | https://openalex.org/licenses/cc-by |

| locations[0].is_accepted | False |

| locations[0].is_published | False |

| locations[0].raw_source_name | |

| locations[0].landing_page_url | http://arxiv.org/abs/2409.17066 |

| locations[1].id | doi:10.48550/arxiv.2409.17066 |

| locations[1].is_oa | True |

| locations[1].source.id | https://openalex.org/S4306400194 |

| locations[1].source.issn | |

| locations[1].source.type | repository |

| locations[1].source.is_oa | True |

| locations[1].source.issn_l | |

| locations[1].source.is_core | False |

| locations[1].source.is_in_doaj | False |

| locations[1].source.display_name | arXiv (Cornell University) |

| locations[1].source.host_organization | https://openalex.org/I205783295 |

| locations[1].source.host_organization_name | Cornell University |

| locations[1].source.host_organization_lineage | https://openalex.org/I205783295 |

| locations[1].license | cc-by |

| locations[1].pdf_url | |

| locations[1].version | |

| locations[1].raw_type | article |

| locations[1].license_id | https://openalex.org/licenses/cc-by |

| locations[1].is_accepted | False |

| locations[1].is_published | |

| locations[1].raw_source_name | |

| locations[1].landing_page_url | https://doi.org/10.48550/arxiv.2409.17066 |

| indexed_in | arxiv, datacite |

| authorships[0].author.id | https://openalex.org/A5100352573 |

| authorships[0].author.orcid | https://orcid.org/0009-0009-1098-8044 |

| authorships[0].author.display_name | Yifei Liu |

| authorships[0].author_position | first |

| authorships[0].raw_author_name | Liu, Yifei |

| authorships[0].is_corresponding | False |

| authorships[1].author.id | https://openalex.org/A5065143999 |

| authorships[1].author.orcid | |

| authorships[1].author.display_name | Jicheng Wen |

| authorships[1].author_position | middle |

| authorships[1].raw_author_name | Wen, Jicheng |

| authorships[1].is_corresponding | False |

| authorships[2].author.id | https://openalex.org/A5100671049 |

| authorships[2].author.orcid | https://orcid.org/0000-0003-1619-6290 |

| authorships[2].author.display_name | Yang Wang |

| authorships[2].author_position | middle |

| authorships[2].raw_author_name | Wang, Yang |

| authorships[2].is_corresponding | False |

| authorships[3].author.id | https://openalex.org/A5010141774 |

| authorships[3].author.orcid | |

| authorships[3].author.display_name | Shengyu Ye |

| authorships[3].author_position | middle |

| authorships[3].raw_author_name | Ye, Shengyu |

| authorships[3].is_corresponding | False |

| authorships[4].author.id | https://openalex.org/A5103007399 |

| authorships[4].author.orcid | https://orcid.org/0000-0002-4465-1628 |

| authorships[4].author.display_name | Li Lyna Zhang |

| authorships[4].author_position | middle |

| authorships[4].raw_author_name | Zhang, Li Lyna |

| authorships[4].is_corresponding | False |

| authorships[5].author.id | https://openalex.org/A5101945161 |

| authorships[5].author.orcid | https://orcid.org/0000-0002-7675-0882 |

| authorships[5].author.display_name | Ting Cao |

| authorships[5].author_position | middle |

| authorships[5].raw_author_name | Cao, Ting |

| authorships[5].is_corresponding | False |

| authorships[6].author.id | https://openalex.org/A5100354297 |

| authorships[6].author.orcid | https://orcid.org/0000-0003-3424-2414 |

| authorships[6].author.display_name | Cheng Li |

| authorships[6].author_position | middle |

| authorships[6].raw_author_name | Li, Cheng |

| authorships[6].is_corresponding | False |

| authorships[7].author.id | https://openalex.org/A5100438310 |

| authorships[7].author.orcid | https://orcid.org/0009-0009-6455-3898 |

| authorships[7].author.display_name | Mao Yang |

| authorships[7].author_position | last |

| authorships[7].raw_author_name | Yang, Mao |

| authorships[7].is_corresponding | False |

| has_content.pdf | True |

| has_content.grobid_xml | True |

| is_paratext | False |

| open_access.is_oa | True |

| open_access.oa_url | https://arxiv.org/pdf/2409.17066 |

| open_access.oa_status | green |

| open_access.any_repository_has_fulltext | False |

| created_date | 2024-10-26T00:00:00 |

| display_name | VPTQ: Extreme Low-bit Vector Post-Training Quantization for Large Language Models |

| has_fulltext | False |

| is_retracted | False |

| updated_date | 2025-11-06T06:51:31.235846 |

| primary_topic.id | https://openalex.org/T10201 |

| primary_topic.field.id | https://openalex.org/fields/17 |

| primary_topic.field.display_name | Computer Science |

| primary_topic.score | 0.9836999773979187 |

| primary_topic.domain.id | https://openalex.org/domains/3 |

| primary_topic.domain.display_name | Physical Sciences |

| primary_topic.subfield.id | https://openalex.org/subfields/1702 |

| primary_topic.subfield.display_name | Artificial Intelligence |

| primary_topic.display_name | Speech Recognition and Synthesis |

| related_works | https://openalex.org/W2411923897, https://openalex.org/W4394546135, https://openalex.org/W4285347720, https://openalex.org/W4200259850, https://openalex.org/W2333831899, https://openalex.org/W230091440, https://openalex.org/W2484894494, https://openalex.org/W2367385042, https://openalex.org/W4381186982, https://openalex.org/W3209251257 |

| cited_by_count | 0 |

| locations_count | 2 |

| best_oa_location.id | pmh:oai:arXiv.org:2409.17066 |

| best_oa_location.is_oa | True |

| best_oa_location.source.id | https://openalex.org/S4306400194 |

| best_oa_location.source.issn | |

| best_oa_location.source.type | repository |

| best_oa_location.source.is_oa | True |

| best_oa_location.source.issn_l | |

| best_oa_location.source.is_core | False |

| best_oa_location.source.is_in_doaj | False |

| best_oa_location.source.display_name | arXiv (Cornell University) |

| best_oa_location.source.host_organization | https://openalex.org/I205783295 |

| best_oa_location.source.host_organization_name | Cornell University |

| best_oa_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| best_oa_location.license | cc-by |

| best_oa_location.pdf_url | https://arxiv.org/pdf/2409.17066 |

| best_oa_location.version | submittedVersion |

| best_oa_location.raw_type | text |

| best_oa_location.license_id | https://openalex.org/licenses/cc-by |

| best_oa_location.is_accepted | False |

| best_oa_location.is_published | False |

| best_oa_location.raw_source_name | |

| best_oa_location.landing_page_url | http://arxiv.org/abs/2409.17066 |

| primary_location.id | pmh:oai:arXiv.org:2409.17066 |

| primary_location.is_oa | True |

| primary_location.source.id | https://openalex.org/S4306400194 |

| primary_location.source.issn | |

| primary_location.source.type | repository |

| primary_location.source.is_oa | True |

| primary_location.source.issn_l | |

| primary_location.source.is_core | False |

| primary_location.source.is_in_doaj | False |

| primary_location.source.display_name | arXiv (Cornell University) |

| primary_location.source.host_organization | https://openalex.org/I205783295 |

| primary_location.source.host_organization_name | Cornell University |

| primary_location.source.host_organization_lineage | https://openalex.org/I205783295 |

| primary_location.license | cc-by |

| primary_location.pdf_url | https://arxiv.org/pdf/2409.17066 |

| primary_location.version | submittedVersion |

| primary_location.raw_type | text |

| primary_location.license_id | https://openalex.org/licenses/cc-by |

| primary_location.is_accepted | False |

| primary_location.is_published | False |

| primary_location.raw_source_name | |

| primary_location.landing_page_url | http://arxiv.org/abs/2409.17066 |

| publication_date | 2024-09-25 |

| publication_year | 2024 |

| referenced_works_count | 0 |

| abstract_inverted_index.2 | 35 |

| abstract_inverted_index.a | 137, 149, 231 |

| abstract_inverted_index.In | 92, 140 |

| abstract_inverted_index.It | 37 |

| abstract_inverted_index.QA | 215 |

| abstract_inverted_index.VQ | 115 |

| abstract_inverted_index.We | 107, 127, 156, 219 |

| abstract_inverted_index.an | 200 |

| abstract_inverted_index.at | 197 |

| abstract_inverted_index.by | 84, 123, 142, 185 |

| abstract_inverted_index.in | 18, 230, 234 |

| abstract_inverted_index.of | 9, 105, 204, 223 |

| abstract_inverted_index.on | 25, 69, 187, 190, 193, 206, 209, 212, 214, 217 |

| abstract_inverted_index.to | 15, 29, 34, 53, 62, 111, 160, 238 |

| abstract_inverted_index.we | 95, 147 |

| abstract_inverted_index.Due | 14 |

| abstract_inverted_index.LLM | 19, 114 |

| abstract_inverted_index.Our | 175 |

| abstract_inverted_index.VQ. | 139 |

| abstract_inverted_index.and | 7, 44, 117, 151, 163, 170 |

| abstract_inverted_index.due | 52 |

| abstract_inverted_index.for | 73, 79, 101, 136 |

| abstract_inverted_index.has | 23, 75 |

| abstract_inverted_index.our | 119 |

| abstract_inverted_index.the | 5, 16, 77, 113, 125, 130, 144, 173, 224 |

| abstract_inverted_index.use | 108 |

| abstract_inverted_index.(VQ) | 72 |

| abstract_inverted_index.LLMs | 74 |

| abstract_inverted_index.SOTA | 196 |

| abstract_inverted_index.VPTQ | 159, 180 |

| abstract_inverted_index.also | 157 |

| abstract_inverted_index.down | 33 |

| abstract_inverted_index.into | 87 |

| abstract_inverted_index.only | 220 |

| abstract_inverted_index.over | 195 |

| abstract_inverted_index.show | 178 |

| abstract_inverted_index.size | 2 |

| abstract_inverted_index.such | 64 |

| abstract_inverted_index.that | 179 |

| abstract_inverted_index.this | 93 |

| abstract_inverted_index.with | 199 |

| abstract_inverted_index.$1\%$ | 208 |

| abstract_inverted_index.(even | 32 |

| abstract_inverted_index.LLMs. | 106 |

| abstract_inverted_index.Large | 10 |

| abstract_inverted_index.SOTA. | 239 |

| abstract_inverted_index.brief | 150 |

| abstract_inverted_index.guide | 118 |

| abstract_inverted_index.model | 1, 82, 168, 182 |

| abstract_inverted_index.needs | 48 |

| abstract_inverted_index.tasks | 216 |

| abstract_inverted_index.time, | 228 |

| abstract_inverted_index.using | 89, 132 |

| abstract_inverted_index.which | 166 |

| abstract_inverted_index.(VPTQ) | 100 |

| abstract_inverted_index.2-bit, | 198 |

| abstract_inverted_index.Models | 12 |

| abstract_inverted_index.Recent | 67 |

| abstract_inverted_index.Vector | 70, 97 |

| abstract_inverted_index.bits). | 36 |

| abstract_inverted_index.costs, | 43 |

| abstract_inverted_index.design | 122 |

| abstract_inverted_index.during | 49 |

| abstract_inverted_index.extend | 158 |

| abstract_inverted_index.lookup | 90 |

| abstract_inverted_index.memory | 39, 46 |

| abstract_inverted_index.model. | 174 |

| abstract_inverted_index.paper, | 94 |

| abstract_inverted_index.recent | 21 |

| abstract_inverted_index.refine | 129 |

| abstract_inverted_index.weight | 59 |

| abstract_inverted_index.(LLMs). | 13 |

| abstract_inverted_index.LLaMA-3 | 194, 213 |

| abstract_inverted_index.Scaling | 0 |

| abstract_inverted_index.achieve | 63 |

| abstract_inverted_index.average | 201 |

| abstract_inverted_index.extreme | 65 |

| abstract_inverted_index.focused | 24 |

| abstract_inverted_index.further | 128, 171 |

| abstract_inverted_index.indices | 88 |

| abstract_inverted_index.low-bit | 31, 81, 103 |

| abstract_inverted_index.outlier | 164 |

| abstract_inverted_index.problem | 116 |

| abstract_inverted_index.propose | 148 |

| abstract_inverted_index.pushing | 26 |

| abstract_inverted_index.reduces | 38, 181 |

| abstract_inverted_index.results | 177 |

| abstract_inverted_index.solving | 124 |

| abstract_inverted_index.storage | 42 |

| abstract_inverted_index.support | 161 |

| abstract_inverted_index.tables. | 91 |

| abstract_inverted_index.utilize | 221 |

| abstract_inverted_index.vectors | 86 |

| abstract_inverted_index.weights | 131 |

| abstract_inverted_index.However, | 51 |

| abstract_inverted_index.LLaMA-2, | 188, 207 |

| abstract_inverted_index.Language | 11 |

| abstract_inverted_index.accuracy | 169, 202 |

| abstract_inverted_index.average. | 218 |

| abstract_inverted_index.codebook | 153 |

| abstract_inverted_index.compared | 237 |

| abstract_inverted_index.enhances | 167 |

| abstract_inverted_index.granular | 138 |

| abstract_inverted_index.increase | 233 |

| abstract_inverted_index.low-bit. | 66 |

| abstract_inverted_index.problem, | 146 |

| abstract_inverted_index.research | 22, 68 |

| abstract_inverted_index.residual | 162 |

| abstract_inverted_index.weights, | 20 |

| abstract_inverted_index.addition, | 141 |

| abstract_inverted_index.algorithm | 121, 226 |

| abstract_inverted_index.bandwidth | 47 |

| abstract_inverted_index.decreases | 45 |

| abstract_inverted_index.effective | 152 |

| abstract_inverted_index.execution | 227 |

| abstract_inverted_index.extremely | 30, 80, 102 |

| abstract_inverted_index.formulate | 112 |

| abstract_inverted_index.inference | 8, 235 |

| abstract_inverted_index.introduce | 96 |

| abstract_inverted_index.numerical | 54 |

| abstract_inverted_index.optimizes | 41 |

| abstract_inverted_index.potential | 78 |

| abstract_inverted_index.resulting | 229 |

| abstract_inverted_index.struggles | 61 |

| abstract_inverted_index.algorithm. | 155 |

| abstract_inverted_index.challenges | 4 |

| abstract_inverted_index.compresses | 172 |

| abstract_inverted_index.deployment | 6 |

| abstract_inverted_index.inference. | 50 |

| abstract_inverted_index.perplexity | 184 |

| abstract_inverted_index.redundancy | 17 |

| abstract_inverted_index.throughput | 236 |

| abstract_inverted_index.$11$-$22\%$ | 211 |

| abstract_inverted_index.Mistral-7B, | 191, 210 |

| abstract_inverted_index.compressing | 85 |

| abstract_inverted_index.decomposing | 143 |

| abstract_inverted_index.improvement | 203 |

| abstract_inverted_index.traditional | 57 |

| abstract_inverted_index.weight-only | 27 |

| abstract_inverted_index.Optimization | 110, 135 |

| abstract_inverted_index.Quantization | 71, 99 |

| abstract_inverted_index.Second-Order | 109, 134 |

| abstract_inverted_index.demonstrated | 76 |

| abstract_inverted_index.experimental | 176 |

| abstract_inverted_index.limitations, | 56 |

| abstract_inverted_index.optimization | 145 |

| abstract_inverted_index.quantization | 28, 60, 83, 104, 120, 183, 225 |

| abstract_inverted_index.scalar-based | 58 |

| abstract_inverted_index.$0.01$-$0.34$ | 186 |

| abstract_inverted_index.$0.38$-$0.68$ | 189 |

| abstract_inverted_index.$4.41$-$7.34$ | 192 |

| abstract_inverted_index.Post-Training | 98 |

| abstract_inverted_index.optimization. | 126 |

| abstract_inverted_index.quantization, | 165 |

| abstract_inverted_index.requirements, | 40 |

| abstract_inverted_index.significantly | 3 |

| abstract_inverted_index.$0.79$-$1.5\%$ | 205 |

| abstract_inverted_index.initialization | 154 |

| abstract_inverted_index.representation | 55 |

| abstract_inverted_index.$10.4$-$18.6\%$ | 222 |

| abstract_inverted_index.$1.6$-$1.8\times$ | 232 |

| abstract_inverted_index.Channel-Independent | 133 |

| cited_by_percentile_year | |

| countries_distinct_count | 0 |

| institutions_distinct_count | 8 |

| citation_normalized_percentile |
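The `abstract_inverted_index` rows above map each token to the word positions at which it occurs in the abstract. A minimal sketch of how such an index can be turned back into running text follows. The function name `rebuild_abstract` is hypothetical, and the sample input is truncated to the first seven positions listed in the table, so it is an illustration rather than the full payload.

```python
from typing import Mapping, Sequence


def rebuild_abstract(inverted_index: Mapping[str, Sequence[int]]) -> str:
    """Place every token at each of its positions, then join in order."""
    by_position = {}
    for token, positions in inverted_index.items():
        for position in positions:
            by_position[position] = token
    return " ".join(by_position[p] for p in sorted(by_position))


# Truncated sample: only positions 0 through 6 from the table above.
sample = {
    "Scaling": [0],
    "model": [1],
    "size": [2],
    "significantly": [3],
    "challenges": [4],
    "the": [5],
    "deployment": [6],
}

print(rebuild_abstract(sample))
# prints "Scaling model size significantly challenges the deployment"
```

Because a token such as `the` appears at many positions in the full index, the loop writes one entry per position rather than one per token.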