FusionNet: A parallel deep learning model for speech recognition with feature clustering

2025 · Open Access · DOI: https://doi.org/10.5281/zenodo.17862065

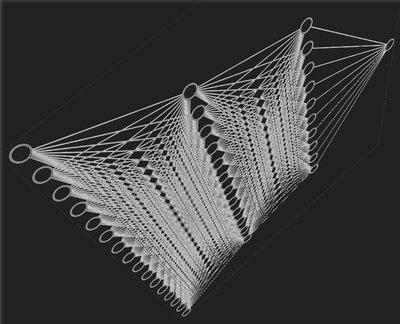

FusionNet is a parallel, hybrid deep-learning framework for speech recognition and on-device speech-to-text processing. The system is implemented as an Android application (Java/XML) and integrated with Firebase Realtime Database to support secure, user-centric data management. Audio input passes through a multi-stage preprocessing pipeline in which MFCC, spectral, and temporal features are extracted and then clustered with K-Means to group acoustically similar speech segments. The clustered representations are processed in parallel by a dual-branch architecture: a Convolutional Neural Network (CNN) that learns spectral signatures and a Bidirectional Long Short-Term Memory (BiLSTM) network that models temporal dependencies. The fused embeddings from the two branches are then classified by a Random Forest classifier, improving prediction stability in noisy or accent-variable conditions. To improve semantic clarity, an NLP engine supported by a generative AI model refines the raw transcriptions, corrects contextual errors, and extracts user intent. Real-time inference runs on TensorFlow Lite (TFLite), enabling low-latency, energy-efficient execution directly on mobile hardware without cloud dependency. FusionNet demonstrates robustness against ambient noise, speaker variability, and multilingual input, making it a practical and scalable option for voice-driven applications. The architecture combines clustering, parallel deep learning, classical ML classification, and generative AI reasoning in a single speech recognition system tailored for real-world deployment.
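
The clustering stage described above can be illustrated with a minimal sketch. This is not the authors' implementation: it is a plain Lloyd's-algorithm K-Means in standard-library Python, and the function name `kmeans`, the toy 2-D vectors standing in for per-segment MFCC/spectral features, the choice of `k = 2`, and the first-k-points initialization are all assumptions made for illustration.

```python
import math

def kmeans(points, k, iterations=20):
    """Lloyd's algorithm. Assumption: centroids start at the first k points,
    which keeps the sketch deterministic (real systems often use k-means++)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
    return labels, centroids

# Hypothetical feature vectors, one per speech segment (toy values, not real MFCCs).
segments = [(1.0, 1.0), (5.0, 5.0), (1.2, 0.8), (4.8, 5.2), (0.9, 1.1), (5.1, 4.9)]
labels, centroids = kmeans(segments, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```

In a pipeline like the one described, the resulting cluster labels or centroid distances would accompany each segment's features into the CNN and BiLSTM branches; how FusionNet actually passes them on is not specified in the abstract.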

- Type: article
- Language: en
- Landing Page: https://doi.org/10.5281/zenodo.17862065
- OA Status: green
- OpenAlex ID: https://openalex.org/W7111273844