Preference Optimization with Multi-Sample Comparisons

2024 · Open Access · DOI: https://doi.org/10.48550/arxiv.2410.12138

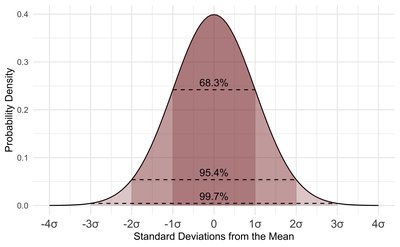

Recent advancements in generative models, particularly large language models (LLMs) and diffusion models, have been driven by extensive pretraining on large datasets followed by post-training. However, current post-training methods such as reinforcement learning from human feedback (RLHF) and direct alignment from preference methods (DAP) primarily utilize single-sample comparisons. These approaches often fail to capture critical characteristics such as generative diversity and bias, which are more accurately assessed through multiple samples. To address these limitations, we introduce a novel approach that extends post-training to include multi-sample comparisons. To achieve this, we propose Multi-sample Direct Preference Optimization (mDPO) and Multi-sample Identity Preference Optimization (mIPO). These methods improve on traditional DAP methods by focusing on group-wise characteristics. Empirically, we demonstrate that multi-sample comparison is more effective than single-sample comparison in optimizing collective characteristics (e.g., diversity and bias) of generative models. Additionally, our findings suggest that multi-sample comparisons provide a more robust optimization framework, particularly for datasets with label noise.
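The abstract does not state the mDPO or mIPO objectives, so the following is only a minimal sketch of what a group-wise preference loss could look like. It assumes that the group-level implicit reward is the mean of the per-sample log-ratios between policy and reference model, and that this mean is plugged into the standard DPO (logistic) and IPO (squared) losses. The function names, the averaging choice, and the default `beta` and `tau` values are illustrative assumptions, not the authors' implementation.

```python
import math


def group_log_ratio(policy_logps, ref_logps):
    """Mean of log pi(y|x) - log pi_ref(y|x) over one group of samples.

    Assumption: a group is scored by averaging its per-sample log-ratios.
    """
    if not policy_logps or len(policy_logps) != len(ref_logps):
        raise ValueError("groups must be non-empty and of equal length")
    diffs = [p - r for p, r in zip(policy_logps, ref_logps)]
    return sum(diffs) / len(diffs)


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def multi_sample_dpo_loss(pol_w, ref_w, pol_l, ref_l, beta=0.1):
    """Logistic (DPO-style) loss on the margin between two sample groups.

    pol_w / ref_w: policy and reference log-probs of the preferred group.
    pol_l / ref_l: the same for the dispreferred group.
    """
    margin = group_log_ratio(pol_w, ref_w) - group_log_ratio(pol_l, ref_l)
    # -log(sigmoid(beta * margin)) == softplus(-beta * margin)
    return softplus(-beta * margin)


def multi_sample_ipo_loss(pol_w, ref_w, pol_l, ref_l, tau=0.1):
    """Squared (IPO-style) loss pulling the group margin toward 1 / (2 * tau)."""
    margin = group_log_ratio(pol_w, ref_w) - group_log_ratio(pol_l, ref_l)
    return (margin - 1.0 / (2.0 * tau)) ** 2
```

With a group size of one, both functions reduce to the usual single-sample DPO and IPO losses, which is the sense in which this sketch "extends" them. In practice the log-probabilities would come from batched model outputs rather than Python lists.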

- Type: preprint

- Language: en

- Landing Page: http://arxiv.org/abs/2410.12138

- https://arxiv.org/pdf/2410.12138

- OA Status: green

- Related Works: 10

- OpenAlex ID: https://openalex.org/W4403577580