Beyond Axis-Aligned Splits: Learnable Decision Tree Inductions for Complex Feature Interactions

2025 · Open Access · DOI: https://doi.org/10.5281/zenodo.17819445

YOU?

·

· 2025

· Open Access

·

· DOI: https://doi.org/10.5281/zenodo.17819445

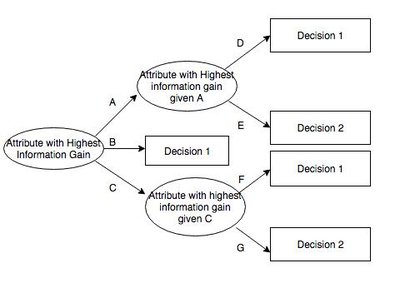

Decision trees are fundamental machine learning models, valued for their interpretability and efficiency. However, standard decision tree algorithms such as CART and C4.5 rely predominantly on axis-aligned splits. Each split thresholds a single feature, so even a simple oblique boundary such as x₁ + x₂ > 1 can only be approximated by a staircase of many splits, which limits these algorithms' ability to capture complex feature interactions. This paper introduces a novel decision tree induction approach that overcomes the limitations of axis-aligned splits by employing learnable splitting functions. Our method learns splitting criteria directly from the data, enabling the trees to capture non-linear relationships and intricate feature combinations. We propose a differentiable decision tree framework that trains the splitting parameters with gradient-based optimization, which allows decision tree learning to be integrated seamlessly with other deep learning architectures. We demonstrate the effectiveness of our approach through extensive experiments on various benchmark datasets, showing significant improvements in both accuracy and model complexity over traditional decision tree algorithms. Furthermore, we analyze the learned splitting functions to provide insights into the feature interactions captured by the model. Our findings highlight the potential of learnable decision trees for complex classification and regression problems, offering a powerful alternative to existing methods.
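
The abstract does not specify the paper's split parameterization or training details. As a minimal sketch under stated assumptions, the snippet below uses a depth-1 "soft" tree: a sigmoid gate g(x) = σ(wᵀx + b) routes each sample between two learnable leaf logits, and all parameters are trained by plain gradient descent on binary cross-entropy. It compares the result with the best single axis-aligned threshold on toy data labeled by x₁ + x₂ > 1. The function names (`best_axis_aligned_stump_accuracy`, `train_soft_oblique_split`, `predict`), the sigmoid gate, the initialization, and the learning rate are all hypothetical illustrations, not the authors' method.

```python
import numpy as np

# Hypothetical sketch: a depth-1 soft oblique tree, NOT the paper's implementation.


def sigmoid(a):
    """Logistic function used as a soft (differentiable) routing gate."""
    return 1.0 / (1.0 + np.exp(-a))


def best_axis_aligned_stump_accuracy(X, y):
    """Exhaustively search single-feature thresholds and return the best accuracy."""
    n = len(y)
    best = 0.0
    for j in range(X.shape[1]):
        ys = y[np.argsort(X[:, j])]
        # ones_left[i] = number of positives among the i smallest values of feature j
        ones_left = np.concatenate(([0.0], np.cumsum(ys)))
        zeros_left = np.arange(n + 1) - ones_left
        ones_right = ones_left[-1] - ones_left
        correct = zeros_left + ones_right           # left -> class 0, right -> class 1
        correct = np.maximum(correct, n - correct)  # also try the flipped labelling
        best = max(best, float(correct.max()) / n)
    return best


def train_soft_oblique_split(X, y, steps=5000, lr=0.5, seed=0):
    """Fit one soft oblique split with two leaf logits by full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])  # split direction (uses all features at once)
    b = 0.0                                     # split offset
    v_left, v_right = -1.0, 1.0                 # leaf logits; asymmetric init avoids a symmetric stall
    n = len(y)
    for _ in range(steps):
        g = sigmoid(X @ w + b)                  # probability of routing each sample right
        z = g * v_right + (1.0 - g) * v_left    # gate-weighted leaf logit
        p = sigmoid(z)                          # predicted P(y = 1)
        dz = (p - y) / n                        # gradient of mean binary cross-entropy w.r.t. z
        da = dz * (v_right - v_left) * g * (1.0 - g)  # chain rule through the gate
        w = w - lr * (X.T @ da)
        b = b - lr * da.sum()
        v_right = v_right - lr * (dz * g).sum()
        v_left = v_left - lr * (dz * (1.0 - g)).sum()
    return w, b, v_left, v_right


def predict(params, X):
    """Hard class labels from the soft tree (positive logit -> class 1)."""
    w, b, v_left, v_right = params
    g = sigmoid(X @ w + b)
    return (g * v_right + (1.0 - g) * v_left > 0).astype(float)


# Toy data whose true decision boundary x1 + x2 > 1 is oblique.
rng = np.random.default_rng(42)
X = rng.uniform(0.0, 1.0, size=(1000, 2))
y = (X[:, 0] + X[:, 1] > 1.0).astype(float)

stump_acc = best_axis_aligned_stump_accuracy(X, y)
params = train_soft_oblique_split(X, y)
oblique_acc = float((predict(params, X) == y).mean())
print(f"best axis-aligned stump accuracy: {stump_acc:.3f}")
print(f"learned oblique split accuracy:   {oblique_acc:.3f}")
print("learned split weights w:", params[0])
```

On this toy target the learned `w` should give both coordinates similar positive weights, which is the simplest form of the split inspection the abstract describes. A deeper soft tree would typically give each internal node its own gate, weight each leaf by the product of gate probabilities along its root-to-leaf path, and rely on an autodiff library rather than hand-derived gradients.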

- Type: article
- Landing Page: https://doi.org/10.5281/zenodo.17819445
- OA Status: green
- OpenAlex ID: https://openalex.org/W7108750104