SSDD: Single-Step Diffusion Decoder for Efficient Image Tokenization

2025 · Open Access · DOI: https://doi.org/10.48550/arxiv.2510.04961

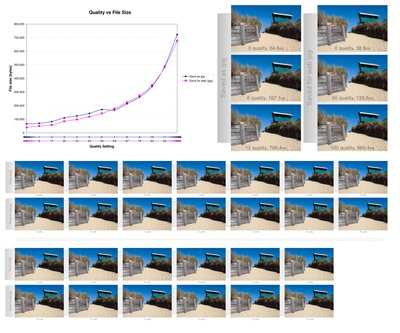

Tokenizers are a key component of state-of-the-art generative image models: they extract the most important features from the signal while reducing data dimensionality and redundancy. Most current tokenizers are based on KL-regularized variational autoencoders (KL-VAE) trained with reconstruction, perceptual and adversarial losses. Diffusion decoders have been proposed as a more principled alternative that models the distribution over images conditioned on the latent. However, matching the performance of KL-VAE with them still requires adversarial losses, and their iterative sampling makes decoding slower. To address these limitations, we introduce a new pixel diffusion decoder architecture with improved scaling and training stability, benefiting from transformer components and GAN-free training. We use distillation to replicate the performance of the diffusion decoder in an efficient single-step decoder. This makes SSDD the first diffusion decoder optimized for single-step reconstruction and trained without adversarial losses, reaching higher reconstruction quality and faster sampling than KL-VAE. In particular, SSDD improves reconstruction FID from $0.87$ to $0.50$ with $1.4\times$ higher throughput, and preserves the generation quality of DiTs with $3.8\times$ faster sampling. As such, SSDD can be used as a drop-in replacement for KL-VAE and for building higher-quality, faster generative models.
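The abstract contrasts an iterative (multi-step) diffusion decoder with a distilled single-step decoder. The toy sketch below illustrates that general idea only; it is not the SSDD architecture or training recipe, which the abstract does not specify. It assumes a hypothetical latent-conditioned velocity field (`velocity`), a "teacher" that integrates it with 50 Euler steps, and a "student" that is a linear one-step map fitted by least-squares regression on teacher outputs. All names, sizes and the regression-based distillation objective are illustrative assumptions.

```python
import numpy as np

# Toy, hypothetical setup (NOT SSDD): a teacher decodes (noise, latent) -> output
# with many sampler steps; a one-step student is distilled by regressing onto it.
rng = np.random.default_rng(0)
DIM = 4             # toy signal / latent dimension (assumption)
TEACHER_STEPS = 50  # number of teacher sampler steps (assumption)
W = rng.normal(size=(DIM, DIM))  # fixed random weights standing in for a trained network


def velocity(x, z, t):
    """Hypothetical velocity field v(x, z, t) of a latent-conditioned flow."""
    return np.tanh(z @ W) - x + 0.5 * np.sin(x) * (1.0 - t)


def teacher_decode(noise, z, steps=TEACHER_STEPS):
    """Multi-step sampler: Euler integration from t=0 (noise) to t=1.

    Returns the decoded batch and the number of network evaluations per sample.
    """
    x = noise.copy()
    dt = 1.0 / steps
    for i in range(steps):
        x = x + dt * velocity(x, z, i * dt)
    return x, steps


def features(noise, z):
    # Student inputs: noise, latent and a bias column (a linear one-step student).
    return np.concatenate([noise, z, np.ones((noise.shape[0], 1))], axis=1)


def fit_student(noise, z, targets):
    """Distillation as plain regression: fit (noise, z) -> teacher output."""
    coef, *_ = np.linalg.lstsq(features(noise, z), targets, rcond=None)
    return coef


def student_decode(noise, z, coef):
    """Single-step decoder: one evaluation per sample."""
    return features(noise, z) @ coef, 1


# Build a distillation set by running the slow teacher once per (noise, z) pair.
n = 2000
noise = rng.normal(size=(n, DIM))
z = rng.normal(size=(n, DIM))
targets, teacher_evals = teacher_decode(noise, z)

coef = fit_student(noise, z, targets)
pred, student_evals = student_decode(noise, z, coef)

student_mse = np.mean((pred - targets) ** 2)
baseline_mse = np.mean((targets - targets.mean(axis=0)) ** 2)  # constant predictor
print(f"evals per sample: teacher {teacher_evals}, student {student_evals}")
print(f"student MSE {student_mse:.4f} vs constant-baseline MSE {baseline_mse:.4f}")
```

The point of the sketch is the cost asymmetry: the teacher needs `TEACHER_STEPS` evaluations per sample while the student needs one, and the distilled map is judged by how closely it reproduces the teacher's outputs. A real single-step decoder would be a deep network trained with a richer objective rather than a linear least-squares fit.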

- Type: preprint
- Language: en
- Landing Page: http://arxiv.org/abs/2510.04961, https://arxiv.org/pdf/2510.04961
- OA Status: green
- OpenAlex ID: https://openalex.org/W4414972763