Dynamic Gated Recurrent Neural Network for Compute-efficient Speech Enhancement

2024 · Open Access · DOI: https://doi.org/10.21437/interspeech.2024-958

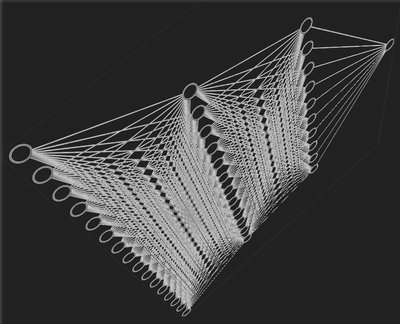

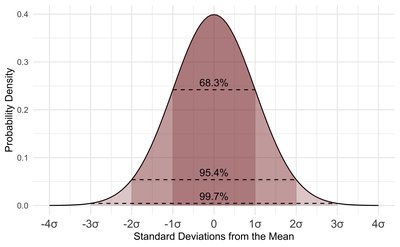

This paper introduces a new Dynamic Gated Recurrent Neural Network (DG-RNN) for compute-efficient speech enhancement models running on resource-constrained hardware platforms. It exploits the slow evolution of RNN hidden states across time steps and, by adding a newly proposed select gate to the RNN model, updates only a selected set of neurons at each step. The select gate reduces the computation cost of a conventional RNN during network inference. As a realization of the DG-RNN, we further propose the Dynamic Gated Recurrent Unit (D-GRU), which requires no additional parameters. Test results on the DNS challenge dataset, obtained with several state-of-the-art compute-efficient RNN-based speech enhancement architectures, show that the D-GRU-based model variants maintain speech intelligibility and quality metrics comparable to those of the baseline GRU-based models, even with an average 50% reduction in GRU computes.
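The abstract does not spell out how the select gate chooses neurons, so the following is only a rough sketch of the general idea, not the authors' implementation. It assumes, purely for illustration, that the existing update gate doubles as the select gate (which would add no parameters), that the top-k neurons by update-gate value are refreshed, and that all other neurons carry their previous hidden state forward. The names `make_gru_params`, `dgru_step`, and `keep_ratio` are hypothetical, and the candidate state uses the common variant in which the reset gate scales the recurrent term per neuron.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def row_dot(weights, vec):
    """Dot product of one weight row with a vector."""
    return sum(w * v for w, v in zip(weights, vec))


def make_gru_params(input_size, hidden_size, seed=0):
    """Random GRU weights for the update (z), reset (r) and candidate (n) paths."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in ("z", "r", "n"):
        params["W" + gate] = mat(hidden_size, input_size)   # input weights
        params["U" + gate] = mat(hidden_size, hidden_size)  # recurrent weights
        params["b" + gate] = [0.0] * hidden_size
    return params


def dgru_step(params, x, h_prev, keep_ratio=0.5):
    """One step of an illustrative dynamically gated GRU (assumed selection rule)."""
    hidden_size = len(h_prev)
    k = max(1, round(keep_ratio * hidden_size))

    # Update gate for every neuron; ASSUMPTION: it also acts as the select gate.
    z = [
        sigmoid(row_dot(params["Wz"][i], x)
                + row_dot(params["Uz"][i], h_prev)
                + params["bz"][i])
        for i in range(hidden_size)
    ]

    # ASSUMPTION: refresh the k neurons with the largest update-gate values.
    selected = sorted(range(hidden_size), key=lambda i: z[i], reverse=True)[:k]

    # Unselected neurons keep their previous state, so their reset-gate and
    # candidate rows are never evaluated; this is where compute is saved.
    h_new = list(h_prev)
    for i in selected:
        r_i = sigmoid(row_dot(params["Wr"][i], x)
                      + row_dot(params["Ur"][i], h_prev)
                      + params["br"][i])
        n_i = math.tanh(row_dot(params["Wn"][i], x)
                        + r_i * row_dot(params["Un"][i], h_prev)
                        + params["bn"][i])
        # Convention used here: a larger z moves the state toward the candidate.
        h_new[i] = (1.0 - z[i]) * h_prev[i] + z[i] * n_i
    return h_new, selected


if __name__ == "__main__":
    params = make_gru_params(input_size=3, hidden_size=8)
    h = [0.0] * 8
    for frame in ([0.2, -0.1, 0.4], [0.1, 0.0, 0.3]):
        h, updated = dgru_step(params, frame, h, keep_ratio=0.5)
        print(sorted(updated))
```

In this sketch the update gate is still computed for all neurons, so a `keep_ratio` of 0.5 skips half of the reset-gate and candidate rows rather than half of all GRU operations; the 50% figure in the abstract refers to the authors' own accounting.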

- Type: preprint

- Language: en

- Landing Page: https://doi.org/10.21437/interspeech.2024-958

- OA Status: green

- Cited By: 6

- Related Works: 10

- OpenAlex ID: https://openalex.org/W4402112230