Character Motion Control by Using Limited Sensors and Animation Data

2019 · Open Access · DOI: https://doi.org/10.15701/kcgs.2019.25.3.85

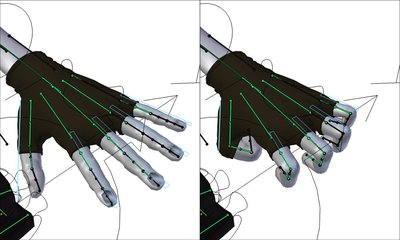

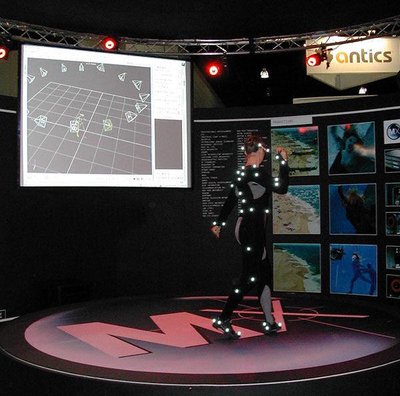

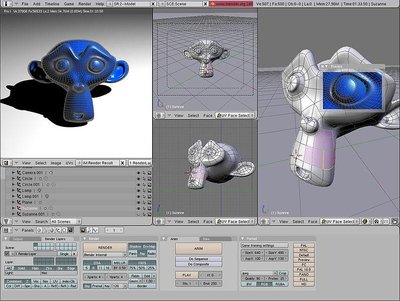

A 3D virtual character playing a role in digital storytelling has a unique style in its appearance and motion. Because this style reflects the character’s personality, it is important to preserve it and keep it consistent. However, when the character’s motion is driven directly by the motion of a user wearing motion sensors, this unique style can be lost. We present a novel character motion control method that preserves the style of the character’s motion by using only a small amount of animation data created specifically for that character. Instead of machine learning approaches, which require large amounts of training data, we propose a search-based method that directly searches the animation data for the character pose most similar to the user’s current pose. To demonstrate the practicality of our method, we conducted experiments with a character model and its animation data created by an expert designer for a virtual reality game. To evaluate how well our method preserves the character’s original motion style, we compared our results with those obtained using general human motion capture data. In addition, to show the scalability of our method, we present experimental results with different numbers of motion sensors.
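The search-based idea described above can be illustrated with a minimal nearest-neighbor sketch. This is not the authors’ implementation: the pose representation (a dictionary of 3D joint positions), the joint names, the similarity measure (sum of Euclidean distances over sensor-tracked joints only), and the functions `pose_distance` and `find_closest_frame` are all hypothetical assumptions made for illustration. The paper’s actual pose features and distance metric may differ.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical pose representation: joint name -> 3D position.
Pose = Dict[str, Vec3]


def pose_distance(user: Pose, candidate: Pose, sensor_joints: Sequence[str]) -> float:
    """Assumed metric: sum of Euclidean distances over the sensor-tracked joints only."""
    return sum(math.dist(user[j], candidate[j]) for j in sensor_joints)


def find_closest_frame(user: Pose, animation: List[Pose], sensor_joints: Sequence[str]) -> int:
    """Brute-force search: index of the animation frame closest to the user's pose."""
    if not animation:
        raise ValueError("animation data is empty")
    # min() returns the first index among ties.
    return min(range(len(animation)),
               key=lambda i: pose_distance(user, animation[i], sensor_joints))


# Hypothetical animation clip: three frames authored for the character.
animation = [
    {"head": (0.0, 1.7, 0.0), "left_hand": (-0.3, 1.0, 0.0), "right_hand": (0.3, 1.0, 0.0)},  # idle
    {"head": (0.0, 1.7, 0.0), "left_hand": (-0.3, 1.0, 0.0), "right_hand": (0.3, 1.8, 0.2)},  # right hand raised
    {"head": (0.0, 1.2, 0.3), "left_hand": (-0.3, 0.6, 0.3), "right_hand": (0.3, 0.6, 0.3)},  # crouch
]

# Hypothetical sensor readings: the user is raising the right hand.
user = {"head": (0.0, 1.68, 0.0), "left_hand": (-0.28, 1.0, 0.0), "right_hand": (0.3, 1.75, 0.15)}

print(find_closest_frame(user, animation, ["head", "left_hand", "right_hand"]))  # 1
print(find_closest_frame(user, animation, ["head"]))  # 0
```

With three sensors the search picks the raised-hand frame. With a head sensor alone, the idle and raised-hand frames are equally close, so the first one wins; this shows why the number of sensors affects how well poses can be distinguished. The linear scan costs one distance evaluation per frame, which is manageable for a small, character-specific clip.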

- Type: article
- Language: en
- Landing Page:
  - https://doi.org/10.15701/kcgs.2019.25.3.85
  - http://journal.cg-korea.org/download/download_pdf?pid=jkcgs-25-3-85
- OA Status: diamond
- Cited By: 2
- References: 36
- Related Works: 10
- OpenAlex ID: https://openalex.org/W2954865904