Regularizing Deep Neural Networks with Stochastic Estimators of Hessian Trace

Yucong Liu, Shixing Yu, Tong Lin

2022 · Open Access · DOI: https://doi.org/10.48550/arxiv.2208.05924

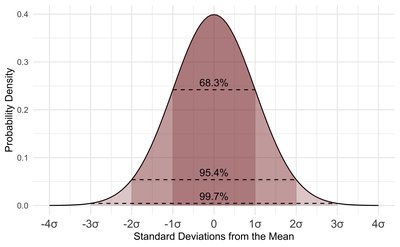

In this paper, we develop a novel regularization method for deep neural networks that penalizes the trace of the Hessian. This regularizer is motivated by a recent bound on the generalization error. We explain its benefits in finding flat minima and avoiding Lyapunov stability in dynamical systems. We adopt the Hutchinson method, a classical unbiased estimator of the trace of a matrix, and further accelerate its calculation using a dropout scheme. Experiments demonstrate that our method outperforms existing regularizers and data augmentation methods, such as Jacobian regularization, Confidence Penalty, Label Smoothing, Cutout, and Mixup.
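
The abstract combines three ingredients: a penalty on the Hessian trace tr(H), the Hutchinson estimator, and a dropout scheme for speed. The NumPy sketch below illustrates them under stated assumptions and is not the authors' implementation. The toy least-squares loss, the finite-difference Hessian-vector product, and the reading of "dropout" as randomly zeroing probe coordinates (the hypothetical `keep_prob` parameter) are all illustrative choices. Rademacher probes v satisfy E[vᵀHv] = tr(H), so averaging vᵀHv over probes estimates the trace using only Hessian-vector products, never the full Hessian. The sketch computes only the value of the penalty; training a deep network with it would also require differentiating through the Hessian-vector products, for example with double backpropagation in an autodiff framework.

```python
import numpy as np


def hutchinson_trace(hvp, dim, num_probes, rng, keep_prob=1.0):
    """Estimate tr(H) using only Hessian-vector products hvp(v) = H @ v.

    Rademacher probes (entries +1 or -1) satisfy E[v^T H v] = tr(H).
    With keep_prob < 1, each probe entry is also zeroed with probability
    1 - keep_prob (a hypothetical dropout-style mask); dividing by
    keep_prob keeps the estimate unbiased, since E[mask_i * v_i**2] = keep_prob.
    """
    estimates = []
    for _ in range(num_probes):
        v = rng.choice([-1.0, 1.0], size=dim)
        v = v * (rng.random(dim) < keep_prob)  # dropout-style mask on the probe
        estimates.append(v @ hvp(v) / keep_prob)
    return float(np.mean(estimates))


def least_squares_grad(w, X, y):
    """Gradient of the toy loss L(w) = ||X w - y||^2 / (2 n)."""
    return X.T @ (X @ w - y) / len(y)


def finite_difference_hvp(grad_fn, w, eps=1e-4):
    """Return v -> H(w) @ v, approximated by central differences of the gradient."""
    def hvp(v):
        return (grad_fn(w + eps * v) - grad_fn(w - eps * v)) / (2.0 * eps)
    return hvp


def regularized_loss(w, X, y, lam, num_probes, rng, keep_prob=1.0):
    """Toy objective L(w) + lam * tr(H(w)), with tr(H) estimated stochastically."""
    loss = 0.5 * np.mean((X @ w - y) ** 2)
    hvp = finite_difference_hvp(lambda u: least_squares_grad(u, X, y), w)
    penalty = hutchinson_trace(hvp, w.size, num_probes, rng, keep_prob)
    return loss + lam * penalty, penalty


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((200, 5))
    y = rng.standard_normal(200)
    w = np.zeros(5)
    total, penalty = regularized_loss(w, X, y, lam=0.1, num_probes=2000,
                                      rng=rng, keep_prob=0.5)
    # For this quadratic loss the exact Hessian is X^T X / n.
    exact = np.sum(X ** 2) / len(y)
    print(f"estimated tr(H) = {penalty:.3f}, exact tr(H) = {exact:.3f}")
```

Masking the probe raises the estimator's variance. In a real network, the intended payoff of such a mask would be computing Hessian-vector products only for the kept coordinates. This NumPy version still forms the full product and does not realize that saving.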

Concepts

Hessian matrix

Estimator

Maxima and minima

Smoothing

Computer science

Artificial neural network

Generalization

Jacobian matrix and determinant

Mathematical optimization

Applied mathematics

Algorithm

Mathematics

Artificial intelligence

Mathematical analysis

Computer vision

Statistics

Metadata

- Type: preprint
- Language: en
- Landing Page: http://arxiv.org/abs/2208.05924 (PDF: https://arxiv.org/pdf/2208.05924)
- OA Status: green
- Related Works: 10
- OpenAlex ID: https://openalex.org/W4291238339